Abstract

Context:

Evaluation systems are among the most important factors affecting faculty member performance. An appropriate evaluation system is essential for faculty members to perform their various roles properly.

Objective:

This study aimed to systematically review the models, tools, and challenges of evaluating the performance of clinical faculty members.

Methods:

This systematic review searched eight international and four national electronic databases in 2019. Descriptive and thematic analyses were performed to extract the most relevant information about the models, tools, and challenges of evaluating the performance of clinical faculty members.

Results:

In total, 15163 articles were identified, of which 25 met the inclusion criteria. The findings were grouped into four main themes: the model of evaluating the performance of clinical faculty members, education, data gathering tools, and challenges of evaluating the performance of clinical faculty members. The main subthemes of the evaluation model were systems, structure, indicators, and process.

Conclusions:

This study recommends that policymakers and educational managers design an appropriate evaluation tool. Further research should be conducted to develop a practical system that addresses the challenges identified.

Keywords

Clinical Faculty Members; Performance; Clinical Teacher; Faculty Member; Instructor; Evaluation Methods; Medical Education

1. Context

Faculty members are the most costly human resource in universities; thus, the faculty evaluation system should act as a mirror of each professor's performance commensurate with their responsibilities in educational duties, research, service delivery, management, and collegial and extracurricular behaviors (1, 2). A comprehensive system promotes fairness and helps the educational system achieve more favorable results (3, 4).

While a dentist or engineer can see the results of their work immediately, the results of a teacher's work are not easy to see or measure in a short time. Moreover, much of what learners gain results from their previous learning, which makes it difficult for teachers to see the impact of their own work (5, 6).

Teacher evaluation can serve different functions (7). One of its most tangible goals is to inform managerial decisions, such as hiring, renewing contracts, requiring remediation, and even dismissing a professor. This use of evaluation has attracted much attention from both managers and professors. A good evaluation also gives teachers sufficient information about how they work, so they know whether they have done good and valuable work. Besides reassuring teachers, a good evaluation increases their job satisfaction (1, 7-9).

According to the latest decree of the Iranian Ministry of Health, professors' duties are classified into seven areas: education; research; personal development; executive and managerial activities; providing specialized health services and health promotion; specialized activities outside the university; and cultural activities (10).

Educational tasks in medical universities cover a wide range of activities, including theoretical and practical teaching, counseling and guiding students, supervising clinical and educational dissertations, active participation in morning rounds and reports, night duty, on-call services, journal clubs, and workshops for professors, students, and staff (9-11).

Educational evaluation is essential for identifying and promoting educational quality and ensuring continuous improvement. The performance of faculty members, who are among the primary building blocks of universities, contributes substantially to the output of an educational system. Understanding and recognizing the performance of clinical faculty members is therefore of undeniable importance (12).

However, the evaluation of clinical faculty members faces several challenges. One major problem of faculty promotion regulations is the inconsistency of the scores assigned to some activities (13). Moreover, assessing professors' performance requires collecting data on educational activities, comparing these data with specific predefined standards, and judging the extent to which predetermined goals have been achieved (14).

Evaluating faculty members in this area inevitably involves difficulties and complexities. A comprehensive, inclusive system that covers all professional aspects of medical professors' work therefore seems necessary. The primary purpose of this study was to systematically review the models, tools, and challenges of evaluating the performance of clinical faculty members.

2. Methods

2.1. Definitional Concepts

The present study was a systematic review of articles and documents on evaluating the performance of clinical faculty members, conducted in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines.

2.2. Search Strategy and Selection Criteria

Eight international (EMBASE, ProQuest, Science Direct, Web of Knowledge, Scopus, PubMed, Ovid, and Google Scholar) and four national (Civilica, Irandoc, Magiran, and SID) electronic databases were searched for published studies and grey literature on models and methods for evaluating the performance of clinical faculty members. The search was conducted in 2019 and limited to the period from 1990 to 2019. Key terms were identified and selected in consultation with research experts in this field, and the search strategy was developed together with the research team. The search terms were:

(Assess* OR Measure* OR Judge OR Estimate* OR Evaluate* OR Appraise* OR Rank OR Categorize* OR Grade OR Status OR Classify* OR Report OR Metric OR Model OR Investigate* OR Promote* OR Develop* OR System OR Plan OR Implement* OR Affair OR Level OR Perform OR Calculate* OR Outcome) AND (Clinic* OR Medic* OR Therapy* OR Curate* OR Health OR Hospital) AND (Professor OR Member OR Fellow OR Trainer OR Mentor OR Tutor OR Lecturer OR Teacher OR Staff OR Researcher OR Activity OR Mission OR Workload OR Contribution OR Effort) AND (College OR Institution OR School OR Department OR Faculty OR Campus OR Academia OR Academy OR Academe OR University OR Academic OR Education).
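The search string combines four concept blocks: terms within a block are joined with OR, and the blocks are joined with AND, so a record must match at least one term from every block. The Python sketch below illustrates that structure only. It assumes a generic Boolean syntax in which `*` marks truncation; real databases such as PubMed and Scopus add their own field tags and truncation rules, and the names `CONCEPT_BLOCKS`, `or_block`, and `build_query` are hypothetical.

```python
# Hypothetical helper for assembling a Boolean search string from concept blocks.
# Assumption: a generic syntax where "*" is truncation and parentheses group terms;
# real databases (PubMed, Scopus, etc.) add their own field tags and rules.

CONCEPT_BLOCKS = {
    "evaluation": [
        "Assess*", "Measure*", "Judge", "Estimate*", "Evaluate*", "Appraise*",
        "Rank", "Categorize*", "Grade", "Status", "Classify*", "Report",
        "Metric", "Model", "Investigate*", "Promote*", "Develop*", "System",
        "Plan", "Implement*", "Affair", "Level", "Perform", "Calculate*",
        "Outcome",
    ],
    "clinical": ["Clinic*", "Medic*", "Therapy*", "Curate*", "Health", "Hospital"],
    "faculty": [
        "Professor", "Member", "Fellow", "Trainer", "Mentor", "Tutor",
        "Lecturer", "Teacher", "Staff", "Researcher", "Activity", "Mission",
        "Workload", "Contribution", "Effort",
    ],
    "setting": [
        "College", "Institution", "School", "Department", "Faculty", "Campus",
        "Academia", "Academy", "Academe", "University", "Academic", "Education",
    ],
}


def or_block(terms: list[str]) -> str:
    """Join the synonyms of one concept with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(blocks: dict[str, list[str]]) -> str:
    """Require every concept: join the OR-blocks with AND, in insertion order."""
    return " AND ".join(or_block(terms) for terms in blocks.values())


if __name__ == "__main__":
    print(build_query(CONCEPT_BLOCKS))
```

Keeping each concept in its own list makes it easy to add a synonym to one block without disturbing the others; the generated string reproduces the logical structure of the strategy, not necessarily the exact syntax any particular database requires.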

In the initial search process, we reviewed reputable journals in this field. The reference lists of the identified articles were also hand-searched independently to find further related studies. EndNote X9 was used to manage the search library, identify duplicate articles, and exclude irrelevant articles. The number of documents retrieved from each database is shown in Table 1.

Table 1. Number of Articles/Abstracts Retrieved from Each Database

| Database | Number of Documents |
|---|---|
| EMBASE | 1327 |
| ProQuest | 429 |
| Science Direct | 293 |
| Web of Knowledge | 6954 |
| Scopus | 1003 |
| PubMed | 4280 |
| Ovid | 791 |
| Google Scholar | 44 |
| Civilica, Irandoc, Magiran and SID | 22 |
| Total | 15143 |
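The per-database counts in Table 1 can be checked arithmetically. In the Python sketch below, the dictionary simply restates Table 1 and the 20 grey literature documents are taken from the Results section; it confirms that the rows sum to the reported total and that adding the grey literature gives the 15163 documents reported in the abstract. The names `TABLE_1_COUNTS`, `GREY_LITERATURE`, and `database_total` are illustrative.

```python
# Counts restated from Table 1; the grey literature count is taken from the Results text.
TABLE_1_COUNTS = {
    "EMBASE": 1327,
    "ProQuest": 429,
    "Science Direct": 293,
    "Web of Knowledge": 6954,
    "Scopus": 1003,
    "PubMed": 4280,
    "Ovid": 791,
    "Google Scholar": 44,
    "Civilica, Irandoc, Magiran and SID": 22,
}
GREY_LITERATURE = 20


def database_total(counts: dict[str, int]) -> int:
    """Sum the documents retrieved across all databases (before de-duplication)."""
    return sum(counts.values())


if __name__ == "__main__":
    total = database_total(TABLE_1_COUNTS)
    print(total)                    # 15143, the Table 1 total
    print(total + GREY_LITERATURE)  # 15163, the figure given in the abstract
```

The same check can be applied to each later stage of a PRISMA flow diagram: at every stage, the records excluded plus the records carried forward should equal the records entering that stage.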

2.3. Study Screening and Selection

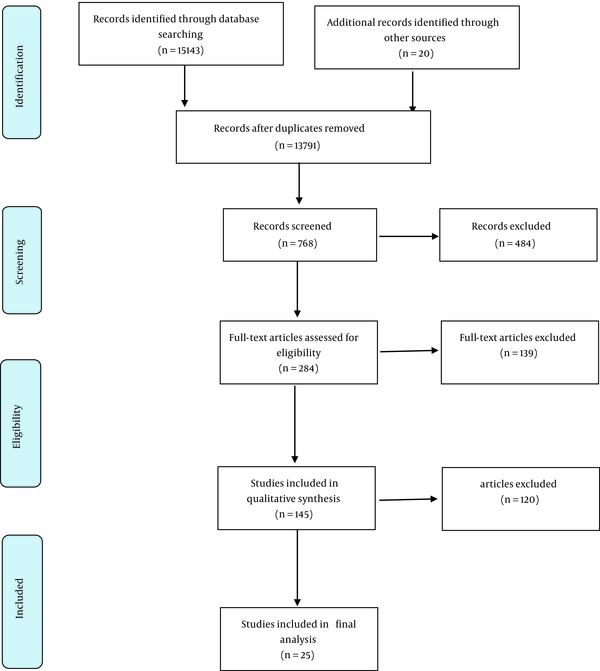

This study used a three-stage screening process to select relevant studies and documents. First, the authors conducted independent searches in the different databases based on the search strategy. Second, the authors independently screened the titles and abstracts of the identified articles and documents against the inclusion and exclusion criteria to assess their eligibility. Finally, the available full texts of the selected articles were reviewed to confirm that the studies addressed the research question of this review. The quality of the retrieved articles was assessed using the Critical Appraisal Skills Programme (CASP) checklists. Two authors independently assessed each article for risk of bias, and disagreements were resolved through discussion or consultation with a third author. The process of selecting and reviewing the articles is shown in Figure 1.

Figure 1. Flow chart of the study identification and selection process

2.4. Inclusion and Exclusion Criteria

All studies that examined methods of evaluating the performance of clinical faculty members were included, regardless of study design or methodology, provided they were published between 1990 and February 2019. Studies with no data within the scope of the research question, books, guidelines, peer reviews, conference papers, and reports were excluded, as were articles whose full texts were unavailable or that were written in languages other than English or Persian. Articles published in Persian were handled according to article type.

2.5. Data Extraction

The authors screened and summarized the full texts of eligible studies and documents using the designed descriptive and thematic analysis forms. These forms recorded the author, the country in which the study was carried out, the study year, the study design, and the key results. Thematic analysis was used to analyze the data: the findings of the final studies were coded line by line, the codes were grouped, and the initial themes were derived. The themes and subthemes were then examined and compared for similarities and differences across studies to obtain the final themes. MAXQDA software was used for data analysis. Finally, the manuscript was checked against the PRISMA checklist.

2.6. Data Analysis

A thematic synthesis approach was used, and two authors performed the inductive analysis. The authors extracted and coded each study's findings, grouped the codes by similarity, and then analyzed the grouped findings to classify them into main themes. Using MAXQDA software, 1506 codes were identified and categorized into four main themes. The two authors checked the accuracy and completeness of the extracted data.

3. Results

The search yielded a total of 15143 documents from the databases and 20 grey literature documents (stage 1). After duplicates were removed, the titles and abstracts of 13791 studies were reviewed, and 484 were excluded because they were not relevant to evaluating the performance of clinical faculty members (stage 2). A total of 145 articles remained for full-text review (stage 3). Subsequently, 139 studies were discarded because they did not meet the inclusion criteria (Figure 1). Finally, 25 studies were included in the final analysis (Table 2).

Table 2. Characteristics of the Included Studies

| Authors | Year | Method | Main Results |
|---|---|---|---|
| Troncon (15) | 2004 | Descriptive, semi-quantitative study | Weaknesses of traditional medical schools include a focus on resource shortages and organizational problems, cultural aspects, and the lack of a better educational climate. |
| McVey et al. (16) | 2015 | Observational cohort study | Assessing technical and communication skills as part of a national continuing education process is recommended. Devoting further resources to objective skills evaluation is essential for the educational system. |
| Moore et al. (17) | 2018 | Synthesis | The results suggest that practitioners will have a more explicit approach to helping clinicians and providers. |
| Vaughan et al. (18) | 2015 | Survey | It is essential to assess and accredit local surgical specialization programs and the training of non-physician surgical practitioners. |
| O’Keefe et al. (19) | 2013 | Cross-sectional surveys | This study observed differences in staff education, training, and competencies, suggesting that enhanced epidemiologic training might be needed in local health departments serving smaller populations. |
| McNamara et al. (20) | 2013 | Qualitative design | Each participant's current role and everyday practice should be taken into account when using mentoring, coaching, and action learning interventions. This approach helps participants develop and demonstrate clinical leadership skills. |
| Cantillon et al. (21) | 2016 | Qualitative survey | Becoming a clinical teacher entails negotiating one's identity and practice between two potentially conflicting planes of accountability. Clinical communities of practice (CoPs) are primarily conservative and reproductive of teaching practice, whereas accountability to institutions is potentially disruptive of teacher identity and practice. |
| Savari et al. (22) | 2018 | Multimethod research | Three general themes were identified: clarifying and determining healthy dietary behaviors and actions, teaching life skills and adopting healthy diet behaviors, and utilizing social norms to adopt healthy diet patterns. |
| Haghdoost and Shakibi (23) | 2006 | Cross-sectional study | Some differences were found between students' perceptions of their lecturers and staff members' perceptions of their colleagues. Students were more concerned with the personality of their lecturers. |
| Horneffer et al. (24) | 2016 | Cross-sectional study | An intensified didactic training program for student tutors may help them improve. More studies are needed to optimize the concept with regard to time expenditure and costs. |
| Mohammadi et al. (25) | 2011 | Short communication | The results provided reliable information about department chairs' concerns about and reactions to this system. The researchers identified strengths of, and threats to, developing a faculty member activity measurement system. |
| Shahhosseini and Danesh (26) | 2014 | Qualitative study | This study focused on effective measures to improve faculty members' situation and increase their efficiency, effectiveness, and productivity. |
| Vieira et al. (27) | 2014 | Exploratory study | The strategy used in this study was partially effective but could be improved, mainly through more research on its duration, including a discussion of actual cases. |
| Boerboom et al. (28) | 2011 | Questionnaire | The Maastricht Clinical Teaching Questionnaire (MCTQ) is a valid and reliable instrument for evaluating clinical teachers' performance during short rotations. |
| Young et al. (29) | 2014 | Form development | Respectful interactions with students were the most influential item in the global rating of faculty performance. The developed form is a moderately reliable tool for assessing the professional behaviors of clinical teachers. |
| McQueen et al. (30) | 2016 | Grounded theory approach | This study identified barriers to effective assessment and feedback, which should be addressed to improve postgraduate medical training. |
| Ipsen et al. (31) | 2010 | Nominal group process consensus method | The findings suggest that it is possible to develop standardized measurements of educational work. The participating faculty emphasized developing the work schedule. |
| Guraya et al. (32) | 2018 | Single-stage, survey-based randomized study | Time constraints and insufficient research support were critical barriers to medical professors' research productivity. The authors recommended financial and technical support and a lighter administrative workload. |
| van Roermund et al. (33) | 2011 | Qualitative study | This study examined the critical role of teachers' feelings and expectations regarding their work. The authors recommended that, in developing new teaching models and faculty development programs, attention be paid to teachers' existing identification models and to culture and context. |
| Wang et al. (34) | 2012 | Non-experimental research | Faculty members were not satisfied with the evaluation process and emphasized the need to improve and develop evaluation tools. |
| Tsingos-Lucas et al. (35) | 2016 | Mixed-method study | Students and professors perceived the RACA as an effective educational tool that may increase skill development for future clinical practice. |
| Shaterjalali et al. (4) | 2018 | Delphi | The results indicated the need to form a teaching team, attend to selection criteria, and plan the requirements for assigning teaching responsibilities. |
| Roos et al. (36) | 2014 | Mixed-method evaluation | The findings showed the success of a 5-day education program in embedding knowledge and skills to improve the performance of medical educators. By combining qualitative and quantitative measures, this approach could serve as a framework for assessing the effectiveness of comparable interventions. |
| Nandini et al. (37) | 2015 | Descriptive study | Student absenteeism, overcrowded wards, and lack of uniformity in study materials were important factors. |
| Colletti et al. (38) | 2010 | Survey | The authors designed a framework. A five-domain instrument consistently accounted for variations in faculty teaching performance as rated by resident physicians and may be useful for the standardized assessment of instructional quality. |
| Oktay et al. (11) | 2017 | Cross-sectional study | The evaluator groups and residents took part in the 360-degree assessment, and the method was readily accepted in the university's residency training program. However, only evaluations by faculty, nurses, self, and peers were reliable for any assessment of value. |

The findings were grouped into four main themes: the model of evaluating the performance of clinical faculty members, education, data gathering tools, and challenges of evaluating the performance of clinical faculty members. The subthemes and categories of the evaluation model are detailed in Table 3, and the other themes and their findings are shown in Table 4.

Table 3. Subthemes and Categories of the Model of Evaluating the Performance of Clinical Faculty Members

| Theme | Subtheme | Category |
|---|---|---|
| Model of evaluating the performance of clinical faculty members | System | Necessary features in system design |
| | | Computation systems for faculty activities |
| | | Evaluation resources |
| | | Shoaa system |
| | | 360 degree evaluation |
| | | Balanced scorecard |
| | Indicators | Clinical scope |
| | | Research scope |
| | | Educational scope |
| | | Executive scope |
| | | Research in education |
| | | Individual development |
| | | Citizenship |
| | | Informal roles |
| | Structure | Individuals or units responsible for data collection and analysis |
| | | Individuals or units responsible for judging the performance |
| | | Individuals or units responsible for reviewing the reports |
| | | Competency committee for review of documents |
| | | Complaints review committee |
| | Process | Method of collecting work data of the faculty members |
| | | Identification of feedback system |
| | | Evaluation time |
| | | Confidential or anonymous assessments and non-confidential assessments |
| | | Committee rating |
| | | Analysis of available output data |
| | | Design and certification standards for continuing professional education |
| | | Developmental and aggregate two-dimensional evaluation |
| | | Developing impact mapping |
| | | Awards by geographic impact level |

Table 4. Main Themes and Findings of the Systematic Review

| Theme | Findings |
|---|---|
| Education | Motivating students and colleagues |
| | Availability |
| | Communication skills |
| | Providing and using educational facilities |
| | Educational planning |
| | Creating a favorable educational environment |
| | Role-model characteristics of the teacher |
| | Guidance and advice |
| | Student participation |
| | Class management |
| | Attention to educational rules |
| | Evaluating learners' performance |
| | Recognizing students |
| | Teaching skills |
| | Content mastery |
| | Personality characteristics |
| Data gathering tools | Developing and applying the evaluation system for educational activities |
| | Assessment of professors in the emergency medicine program |
| | Calculation of American educational performance |
| | American clinical education assessment |
| | Evaluation of clinical dentistry professors |
| | The peer evaluation system |
| | The effectiveness of clinical education in the developmental evaluation of faculty members |
| | Assessment of resident anesthesia supervision |
| | Evaluation of anesthesia training quality |
| | Residents' evaluation of clinical education |
| | Systematic evaluation of the educational quality of medical faculty members |
| | Evaluation of the educational performance of medical school faculty members |
| | Clinical education evaluation tool related to CanMEDS roles |
| | Canadian clinical education evaluation |
| | Dutch surgeon self-assessment and resident assessment |
| | Observation of colleagues (US) |
| | Evaluation of professors by Dutch residents |
| | Assessing the supervision of anesthesia residents |
| | Evaluation by medical students |
| | Australian clinical education quality questionnaire |
| | Dutch self-assessment and resident questionnaires on teaching quality |
| | Evaluation of radiology professors by residents |
| | Residents' evaluation of clinical professor performance |
| | Clinical teaching effectiveness questionnaire |
| | Educational framework questionnaire for evaluating clinical professors |
| | Features required for tool preparation |
| | Training effectiveness calculation tool |
| | Evaluation tool incorporating stakeholder opinions |
| | Calculation of clinical and educational activities |
| | Evaluation of the quality of teaching theoretical courses |
| | The Maastricht Clinical Teaching Questionnaire |
| | Self-assessment criteria |
| Challenges | All-or-nothing behavior of some managers |
| | Searching for an ideal computation system that never materializes |
| | Fear of being manipulated by statistics |
| | Lack of an information and data culture |
| | Lack of trust in evaluation systems |
| | Not applying a specific framework to all groups |
| | No distinction between active and inactive members |
| | Unclear responsibilities of faculty members |
| | Differences between clinical and non-clinical groups |
| | The need to provide infrastructure |
| | Low motivation of faculty members |
| | The challenges of cultural change |
| | The possibility of faculty members gaming the system |
| | Score-seeking behavior of faculty members |
| | Not considering the quality of work |
| | Possible interaction between different performance calculation systems |
| | Lack of control questions to avoid random responses |
| | Lack of coverage of all factors affecting teacher evaluation |
| | Lack of training for people involved in the evaluation process |
| | Excessive attention to research output relative to educational activities |
| | Lack of attention to religious values in the evaluation system |
| | Fear of disclosing peer review results |
| | Lack of trust between faculty members |
| | Inaccurate use of results |
| | Lack of appropriate evaluation tools |
| | Unnecessary bureaucratic requirements |
| | Focus on the number of articles in evaluation |
| | Inadequate quantitative and qualitative indicators |
| | Subjectivity of some promotion indicators |
| | Lack of a unified protocol |

3.1. Models of Evaluating the Performance of Clinical Faculty Members

The findings showed four main subthemes of the model for evaluating the performance of clinical faculty members: systems, structure, indicators, and process (Table 5).

Table 5. Clinical Faculty Performance Evaluation Models

| Systems | Structure | Indicators | Process |
|---|---|---|---|
| Shoaa system; balanced scorecard; 360 degree evaluation | Individuals or units responsible for data collection and analysis; individuals or units responsible for judging performance; individuals or units responsible for reviewing reports; competency committee for evidence; complaints review committee | Educational scope; executive scope; research in education; individual development; citizenship; informal roles | Identification of the feedback system; evaluation time; confidential or anonymous assessments and non-confidential assessments; committee rating; analysis of available output data; design and certification standards for continuing professional education; developmental and aggregate two-dimensional evaluation; developing impact mapping; awards by geographic impact level |

3.2. Systems

The categories under the system subtheme were necessary features in system design, computation systems for faculty activities, evaluation resources, the Shoaa system, 360 degree evaluation, and the balanced scorecard.

Many articles have focused on the necessary features of system design. Examples reported by researchers include participation of professors in the design and implementation of the evaluation system (39); identification of the standard time spent on various activities (40); item resolution, attention to the personal characteristics of the professor and to differences between departments, an appropriate application format, a completion guide, and ranking of professors within the department, the faculty, and the university and among the primary faculty (41); vision setting, verifiers, the analysis interval, minimum expectations, evaluation time (within one month), web-based delivery, self-reporting, and contingency design to fit each department (42); written procedures and policies for clinical evaluations and an explanation of performance evaluation objectives (43); and indigenous standards for system design (44).

Among the computation systems for faculty activities, various researchers have considered the relative value method (9, 45, 46). The codes in the relative value group describe the following steps: forming a working group; identifying the main areas of professors' activities for comparison; listing all specific activities; determining the relative value range; determining the average relative value of activities; determining the relative value of other activities in proportion to the average activity; determining the time dimension of activities; identifying the activities of senior faculty members in each field; selecting the score of that activity as the criterion of excellence; normalizing for deprivation pay; testing the system with several faculty members; modifying the system; implementing the system temporarily with all faculty members; making further corrections; and finalizing the system for implementation.
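The core arithmetic of a relative value method can be illustrated with a small calculation. In the Python sketch below, every activity name, raw value, and count is hypothetical, chosen only for illustration, and it reflects one possible reading of the steps listed above rather than any specific published system. It covers three steps: expressing each activity's value relative to the average activity, scoring a faculty member, and comparing that score with a benchmark taken from a senior faculty member. The time dimension, the normalization step, and the piloting and revision steps are omitted.

```python
# Illustrative sketch of a relative-value scoring scheme for faculty activities.
# All activity names, raw values, and counts are hypothetical examples.

def relative_values(raw_values: dict[str, float]) -> dict[str, float]:
    """Express each activity's value relative to the average activity (average = 1.0)."""
    average = sum(raw_values.values()) / len(raw_values)
    return {name: value / average for name, value in raw_values.items()}


def activity_score(rv: dict[str, float], counts: dict[str, int]) -> float:
    """Weight how often each activity was performed by its relative value."""
    return sum(rv[name] * n for name, n in counts.items())


def percent_of_benchmark(score: float, benchmark: float) -> float:
    """Compare a member's score with the benchmark ('excellent') score."""
    return 100.0 * score / benchmark


if __name__ == "__main__":
    # Hypothetical raw values agreed on by a working group.
    raw = {"lecture_hour": 2.0, "thesis_supervised": 8.0, "clinical_shift": 5.0}
    rv = relative_values(raw)  # lecture 0.4, thesis 1.6, shift 1.0

    member = {"lecture_hour": 30, "thesis_supervised": 2, "clinical_shift": 10}
    senior = {"lecture_hour": 50, "thesis_supervised": 5, "clinical_shift": 20}

    score = activity_score(rv, member)      # 12 + 3.2 + 10 = 25.2
    benchmark = activity_score(rv, senior)  # 20 + 8 + 20 = 48
    print(round(percent_of_benchmark(score, benchmark), 1))  # 52.5
```

Anchoring values to the average activity makes the scale unit-free, so a score can be read as a number of "average-activity equivalents"; using a senior member's score as the denominator mirrors the step of selecting that score as the criterion of excellence.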

Evaluation resources, the Shoaa system (20), 360 degree evaluation (11, 47, 48), and the balanced scorecard (1) were other categories under the system subtheme.

3.3. The Structure

Individuals or units responsible for data collection and analysis, individuals or units responsible for judging performance, individuals or units responsible for reviewing reports, the competency committee for evidence, and the complaints review committee were the main categories of the structure subtheme of the model for evaluating the performance of clinical faculty members.

3.4. Indicators

Indicators were categorized into eight categories: Clinical scope, research scope, educational scope, executive scope, research in education, individual development, citizenship, and informal roles.

The clinical scope included subcategories such as specialty and medical knowledge, system-based learning, clinical skills, clinical responsibility, clinical awards, quality of professors' services, resource management, clinical/hospital monitoring, recognition of faculty members as elite physicians, new medical services, case reports, clinical activity, role modeling, contractual services, on-call shifts, and regular shifts.

The subcategories of the research scope were the number of research projects, grants and rewards, lectures and conferences, publications, serving as a journal referee or editor, guideline development, number of inventions, job awards or foreign certificates, the faculty's research reputation, support for the faculty's research mission, and thesis advising.

The results showed that the educational scope covers mentoring and consulting, training hours, resource management and cost-effectiveness training, role modeling, educational awards, quality of education, journal clubs, areas of clinical education, learners' scores, educational impact score, assessment of learners, training setting, evidence-based medical education, number of learners, internships, educational innovations, laboratory activity, non-clinical education, educational evaluation score, and adult education.

The subcategories of the executive scope were management of a department, deputy office, or school; management of committees; and educational leadership and training management.

Research in education is another indicator, with 10 subcategories: community-based education and research, research opportunities, strategic planning of a field of study, curriculum review and development, refereeing, grants for educational research, educational scholarship products, a grant index, educational awards, and personnel development.

Individual development was another indicator identified. Its subcategories were facilitation skills, formal teaching skills courses, advanced degrees, certificates and renewal of specialty and subspecialty certificates, and participation in educational activities and workshops.

Citizenship was another indicator that focuses on establishing a proper working relationship with colleagues, facilitating personal and professional development, role modeling cooperation, facilitating respect, effectiveness, and interaction with the team, paying attention to personnel training, and supporting staff.

3.5. The Process

Method of collecting work data of the faculty members, identification of the feedback system (49), evaluation time (41), confidential or anonymous assessments and non-confidential assessments (50), committee rating, analysis of available output data (51), design and certification standards for continuing professional education (52), developmental and aggregate two-dimensional evaluation (49), developing impact mapping (53), and awards by geographic impact level (54) were the main categories of the process.

4. Discussion

Evaluating the performance of clinical faculty members is a step toward a better education system. Its aim is to design a fair, equitable, and practical evaluation system that ensures clinical teaching effectiveness, and it is an ongoing process in medical universities (1). The current systematic review identified four main themes: the model of evaluating the performance of clinical faculty members, education, data gathering tools, and challenges of evaluating the performance of clinical faculty members. The main subthemes of the evaluation model are systems, structure, indicators, and process. Studies have evaluated clinical faculty members with different methods, and, according to their reported results, every method has its challenges (REF). Based on the findings of this systematic review, the main challenges of evaluating the performance of clinical faculty members are the lack of an information and data culture, lack of trust in evaluation systems, not applying a specific framework to all groups of faculty members, unclear responsibilities of faculty members, low motivation of faculty members, the challenges of cultural change, not considering the quality of work, lack of coverage of all factors affecting faculty member evaluation, lack of training for people involved in the evaluation process, fear of disclosing peer review results, lack of trust between faculty members, and inaccurate use of results.

Numerous studies have been conducted worldwide to evaluate the performance of clinical faculty members. Most have been quantitative, cross-sectional studies that examined professors' performance through questionnaires. Some have focused on developing a tool for measuring teachers' performance and testing its psychometric properties, and each tool uses different dimensions to evaluate professors' performance. Review studies have also been conducted to identify the areas and items involved in the performance evaluation of clinical professors. A 2018 study in Iran examined the factors affecting the evaluation results of university faculty members from the perspective of university professors. According to this study, universities use two sources, students and administrators, to evaluate professors, and two dimensions, the educational system and faculty members' characteristics, were used to measure the effectiveness of the evaluation (2). A 2015 study in Germany developed a framework of basic competencies for clinical professors. Its final model comprised six core competencies, including reflection on and advancement of personal teaching practice and systems-related teaching and learning (55). A 2010 study in Denmark identified the essential categories of educational effort from the point of view of clinical faculty members. The faculty members introduced six essential categories, and their rankings covered the involvement of residents and clinical faculty members, time for management and development, and formal educational activities such as occasional evening lectures (56).

A 2010 study in the United States described the areas of scholarship for clinical professors, including formal educational research (such as new educational technology, grants for educational research, and clinical trials), advanced degrees such as a Master of Public Health, and scholarly activities such as review board membership, invited peer review, educational grants, and journal editorship (57).

Different organizations have developed tools and checklists for evaluating the performance of clinical faculty members (11, 29, 58, 59). The findings of this systematic review identified the primary data-gathering tools and checklists used for this purpose. Ahmady et al. (as cited by Haghdoost and Shakibi) conducted a study adapting the Personnel Evaluation Standards for monitoring and continuously improving a faculty evaluation system in the context of Iranian medical universities. This study attempted to assess multiple faculty roles, including educational, clinical, and healthcare services (23). Our findings identified further items for evaluating the performance of clinical faculty members. Evaluating a faculty member requires a multidimensional approach, yet many studies focus on just one item of evaluation. For example, Kamran et al. designed and evaluated a teaching quality assessment form at Lorestan University of Medical Sciences, Iran (60), and Chandran et al. designed a novel analysis tool to assess the quality and impact of educational activities (59). The findings of this review showed that every checklist and data-gathering tool had strengths and weaknesses.

5. Conclusions

Educational tasks in medical universities cover a wide range of activities, including theoretical and practical teaching, counseling and guiding students, supervising clinical and educational dissertations, active participation in morning rounds and morning reports, night on-call duties, enclave, journal clubs, and workshops for professors, students, and staff. Given the breadth and variety of these tasks, evaluating faculty members' educational performance is inevitably difficult and complex. Therefore, a comprehensive and inclusive system that covers all professional aspects of medical professors' work is necessary.

In general, evaluation systems affect the performance of faculty members. Policymakers and educational managers play an essential role in shaping evaluation mechanisms and their effects on faculty members. A fair and comprehensive evaluation system is crucial, and any neglect in this regard can harm a faculty member's performance. In this systematic review, we summarized the models, tools, and challenges of evaluating the performance of clinical faculty members. In conclusion, the present study's findings could help policymakers design an appropriate model for performance evaluation.

References

1. Huntington J, Dick III JF, Ryder HF. Achieving educational mission and vision with an educational scorecard. BMC Med Educ. 2018;18(1):245. [PubMed ID: 30373590]. [PubMed Central ID: PMC6206627]. https://doi.org/10.1186/s12909-018-1354-4.

2. Kamali F, Yamani N, Changiz T, Zoubin F. Factors influencing the results of faculty evaluation in Isfahan University of Medical Sciences. J Educ Health Promot. 2018;7:13. [PubMed ID: 29417073]. [PubMed Central ID: PMC5791434]. https://doi.org/10.4103/jehp.jehp_107_17.

3. Safari Y, Yoosefpour N. Dataset for assessing the professional ethics of teaching by medical teachers from the perspective of students in Kermanshah University of Medical Sciences, Iran (2017). Data Brief. 2018;20:1955-9. [PubMed ID: 30294649]. [PubMed Central ID: PMC6171092]. https://doi.org/10.1016/j.dib.2018.09.060.

4. Shaterjalali M, Yamani N, Changiz T. Who are the right teachers for medical clinical students? Investigating stakeholders' opinions using modified Delphi approach. Adv Med Educ Pract. 2018;9:801-9. [PubMed ID: 30519136]. [PubMed Central ID: PMC6235154]. https://doi.org/10.2147/AMEP.S176480.

5. Schoen J, Birch A, Adolph V, Smith T, Brown R, Rivere A, et al. Four-Year Analysis of a Novel Milestone-Based Assessment of Faculty by General Surgical Residents. J Surg Educ. 2018;75(6):e126-33. [PubMed ID: 30228036]. https://doi.org/10.1016/j.jsurg.2018.08.008.

6. van Lierop M, de Jonge L, Metsemakers J, Dolmans D. Peer group reflection on student ratings stimulates clinical teachers to generate plans to improve their teaching. Med Teach. 2018;40(3):302-9. [PubMed ID: 29183183]. https://doi.org/10.1080/0142159X.2017.1406903.

7. Yamani N, Changiz T, Feizi A, Kamali F. The trend of changes in the evaluation scores of faculty members from administrators' and students' perspectives at the medical school over 10 years. Adv Med Educ Pract. 2018;9:295-301. [PubMed ID: 29750066]. [PubMed Central ID: PMC5935079]. https://doi.org/10.2147/AMEP.S157986.

8. Walensky RP, Kim Y, Chang Y, Porneala BC, Bristol MN, Armstrong K, et al. The impact of active mentorship: results from a survey of faculty in the Department of Medicine at Massachusetts General Hospital. BMC Med Educ. 2018;18(1):108. [PubMed ID: 29751796]. [PubMed Central ID: PMC5948924]. https://doi.org/10.1186/s12909-018-1191-5.

9. Yue JJ, Chen G, Wang ZW, Liu WD. Factor analysis of teacher professional development in Chinese military medical universities. J Biol Educ. 2017;51(1):66-78. https://doi.org/10.1080/00219266.2016.1171793.

10. Hamedi-Asl P, Saleh S, Hojati H, Kalani N. The Factors Affecting the Tenured Faculty Member Evaluation Score from the Perspective of Students of Jahrom University of Medical Sciences in 2016. J Res Med Dent Sci. 2018;6(2):233-9.

11. Oktay C, Senol Y, Rinnert S, Cete Y. Utility of 360-degree assessment of residents in a Turkish academic emergency medicine residency program. Turk J Emerg Med. 2017;17(1):12-5. [PubMed ID: 28345067]. [PubMed Central ID: PMC5357104]. https://doi.org/10.1016/j.tjem.2016.09.007.

12. Golsha R, Sheykholeslami AS, Charnaei T, Safarnezhad Z. Educational Performance of Faculty Members from the Students and Faculty Members’ Point of View in Golestan University of Medical Sciences. J Clin Basic Res. 2020;4(1):6-13. https://doi.org/10.29252/jcbr.4.1.6.

13. Shafian S, Salajegheh M. Faculty Members’ Promotion: Challenges and Solutions. Strides Dev Med Educ. 2021;18(1):e1033. https://doi.org/10.22062/sdme.2021.195223.1033.

14. Jamshidian S, Yamani N, Sabri MR, Haghani F. Problems and challenges in providing feedback to clinical teachers on their educational performance: A mixed-methods study. J Educ Health Promot. 2019;8:8. [PubMed ID: 30815479]. [PubMed Central ID: PMC6378827]. https://doi.org/10.4103/jehp.jehp_189_18.

15. Troncon LE. Clinical skills assessment: limitations to the introduction of an "OSCE" (Objective Structured Clinical Examination) in a traditional Brazilian medical school. Sao Paulo Med J. 2004;122(1):12-7. [PubMed ID: 15160521]. https://doi.org/10.1590/s1516-31802004000100004.

16. McVey RM, Louridas M, Giede C, Grantcharov TP, Covens A. Introduction of a Structured Assessment of Clinical Competency for Fellows in Gynecologic Oncology: A Pilot Study. J Gynecol Surg. 2015;31(1):17-21. https://doi.org/10.1089/gyn.2014.0038.

17. Moore Jr DE, Chappell K, Sherman L, Vinayaga-Pavan M. A conceptual framework for planning and assessing learning in continuing education activities designed for clinicians in one profession and/or clinical teams. Med Teach. 2018;40(9):904-13. [PubMed ID: 30058424]. https://doi.org/10.1080/0142159X.2018.1483578.

18. Vaughan E, Sesay F, Chima A, Mehes M, Lee B, Dordunoo D, et al. An assessment of surgical and anesthesia staff at 10 government hospitals in Sierra Leone. JAMA Surg. 2015;150(3):237-44. [PubMed ID: 25607469]. https://doi.org/10.1001/jamasurg.2014.2246.

19. O'Keefe KA, Shafir SC, Shoaf KI. Local health department epidemiologic capacity: a stratified cross-sectional assessment describing the quantity, education, training, and perceived competencies of epidemiologic staff. Front Public Health. 2013;1:64. [PubMed ID: 24350233]. [PubMed Central ID: PMC3860004]. https://doi.org/10.3389/fpubh.2013.00064.

20. McNamara MS, Fealy GM, Casey M, O'Connor T, Patton D, Doyle L, et al. Mentoring, coaching and action learning: interventions in a national clinical leadership development programme. J Clin Nurs. 2014;23(17-18):2533-41. [PubMed ID: 24393275]. https://doi.org/10.1111/jocn.12461.

21. Cantillon P, D'Eath M, De Grave W, Dornan T. How do clinicians become teachers? A communities of practice perspective. Adv Health Sci Educ Theory Pract. 2016;21(5):991-1008. [PubMed ID: 26961285]. https://doi.org/10.1007/s10459-016-9674-9.

22. Savari L, Shafiei M, AllahverdiPour H, Matlabi H. Analysis of the third-grade curriculum for health subjects: Application of Health Education Curriculum Analysis Tool. J Multidiscip Healthc. 2018;11:205-10. [PubMed ID: 29719403]. [PubMed Central ID: PMC5922235]. https://doi.org/10.2147/JMDH.S152454.

23. Haghdoost AA, Shakibi MR. Medical student and academic staff perceptions of role models: an analytical cross-sectional study. BMC Med Educ. 2006;6:9. [PubMed ID: 16503974]. [PubMed Central ID: PMC1402291]. https://doi.org/10.1186/1472-6920-6-9.

24. Horneffer A, Fassnacht U, Oechsner W, Huber-Lang M, Boeckers TM, Boeckers A. Effect of didactically qualified student tutors on their tutees' academic performance and tutor evaluation in the gross anatomy course. Ann Anat. 2016;208:170-8. [PubMed ID: 27328407]. https://doi.org/10.1016/j.aanat.2016.05.008.

25. Mohammadi A, Mojtahedzadeh R, Emami Razavi SH. Challenges of measuring a faculty member activity in medical schools. Iran Red Crescent Med J. 2011;13(3):203-7. [PubMed ID: 22737464]. [PubMed Central ID: PMC3371942].

26. Shahhosseini Z, Danesh M. Experiences of Academic Members About their Professional Challenges: a Content Analysis Qualitative study. Acta Inform Med. 2014;22(2):123-7. [PubMed ID: 24825939]. [PubMed Central ID: PMC4008043]. https://doi.org/10.5455/aim.2014.22.123-127.

27. Vieira MA, Gadelha AA, Moriyama TS, Bressan RA, Bordin IA. Evaluating the effectiveness of a training program that builds teachers' capability to identify and appropriately refer middle and high school students with mental health problems in Brazil: an exploratory study. BMC Public Health. 2014;14:210. [PubMed ID: 24580750]. [PubMed Central ID: PMC3975921]. https://doi.org/10.1186/1471-2458-14-210.

28. Boerboom TB, Dolmans DH, Jaarsma AD, Muijtjens AM, Van Beukelen P, Scherpbier AJ. Exploring the validity and reliability of a questionnaire for evaluating veterinary clinical teachers' supervisory skills during clinical rotations. Med Teach. 2011;33(2):e84-91. [PubMed ID: 21275538]. https://doi.org/10.3109/0142159X.2011.536277.

29. Young ME, Cruess SR, Cruess RL, Steinert Y. The Professionalism Assessment of Clinical Teachers (PACT): the reliability and validity of a novel tool to evaluate professional and clinical teaching behaviors. Adv Health Sci Educ Theory Pract. 2014;19(1):99-113. [PubMed ID: 23754583]. https://doi.org/10.1007/s10459-013-9466-4.

30. McQueen SA, Petrisor B, Bhandari M, Fahim C, McKinnon V, Sonnadara RR. Examining the barriers to meaningful assessment and feedback in medical training. Am J Surg. 2016;211(2):464-75. [PubMed ID: 26679827]. https://doi.org/10.1016/j.amjsurg.2015.10.002.

31. Ipsen M, Eika B, Morcke AM, Thorlacius-Ussing O, Charles P. Measures of educational effort: what is essential to clinical faculty? Acad Med. 2010;85(9):1499-505. [PubMed ID: 20531150]. https://doi.org/10.1097/ACM.0b013e3181e4baca.

32. Guraya SY, Khoshhal KI, Yusoff MSB, Khan MA. Why research productivity of medical faculty declines after attaining professor rank? A multi-center study from Saudi Arabia, Malaysia and Pakistan. Med Teach. 2018;40(sup1):S83-9. [PubMed ID: 29730951]. https://doi.org/10.1080/0142159X.2018.1465532.

33. van Roermund TC, Tromp F, Scherpbier AJ, Bottema BJ, Bueving HJ. Teachers' ideas versus experts' descriptions of 'the good teacher' in postgraduate medical education: implications for implementation. A qualitative study. BMC Med Educ. 2011;11:42. [PubMed ID: 21711507]. [PubMed Central ID: PMC3163623]. https://doi.org/10.1186/1472-6920-11-42.

34. Wang KE, Fitzpatrick C, George D, Lane L. Attitudes of affiliate faculty members toward medical student summative evaluation for clinical clerkships: a qualitative analysis. Teach Learn Med. 2012;24(1):8-17. [PubMed ID: 22250930]. https://doi.org/10.1080/10401334.2012.641478.

35. Tsingos-Lucas C, Bosnic-Anticevich S, Smith L. A Retrospective Study on Students' and Teachers' Perceptions of the Reflective Ability Clinical Assessment. Am J Pharm Educ. 2016;80(6):101. [PubMed ID: 27667838]. [PubMed Central ID: PMC5023972]. https://doi.org/10.5688/ajpe806101.

36. Roos M, Kadmon M, Kirschfink M, Koch E, Junger J, Strittmatter-Haubold V, et al. Developing medical educators--a mixed method evaluation of a teaching education program. Med Educ Online. 2014;19:23868. [PubMed ID: 24679671]. [PubMed Central ID: PMC3969510]. https://doi.org/10.3402/meo.v19.23868.

37. Nandini C, Suvajit D, Kaushik M, Chandan C. Students’ and Teachers’ Perceptions of Factors Leading to Poor Clinical Skill Development in Medical Education: A Descriptive Study. Educ Res Int. 2015;2015:1-3. https://doi.org/10.1155/2015/124602.

38. Colletti JE, Flottemesch TJ, O'Connell TA, Ankel FK, Asplin BR. Developing a standardized faculty evaluation in an emergency medicine residency. J Emerg Med. 2010;39(5):662-8. [PubMed ID: 19959319]. https://doi.org/10.1016/j.jemermed.2009.09.001.

39. Kamalzadeh H, Abedini S, Aghamolaei T, Abedini S. [Perspectives of medical students regarding criteria for a good university professor, Bandar Abbas, Iran]. Hormozgan Med J. 2010;14(3):233-7. Persian.

40. Arah OA, Heineman MJ, Lombarts KM. Factors influencing residents' evaluations of clinical faculty member teaching qualities and role model status. Med Educ. 2012;46(4):381-9. [PubMed ID: 22429174]. https://doi.org/10.1111/j.1365-2923.2011.04176.x.

41. Stalmeijer RE, Dolmans DH, Wolfhagen IH, Peters WG, van Coppenolle L, Scherpbier AJ. Combined student ratings and self-assessment provide useful feedback for clinical teachers. Adv Health Sci Educ Theory Pract. 2010;15(3):315-28. [PubMed ID: 19779976]. [PubMed Central ID: PMC2940045]. https://doi.org/10.1007/s10459-009-9199-6.

42. Mohammadi A, Arabshahi KS, Mojtahedzadeh R, Jalili M, Valian HK. A model for evaluation of faculty members' activities based on meta-evaluation of a 5-year experience in medical school. J Res Med Sci. 2015;20(6):563-70. [PubMed ID: 26600831]. [PubMed Central ID: PMC4621650]. https://doi.org/10.4103/1735-1995.165958.

43. Kikukawa M, Stalmeijer RE, Okubo T, Taketomi K, Emura S, Miyata Y, et al. Development of culture-sensitive clinical teacher evaluation sheet in the Japanese context. Med Teach. 2017;39(8):844-50. [PubMed ID: 28509610]. https://doi.org/10.1080/0142159X.2017.1324138.

44. Sutkin G, Wagner E, Harris I, Schiffer R. What makes a good clinical teacher in medicine? A review of the literature. Acad Med. 2008;83(5):452-66. [PubMed ID: 18448899]. https://doi.org/10.1097/ACM.0b013e31816bee61.

45. Flowers C. Confirmatory Factor Analysis of Scores on the Clinical Experience Rubric: A Measure of Dispositions for Preservice Teachers. Educ Psychol Meas. 2006;66(3):478-88. https://doi.org/10.1177/0013164405282458.

46. Yeh MM, Cahill DF. Quantifying physician teaching productivity using clinical relative value units. J Gen Intern Med. 1999;14(10):617-21. [PubMed ID: 10571707]. [PubMed Central ID: PMC1496754]. https://doi.org/10.1046/j.1525-1497.1999.01029.x.

47. Ahmady S, Changiz T, Brommels M, Gaffney FA, Thor J, Masiello I. Contextual adaptation of the Personnel Evaluation Standards for assessing faculty evaluation systems in developing countries: the case of Iran. BMC Med Educ. 2009;9:18. [PubMed ID: 19400932]. [PubMed Central ID: PMC2680845]. https://doi.org/10.1186/1472-6920-9-18.

48. Bastani P, Amini M, Taher Nejad K, Shaarbafchi Zadeh N. Faculty members’ viewpoints about the present and the ideal teacher evaluation system in Tehran University of Medical Sciences. J Adv Med Prof. 2013;1(4):140-7.

49. Kamali F, Yamani N, Changiz T. Investigating the faculty evaluation system in Iranian Medical Universities. J Educ Health Promot. 2014;3:12. [PubMed ID: 24741652]. [PubMed Central ID: PMC3977402]. https://doi.org/10.4103/2277-9531.127572.

50. Clark JM, Houston TK, Kolodner K, Branch Jr WT, Levine RB, Kern DE. Teaching the teachers: national survey of faculty development in departments of medicine of U.S. teaching hospitals. J Gen Intern Med. 2004;19(3):205-14. [PubMed ID: 15009774]. [PubMed Central ID: PMC1492160]. https://doi.org/10.1111/j.1525-1497.2004.30334.x.

51. Geraci SA, Hollander H, Babbott SF, Buranosky R, Devine DR, Kovach RA, et al. AAIM report on master teachers and clinician educators part 4: faculty role and scholarship. Am J Med. 2010;123(11):1065-9. [PubMed ID: 21035595]. https://doi.org/10.1016/j.amjmed.2010.07.005.

52. Kopelow M, Campbell C. The benefits of accrediting institutions and organisations as providers of continuing professional education. J Eur CME. 2013;2(1):10-4. https://doi.org/10.3109/21614083.2013.779580.

53. Roth LM, Schenk M, Bogdewic SP. Developing clinical teachers and their organizations for the future of medical education. Med Educ. 2001;35(5):428-9. [PubMed ID: 11328511]. https://doi.org/10.1046/j.1365-2923.2001.00946.x.

54. Berk RA. Using the 360 degrees multisource feedback model to evaluate teaching and professionalism. Med Teach. 2009;31(12):1073-80. [PubMed ID: 19995170]. https://doi.org/10.3109/01421590802572775.

55. Burgess A, Goulston K, Oates K. Role modelling of clinical tutors: a focus group study among medical students. BMC Med Educ. 2015;15:17. [PubMed ID: 25888826]. [PubMed Central ID: PMC4335700]. https://doi.org/10.1186/s12909-015-0303-8.

56. Geraci SA, Kovach RA, Babbott SF, Hollander H, Buranosky R, Devine DR, et al. AAIM Report on Master Teachers and Clinician Educators Part 2: faculty development and training. Am J Med. 2010;123(9):869-72. [PubMed ID: 20800159]. https://doi.org/10.1016/j.amjmed.2010.05.014.

57. Fluit CR, Bolhuis S, Grol R, Laan R, Wensing M. Assessing the quality of clinical teachers: a systematic review of content and quality of questionnaires for assessing clinical teachers. J Gen Intern Med. 2010;25(12):1337-45. [PubMed ID: 20703952]. [PubMed Central ID: PMC2988147]. https://doi.org/10.1007/s11606-010-1458-y.

58. Jochemsen-van der Leeuw HG, van Dijk N, Wieringa-de Waard M. Assessment of the clinical trainer as a role model: a Role Model Apperception Tool (RoMAT). Acad Med. 2014;89(4):671-7. [PubMed ID: 24556764]. [PubMed Central ID: PMC4885572]. https://doi.org/10.1097/ACM.0000000000000169.

59. Chandran L, Gusic M, Baldwin C, Turner T, Zenni E, Lane JL, et al. Evaluating the performance of medical educators: a novel analysis tool to demonstrate the quality and impact of educational activities. Acad Med. 2009;84(1):58-66. [PubMed ID: 19116479]. https://doi.org/10.1097/ACM.0b013e31819045e2.

60. Kamran A, Zibaei M, Mirkaimi K, Shahnazi H. Designing and evaluation of the teaching quality assessment form from the point of view of the Lorestan University of Medical Sciences students - 2010. J Educ Health Promot. 2012;1:43. [PubMed ID: 23555146]. [PubMed Central ID: PMC3577396]. https://doi.org/10.4103/2277-9531.104813.