1. Background

There are more than 68,000 new cases of kidney cancer and 25,600 deaths related to kidney cancer in China every year, with the prevalence rates showing a rising annual trend (1, 2). Renal cell carcinoma (RCC) accounts for more than 90% of all kidney cancer cases (3). Although diagnosis of RCC through biopsy is accurate, the invasive and inconvenient nature of this modality makes it less acceptable for physicians and patients (4, 5). On the other hand, computed tomography (CT) examination and other radiological imaging technologies have become the primary diagnostic tools for RCC, enabling active surveillance (6, 7). The increasing prevalence of CT examination has facilitated the early detection of RCC (8). Nevertheless, the most common benign renal tumor, that is, renal angiomyolipoma (AML), shares significant similarity with RCC on CT images (9, 10), which frequently leads to misdiagnosis and subsequently, management dilemmas, such as unnecessary biopsies and treatments (7, 11, 12). Therefore, a better diagnostic strategy is needed for the active surveillance of RCC.

Computer systems can accurately transform subtle texture features into quantitative data, and machine learning (ML) algorithms can build predictive models from big data (13). Therefore, computer-aided diagnosis (CAD), empowered by ML algorithms and trained by massive biomedical images, can provide a promising solution to help physicians establish a diagnosis (14). Developments in recent years have led CAD to outperform empirical predictions regarding both efficiency and accuracy for diagnosis of cancer (15).

Previous studies have applied the support vector machine (SVM) algorithm to RCC diagnosis and clearly distinguished RCC from AML (16, 17). However, the relatively small number of features drawn from CT images in these studies may limit the application of these models to more complicated scenarios. In addition, because these studies rarely discuss generalization beyond their specific cohorts, a baseline benchmark of different algorithms is needed to select the most suitable algorithm for RCC CAD.

2. Objectives

The present study aimed to evaluate the performance of different supervised ML algorithms to diagnose RCC based on CT examinations.

3. Patients and Methods

3.1. Patients

This retrospective study was approved by the institutional ethics review board of Hunan Cancer Hospital, Hunan, China (No.: 2008-3). A total of 69 patients were included in this study as they met the following inclusion criteria: (1) pathological confirmation of RCC or AML; (2) diagnosis in the last five years; and (3) undergoing a three-phase CT scan before any treatment or surgery. All patients were randomly divided into two datasets, that is, training and testing sets.

3.2. CT Image Acquisition

The CT images were acquired using a SOMATOM Definition AS VA48A scanner (Siemens, Germany) at the department of diagnostic radiology of Hunan Cancer Hospital. The CT scanning protocol was applied for all 69 patients. Accordingly, 85 mL of nonionic contrast agent (Omnipaque 350, GE Healthcare, USA) was administered at a rate of 3 mL/s. The CT scan protocol included three phases: Unenhanced phase (UP), corticomedullary phase (CMP, with a 25-sec delay after contrast injection), and nephrographic phase (NP, with a 50-sec delay after contrast injection). In the diagnostic process, no pathophysiological condition requiring an adjustment based on the protocol was found.

3.3. CT Image Texture Extraction

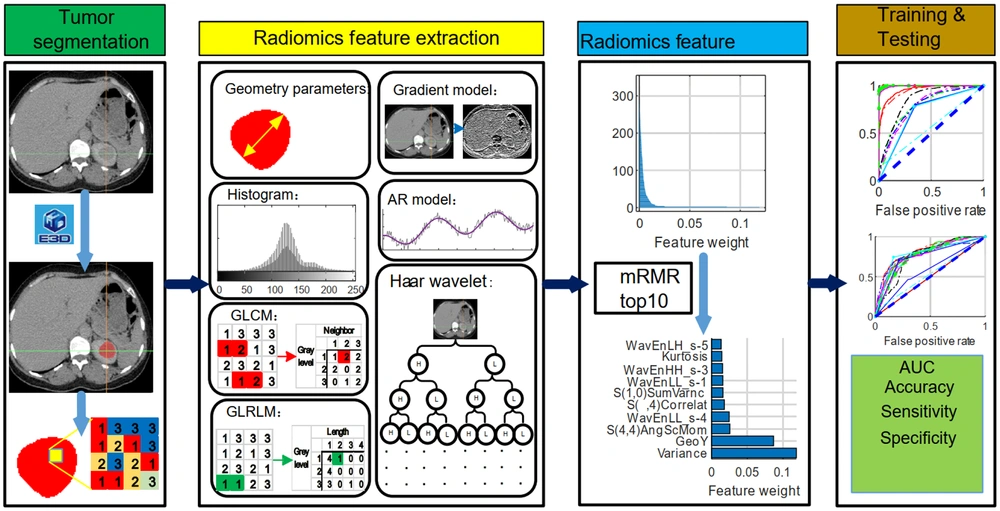

The E3D software (e3d-med.com) was used for marking the region of interest (ROI) (18). The ROI was contoured by experienced physicians at our hospital. The texture features of the ROI were then extracted, digitized, and quantified in MaZda software according to its manual (19-21). Briefly, 352 features were drawn from seven categories (Appendix 1), which were as follows: Autoregressive model (AR model, including coefficients of neighboring pixels, reflecting coarse-to-fine stratification), geometric parameters (GP, including the characteristics of the ROI, such as location, orientation, size, and geometric and topological descriptors), gradient model (GM, the direction of change in grayscale intensity, representing the image intensity distribution), gray-level co-occurrence matrix (GLCM, computed from the intensities of pairs of pixels, describing homogeneity), gray-level run-length matrix (GLRLM, calculated in four directions, that is, horizontal, vertical, 45°, and 135° angles, indicating image coarseness), the Haar wavelet (HW, spatial frequencies at multiple scales, identifying coarseness), and grayscale histogram (GH, including characteristics reflecting image uniformity). All features were normalized by the 3-sigma method. Next, the weight of each feature was evaluated by the minimum redundancy-maximum relevance (mRMR) algorithm (22), and the 10 features with the highest weights were selected to train and test the diagnostic ML models (Figure 1).
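The normalization and selection steps described above can be sketched in Python with NumPy. This is a minimal illustration, not the MaZda or mRMR implementation used in the study. The function names `three_sigma_normalize` and `greedy_mrmr` are hypothetical, the 3-sigma step is assumed to mean clipping each feature to its mean ± 3 SD and rescaling to [0, 1], relevance and redundancy are approximated with absolute Pearson correlation rather than mutual information, and the data are synthetic.

```python
import numpy as np


def three_sigma_normalize(X):
    """Clip each feature column to mean +/- 3 SD, then rescale to [0, 1].

    Assumption: one common reading of "3-sigma normalization".
    """
    X = np.asarray(X, dtype=float)
    mu = X.mean(axis=0)
    sd = X.std(axis=0)
    sd = np.where(sd == 0, 1.0, sd)  # guard against constant columns
    lo, hi = mu - 3 * sd, mu + 3 * sd
    return (np.clip(X, lo, hi) - lo) / (hi - lo)


def greedy_mrmr(X, y, k=10):
    """Greedy mRMR-style ranking (correlation-based variant, an assumption).

    At each step, pick the feature with the largest value of
    relevance(feature, label) - mean redundancy(feature, already selected).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n_features = X.shape[1]
    relevance = np.nan_to_num(
        np.array([abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(n_features)])
    )
    redundancy = np.nan_to_num(np.abs(np.corrcoef(X, rowvar=False)))
    selected = [int(np.argmax(relevance))]
    while len(selected) < min(k, n_features):
        remaining = [j for j in range(n_features) if j not in selected]
        scores = [relevance[j] - redundancy[j, selected].mean() for j in remaining]
        selected.append(remaining[int(np.argmax(scores))])
    return selected


# Synthetic example: column 1 is a near-copy of column 0, columns 2-4 are noise.
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=200)
informative = y + 0.5 * rng.normal(size=200)
duplicate = informative + 0.01 * rng.normal(size=200)
noise = rng.normal(size=(200, 3))
X = three_sigma_normalize(np.column_stack([informative, duplicate, noise]))
top = greedy_mrmr(X, y, k=2)
```

In this toy setting, the second pick avoids the near-duplicate of the first feature, which is the redundancy penalty at work; a mutual-information criterion would follow the same greedy pattern.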

3.4. Diagnostic ML Models

The ML models were trained and tested in Python 3.7 using the Scikit-learn package (23). The following supervised ML algorithms were used: AdaBoost classifier, CatBoost classifier, decision tree classifier, extra-trees classifier, extreme gradient boosting, Gaussian process classifier, gradient boosting classifier, k-nearest neighbor classifier, linear discriminant analysis, logistic regression, multilayer perceptron (MLP) classifier, naive Bayes classifier, quadratic discriminant analysis, random forest classifier, ridge classifier, and SVM (linear kernel). All models were run with default parameters (Appendix 2), with a prediction value ≤ 0.5 indicating a benign tumor and > 0.5 indicating RCC. The performance of the models was evaluated by the receiver operating characteristic (ROC) curve. The accuracy of diagnosis (ACC), sensitivity, and specificity were calculated as follows (24):

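$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN}$$

$$\mathrm{Specificity} = \frac{TN}{TN + FP}$$

where TP represents a true positive, TN represents a true negative, FP represents a false positive, and FN represents a false negative.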
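As a worked illustration of these metrics, the sketch below counts TP, TN, FP, and FN at the 0.5 cutoff (a score > 0.5 is read as RCC, coded 1; otherwise AML, coded 0) and derives ACC, sensitivity, and specificity from the counts. The function names and the example scores are hypothetical and are not taken from the study data.

```python
def confusion_counts(y_true, y_score, threshold=0.5):
    """Count TP, TN, FP, FN, predicting positive (1) when score > threshold."""
    tp = tn = fp = fn = 0
    for truth, score in zip(y_true, y_score):
        predicted = 1 if score > threshold else 0
        if predicted == 1 and truth == 1:
            tp += 1
        elif predicted == 0 and truth == 0:
            tn += 1
        elif predicted == 1 and truth == 0:
            fp += 1
        else:
            fn += 1
    return tp, tn, fp, fn


def diagnostic_metrics(tp, tn, fp, fn):
    """Return (ACC, sensitivity, specificity) from confusion counts."""
    acc = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return acc, sensitivity, specificity


# Hypothetical labels (1 = RCC, 0 = AML) and model scores.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_score = [0.9, 0.6, 0.4, 0.2, 0.5, 0.7, 0.8, 0.1]

counts = confusion_counts(y_true, y_score)
acc, sensitivity, specificity = diagnostic_metrics(*counts)
print(counts, acc, sensitivity, specificity)  # (3, 3, 1, 1) 0.75 0.75 0.75
```

Note that a score of exactly 0.5 is classified as benign, matching the ≤ 0.5 rule stated in the text.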

4. Results

The age of the patients is presented in Table 1. There was no significant difference in terms of sex or age between the two groups. A total of 5,360 CT images were obtained from 69 patients. The samples were further divided into a training dataset (28 RCC and 20 AML cases; 3,653 CT images) and a testing dataset (12 RCC and 9 AML cases; 1,707 CT images) (Table 1).

Abbreviations: AML, angiomyolipoma; RCC, renal cell carcinoma; SD, standard deviation; N, patient number; i.no, CT image number.

a P-value on t-test.

b P-value on chi-square test.

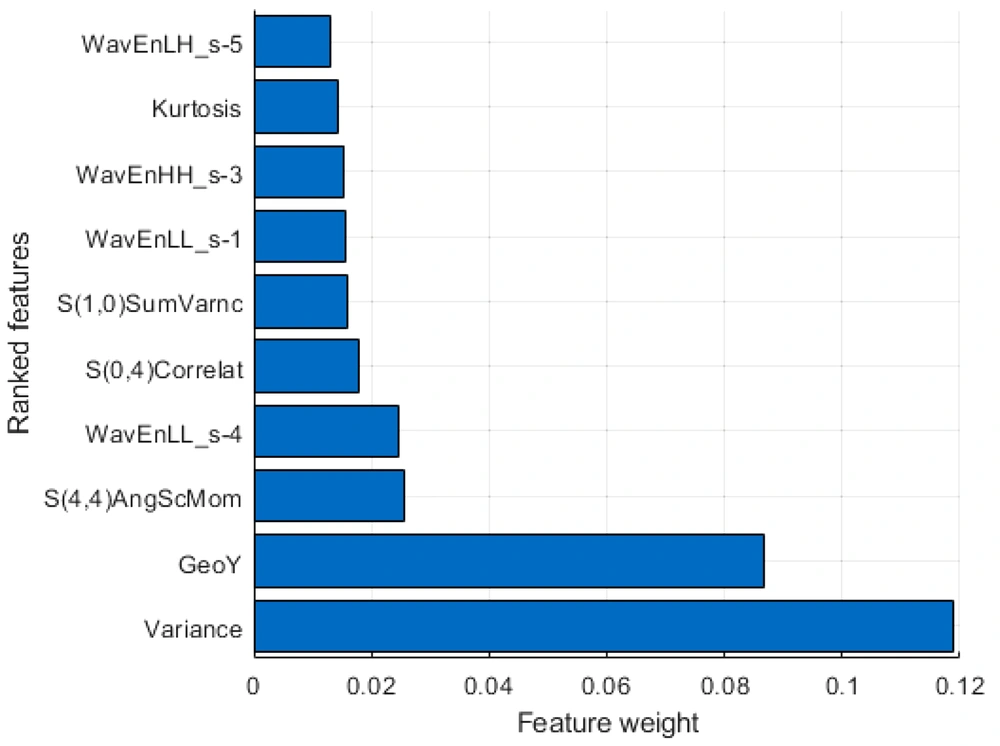

The workflow and strategies applied in this study are presented in Figure 1. Briefly, the CT images were digitized in the E3D software, and the ROI was marked manually. A total of 352 radiomics features were extracted in seven categories (Table 2). The weight of each feature was evaluated by the mRMR algorithm (22), and the top 10 features (Figure 2) were selected for training and testing the diagnostic models using 16 supervised ML algorithms. The models were established using the training dataset and validated using the testing dataset. The performance of the models was mainly evaluated by the ROC curve, area under the ROC curve (AUC), and ACC.

| Feature type | Number of features extracted | Number of selected features | Top 10 features | Feature weight |
|---|---|---|---|---|
| Geometric parameters | 73 | 1 | GeoY | 0.0868 |
| Histogram | 9 | 2 | Variance | 0.1188 |
| | | | Kurtosis | 0.0143 |
| Gray-level co-occurrence matrix | 220 | 3 | S(1,0) SumVarnc | 0.0158 |
| | | | S(0,4) Correlat | 0.0177 |
| | | | S(4,4) AngScMom | 0.0254 |
| Gray-level run-length matrix | 20 | 0 | - | - |
| Gradient model | 5 | 0 | - | - |
| Autoregressive model | 5 | 0 | - | - |
| Haar wavelet | 20 | 4 | WavEnLL_s-1 | 0.0156 |
| | | | WavEnHH_s-3 | 0.0153 |
| | | | WavEnHH_s-4 | 0.0245 |
| | | | WavEnLH_s-5 | 0.0132 |

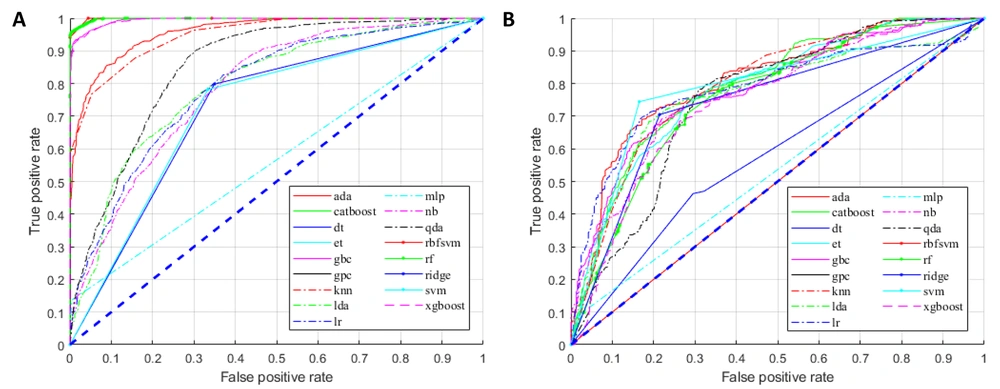

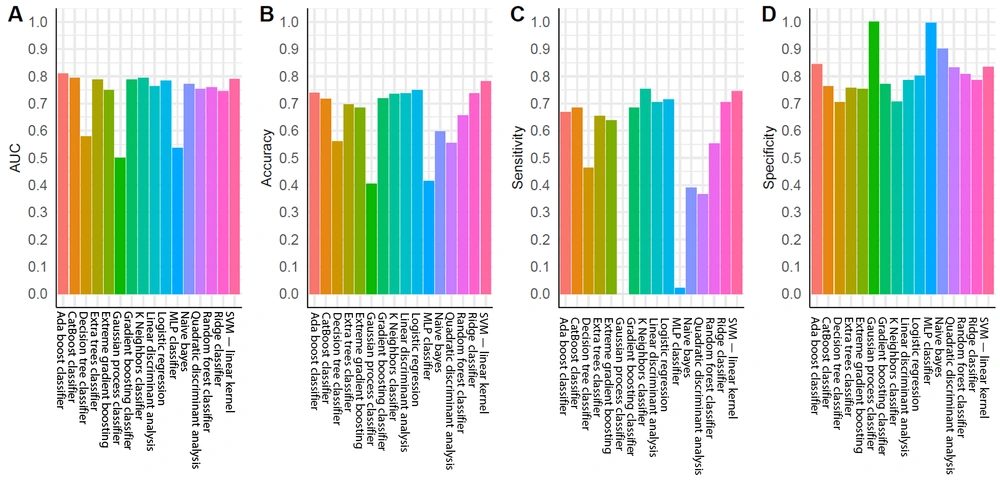

For the training group, all established models, except for the MLP classifier, showed promising performance (Figure 3A). Nonetheless, overfitting was observed, as the AUCs of some models were close to one (Figure 3A). In the testing group, as expected, the models generally had lower AUCs (Figure 3B). However, models built by the AdaBoost classifier, CatBoost classifier, gradient boosting classifier, k-nearest neighbor classifier, linear discriminant analysis, logistic regression, ridge classifier, and SVM (linear kernel) exhibited discriminating potentials for the testing dataset, with AUC of ≥ 0.75 and ACC of ≥ 0.70 (Figure 4A and B).

In contrast, three models in the testing group, including the decision tree classifier, Gaussian process classifier, and MLP classifier, had AUCs below 0.6 (Figures 3A and 4A), suggesting poor discriminative power. The SVM model showed the most promising result. Because its AUC values were similar in the training and testing datasets (0.73 and 0.79, respectively), the model appeared stable. The ACC of the SVM model (linear kernel) was also the highest in the testing dataset (Figure 4). The specificity of the tested models was generally higher than their sensitivity, and some algorithms with high AUCs had a low ACC (Figure 4); therefore, better performance might be achieved by fine-tuning the parameters.
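The comparison of training and testing AUCs reported above can be illustrated with a short scikit-learn sketch. It is not the study pipeline: the data are synthetic (generated with `make_classification`), only five of the 16 algorithms are included, and `random_state` is fixed where available purely for reproducibility. A large gap between training and testing AUC is the overfitting pattern described in the text.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for 10 selected texture features (assumption).
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=4, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# Otherwise-default models; random_state is set only for reproducibility.
models = {
    "AdaBoost": AdaBoostClassifier(random_state=0),
    "decision tree": DecisionTreeClassifier(random_state=0),
    "logistic regression": LogisticRegression(),
    "random forest": RandomForestClassifier(random_state=0),
    "SVM (linear kernel)": SVC(kernel="linear"),
}


def continuous_scores(model, X):
    """Use decision_function when available, else the positive-class probability."""
    if hasattr(model, "decision_function"):
        return model.decision_function(X)
    return model.predict_proba(X)[:, 1]


results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    train_auc = roc_auc_score(y_train, continuous_scores(model, X_train))
    test_auc = roc_auc_score(y_test, continuous_scores(model, X_test))
    results[name] = (train_auc, test_auc)

for name, (train_auc, test_auc) in results.items():
    print(f"{name:22s} train AUC = {train_auc:.2f}  test AUC = {test_auc:.2f}")
```

An unpruned decision tree typically reaches a training AUC of 1.0 on continuous features, so its testing AUC is the more informative number.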

5. Discussion

In this study, 16 algorithms were compared for discriminating RCC from AML. After quantification in MaZda software, 3-sigma normalization, and weight measurement using the mRMR algorithm, the top 10 weight features were fed into all the models with default parameters. Unlike deep learning algorithms, these algorithms showed high explainability, as the main features were clearly defined and carefully selected based on the ranking of weights. Some of the algorithms showed reasonable results based on the AUC of ROC, specificity, and sensitivity analyses. Among all tested algorithms, the SVM (linear kernel) model and AdaBoost classifier yielded the most promising results for the further development of RCC CAD systems.

The SVM algorithm is one of the most common algorithms in CAD development (25, 26). The strong performance of SVM in our study is consistent with previous research, which found the SVM algorithm to be sensitive for RCC diagnosis (16, 17). In these studies, the AUC of the SVM algorithm ranged from 0.8 to 0.9. However, no testing dataset was applied to their models to evaluate overfitting. The present study improved the credibility of the SVM algorithm by benchmarking multiple models and applying a carefully designed dataset with properly divided training/testing sets. The AdaBoost classifier had the highest AUC in the testing dataset (Figure 4A). Previous studies have also reported the high AUC of the AdaBoost classifier and its potential application in medical image processing, particularly in CT imaging (27, 28). However, in the current study, its ACC was only 0.74 because of a significant discrepancy between sensitivity and specificity (Figure 4B - D). Therefore, for the AdaBoost classifier, the default threshold setting did not achieve the best discrimination, and further adjustment is required. Research also suggests that the AdaBoost algorithm is sensitive to noise but less prone to overfitting; therefore, it is widely tested in ML diagnostic studies (29-31).

Although the results of 16 supervised ML algorithms were different in the present study, each algorithm had its own merits and limitations. The results were greatly influenced by factors, such as data quality, data size, context, feature selection, and manual processing. Therefore, to establish a CAD system that can function in the real world, it is important to compare and examine different strategies repeatedly. Meanwhile, classification of training and testing datasets can greatly affect the results. In the current study, to mimic real-world diagnostics using limited resources, the training and testing sets were divided by patients; therefore, when evaluating the models, the interference of patient-specific factors could be minimized. In our parallel experiment, the training and testing sets were divided by images, which resulted in strong overfitting for many algorithms (Appendix 3). Also, due to the limited number of patients, the characteristics of RCC against AML were not fully recognized by the algorithms. Besides, the relatively small number of patients might have caused bias during model establishment (32).
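The difference between dividing by patients and dividing by images can be made concrete with scikit-learn's `GroupShuffleSplit`, which keeps all images from one patient on the same side of the split. The sketch below uses synthetic patient IDs, labels, and features; the counts and the 30% test fraction are assumptions for illustration, not the study's actual allocation.

```python
import numpy as np
from sklearn.model_selection import GroupShuffleSplit

rng = np.random.default_rng(0)

# Synthetic cohort: 20 patients, each contributing 50-99 CT images (assumption).
n_patients = 20
images_per_patient = rng.integers(50, 100, size=n_patients)
patient_ids = np.repeat(np.arange(n_patients), images_per_patient)

# One label per patient (0 = AML, 1 = RCC), propagated to every image.
patient_label = rng.integers(0, 2, size=n_patients)
y = patient_label[patient_ids]
X = rng.normal(size=(patient_ids.size, 10))  # placeholder features

# Patient-level split: groups are never shared between train and test.
splitter = GroupShuffleSplit(n_splits=1, test_size=0.3, random_state=0)
train_idx, test_idx = next(splitter.split(X, y, groups=patient_ids))

train_patients = set(patient_ids[train_idx].tolist())
test_patients = set(patient_ids[test_idx].tolist())
print(len(train_patients), "training patients;", len(test_patients), "testing patients")
```

With an image-level split, images from the same patient would appear in both sets, so a model could score well by recognizing patient-specific patterns rather than tumor type.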

To reduce the complexity of comparisons, all models in this study were run under default settings at a cutoff point of 0.5 (0: AML, 1: RCC). Since the present study aimed to benchmark ML algorithms for the diagnosis of RCC, a cutoff point of 0.5 was used without any probability threshold optimization. It should also be noted that the supervised ML algorithms were used in their original form with default parameters, and that not every optimization strategy is suitable for improving the performance of a given model framework. This choice rested on the intuitive expectation that the models should improve consistently in performance. It also suggests the need for further optimization of the current models (e.g., increasing the number of patients, diversifying the source of images, and running models under optimized settings and cutoff points).
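One possible form of the cutoff optimization mentioned above is to choose the threshold that maximizes Youden's J statistic (sensitivity + specificity − 1). The study did not perform this step; the sketch below is only an illustration with hypothetical scores and a hypothetical function name, and in practice the cutoff would be chosen on training or validation data rather than on the testing set.

```python
def youden_threshold(y_true, y_score):
    """Return (threshold, sensitivity, specificity) maximizing Youden's J.

    Candidate thresholds are midpoints between consecutive unique scores;
    a case is predicted positive when its score is > threshold.
    """
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = len(y_true) - n_pos
    unique_scores = sorted(set(y_score))
    candidates = [(a + b) / 2 for a, b in zip(unique_scores, unique_scores[1:])]

    best = None
    for threshold in candidates:
        tp = sum(1 for t, s in zip(y_true, y_score) if t == 1 and s > threshold)
        tn = sum(1 for t, s in zip(y_true, y_score) if t == 0 and s <= threshold)
        sensitivity = tp / n_pos
        specificity = tn / n_neg
        j = sensitivity + specificity - 1
        if best is None or j > best[0]:
            best = (j, threshold, sensitivity, specificity)
    return best[1], best[2], best[3]


# Hypothetical scores: 0 = AML, 1 = RCC.
y_true = [0, 0, 0, 0, 1, 1, 1, 1]
y_score = [0.10, 0.20, 0.30, 0.55, 0.35, 0.45, 0.60, 0.90]

threshold, sensitivity, specificity = youden_threshold(y_true, y_score)
print(round(threshold, 3), sensitivity, specificity)  # 0.325 1.0 0.75
```

At the default cutoff of 0.5, the same scores give a sensitivity of 0.50 and a specificity of 0.75, which shows how a fixed cutoff can leave sensitivity and specificity unbalanced.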

Beyond the supervised ML algorithms used in this study, unsupervised ML and deep learning algorithms are being increasingly applied in CAD development to reduce reliance on manual annotation and reveal previously unknown details from radiomics (13). The simple experimental design of the current study aimed to provide a baseline benchmark of 16 algorithms and to indicate the potential application of ML algorithms in CAD systems with highly explainable feature extraction and a rather simple parameter design.

In conclusion, diagnostic classifiers based on ML algorithms are potentially valuable tools for the accurate diagnosis of RCC. The present study identified candidate algorithms that might show the best performance.