1. Background

Ultrasonography (US) is an indispensable tool in breast imaging, and complements both mammography and magnetic resonance imaging of the breast (1-4). It increases the specificity of mammography; in particular, it reduces both the number of false-negative lesions in dense breasts and the number of false-positive lesions that could lead to unnecessary biopsies (5, 6). However, the lack of reproducibility in lesion assessment and the operator dependency of breast US interpretation remain problematic (6-12). The Breast Imaging Reporting and Data System (BI-RADS) was published by the American College of Radiology (ACR) to offer a common vocabulary for the characterization of breast abnormalities, and to support effective communication with the referring physician (5, 13-16).

At some hospitals, US images are examined by skilled faculty members. At resident-training hospitals, however, the examinations may be conducted by residents. Part of the lack of reproducibility may be the product of inherent operator skill, which represents a small but unavoidable limitation of US as a modality. More commonly, however, the inconsistencies result from disparities in lesion assessment. Misunderstanding of the BI-RADS US lexicon or a lack of clinical practice may impair accurate assessment.

Many reports (17-21) have described the rates of discrepancy between radiology residents and experienced radiologists in emergency diagnostic imaging examinations of the head and abdomen. However, to the best of our knowledge, few studies have reported the difference in the interpretation of breast US images between residents and faculty radiologists. Thus, we evaluated the discrepancy between faculty members and radiology residents in breast US interpretations.

2. Objectives

The aim of our study was to evaluate inter-observer variability and performance discrepancies between faculty members and radiology residents, when assessing breast lesions, using the fifth edition (2013) of the ACR BI-RADS US lexicon. We also examined whether inter-observer agreement would improve in a second assessment after one education session.

3. Patients and Methods

3.1. Patients and Imaging

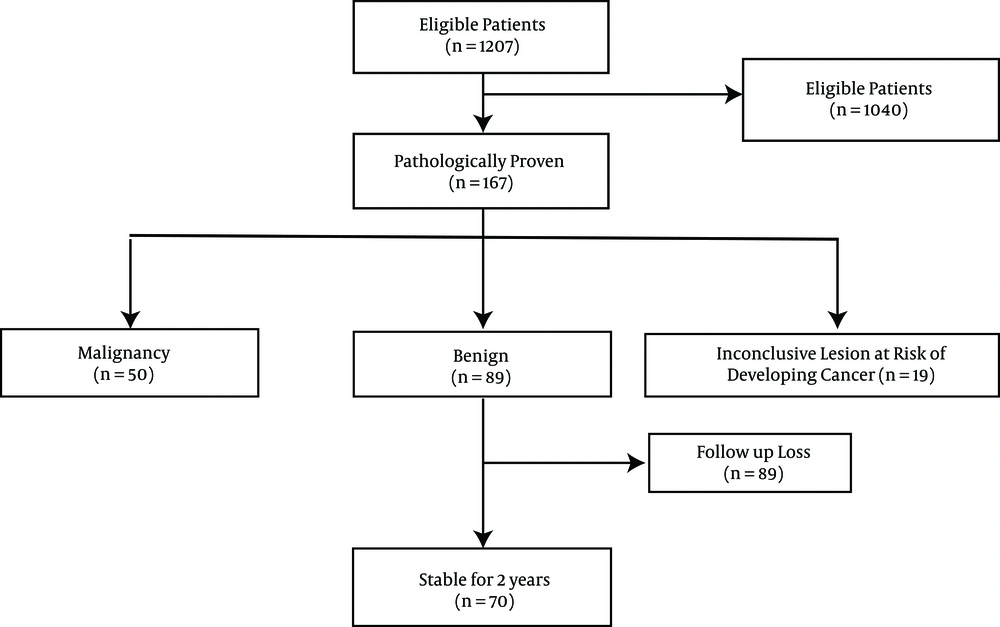

This retrospective study was approved by the institutional review board of our institution. We selected cases from female patients who had undergone bilateral whole-breast US and US-guided core needle biopsy or surgical excision between January 2008 and August 2014 (Figure 1). All US examinations were performed using high-resolution US equipment (IU22; Philips, Bothell, WA, USA) with a 12 MHz linear transducer. We included only histologically proven malignant and benign lesions. Benign lesions that had undergone biopsy had been radiographically stable for two years. We excluded lesions at risk of developing cancer, such as atypical lobular hyperplasia, atypical ductal hyperplasia, radial scar, papilloma, and phyllodes tumors. Ultimately, 120 lesions from 108 patients were selected for our study.

3.2. Study Protocol

The training program for residents at our institution includes six months of training in breast imaging (three months for second-year residents, two additional months for third-year residents, and one additional month for fourth-year residents), during which residents perform breast US and report their findings using the ACR BI-RADS lexicon.

To measure performance discrepancy, we divided the faculty members, senior residents and junior residents into three subgroups. We then analyzed inter-observer agreement and receiver operating characteristic (ROC) curves for each subgroup (22, 23). To estimate observer variability and performance discrepancies depending on years of practice, two faculty members with four and more than ten years of breast imaging experience, respectively, two junior residents who had completed the three months of breast imaging training, and two senior residents who had completed the full six months of training assessed the images without a special education session.

To identify whether inter-observer agreement could be enhanced by one education session, the participants gathered for one day after the first assessment. All six participants reviewed the fifth edition of the BI-RADS US lexicon during the education session, and then discussed in detail thirty cases that had been excluded from the present study. Four weeks after this education session, the original US images were randomly reordered by the investigator, and the participants then reassessed the images independently.

3.3. Image Assessment

We reviewed pre-existing breast US images retrospectively to compare the performance of the faculty members and the residents. For the assessment of each lesion, the participants examined at least two static US images, including radial and anti-radial images or transverse and longitudinal images. Each participant chose the most appropriate terms to describe breast abnormalities, using the fifth edition of BI-RADS-US. The BI-RADS lexicon for US is presented in Box 1. Following the description of the lesion, the final assessment was assigned to one of five categories, based on BI-RADS-US: category 3 (probably benign), 4a (low suspicion for malignancy), 4b (moderate suspicion for malignancy), 4c (high suspicion for malignancy), and 5 (highly suggestive of malignancy). In the present study, agreement among observers for the presence of associated findings and special cases was not assessed, and we concentrated on the US features of masses, such as shape, orientation, margin, echo pattern, and posterior features. For the designation of tissue composition, at least four static images covering each quadrant of the ipsilateral breast were also provided. Mammographic images and clinical background were not provided, in order to avoid bias in the assessment of the US images.

Box 1. The BI-RADS lexicon for US (fifth edition)

| Feature | Descriptors |
|---|---|
| Tissue composition (screening only) | A. Homogeneous background echotexture-fat ᵃ; B. Homogeneous background echotexture-fibroglandular ᵃ; C. Heterogeneous background echotexture ᵃ |
| Shape | Oval; Round; Irregular |
| Orientation | Parallel; Nonparallel |
| Margin | Circumscribed; Noncircumscribed: indistinct, angular, microlobulated, spiculated |
| Echo pattern | Anechoic; Hyperechoic; Complex cystic and solid ᵃ; Hypoechoic; Isoechoic; Heterogeneous ᵃ |
| Posterior features | No posterior features; Enhancement; Shadowing; Combined pattern |
| Calcifications | Calcifications in a mass; Calcifications outside of a mass; Intraductal calcifications ᵃ |
| Final assessment | Category 3; Category 4 (a, b, c); Category 5 |

ᵃ New in the fifth edition of the BI-RADS lexicon for breast sonography.

3.4. Statistical Analysis

The statistical analysis was performed using SPSS software (version 11.5 for Windows; SPSS Inc., Chicago, IL, USA). The kappa coefficient (κ) was calculated to determine inter-observer variability for BI-RADS terminology and the final category between the two readers in each subgroup. The κ values were interpreted using the guidelines of Landis and Koch (24, 25): poor agreement, κ = 0.00 - 0.20; fair agreement, κ = 0.21 - 0.40; moderate agreement, κ = 0.41 - 0.60; good agreement, κ = 0.61 - 0.80; and excellent agreement, κ = 0.81 - 1.00. To determine the inter-observer reliability among faculty radiologists, senior residents, and junior residents, intra-class correlation coefficients were calculated with 95% confidence intervals (CIs).
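As an illustration of the agreement statistic described above, the following minimal sketch computes an unweighted Cohen's κ for two readers and maps it onto the agreement levels quoted in the text. It is not the SPSS procedure used in the study: the function names, the handling of negative κ (treated here as poor) and the ten margin ratings are assumptions made up for the example, and confidence intervals are omitted.

```python
from collections import Counter


def cohen_kappa(reader_a, reader_b):
    """Unweighted Cohen's kappa for two readers rating the same lesions."""
    if len(reader_a) != len(reader_b) or not reader_a:
        raise ValueError("both readers must rate the same non-empty set of lesions")
    n = len(reader_a)
    # Observed proportion of lesions on which the readers chose the same term.
    observed = sum(a == b for a, b in zip(reader_a, reader_b)) / n
    # Agreement expected by chance, from each reader's marginal frequencies.
    freq_a, freq_b = Counter(reader_a), Counter(reader_b)
    expected = sum(freq_a[term] * freq_b[term] for term in freq_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when both readers use one identical term")
    return (observed - expected) / (1.0 - expected)


def agreement_level(kappa):
    """Map kappa to the agreement levels listed in the text.

    Assumption: negative values are reported as 'poor'.
    """
    if kappa <= 0.20:
        return "poor"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "good"
    return "excellent"


# Hypothetical margin descriptors chosen by two readers for ten lesions.
reader_a = ["circumscribed", "indistinct", "angular", "circumscribed", "spiculated",
            "circumscribed", "indistinct", "microlobulated", "circumscribed", "spiculated"]
reader_b = ["circumscribed", "angular", "angular", "circumscribed", "spiculated",
            "indistinct", "indistinct", "circumscribed", "circumscribed", "indistinct"]

kappa = cohen_kappa(reader_a, reader_b)
print(f"kappa = {kappa:.2f} ({agreement_level(kappa)} agreement)")
```

In this made-up example the readers agree on six of ten lesions, but part of that agreement is expected by chance alone, which is why κ is lower than the raw proportion of agreement.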

The area under the receiver operating characteristic (ROC) curve (AUC) was analyzed with 95% CIs to compare the diagnostic performance of faculty radiologists, senior residents, and junior residents, using MedCalc (version 10.1.6.0; MedCalc Software, Ghent, Belgium). The sensitivity and specificity for each category were also evaluated for each subgroup of observers. A P value of < 0.05 was considered to indicate statistical significance.
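The following sketch shows one way the AUC, sensitivity and specificity described above could be obtained from ordinal BI-RADS final categories. It is not the MedCalc procedure used in the study: the AUC is computed as the Mann-Whitney probability that a malignant lesion receives a higher category than a benign one (ties count one half), the positivity cut-off of category 4a is an assumption, and the eight lesions are invented for illustration.

```python
# Ordinal ranking of the five final assessment categories used in the study.
RANK = {"3": 0, "4a": 1, "4b": 2, "4c": 3, "5": 4}


def split_ranks(categories, is_malignant):
    """Return the category ranks of malignant and benign lesions separately."""
    pos = [RANK[c] for c, m in zip(categories, is_malignant) if m]
    neg = [RANK[c] for c, m in zip(categories, is_malignant) if not m]
    if not pos or not neg:
        raise ValueError("need at least one malignant and one benign lesion")
    return pos, neg


def auc_from_categories(categories, is_malignant):
    """AUC as the Mann-Whitney statistic over all malignant/benign pairs."""
    pos, neg = split_ranks(categories, is_malignant)
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def sensitivity_specificity(categories, is_malignant, threshold="4a"):
    """Sensitivity and specificity when `threshold` or higher counts as positive.

    Assumption: the cut-off is a free parameter; the source does not state it.
    """
    pos, neg = split_ranks(categories, is_malignant)
    cut = RANK[threshold]
    true_positives = sum(1 for rank in pos if rank >= cut)
    true_negatives = sum(1 for rank in neg if rank < cut)
    return true_positives / len(pos), true_negatives / len(neg)


# Hypothetical readings: four malignant lesions followed by four benign lesions.
categories = ["5", "4c", "4a", "3", "4b", "3", "4a", "3"]
is_malignant = [True, True, True, True, False, False, False, False]

auc = auc_from_categories(categories, is_malignant)
sens, spec = sensitivity_specificity(categories, is_malignant, threshold="4a")
print(f"AUC = {auc:.3f}, sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

Raising the `threshold` argument (for example to "4b") trades sensitivity for specificity, which is the trade-off the ROC curve summarizes across all cut-offs.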

4. Results

In total, 120 lesions in 108 patients met the selection criteria and were included in this study. The 108 patients ranged in age from 23 to 76 years (mean, 51.7 years). The size range of the lesions was 3 - 37 mm (mean, 17.4 mm) in the maximal dimension. Of the lesions, 50 were found to be malignant: invasive ductal carcinoma in 39 lesions, ductal carcinoma in situ in eight, invasive lobular carcinoma in two, and medullary carcinoma in one. The other 70 lesions were benign: fibroadenoma in 21 lesions, fibrocystic change in 19, stromal fibrosis in 12, ductal epithelial hyperplasia in 11, fibroadenomatous hyperplasia in five, fat necrosis in one, and adenosis in one.

4.1. Observer Agreement

Agreement between the faculty members was fair-to-good for all criteria; however, between residents, agreement was poor-to-moderate. The inter-observer variability for each subgroup (faculty, senior resident, junior resident) is presented in Table 1.

For the two faculty members, inter-observer agreement was moderate for tissue composition, shape, posterior features and calcifications (κ = 0.47, 0.49, 0.53 and 0.48, respectively; 95% CIs: 0.35 - 0.59, 0.36 - 0.61, 0.42 - 0.65 and 0.36 - 0.60), good for orientation (κ = 0.66; 95% CI: 0.59 - 0.75), and fair for margin, echo pattern, and final assessment (κ = 0.32, 0.36 and 0.40, respectively; 95% CIs: 0.15 - 0.47, 0.20 - 0.51 and 0.25 - 0.52).

For the two senior residents, inter-observer agreement was fair for tissue composition, shape, posterior features and calcifications (κ = 0.36, 0.33, 0.40 and 0.39, respectively; 95% CIs: 0.17 - 0.50, 0.20 - 0.47, 0.25 - 0.51 and 0.23 - 0.55), moderate for orientation (κ = 0.59; 95% CI: 0.45 - 0.73), and poor for margin, echo pattern and final assessment (κ = 0.11, 0.15 and 0.19, respectively; 95% CIs: 0.02 - 0.21, 0.04 - 0.25 and 0.07 - 0.30).

For the two junior residents, inter-observer agreement was fair for shape, orientation and calcifications (κ = 0.22, 0.30 and 0.23, respectively; 95% CIs: 0.12 - 0.33, 0.20 - 0.41 and 0.13 - 0.33). For the remaining criteria (tissue composition, margin, echo pattern, posterior features and final assessment), poor agreement was observed (κ = 0.15, 0.18, 0.12, 0.20 and 0.14, respectively; Table 1). The four reported 95% CIs (0.04 - 0.25, 0.07 - 0.28, 0.02 - 0.22 and 0.03 - 0.24) appear to correspond to tissue composition, margin, echo pattern and final assessment, respectively; no interval is given for posterior features.

Table 1. Inter-observer agreement (κ) for each subgroup at the primary and secondary assessments

| Criterion | Faculty Members ᵃ, Primary Assessment | Faculty Members ᵃ, Secondary Assessment | Senior Residents ᵃ, Primary Assessment | Senior Residents ᵃ, Secondary Assessment | Junior Residents ᵃ, Primary Assessment | Junior Residents ᵃ, Secondary Assessment |
|---|---|---|---|---|---|---|
| Tissue composition | 0.47 | 0.51 | 0.36 | 0.42 ᵇ | 0.15 | 0.30 ᵇ |
| Shape | 0.49 | 0.48 | 0.33 | 0.40 ᵇ | 0.22 | 0.35 |
| Orientation | 0.66 | 0.66 | 0.59 | 0.60 | 0.30 | 0.40 |
| Margin | 0.32 | 0.37 | 0.11 | 0.21 ᵇ | 0.18 | 0.17 |
| Echo pattern | 0.36 | 0.41 ᵇ | 0.15 | 0.29 ᵇ | 0.12 | 0.19 |
| Posterior features | 0.53 | 0.54 | 0.40 | 0.49 ᵇ | 0.20 | 0.38 ᵇ |
| Calcifications | 0.48 | 0.51 | 0.39 | 0.45 | 0.23 | 0.35 |
| Final assessment | 0.40 | 0.47 ᵇ | 0.19 | 0.23 ᵇ | 0.14 | 0.16 |

ᵃ n = 2.

ᵇ The degree of agreement was higher at the second assessment, which was performed following the education session.

The observers then received an education session on the US criteria and performed a second assessment. With regard to the changes seen in each group, the agreement was one level higher in the faculty radiologists and junior residents for two criteria, and in the senior residents for seven criteria, after the education session. In the faculty radiologists, the degree of agreement was moderate or higher for all criteria except margin. In the senior residents, the agreement was one level higher for all of the criteria except orientation, following the training. However, in the junior residents, there was no increase in the degree of agreement for any of the criteria except tissue composition and posterior features, and the degree of agreement was fair or poor for all criteria. At the second assessment, the respective 95% CIs for the faculty radiologists, senior residents, and junior residents were 0.39 - 0.62, 0.25 - 0.59 and 0.13 - 0.47 for tissue composition; 0.35 - 0.60, 0.25 - 0.55 and 0.17 - 0.53 for shape; 0.54 - 0.77, 0.46 - 0.70 and 0.25 - 0.55 for orientation; 0.21 - 0.52, 0.06 - 0.37 and 0.05 - 0.30 for margin; 0.27 - 0.55, 0.06 - 0.52 and 0.05 - 0.32 for echo pattern; 0.44 - 0.64, 0.40 - 0.60 and 0.33 - 0.51 for posterior features; 0.39 - 0.62, 0.32 - 0.55 and 0.25 - 0.45 for calcifications; and 0.35 - 0.59, 0.13 - 0.34 and 0.04 - 0.29 for final assessment.

The intra-class correlation coefficients for final assessment among the faculty radiologists, senior residents, and junior residents were 0.55 (95% CI, 0.44 - 0.66) at the primary assessment, and 0.57 (95% CI, 0.42 - 0.69) at the secondary assessment. The agreement was considered to be poor.

4.2. Diagnostic Performance

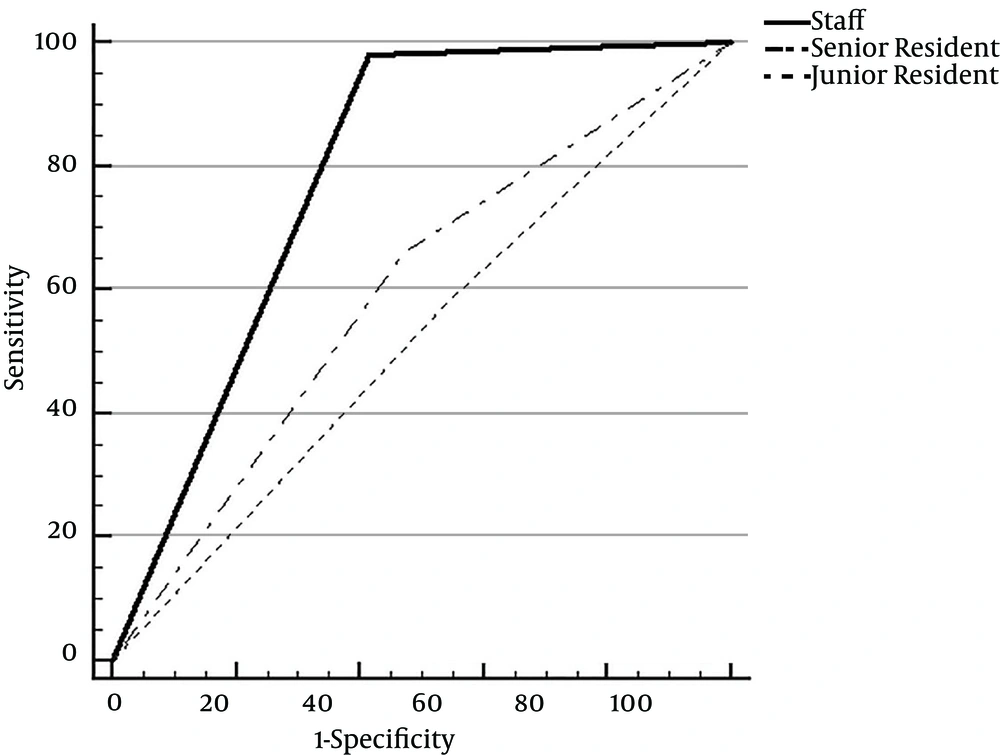

The sensitivity and specificity obtained for the faculty members, senior residents, and junior residents are shown in Table 2. In the ROC curve analysis, the respective AUC values of the faculty radiologists, senior residents, and junior residents were 0.78 (95% CI, 0.70 - 0.85), 0.59 (95% CI, 0.50 - 0.68), and 0.52 (95% CI, 0.43 - 0.61) (Figure 2). Diagnostic accuracy was significantly higher for the faculty radiologists than for the senior and junior residents (P = 0.0001 and P < 0.0001, respectively). However, there was no significant difference in diagnostic performance between the senior and junior residents.

Table 2. Sensitivity and specificity of the faculty members, senior residents, and junior residents

| | Faculty Member | Senior Resident | Junior Resident |
|---|---|---|---|
| Sensitivity, % | 98.0 | 66.0 | 58.0 |
| Specificity, % | 58.6 | 52.9 | 45.7 |

Figure 2. Receiver operating characteristic curves for faculty members, senior residents and junior residents. The area under the curve was 0.78 for faculty members, 0.59 for senior residents, and 0.52 for junior residents. There was a significant difference between the areas under the receiver operating characteristic curves of the faculty members and the residents.
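To make the sensitivity and specificity values in Table 2 easier to compare, the sketch below derives two standard summary measures from them: Youden's index (sensitivity + specificity - 1) and the positive and negative likelihood ratios. These derived quantities are not reported in the study; they are simple arithmetic on the published percentages, and the dictionary and function names are hypothetical.

```python
# Sensitivity and specificity (%) as reported in Table 2.
TABLE_2 = {
    "faculty member": (98.0, 58.6),
    "senior resident": (66.0, 52.9),
    "junior resident": (58.0, 45.7),
}


def summarize(sensitivity_pct, specificity_pct):
    """Derive Youden's index and likelihood ratios from percentages."""
    sens = sensitivity_pct / 100.0
    spec = specificity_pct / 100.0
    return {
        # 0 means no better than chance; 1 means perfect discrimination.
        "youden_j": sens + spec - 1.0,
        # How much a positive reading raises the odds of malignancy.
        "lr_positive": sens / (1.0 - spec),
        # How much a negative reading lowers the odds of malignancy.
        "lr_negative": (1.0 - sens) / spec,
    }


summary = {group: summarize(*values) for group, values in TABLE_2.items()}
for group, stats in summary.items():
    print(f"{group}: J = {stats['youden_j']:.3f}, "
          f"LR+ = {stats['lr_positive']:.2f}, LR- = {stats['lr_negative']:.2f}")
```

Under this reading, a Youden's index close to zero for the junior residents is consistent with their AUC of 0.52, which is close to the chance level of 0.5.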

5. Discussion

In our study, agreement between the faculty radiologists was fair-to-good for all criteria; however, between residents, agreement was poor-to-moderate. Therefore, investigative reporting of breast US by residents is inadvisable. At the academic medical centres under study, US examinations of the breast, as well as of the abdominal organs, are performed by radiology residents and experienced faculty members. The residents operate the US equipment themselves and then provide a preliminary interpretation of the images that they have produced. The final report is then confirmed by the attending faculty member. The variability in agreement regarding image assessment between radiology residents and skilled faculty members has been previously studied, and these reports (19, 26-28) showed that the degree of agreement between radiology residents and faculty members was greater than 90% in the assessment of head computed tomography (CT) and pulmonary CT angiography. However, to date, the discrepancies between faculty radiologists and residents in the assessment of breast US images have not been fully established; this was the motivation for our research. We found that, overall, the agreement between the two faculty radiologists was greater than that between the residents. Diagnostic performance was also significantly higher for the faculty radiologists, yet there was no significant difference between junior and senior residents.

Previous reports have demonstrated that certain breast US features are reliable for the differentiation of benign and malignant breast lesions (29-33). However, reader discrepancy in mass assessment by US is responsible for differences in lesion detection and for variation in lesion description and subsequent management. Several studies have reported inter-observer variability in the assessment of breast masses with the use of the fourth edition of the BI-RADS US lexicon (7, 11). The fifth edition of BI-RADS for US was published in 2013 (13). However, to the best of our knowledge, observer variability using the new fifth edition (2013) of the BI-RADS lexicon for US has not been widely studied.

Our results using the BI-RADS fifth edition are similar to those of previous studies using the fourth edition. In the new BI-RADS lexicon for US, the boundary term was removed, yet in our data, this seemed to have little effect on the determination of the final category. The agreement for the final category between the faculty radiologists was fair-to-moderate in our study. This result was similar to that in studies by Elverici et al. (7), Lee et al. (10), and Berg et al. (8), using the BI-RADS fourth edition. According to the study by Elverici et al. (7), the observer agreement between two experienced radiologists was good for orientation, moderate for shape, echo pattern and posterior features, and fair for margin and final category. The data for our faculty members were similar to those of Elverici et al. (7), yet our results for residents were poor. Also, as in other studies (34), the agreement of all three subgroups in the current study was lowest for margin and highest for orientation. We assume the reason is that the terminology for characterizing the mass margin comprises multiple, partly overlapping terms. Our study showed that the disagreement among readers when labelling mass margin and echo pattern was greater with a heterogeneous background echotexture than with a homogeneous one. This is probably because the observers were uncertain and confused when trying to detect and classify abnormalities in heterogeneous tissue composition with posterior shadowing. This confusion is not minor, because the designation of a circumscribed margin can encourage observers to decide that the lesion is benign and thus produce a false negative. In contrast, the designation of a noncircumscribed margin may contribute to a false-positive lesion and an unnecessary biopsy.

Interestingly, following the education session, the agreement was one level higher for all of the criteria except orientation in the senior residents; however, there was no improvement in the degree of agreement for six of the criteria in the junior residents. The agreement for the final category was one level higher in the senior residents after the education session, yet the degree of agreement was still only fair. These data imply that a single education session was not adequate to improve the agreement level and performance of the residents. Therefore, we suggest that attending radiologists review and confirm the preliminary interpretations more carefully. The successful training of residents would appear to require clinical experience through the practice of breast US, continuous consensus reading, and steady feedback and correlation between US findings and the pathology results.

Our study had several limitations. First, this study included only benign masses that had undergone biopsy. Thus, typical 'probably benign' or 'benign' lesions on US were not included in our study; this exclusion may have led to lower specificity. In clinical practice, the specificity may be higher. Second, the readers did not operate the US equipment themselves, and were instead provided with at least two static US images. Because performance discrepancy is very difficult to measure directly, we divided the faculty members, senior residents and junior residents into three subgroups, and then analyzed inter-observer agreement and ROC curves for each subgroup. Finally, there was selection bias, because only images specifically chosen by an investigator were evaluated. Our results are also limited by our small sample size. Additional studies with a larger series of patients are required.

Our study showed that reader agreement for the sonographic BI-RADS lexicon was higher among faculty radiologists than among residents. In addition, diagnostic accuracy was significantly higher for the faculty members than for the senior and junior residents. Therefore, we recommend continued professional training of residents to improve the degree of agreement and performance in breast US.