1. Background

Bronchoscopy is a minimally invasive procedure for examining the interior of the airway tree, used in lung cancer diagnosis and staging. Before bronchoscopy, pulmonary nodules are detected on computed tomography (CT) scans of the patient. A bronchoscope is then maneuvered through the airways to a region near the nodule, and transbronchial biopsy (TBB) is performed to sample the nodule.

This procedure poses several difficulties for physicians. The physician must mentally relate CT slices to bronchoscopic video images and has no guidance for maneuvering the bronchoscope through the correct branches to reach the target.

Image-guided bronchoscopy systems have been developed over the last decades to help physicians navigate the bronchoscope to the target quickly and precisely. These systems track the bronchoscope tip and display its position on the CT-derived airway tree.

Bronchoscope tracking methods are categorized into image-based (1-5), electromagnetic tracker (EMT)-based (6-9), and hybrid methods (10, 11).

Image-based methods usually use CT-derived virtual bronchoscopy (VB). By comparing the similarity between real bronchoscopy video frames and VB images rendered at different virtual camera positions, they estimate the position of the bronchoscope tip in the CT coordinate system. Although these methods can localize the tip precisely, they cannot track the bronchoscope in real time: for every incoming video frame, VB images must be generated at many candidate positions and the similarity of each to the frame must be measured, which is time-consuming.
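The cost problem can be made concrete with a minimal sketch of the search that image-based methods perform per frame. The `render` and `similarity` callables are hypothetical placeholders (for example, a VB renderer and an image similarity measure); the point is that the work per frame is one render plus one similarity evaluation for every candidate pose.

```python
def best_matching_pose(frame, candidates, render, similarity):
    """Exhaustive search over candidate virtual camera poses.

    render(pose) -> virtual image and similarity(frame, image) -> float are
    hypothetical callables standing in for a VB renderer and a similarity measure.
    Returns (best_pose, best_score, number_of_renders).
    """
    best_pose, best_score, n_renders = None, float("-inf"), 0
    for pose in candidates:
        score = similarity(frame, render(pose))  # one render + one comparison per candidate
        n_renders += 1
        if score > best_score:
            best_pose, best_score = pose, score
    return best_pose, best_score, n_renders
```

Restricting `candidates` to a small neighborhood of a good initial estimate reduces `n_renders` proportionally, which is the motivation for seeding the search with EMT data.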

EMT systems have an electromagnetic field generator that induces voltages in tiny coils embedded in a sensor. They can report the sensor's position at a high frame rate; however, they suffer from intrinsic errors and from errors caused by ferromagnetic materials in the environment.

EMT-based methods use landmarks or the airway centerline to find a rigid transformation between the EMT and CT coordinate systems. Acquiring landmarks or the centerline increases the overall duration of the bronchoscopy procedure, which may be harmful to patients. Furthermore, because of intrinsic EMT errors and respiratory motion, these methods cannot track the bronchoscope accurately.
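Landmark-based EMT-CT registration typically reduces to a paired-point rigid fit. The sketch below uses the standard SVD solution (Kabsch/Horn) and is an illustration of that general technique, not the specific procedure of any cited method; the function names are hypothetical. It assumes each landmark has been measured both with the EM sensor and in the CT volume.

```python
import numpy as np

def rigid_register(src, dst):
    """Least-squares rigid fit dst ~ R @ src + t for paired 3D points (Kabsch/Horn SVD).

    src: (N, 3) landmark positions in EMT coordinates (N >= 3, not collinear).
    dst: (N, 3) the same landmarks in CT coordinates.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

def fiducial_registration_error(src, dst, R, t):
    """Root-mean-square landmark residual after applying (R, t) to src."""
    residual = (np.asarray(src, dtype=float) @ R.T + t) - np.asarray(dst, dtype=float)
    return float(np.sqrt((residual ** 2).sum(axis=1).mean()))
```

Because the fit is rigid and computed once, any later displacement of the landmarks (for example, by respiration) is not compensated, which is the weakness noted above.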

In 2000, Solomon et al. proposed and compared two EMT-based image registration methods for CT-guided bronchoscopy. These methods used skin markers or the inner surface of the trachea for registration between EMT and CT space. The overall percentage of successfully registered frames was 57% (12).

Hybrid methods attempt to overcome the problems of the individual approaches by combining them, aiming for the speed of EMT-based methods and the accuracy of image-based methods. These methods require landmark-based or centerline-based registration before the main bronchoscopy, which can be time-consuming and hence unfavorable. Once a rigid transformation between the CT and EMT coordinate systems is obtained, the VB image that best matches the real bronchoscopic video frame is searched for within a smaller search space.

In 2005, Mori et al. proposed a hybrid method for bronchoscope tracking. They used the EMT sensor position as the starting point for intensity-based registration between real and virtual bronchoscopy images. The method was tested on a bronchial phantom with simulated respiratory motion, and 64.5% of all frames were successfully registered (13).

In 2010, Luo et al. proposed a new scheme for hybrid bronchoscope tracking and evaluated it on a dynamic motion phantom. Hybrid methods fail when the starting point provided by the EMT is too far from the actual pose. To overcome this problem, they applied a threshold to the Euclidean distance between the current EM sensor position and the position obtained in CT space. In that study, the percentage of successfully registered frames was 75% (14). In another 2010 study, the same authors modified this method by using a sequential Monte Carlo sampler to find an optimal starting point for intensity-based registration, and the percentage of successfully registered frames rose to 92% (11).

In 2013, Holmes et al. proposed an image-based system for technician-free bronchoscopy guidance (15). In 2015, they extended this work into a hands-free system (16).

Since our method uses real bronchoscopy images and EMT data simultaneously, it can be regarded as a hybrid method, one designed to overcome the problems of other hybrid techniques. The proposed method matches bronchoscopy image contours with the projected CT contours at different candidate poses to find the true pose. Synchronous EMT data reduce the number of wrong candidate poses that must be evaluated, so the true pose is found faster.

2. Objectives

We aimed to develop a continuous guidance method for bronchoscopy that achieves high tracking accuracy by matching bronchoscopy image contours with CT contours, to speed it up using synchronous EMT data, and to evaluate it on an airway phantom with simulated respiratory motion.

3. Materials and Methods

Real-time bronchoscope tracking aims to find the pose of the real camera in CT space as each frame arrives.

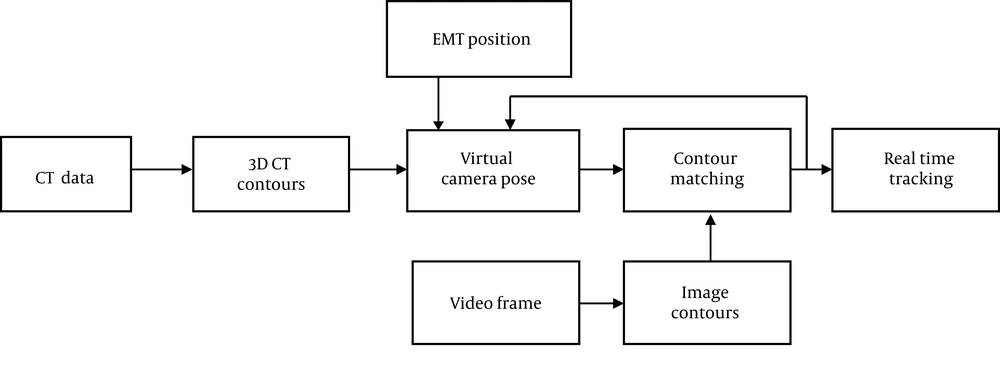

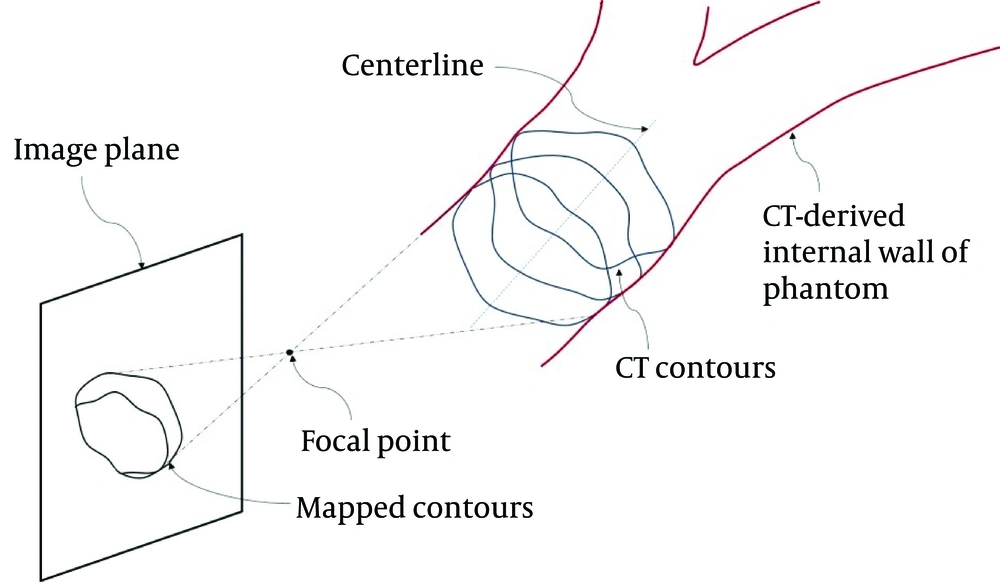

As illustrated in Figure 1, the CT data are modeled as contours in each branch. A virtual camera pose is then set using the change in EMT pose relative to the last frame. The CT contours visible to this camera are mapped onto the virtual camera's image plane by perspective projection. If the contours detected in the current frame match the mapped CT contours, registration is complete and the system waits for the next frame. Otherwise, a new virtual camera pose is set, starting from the last camera pose tried for the current frame, and the EMT pose is again compared with that of the last frame.
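One way to read the "differential" use of EMT data is to propagate the last registered camera pose by the sensor's relative motion between consecutive frames, so no EMT-to-CT registration is needed. The sketch below is an assumption-laden illustration, not the authors' implementation: poses are 4x4 homogeneous matrices, the sensor frame is assumed to coincide with the camera frame (the sensor tip sits at the bronchoscope tip), and `is_match` and `perturbations` are hypothetical stand-ins for projection plus contour matching and for the fallback pose search.

```python
import numpy as np

def predict_camera_pose(prev_cam_ct, prev_emt, cur_emt):
    """Apply the EMT sensor's frame-to-frame motion to the last registered camera pose.

    prev_cam_ct: 4x4 camera pose in CT space at the previous frame.
    prev_emt, cur_emt: 4x4 sensor poses in EMT space at the previous and current frame.
    Assumes (hypothetically) that the sensor frame and camera frame coincide.
    """
    delta = np.linalg.inv(prev_emt) @ cur_emt  # relative motion expressed in the sensor frame
    return prev_cam_ct @ delta

def track_frame(prev_cam_ct, prev_emt, cur_emt, is_match, perturbations):
    """Return the first pose whose projected CT contours match the frame, or None.

    is_match(pose) -> bool: hypothetical projection + contour-matching test.
    perturbations: hypothetical list of small 4x4 offsets tried around the prediction.
    """
    predicted = predict_camera_pose(prev_cam_ct, prev_emt, cur_emt)
    if is_match(predicted):
        return predicted
    for offset in perturbations:
        candidate = predicted @ offset
        if is_match(candidate):
            return candidate
    return None  # tracking lost for this frame
```

Because only relative EMT motion is used, a constant offset between the EMT and CT frames cancels out, but errors in consecutive deltas can accumulate if the image check never corrects them.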

3.1. Data Acquisition

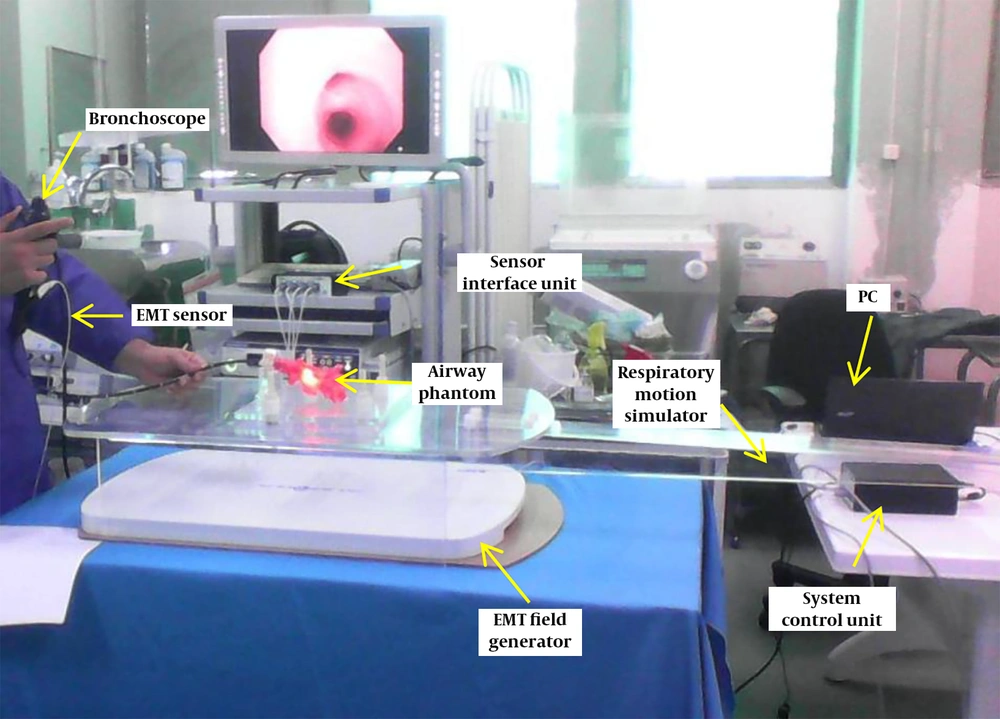

Our data acquisition setup consisted of an Olympus bronchoscopy system, an NDI Aurora EMT system, an airway phantom with simulated respiratory motion, and a personal computer (PC). A Blackmagic Design capture card was used to transfer video frames from the bronchoscopy system to the PC (Figure 2).

The NDI Aurora EMT system has four components: electromagnetic (EM) sensors; an EMT field generator; a system control unit (SCU), which communicates with the PC, receiving commands and sending sensor information; and a sensor interface unit (SIU), which connects the sensor(s) to the SCU. The intrinsic error of the sensor we used was less than 0.6 mm. The EM sensor was attached to the bronchoscope via the biopsy channel. The Olympus bronchoscopy system captures video frames at a resolution of 576 × 720 pixels.

Software called SyVER (synchronized video-EMT recorder) was developed to precisely synchronize EMT frames with live video frames from the capture card. The frame rate was set to 15 Hz. Each video frame was paired with its corresponding EMT frame (a 6 degree-of-freedom [DOF] pose).
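A common way to pair two streams like these is nearest-timestamp matching. The sketch below only illustrates that idea; it is not SyVER's algorithm, and the `max_gap` tolerance (half a frame period at 15 Hz) is a hypothetical choice. Timestamps are assumed to be in seconds on a shared clock, with the EMT list sorted.

```python
from bisect import bisect_left

def pair_frames(video_ts, emt_ts, max_gap=1 / 30):
    """For each video timestamp, return the index of the nearest EMT sample.

    emt_ts must be sorted ascending. An entry is None when the nearest EMT
    sample is farther than max_gap seconds (hypothetical tolerance).
    """
    if not emt_ts:
        return [None] * len(video_ts)
    pairs = []
    for t in video_ts:
        i = bisect_left(emt_ts, t)
        # The nearest sample is either just before or just after the insertion point.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(emt_ts)]
        best = min(candidates, key=lambda j: abs(emt_ts[j] - t))
        pairs.append(best if abs(emt_ts[best] - t) <= max_gap else None)
    return pairs
```

For example, with video frames at 0, 1/15 and 2/15 s and EMT samples at 0.001, 0.02, 0.065, 0.13 and 0.5 s, the frames pair with EMT indices 0, 2 and 3.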

A stepper motor was used to apply motion to the phantom, and a 2 mm 6 DOF EM sensor was fixed in the biopsy channel of the bronchoscope so that the sensor tip was aligned with the bronchoscope tip.

In each trial, the bronchoscopist tried to maneuver the bronchoscope through all branches of the airway phantom. Twenty trials were performed by four experts under four different respiratory motions. Table 1 summarizes the trials.

| Maximal motion | Number of trials | Average trial time (s) | Number of frames |
|---|---|---|---|
| 6 mm | 5 | 127.9 ± 7.8 | 9591 |
| 12 mm | 5 | 134.6 ± 12.1 | 10095 |
| 18 mm | 5 | 130.4 ± 10.9 | 9781 |
| 24 mm | 5 | 131.9 ± 14.3 | 9894 |

Table 1. Trials performed for sweeping the airway tree with the bronchoscope

Phantom CT data were acquired with a 16-slice Siemens CT scanner (pitch 1, 440 mA, 0.5 mm slice thickness, 0.5 × 0.5 mm pixel size) before the video-EMT data acquisition during live bronchoscopy.

3.2. Contour Detection

Shape analysis methods are widely used in object recognition, matching, registration, and analysis. A contour is defined as a curve represented by a set of chained image points.

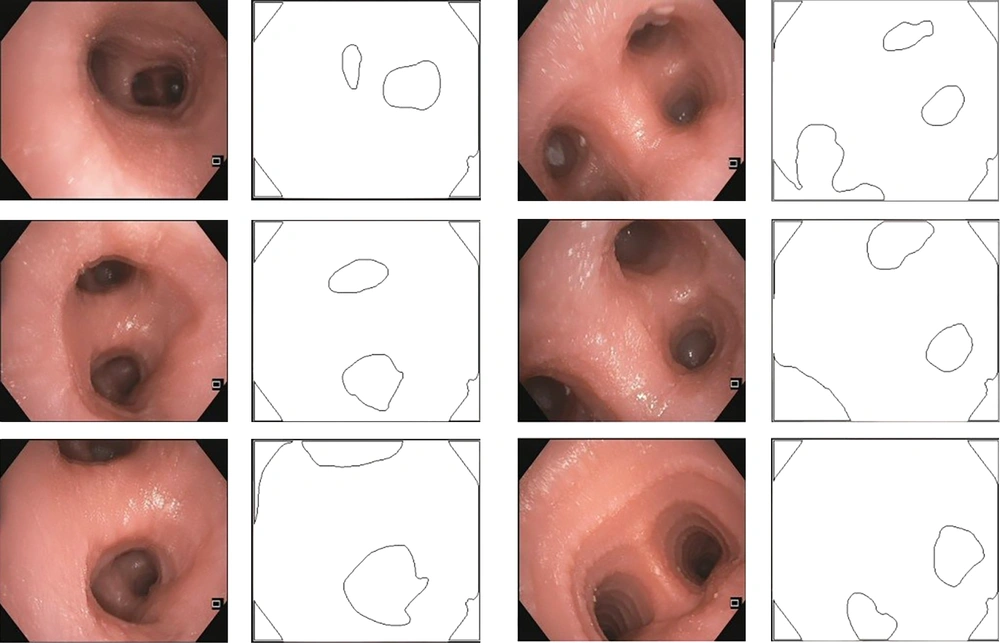

To process bronchoscopic video images, each image is first converted to grayscale, since the subsequent steps operate on grayscale images. A simple threshold is applied to roughly obtain the contours. Morphological opening and closing with a round 7-pixel structuring element are then applied to obtain smoother contours. Finally, the contour detection algorithm of Suzuki and Abe (1985) is applied (17), which analyzes the topological structure of binary images by border following. The algorithm relies on definitions of border points, surroundness among connected components, outer and hole borders, and surroundness among borders. It raster-scans the input binary image and interrupts the scan whenever it finds a pixel that is the starting point of either an outer border or a hole border; it then assigns a unique identifier (NBD) to the newly found border and follows it. After the entire border has been followed, the raster scan resumes until it reaches the lower-right corner of the image. The output is shown in Figure 3.
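The preprocessing chain above (grayscale, threshold, opening and closing with a round 7-pixel element) can be sketched in plain NumPy as below. Two things are assumptions: the threshold value is a hypothetical parameter (dark pixels are taken as airway lumen), and the final step is a simplified stand-in that marks border pixels rather than running full Suzuki-Abe border following; in practice OpenCV's `cv2.findContours`, which implements Suzuki-Abe, would produce the ordered contour chains.

```python
import numpy as np

def disk(radius):
    """Round structuring element of diameter 2 * radius + 1 pixels."""
    y, x = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    return x * x + y * y <= radius * radius

def _morph(mask, se, erode):
    """Binary erosion (erode=True) or dilation (erode=False) with a symmetric element."""
    r = se.shape[0] // 2
    h, w = mask.shape
    # Pad with True for erosion and False for dilation so the image edge does not bias the result.
    padded = np.pad(mask, r, constant_values=erode)
    out = np.full(mask.shape, erode, dtype=bool)
    for dy, dx in zip(*np.nonzero(se)):
        window = padded[dy:dy + h, dx:dx + w]
        out = (out & window) if erode else (out | window)
    return out

def opening(mask, se):
    return _morph(_morph(mask, se, erode=True), se, erode=False)

def closing(mask, se):
    return _morph(_morph(mask, se, erode=False), se, erode=True)

def detect_lumen_borders(rgb, threshold, radius=3):
    """Grayscale -> threshold -> open/close -> border pixels.

    rgb: (H, W, 3) uint8 frame. threshold: hypothetical cut-off below which pixels
    count as dark lumen. radius=3 gives a 7-pixel round element.
    Returns (cleaned_mask, border_mask).
    """
    gray = rgb[..., :3].astype(float) @ np.array([0.299, 0.587, 0.114])
    mask = gray < threshold
    se = disk(radius)
    mask = closing(opening(mask, se), se)  # opening removes specks, closing fills small gaps
    # Simplified contour: foreground pixels with at least one 4-connected background neighbour.
    p = np.pad(mask, 1, constant_values=False)
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    return mask, mask & ~interior
```

On a synthetic frame with a dark disk and a one-pixel speck, the opening removes the speck while the disk boundary survives as a ring of border pixels.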

3.3. CT Data Analysis

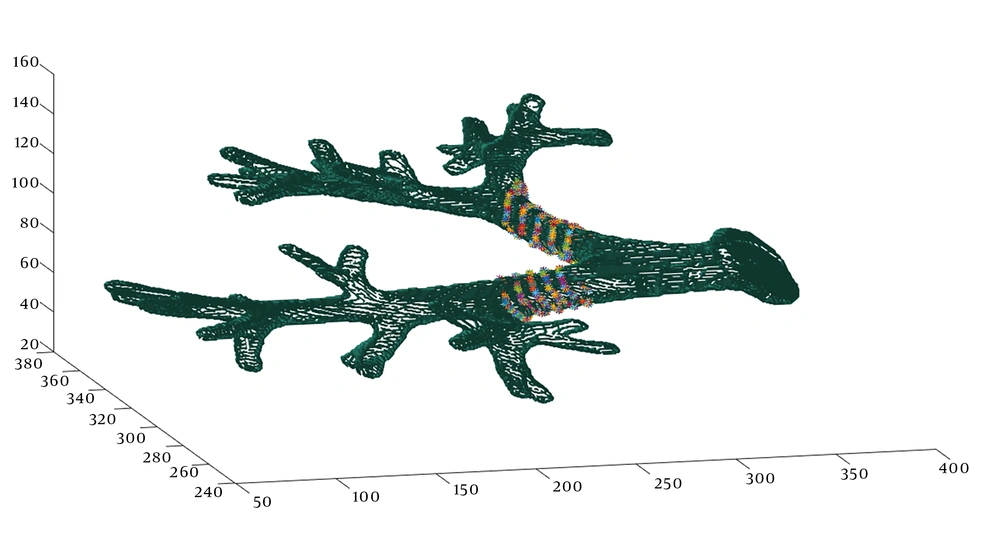

CT data analysis can be performed offline, before real-time tracking. The 3D structure of the airway tree phantom was segmented by thresholding the CT data. Since accurate extraction of the internal wall of the airway phantom is important, the wall was delineated manually slice by slice, and the whole internal wall was then reconstructed. The internal wall of the 3D airway tree was then modeled as contours. For each branch, two points were chosen as the starting point and endpoint of the branch. At each bifurcation, the endpoint of the parent branch was taken as the starting point of the daughter branches. The centerline of each branch was approximated by the straight line connecting its starting point to its endpoint and was divided into 1 mm segments. At each segment, a plane perpendicular to the centerline was considered, and the intersection of this plane with the internal wall was modeled as a CT contour. In total, the structure was modeled by 96 CT contours. Each CT contour stores its shape and its 6 DOF pose (Figure 4).
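The geometric part of this step (placing a plane every 1 mm along a straight centerline) can be sketched as follows. This is an illustration under assumptions: planes are placed at 0, 1, 2, ... mm from the starting point, and each plane is described by its center, its normal (the centerline direction) and two in-plane axes, which together give the contour's 6 DOF pose. Intersecting each plane with the wall surface is not shown; `branch_plane_frames` is a hypothetical name.

```python
import numpy as np

def branch_plane_frames(start, end, step=1.0):
    """Planes perpendicular to the straight centerline start -> end, every `step` mm.

    Returns a list of (center, normal, u, v), where (u, v, normal) is a
    right-handed orthonormal frame and normal is the centerline direction.
    """
    start = np.asarray(start, dtype=float)
    end = np.asarray(end, dtype=float)
    axis = end - start
    length = np.linalg.norm(axis)
    d = axis / length
    # Any vector not parallel to d can seed the in-plane axes.
    helper = np.array([1.0, 0.0, 0.0]) if abs(d[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    u = np.cross(d, helper)
    u /= np.linalg.norm(u)
    v = np.cross(d, u)
    n_planes = int(np.floor(length / step + 1e-9)) + 1  # small epsilon guards float round-off
    return [(start + k * step * d, d, u, v) for k in range(n_planes)]
```

A 10 mm branch therefore yields 11 planes (at 0 through 10 mm), and a 4.5 mm branch yields 5.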

3.4. Contour Based 2D/3D Registration

For real-time tracking, a virtual camera is placed at a 6 DOF pose and maps the CT contours visible to it onto its image plane by perspective projection (Figure 5). The intrinsic parameters of the virtual camera were set to those of the real camera, which were estimated by calibration with a checkerboard pattern. When each video frame arrives, we search for the 6 DOF pose of the virtual camera at which the mapped CT contours best match the contours of the live video frame.
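The perspective mapping of CT contour points onto the virtual image plane is a standard pinhole projection. The sketch below assumes the camera pose is given as a 4x4 camera-to-CT matrix and the intrinsics as a 3x3 matrix K; the numeric K in the example (focal length 500 px, principal point at the center of a 720 × 576 image) is hypothetical. Only points in front of the camera are flagged as valid; occlusion by the airway wall is not handled here.

```python
import numpy as np

def project_contour(points_ct, cam_to_ct, K):
    """Pinhole projection of 3D contour points (CT frame) into a virtual camera.

    points_ct: (N, 3) contour points in CT coordinates (mm).
    cam_to_ct: 4x4 pose of the virtual camera in CT space (camera -> CT).
    K: 3x3 intrinsic matrix, e.g. from checkerboard calibration.
    Returns (uv, in_front): (N, 2) pixel coordinates and a mask of points with positive depth.
    """
    ct_to_cam = np.linalg.inv(cam_to_ct)
    homo = np.column_stack([points_ct, np.ones(len(points_ct))])
    pc = (ct_to_cam @ homo.T).T[:, :3]  # points in camera coordinates
    in_front = pc[:, 2] > 0
    uvw = pc @ K.T
    with np.errstate(divide="ignore", invalid="ignore"):
        uv = uvw[:, :2] / uvw[:, 2:3]
    return uv, in_front
```

With the camera at the CT origin, a point 10 mm straight ahead lands on the principal point, and a point 1 mm to the side shifts by 500 × 1 / 10 = 50 pixels.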

Several contour-matching methods have been developed (18-20). In the approach followed here, after the contours are found, the epipolar geometry of the two images is recovered, the contours are matched, and false matches are identified. For false matches, the epipolar geometry is recomputed and the contours are rematched.

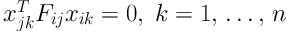

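To recover the epipolar geometry, we use Hartley's improved (normalized) 8-point algorithm to compute the fundamental matrix $F_{ij}$. The fundamental matrix $F_{ij}$ is a 3 × 3 matrix satisfying

$$\mathbf{m}_j^{\top} F_{ij}\, \mathbf{m}_i = 0$$

for any matched pair of points, where $\mathbf{m}_i$ and $\mathbf{m}_j$ are the homogeneous image coordinates of the pair in images i and j.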
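Hartley's normalized 8-point algorithm can be sketched as follows, using the epipolar constraint x_j^T F x_i = 0 for pixel coordinates x_i in image i and x_j in image j. This is a generic textbook version, not the authors' code; the function names are hypothetical. Each image's points are first translated and scaled (centroid at the origin, mean distance sqrt(2)), F is solved linearly by SVD, forced to rank 2, and then mapped back to pixel coordinates.

```python
import numpy as np

def _normalize(points):
    """Similarity transform moving the centroid to the origin and the mean distance to sqrt(2)."""
    centroid = points.mean(axis=0)
    mean_dist = np.linalg.norm(points - centroid, axis=1).mean()
    s = np.sqrt(2.0) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    homo = np.column_stack([points, np.ones(len(points))])
    return (T @ homo.T).T, T

def fundamental_matrix_8point(x_i, x_j):
    """Normalized 8-point estimate of F with x_j^T F x_i = 0.

    x_i, x_j: (N, 2) matched pixel coordinates in images i and j, N >= 8.
    """
    p_i, T_i = _normalize(np.asarray(x_i, dtype=float))
    p_j, T_j = _normalize(np.asarray(x_j, dtype=float))
    # One linear equation per correspondence in the 9 entries of F (row-major order).
    A = np.column_stack([
        p_j[:, 0] * p_i[:, 0], p_j[:, 0] * p_i[:, 1], p_j[:, 0],
        p_j[:, 1] * p_i[:, 0], p_j[:, 1] * p_i[:, 1], p_j[:, 1],
        p_i[:, 0], p_i[:, 1], np.ones(len(p_i)),
    ])
    _, _, Vt = np.linalg.svd(A)
    F = Vt[-1].reshape(3, 3)  # right singular vector of the smallest singular value
    # Enforce rank 2 by zeroing the smallest singular value.
    U, S, Vt = np.linalg.svd(F)
    F = U @ np.diag([S[0], S[1], 0.0]) @ Vt
    # Undo the normalization: x_j^T (T_j^T F T_i) x_i = 0.
    F = T_j.T @ F @ T_i
    return F / np.linalg.norm(F)
```

A convenient check is the distance of each x_j from its epipolar line F x_i, which should be near zero for correct matches; contours whose points lie far from their epipolar lines are candidates for false matches.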

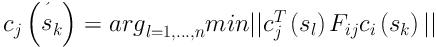

In order to find the corresponding point cj(śk), we computed

Afterward, for the contours which are not matched, the epipolar geometry is recomputed.

3.5. Validation

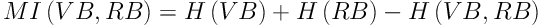

To validate tracking accuracy, a virtual bronchoscopy (VB) image was generated for each frame at the position reported by the proposed method. The similarity between the VB image and the real video frame was then measured using mutual information (MI) as follows (21):

$$MI(VB, RB) = H(VB) + H(RB) - H(VB, RB)$$

where H(A) is the entropy of image A, H(A, B) is the joint entropy of images A and B, and MI(VB, RB) is the mutual information between the VB image and the real bronchoscopy (RB) image. When the MI between the RB and VB images exceeds 0.73 times the entropy of the RB image, the two images are considered similar, and registration for that frame is deemed successful. This threshold was set by an expert through visual inspection.
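The success criterion can be computed from a joint grey-level histogram, using MI(VB, RB) = H(VB) + H(RB) - H(VB, RB). The sketch below assumes 8-bit grayscale images of equal size and a hypothetical choice of 32 histogram bins; entropies are in bits, but the 0.73 ratio does not depend on the logarithm base.

```python
import numpy as np

def _entropy(p):
    """Shannon entropy (bits) of a probability array, ignoring empty bins."""
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())

def mutual_information(vb, rb, bins=32):
    """Return (MI(VB, RB), H(RB)) estimated from a joint grey-level histogram."""
    joint, _, _ = np.histogram2d(vb.ravel(), rb.ravel(), bins=bins,
                                 range=[[0, 256], [0, 256]])
    p_joint = joint / joint.sum()
    h_vb = _entropy(p_joint.sum(axis=1))  # marginal of VB
    h_rb = _entropy(p_joint.sum(axis=0))  # marginal of RB
    h_joint = _entropy(p_joint.ravel())
    return h_vb + h_rb - h_joint, h_rb

def frame_registered(vb, rb, ratio=0.73, bins=32):
    """Success criterion: MI(VB, RB) > ratio * H(RB)."""
    mi, h_rb = mutual_information(vb, rb, bins)
    return mi > ratio * h_rb
```

Identical images give MI equal to H(RB) and therefore pass, whereas statistically independent images give MI close to zero and fail.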

4. Results

CT data analysis was performed in MATLAB before real-time data processing. Real-time data analysis was performed on 20 trials with different respiratory motions using Microsoft Visual Studio (C++). Each trial consisted of 1500-2100 frames, corresponding to 100-140 s at a frame rate of 15 Hz. Each frame consists of a video frame and its synchronized EMT pose.

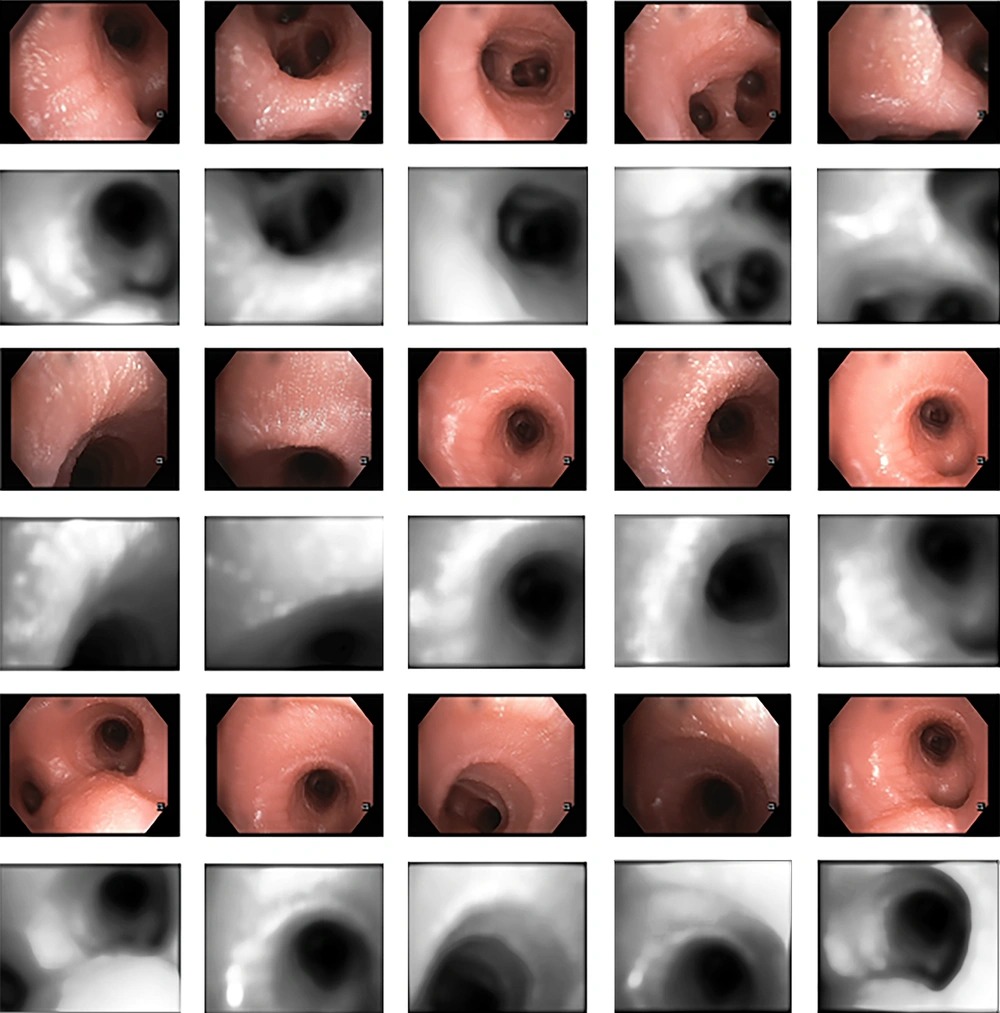

Figure 6 shows bronchoscopy frames and the CT-derived VB images generated at the positions reported by the proposed method. For each trial, accuracy was calculated as the ratio of successfully registered frames to the total number of frames in the trial. The overall accuracy was calculated as the ratio of all successfully registered frames to all frames. Per-trial accuracy was used to investigate the effect of respiratory motion on tracking accuracy. We compared our method with four other tracking schemes: 1) Solomon et al. (12), an EMT-based method; 2) Mori et al. (13), a hybrid method; 3) Luo et al. (14), a hybrid method; and 4) Luo et al. (11), a hybrid method. Table 2 compares the percentages of successfully registered frames.

| Maximal motion | Solomon et al. (12) | Mori et al. (13) | Luo et al. (14) | Luo et al. (11) | Our method |
|---|---|---|---|---|---|
| 6 mm | 850 (66.1) | 958 (74.6) | 1034 (80.5) | 1224 (95.3) | 9377 (97.8) |
| 12 mm | 783 (59) | 863 (65.1) | 1018 (76.8) | 1244 (93.8) | 9785 (96.9) |
| 18 mm | 894 (56.8) | 972 (61.8) | 1153 (73.3) | 1431 (91) | 9397 (96.1) |
| 24 mm | 716 (48.8) | 850 (57.9) | 1036 (70.6) | 1300 (88.6) | 9378 (94.8) |
| Total | 3243 (57.4) | 3643 (64.5) | 4241 (75) | 5199 (92) | 37919 (96.3) |

Table 2. Comparison of registration results: successfully registered frames, No. (%)
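The accuracy definition can be checked against the tables: dividing the "Our method" counts in Table 2 by the frame counts in Table 1 reproduces the reported percentages. The snippet below only re-does that arithmetic with numbers copied from the two tables; the overall figure uses the total of 37919 frames as reported in Table 2.

```python
# Frames per maximal motion (mm) from Table 1 and successfully registered
# frames for the proposed method from Table 2.
total_frames = {6: 9591, 12: 10095, 18: 9781, 24: 9894}
registered = {6: 9377, 12: 9785, 18: 9397, 24: 9378}

def accuracy_percent(success, total):
    """Percentage of successfully registered frames, rounded to one decimal."""
    return round(100.0 * success / total, 1)

per_motion = {mm: accuracy_percent(registered[mm], total_frames[mm]) for mm in total_frames}
overall = accuracy_percent(37919, sum(total_frames.values()))  # reported total from Table 2
print(per_motion)  # {6: 97.8, 12: 96.9, 18: 96.1, 24: 94.8}
print(overall)     # 96.3
```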

To investigate real-time capability, we ran our algorithm on each trial on a PC with a Core i5 (1.8 GHz) processor and 4 GB of RAM. The total time needed to run the program and obtain the results was 91% of the total live bronchoscopy time, that is, an average of about 0.91 × (1/15) s ≈ 61 ms per frame, within the 66.7 ms interval between frames at 15 Hz. The method can therefore be used for real-time tracking.

5. Discussion

The aim of this study was to develop a hybrid bronchoscope tracking method that enables real-time tracking with high registration performance under respiratory motion and, in particular, addresses the limited speed of image-based registration. We used contour-based registration together with synchronous EMT data. According to the results, CT-video registration can be achieved by contour-based registration with a high rate of successfully registered frames. The proposed method registers each frame in less than 1/15 s on average, so the bronchoscope can be tracked in real time at 15 frames/s. Furthermore, the percentage of successfully registered frames is higher than that of the compared methods.

Landmark-based registration, used in previous methods, is highly sensitive to respiratory motion. The proposed method is more robust to respiratory motion than the methods of other studies, as it uses EMT data in differential mode instead of landmark-based EMT-CT registration.

The initial pose of the virtual camera, from which image registration starts, may cause failure. Therefore, as in previous studies (11, 13, 14), tracking accuracy depends on the initialization of image registration. We have reduced this dependence by using synchronous EMT data in differential mode. However, because EMT data are used in differential mode, tracking may diverge when coughing or bubbling occurs in a real airway. Additionally, bubbles may produce unwanted contours and cause failure in real conditions.

In conclusion, this paper presented a real-time bronchoscope tracking method that uses EMT data in differential mode together with contour matching, and evaluated it on an airway tree phantom with simulated respiratory motion. The novelty of this work is a method that combines bronchoscopy images and EMT data in a new way. Using contours, rather than the full intensity information of bronchoscopy images, yields a faster registration procedure than intensity-based registration. With the differential approach to EMT data, no landmarks or centerline in the airway tree need to be acquired, a step that is difficult for the physician and considerably prolongs bronchoscopy. We strongly recommend contour matching combined with differential EMT data over intensity-based image registration and landmark-based EMT registration. In point-based registration, respiratory motion causes errors because the registered points move; since our method does not use point-based registration, it avoids this source of respiratory motion error.

In the future, we plan to perform an in vivo study to validate our method in real conditions and to evaluate how much coughing and bubbling affect tracking accuracy.