1. Background

Evidence synthesis and informed decision-making in health research depend on accurate reporting of interventional studies. Evidence shows that incomplete and defective reporting undermines study validity and the use of data in secondary research (1). Poor reporting of study findings makes it difficult or impossible to replicate the results, compare them with existing knowledge, generalize them to other populations, or use them in reviews and meta-analyses (2). The use of reporting guidelines has improved the precision, transparency, completeness, value, and quality of publications in health research (3). Reporting guidelines exist for many study designs. The most commonly used include the CONSORT statement (Consolidated Standards of Reporting Trials) (4), the TREND statement (Transparent Reporting of Evaluations with Nonrandomized Designs) (5), the PRISMA statement (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) (6), the STARD statement (Standards for Reporting Diagnostic Accuracy) (7), and the STROBE statement (Strengthening the Reporting of Observational Studies in Epidemiology) (8).

Randomized controlled trials (RCTs) are considered the gold standard of scientific evidence, and current clinical decision-making should be based mainly on their results (9). High-quality systematic reviews, which are composed mainly of RCTs, are major factors affecting policy making and clinical practice in health care.

Evidence shows that reports of RCTs are often of suboptimal quality. Incomplete and ambiguous reports distort readers' judgment of the reliability and validity of trial results and make it harder for researchers to extract information and conduct systematic reviews (10). In the early 1990s, two international groups of experts recognized the problems with the reporting of RCTs and each formulated a first set of RCT reporting guidelines. Shortly afterward, the work of these groups, the Asilomar Working Group on Recommendations for Reporting of Clinical Trials in the Biomedical Literature and the Canadian experts behind the Standardized Reporting of Trials statement, was merged under the leadership of the Journal of the American Medical Association to produce the first CONSORT statement in 1996. The statement, developed by the CONSORT Group, sets out the items that should be included in RCT publications to ensure appropriate and complete reporting. Revisions of the CONSORT statement were published in 2001 and 2010. The CONSORT 2010 statement has a 25-item checklist covering the title, abstract, introduction, methods, results, discussion, registration, access to the study protocol, and sources of research funding (11, 12).

Although RCTs are considered the best choice for examining causal relationships and the effectiveness of interventions, they are not always appropriate or feasible; studies with nonrandomized designs are frequently used instead (1). The TREND statement was published by Des Jarlais et al. (5) in 2004. It was designed to improve the reporting quality of nonrandomized trials in behavioral and public health research. The CONSORT (2001) guideline was the basis for designing and developing the TREND checklist. TREND focuses on empirical studies with nonrandomized designs and on reporting elements such as the intervention and comparison conditions, the research design, and the methods of adjusting for possible biases in evaluations. The TREND checklist includes 22 items (59 subitems) covering the title, abstract, introduction, methods, results, and discussion of an article (5). On both the CONSORT and TREND checklists, many of the items have two or more subitems, and response options are dichotomous (yes or no).

The adoption of reporting guidelines for health care research by professional journals is a publication trend that is likely to become more evident in the future. It helps authors to prepare their submissions and reviewers to assess the merits of manuscripts. Ultimately, the contribution to the body of knowledge in clinical specialty areas is enhanced when published studies meet more rigorous standards of review. Standardized reporting of health care studies facilitates the analysis of findings in systematic reviews and meta-analyses, which are vital to the development of evidence-based approaches to care (13). The editorial team of the Jundishapur Journal of Chronic Disease Care (JJCDC) decided to adopt publication guidelines for all types of research papers.

2. Objectives

The current study aimed to evaluate the reporting quality of experimental studies published in the Jundishapur Journal of Chronic Disease Care.

3. Methods

In the current cross-sectional study, all issues of the journal from the first (July 2012) to the latest (July 2018) were reviewed. Three investigators independently examined each article and determined whether the authors had reported the items in the relevant checklist. Each investigator's response to each checklist question was recorded as yes or no. The quality of RCT reporting was evaluated with the most recent version of the CONSORT statement (CONSORT 2010), which has 25 items (11). Studies with a nonrandomized design were evaluated with the 22-item TREND checklist. Descriptive statistics were calculated with Microsoft Excel 2010.
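The item-level tally described in this section can be illustrated with a short sketch. The study itself used Microsoft Excel 2010, so the Python below is only an assumed equivalent, and the function name `item_adherence` and the data layout (one yes/no list per checklist item) are hypothetical. The counts for item 1a (19 of 43 RCTs) are taken from Table 1; percentages here are rounded to one decimal place, so they may differ in the last digit from the published values.

```python
from typing import Dict, List, Tuple


def item_adherence(
    scores: Dict[str, List[bool]]
) -> Dict[str, Tuple[int, float, int, float]]:
    """Summarize yes/no checklist responses per item.

    Returns, for each item, a tuple of
    (reported count, reported %, unreported count, unreported %).
    """
    summary = {}
    for item, answers in scores.items():
        total = len(answers)
        if total == 0:
            raise ValueError(f"no articles scored for item {item!r}")
        reported = sum(answers)  # True counts as 1
        unreported = total - reported
        summary[item] = (
            reported,
            round(100 * reported / total, 1),
            unreported,
            round(100 * unreported / total, 1),
        )
    return summary


# Assumed layout: one boolean per article, True = item reported.
example_scores = {
    "1a": [True] * 19 + [False] * 24,  # counts from Table 1, item 1a
    "1b": [True] * 43,                 # counts from Table 1, item 1b
}
summary = item_adherence(example_scores)
for item, (rep, rep_pct, unrep, unrep_pct) in summary.items():
    print(f"{item}: reported {rep} ({rep_pct}%), unreported {unrep} ({unrep_pct}%)")
```

How the responses of the three investigators were combined into a single yes/no value per item is not stated in the text, so the sketch starts from one already agreed response per article.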

4. Results

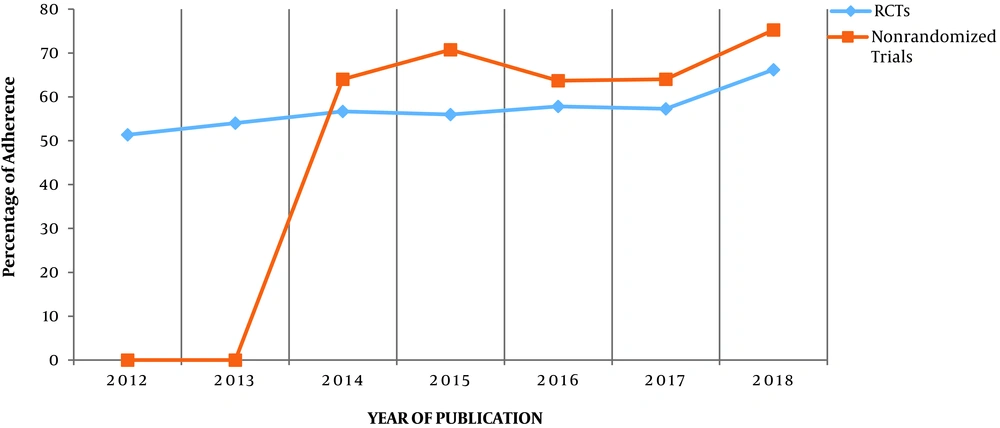

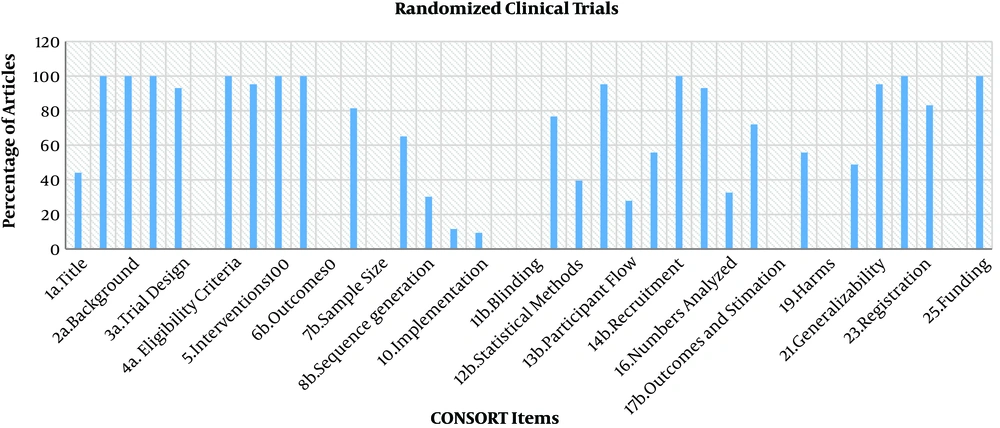

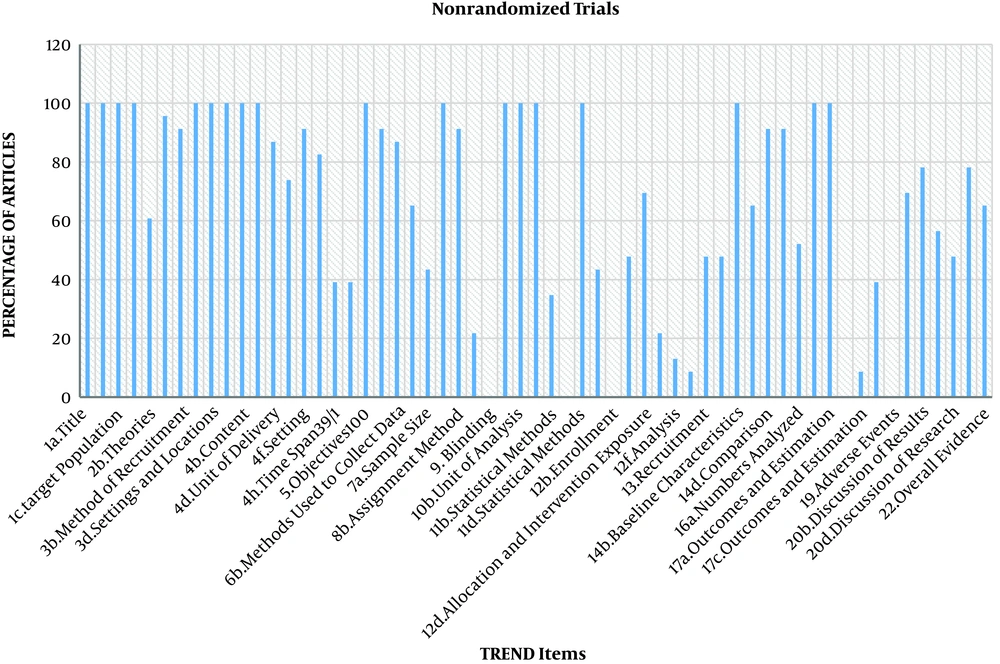

The study identified 43 RCTs and 23 nonrandomized trials. Adherence to both checklists has exceeded 50% since 2014, but no article met all criteria of the CONSORT or TREND statements. The results are shown in Tables 1 and 2 and Figures 1-3.
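As a rough illustration of how article-level adherence and its trend over publication years could be derived from yes/no responses, the following sketch computes the percentage of items reported per article, averages it by year, and lists any fully adherent articles. The text does not describe the exact calculation behind the yearly percentages, so averaging article-level percentages is an assumption, and the function names and toy data are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

Article = Tuple[int, List[bool]]  # (publication year, yes/no per checklist item)


def article_adherence(answers: List[bool]) -> float:
    """Percentage of checklist items that one article reported."""
    return 100 * sum(answers) / len(answers)


def adherence_by_year(articles: List[Article]) -> Dict[int, float]:
    """Mean article-level adherence (%) for each publication year."""
    by_year = defaultdict(list)
    for year, answers in articles:
        by_year[year].append(article_adherence(answers))
    return {year: round(mean(vals), 1) for year, vals in sorted(by_year.items())}


def fully_adherent(articles: List[Article]) -> List[int]:
    """Indices of articles that reported every checklist item."""
    return [i for i, (_, answers) in enumerate(articles) if all(answers)]


# Hypothetical toy data with four checklist items per article.
toy_articles = [
    (2013, [True, False, False, False]),
    (2013, [True, True, False, False]),
    (2014, [True, True, True, False]),
    (2015, [True, True, False, True]),
]
yearly = adherence_by_year(toy_articles)
complete = fully_adherent(toy_articles)
print(yearly)
print(complete)
```

With the toy data, the yearly means rise above 50% from 2014 and the list of fully adherent articles is empty, which mirrors the pattern reported here without reproducing the study's actual figures.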

| Section/Topic | Checklist Item No. | CONSORT Item | Reported, No. (%) | Unreported, No. (%) |
|---|---|---|---|---|
| Title and abstract | | | | |
| | 1a | Identification as a randomized trial in the title | 19 (44.1) | 24 (55.8) |
| | 1b | Structured summary of trial design, methods, results, and conclusions (for specific guidance see CONSORT for abstracts) | 43 (100) | 0 |
| Introduction | | | | |
| Background and objectives | 2a | Scientific background and explanation of rationale | 43 (100) | 0 |
| | 2b | Specific objectives or hypotheses | 43 (100) | 0 |
| Methods | | | | |
| Trial design | 3a | Description of trial design (such as parallel, factorial) including allocation ratio | 40 (93.1) | 3 (6.9) |
| | 3b | Important changes to methods after trial commencement (such as eligibility criteria), with reasons | 0 | 43 (100) |
| Participants | 4a | Eligibility criteria for participants | 43 (100) | 0 |
| | 4b | Settings and locations where the data were collected | 41 (95.3) | 2 (4.65) |
| Interventions | 5 | The interventions for each group with sufficient details to allow replication, including how and when they were actually administered | 43 (100) | 0 |
| Outcomes | 6a | Completely defined pre-specified primary and secondary outcome measures, including how and when they were assessed | 43 (100) | 0 |
| | 6b | Any changes to trial outcomes after the trial commenced, with reasons | 0 | 43 (100) |
| Sample size | 7a | How sample size was determined | 35 (81.39) | 8 (18.60) |
| | 7b | When applicable, explanation of any interim analyses and stopping guidelines | 0 | 43 (100) |
| Randomization | | | | |
| Sequence generation | 8a | Method used to generate the random allocation sequence | 28 (65.1) | 15 (34.8) |
| | 8b | Type of randomization; details of any restriction (such as blocking and block size) | 13 (30.2) | 30 (69.7) |
| Allocation concealment mechanism | 9 | Mechanism used to implement the random allocation sequence (such as sequentially numbered containers), describing any steps taken to conceal the sequence until interventions were assigned | 5 (11.6) | 38 (88.3) |
| Implementation | 10 | Who generated the random allocation sequence, who enrolled participants, and who assigned participants to interventions | 4 (9.3) | 39 (90.6) |
| Blinding | 11a | If done, who was blinded after assignment to interventions (for example, participants, care providers, those assessing outcomes) and how | 2 (4.65) | 41 (95.3) |
| | 11b | If relevant, description of the similarity of interventions | 0 | 43 (100) |
| Statistical methods | 12a | Statistical methods used to compare groups for primary and secondary outcomes | 33 (76.7) | 10 (23.2) |
| | 12b | Methods for additional analyses, such as subgroup analyses and adjusted analyses | 17 (39.5) | 26 (60.4) |
| Results | | | | |
| Participant flow (a diagram is strongly recommended) | 13a | For each group, the numbers of participants who were randomly assigned, received intended treatment, and were analyzed for the primary outcome | 41 (95.3) | 2 (4.65) |
| | 13b | For each group, losses and exclusions after randomization, together with reasons | 12 (27.9) | 31 (72) |
| Recruitment | 14a | Dates defining the periods of recruitment and follow-up | 24 (55.8) | 19 (44.1) |
| | 14b | Why the trial ended or was stopped | 0 | 43 (100) |
| Baseline data | 15 | A table showing baseline demographic and clinical characteristics for each group | 40 (93) | 3 (6.9) |
| Numbers analyzed | 16 | For each group, number of participants (denominator) included in each analysis and whether the analysis was by original assigned groups | 14 (32.5) | 29 (67.4) |
| Outcomes and estimation | 17a | For each primary and secondary outcome, results for each group, and the estimated effect size and its precision (such as 95% confidence interval) | 31 (72) | 12 (27.9) |
| | 17b | For binary outcomes, presentation of both absolute and relative effect sizes is recommended | 0 | 43 (100) |
| Ancillary analyses | 18 | Results of any other analyses performed, including subgroup analyses and adjusted analyses, distinguishing pre-specified from exploratory | 24 (55.8) | 19 (44.1) |
| Harms | 19 | All important harms or unintended effects in each group (for specific guidance see CONSORT for harms) | 0 | 43 (100) |
| Discussion | | | | |
| Limitations | 20 | Trial limitations, addressing sources of potential bias, imprecision, and, if relevant, multiplicity of analyses | 21 (48.8) | 22 (51.1) |
| Generalizability | 21 | Generalizability (external validity, applicability) of the trial findings | 41 (95.3) | 2 (4.65) |
| Interpretation | 22 | Interpretation consistent with results, balancing benefits and harms, and considering other relevant evidence | 43 (100) | 0 |
| Other information | | | | |
| Registration | 23 | Registration number and name of trial registry | 35 (81.4) | 8 (18.6) |
| Protocol | 24 | Where the full trial protocol can be accessed, if available | 0 | 43 (100) |
| Funding | 25 | Sources of funding and other support (such as supply of drugs), role of funders | 43 (100) | 0 |

| Section/Topic | Item No. | Descriptor | Reported, No. (%) | Unreported, No. (%) |
|---|---|---|---|---|
| Title and abstract | | | | |
| Title and abstract | 1 | | | |
| | | Information on how units were allocated to interventions | 23 (100) | 0 |
| | | Structured abstract recommended | 23 (100) | 0 |
| | | Information on target population or study sample | 23 (100) | 0 |
| Introduction | | | | |
| Background | 2 | | | |
| | | Scientific background and explanation of rationale | 23 (100) | 0 |
| | | Theories used in designing behavioural interventions | 14 (60.8) | 9 (39.1) |
| Methods | | | | |
| Participants | 3 | | | |
| | | Eligibility criteria for participants, including criteria at different levels in recruitment/sampling plan (e.g., cities, clinics, subjects) | 22 (95.6) | 1 (4.3) |
| | | Method of recruitment (e.g., referral, self-selection), including the sampling method if a systematic sampling plan was implemented | 21 (91.3) | 2 (8.6) |
| | | Recruitment setting | 23 (100) | 0 |
| | | Settings and locations where the data were collected | 23 (100) | 0 |
| Interventions | 4 | | | |
| | | Details of the interventions intended for each study condition and how and when they were actually administered, specifically including: | 23 (100) | 0 |
| | | Content: what was given? | 23 (100) | 0 |
| | | Delivery method: how was the content given? | 23 (100) | 0 |
| | | Unit of delivery: how were the subjects grouped during delivery? | 20 (86.9) | 3 (13) |
| | | Deliverer: who delivered the intervention? | 17 (73.9) | 6 (26) |
| | | Setting: where was the intervention delivered? | 21 (91.3) | 2 (8.6) |
| | | Exposure quantity and duration: how many sessions or episodes or events were intended to be delivered? How long were they intended to last? | 19 (82.6) | 4 (17.39) |
| | | Time span: how long was it intended to take to deliver the intervention to each unit? | 9 (39.1) | 14 (60.8) |
| | | Activities to increase compliance or adherence (e.g., incentives) | 9 (39.1) | 14 (60.8) |
| Objectives | 5 | Specific objectives and hypotheses | 23 (100) | 0 |
| Outcomes | 6 | Clearly defined primary and secondary outcome measures | 21 (91.3) | 2 (8.6) |
| | | Methods used to collect data and any methods used to enhance the quality of measurements | 20 (86.9) | 3 (13) |
| | | Information on validated instruments such as psychometric and biometric properties | 15 (65.2) | 8 (34.7) |
| Sample size | 7 | How sample size was determined and, when applicable, explanation of any interim analyses and stopping rules | 10 (43.4) | 13 (56.5) |
| Assignment method | 8 | | | |
| | | Unit of assignment (the unit being assigned to study condition, e.g., individual, group, community) | 23 (100) | 0 |
| | | Method used to assign units to study conditions, including details of any restriction (e.g., blocking, stratification, minimization) | 21 (91.3) | 2 (8.6) |
| | | Inclusion of aspects employed to help minimize potential bias induced due to non-randomization (e.g., matching) | 5 (21.7) | 18 (78.26) |
| Blinding (masking) | 9 | Whether or not participants, those administering the interventions, and those assessing the outcomes were blinded to study condition assignment; if so, statement regarding how the blinding was accomplished and how it was assessed | 0 | 23 (100) |
| Unit of analysis | 10 | | | |
| | | Description of the smallest unit that is being analyzed to assess intervention effects (e.g., individual, group, or community) | 23 (100) | 0 |
| | | If the unit of analysis differs from the unit of assignment, the analytical method used to account for this (e.g., adjusting the standard error estimates by the design effect or using multilevel analysis) | 23 (100) | 0 |
| Statistical methods | 11 | | | |
| | | Statistical methods used to compare study groups for primary outcome(s), including complex methods for correlated data | 23 (100) | 0 |
| | | Statistical methods used for additional analyses, such as subgroup analyses and adjusted analysis | 8 (34.7) | 15 (65.2) |
| | | Methods for imputing missing data, if used | 0 | 23 (100) |
| | | Statistical software or programs used | 23 (100) | 0 |
| Results | | | | |
| Participant flow | 12 | | | |
| | | Flow of participants through each stage of the study: enrollment, assignment, allocation and intervention exposure, follow-up, and analysis (a diagram is strongly recommended) | 10 (43.4) | 13 (56.5) |
| | | Enrollment: the numbers of participants screened for eligibility, found to be eligible or not eligible, declined to be enrolled, and enrolled in the study | 0 | 23 (100) |
| | | Assignment: the numbers of participants assigned to a study condition | 11 (47.8) | 12 (52.17) |
| | | Allocation and intervention exposure: the number of participants assigned to each study condition and the number of participants who received each intervention | 16 (69.5) | 7 (30.4) |
| | | Follow-up: the number of participants who completed the follow-up or did not complete the follow-up (i.e., lost to follow-up), by study condition | 5 (21.7) | 18 (78.26) |
| | | Analysis: the number of participants included in or excluded from the main analysis, by study condition | 3 (13) | 20 (86.9) |
| | | Description of protocol deviations from study as planned, along with reasons | 2 (8.6) | 21 (91.3) |
| Recruitment | 13 | Dates defining the periods of recruitment and follow-up | 11 (47.8) | 12 (52.17) |
| Baseline data | 14 | | | |
| | | Baseline demographic and clinical characteristics of participants in each study condition | 11 (47.8) | 12 (52.17) |
| | | Baseline characteristics for each study condition relevant to specific disease prevention research | 23 (100) | 0 |
| | | Baseline comparisons of those lost to follow-up and those retained, overall and by study condition | 15 (65.2) | 8 (34.7) |
| | | Comparison between study population at baseline and target population of interest | 21 (91.3) | 2 (8.6) |
| Baseline equivalence | 15 | Data on study group equivalence at baseline and statistical methods used to control for baseline differences | 21 (91.3) | 2 (8.6) |
| Numbers analyzed | 16 | | | |
| | | Number of participants (denominator) included in each analysis for each study condition, particularly when the denominators change for different outcomes; statement of the results in absolute numbers when feasible | 12 (52.1) | 11 (47.8) |
| | | Indication of whether the analysis strategy was intention to treat or, if not, description of how non-compliers were treated in the analyses | 23 (100) | 0 |
| Outcomes and estimation | 17 | | | |
| | | For each primary and secondary outcome, a summary of results for each study condition, and the estimated effect size and a confidence interval to indicate the precision | 23 (100) | 0 |
| | | Inclusion of null and negative findings | 0 | 23 (100) |
| | | Inclusion of results from testing pre-specified causal pathways through which the intervention was intended to operate, if any | 2 (8.6) | 21 (91.3) |
| Ancillary analyses | 18 | Summary of other analyses performed, including subgroup or restricted analyses, indicating which are pre-specified or exploratory | 9 (39.1) | 14 (60.8) |
| Adverse events | 19 | Summary of all important adverse events or unintended effects in each study condition (including summary measures, effect size estimates, and confidence intervals) | 0 | 23 (100) |
| Discussion | | | | |
| Interpretation | 20 | | | |
| | | Interpretation of the results, taking into account study hypotheses, sources of potential bias, imprecision of measures, multiplicity of analyses, and other limitations or weaknesses of the study | 16 (69.5) | 7 (30.4) |
| | | Discussion of results taking into account the mechanism by which the intervention was intended to work (causal pathways) or alternative mechanisms or explanations | 18 (78.2) | 5 (21.7) |
| | | Discussion of the success of and barriers to implementing the intervention, fidelity of implementation | 13 (56.5) | 10 (43.4) |
| | | Discussion of research, programmatic, or policy implications | 11 (47.8) | 12 (52.1) |
| Generalizability | 21 | Generalizability (external validity) of the trial findings, taking into account the study population, the characteristics of the intervention, length of follow-up, incentives, compliance rates, specific sites/settings involved in the study, and other contextual issues | 18 (78.2) | 5 (21.7) |
| Overall evidence | 22 | General interpretation of the results in the context of current evidence and current theory | 15 (65.2) | 8 (34.7) |

5. Discussion

Accurate reporting of experimental studies such as RCTs is an important dimension of good research and is essential for health providers and other researchers to appraise the findings (14). The current study evaluated the reporting quality of experimental studies published in JJCDC using the CONSORT and TREND statement checklists. According to the results, adherence to the CONSORT and TREND statements was acceptable from 2014 onward, but no article met all criteria. In other words, the quality of reporting improved over time but has not yet reached the optimum level. Similar results are reported in other studies, which found that authors do not fully follow the recommended reporting items (14-17).

Much of the low reporting quality may be due to authors' lack of knowledge about the standard checklists. Therefore, it is helpful to inform and train authors and reviewers in their use.

In addition, journal editors should endorse the guidelines and checklists appropriate to each study design, and authors should report their research findings according to the existing guidelines. Reporting according to guidelines and checklists has been observed to improve the quality of reporting (6, 18).

In this regard, links to these guidelines are available on the JJCDC home page for authors, reviewers, and readers. The CONSORT and TREND statements have also been adopted for the publication of randomized and nonrandomized studies in JJCDC.