1. Background

The objective structured clinical examination (OSCE) constitutes a crucial assessment modality within medical education. Developed by Harden et al. in the 1970s, the OSCE was designed to measure clinical competence in medical students, enabling the discrete assessment of individual clinical skills through a series of predetermined, timed stations (1). This examination format minimizes the influence of chance, ensures standardized conditions for all examinees, and facilitates precise scoring (2). McMaster University's School of Nursing pioneered the application of the OSCE for assessing primary care nursing skills in junior students in 1984 (3). Miller's pyramid provides a framework for clinical assessment, delineating suitable assessment methodologies for various learning stages. This model comprises four hierarchical levels, progressing from the foundational level of knowledge assessment to higher levels evaluating competence, performance, and actual practice. Within this framework, the OSCE is identified as a relevant tool for evaluating the demonstration of individual skills (4). The OSCE serves as a cornerstone of a comprehensive, multi-faceted approach to clinical assessment and has undergone significant evolution to accommodate diverse contexts (5).

Virtual OSCEs (VOSCEs) have become increasingly prevalent in recent years. Initially conceived as a novel approach to facilitate remote learner participation in these assessments (6, 7), VOSCEs emerged in the early 2000s under various names, including electronic OSCE (e-OSCE), tele-OSCE, and web-OSCE, and were designed specifically to measure competences relevant to telehealth practice (8). Despite generally positive reception from both professors and students, and demonstrable comparability with traditional OSCE outcomes, VOSCEs have not achieved widespread adoption as a standard assessment modality over the past two decades (7). This limited uptake may be attributed to concerns regarding students' perceived capacity to effectively demonstrate their abilities in a virtual environment, coupled with potential challenges faced by examiners in accurately evaluating student skills remotely (9).

The coronavirus disease 2019 (COVID-19) pandemic significantly accelerated the exploration of virtual platforms for designing and implementing VOSCEs (10, 11). At the pandemic's peak, numerous medical schools transitioned their final-year OSCEs to a virtual format (12). Evidence suggests that VOSCEs effectively facilitated both the learning and assessment of student clinical competence (13, 14). Virtual assessment modalities not only provide students with insights into their strengths and weaknesses in knowledge and clinical skills but also encourage them to capitalize on their strengths and address their deficiencies. Administering clinical skills assessments virtually can promote students' self-awareness of their educational needs prior to entering professional practice. An additional aim of virtual clinical competence examinations is to evaluate students' capabilities across skill-based, educational, and communication domains to ensure their preparedness for clinical practice roles and responsibilities (15, 16).

Research comparing VOSCEs and traditional OSCEs, however, remains limited (8). The field of nursing is fundamentally grounded in practical and clinical skills. Within this context, even a single error can have irreversible consequences, potentially resulting in irreparable harm (17). Consequently, it is of paramount importance to rigorously assess the competence and capabilities of nursing students prior to their transition into professional practice (18).

2. Objectives

Considering the increasing prevalence of technology in higher education teaching and assessment, and recognizing the importance of evaluating the readiness of senior nursing students for clinical practice, this study aimed to develop, implement, and assess the effectiveness of a VOSCE for senior nursing students.

3. Methods

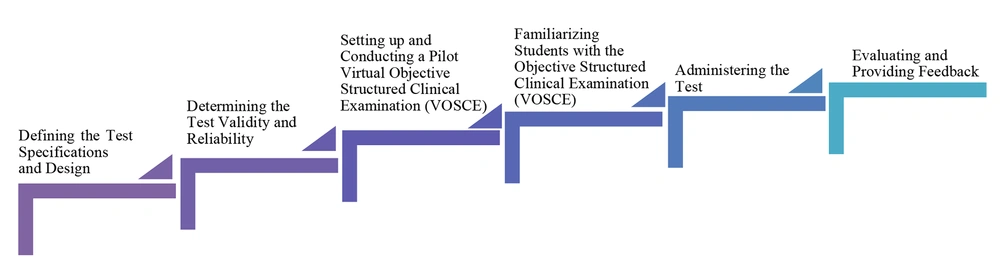

This quasi-experimental study was conducted on a sample of 176 senior nursing students between February 2022 and February 2024. Participants were enrolled by census sampling and assigned by simple randomization to one of two sequences: the virtual OSCE followed by the traditional OSCE, or the traditional OSCE followed by the virtual OSCE. The research process, encompassing design, implementation, and evaluation, was structured into six distinct phases.
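The allocation described above can be illustrated with a minimal Python sketch. It assumes that the two equal sequences of 88 students were obtained by shuffling the roster and splitting it in half; the student IDs, the seed, and the function name `assign_sequences` are hypothetical and are not taken from the study.

```python
import random


def assign_sequences(student_ids, seed=None):
    """Randomly split students into two crossover sequences of equal size."""
    rng = random.Random(seed)          # seeded generator so the split is reproducible
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "virtual_then_in_person": shuffled[:half],
        "in_person_then_virtual": shuffled[half:],
    }


# 176 hypothetical student identifiers (assumption, for illustration only)
students = [f"S{i:03d}" for i in range(1, 177)]
groups = assign_sequences(students, seed=2022)
print({name: len(members) for name, members in groups.items()})
```

Each student appears in exactly one sequence, and both sequences complete the same eight stations in both formats.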

3.1. Defining the Exam Specifications and Design

- Phase 1: A nine-member team designed the OSCE:

This team comprised representatives from the exam evaluation committee and the clinical skills committee, the faculty dean, the vice-chancellor of education, and nursing faculty members. In terms of academic qualifications, the team included four members holding a PhD in nursing education, one member with a degree in medical education, one member holding a Master's degree in anesthesia education, and three members holding a Master's degree in nursing. Over a three-year period, 15 sessions dedicated to the clinical competence examination were conducted, with three sessions held each semester. These sessions focused on defining key exam parameters, including the number of stations, topics covered, scoring rubric, evaluation methodologies, and assessment checklists. Following these preparatory sessions, a detailed exam specification table and scoring criteria were developed for each station, accompanied by the creation of corresponding scenarios. Initially drafted by the respective instructors, these scenarios underwent review by a designated team. Both checklists and scenarios were meticulously developed, aligning with the course's learning objectives and core curricular resources. Eight stations were deemed appropriate for exam administration, taking into account student learning needs and available resources. These stations encompassed the following areas: Nursing communication and ethics, physical examinations, paraclinical interpretation, basic skills, medication therapy, patient care management, cardiopulmonary resuscitation, and nursing reporting. For comparative analysis, both a traditional in-person OSCE and a VOSCE were designed, each comprising an equivalent number of stations with comparable scenarios.

- Phase 2: Determining the exam validity and reliability:

Ten faculty members reviewed and finalized the checklists and scenarios developed in the preceding phase to establish content validity. Reliability was assessed by calculating Cronbach's alpha (α = 0.92), inter-station score correlations, and correlations between individual station scores and the overall examination score. Following this analysis, the scenarios underwent further revision and refinement.
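For readers unfamiliar with the reliability coefficient reported above, the following Python sketch shows the standard Cronbach's alpha formula, α = k/(k − 1) × (1 − Σ station variances / variance of total scores), where k is the number of stations. The score matrix is invented for illustration; the study itself used SPSS, and the function name is an assumption.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of rows (one per student, k station scores each)."""
    k = len(scores[0])
    # Sample variance of each station's scores across students
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Sample variance of each student's total score
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical scores for five students on four stations (not study data)
example_scores = [
    [2.0, 2.5, 2.0, 2.5],
    [1.5, 1.5, 2.0, 1.0],
    [2.5, 2.5, 2.5, 2.0],
    [1.0, 1.5, 1.0, 1.5],
    [2.0, 2.0, 2.5, 2.0],
]
print(round(cronbach_alpha(example_scores), 3))
```

Values closer to 1 indicate that the stations rank students consistently.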

- Phase 3: Setting up and conducting a pilot VOSCE:

The pre-determined scenarios, which used diverse question formats such as multiple-choice questions, the patient management problem (PMP) exam, the key feature (KF) exam, the clinical reasoning problem (CRP) exam, and a virtual puzzle exam, were implemented on the Faradid electronic examination platform. This web-based software offers a secure environment for online test administration via the Internet or an intranet, with dedicated management and user functions designed specifically for medical university examinations. Both the in-person and virtual exam formats were time-limited to 70 minutes, with individual stations allocated three to five minutes each. In the in-person setting, students moved to the next station when the allotted time ended. In the virtual exam, when the allotted time ended, the answering function was disabled and the next question was displayed. A pilot administration of the exam, involving several faculty members, was conducted to identify and rectify any technical or logistical issues. A designated team member served as the exam day coordinator.

- Phase 4: Familiarizing students with the VOSCE:

In this phase, two webinars were conducted to inform students about the exam administration process and to present a guide on how to participate. Students' questions and ambiguities were addressed. The exam date was then announced to students through the website and other information channels.

- Phase 5: Administering the exam:

A mean of 35 students per semester participated in the clinical nursing competence exam, for a total of 176 senior nursing students between February 2022 and February 2024. Participants were allocated by simple randomization to begin with either the virtual or the in-person modality. After completing the eight virtual stations, the 88 students initially assigned to the virtual modality proceeded to the in-person stations. Conversely, the 88 students initially assigned to the in-person modality proceeded to the virtual stations. Eligible participants were eighth-semester nursing students who had successfully completed all required theoretical coursework and internships. Students were excluded if they had failed any prerequisite courses or had not attended the mandatory orientation webinar.

Both the in-person and virtual examinations were administered at the clinical skills center of the school of nursing, which provides OSCE stations and computerized testing facilities. Student identity was verified using personal identifiers, including national ID and student ID numbers. Technical support was available through the university's information technology (IT) department, with designated personnel promptly addressing any technical difficulties encountered during the examination.

After the examination, each station's answer sheet was submitted for evaluation to the professor responsible for designing its questions. Results were disseminated within 24 hours. Faculty members established a 50 percent correct answer threshold as the minimum passing score for each station. For example, the basic clinical skills station presented students with the equipment and procedural steps for sterile dressing application in a disorganized fashion, requiring them to sequence the steps correctly, analogous to a puzzle. At the cardiopulmonary resuscitation station, students were presented with a scenario depicting a patient experiencing cardiopulmonary arrest, supplemented by an electrocardiogram, and were tasked with identifying the appropriate interventions.
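As an illustration of how a sequencing ("puzzle") station might be scored against the 50 percent pass threshold, consider the Python sketch below. The scoring rule (the share of steps placed in the correct position) is an assumption made for this example, since the study does not describe its scoring algorithm, and the step labels are placeholders rather than actual procedural steps.

```python
PASS_THRESHOLD = 0.5  # 50 percent correct, the per-station minimum set by the faculty


def sequencing_score(correct_order, submitted_order):
    """Fraction of steps placed in the correct position (assumed scoring rule)."""
    matches = sum(c == s for c, s in zip(correct_order, submitted_order))
    return matches / len(correct_order)


def passed(score):
    """A station is passed when the score reaches the threshold."""
    return score >= PASS_THRESHOLD


# Placeholder step labels; two of the four steps are in the right position
correct = ["step A", "step B", "step C", "step D"]
submitted = ["step A", "step C", "step B", "step D"]
score = sequencing_score(correct, submitted)
print(score, passed(score))  # 0.5 True
```

Other rules, such as partial credit for adjacent pairs in the right order, would be equally plausible and would yield different scores for the same response.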

- Phase 6: Evaluating and providing feedback:

A comparative analysis was conducted on students' final scores from the in-person and virtual clinical competence examinations. Following each administration, the results were presented to the faculty's education development office (EDO) to identify strengths and weaknesses. Feedback and suggestions from colleagues on enhancements and on remediation of identified weaknesses were incorporated into subsequent semesters' implementations. Furthermore, at the conclusion of each semester, student satisfaction with the VOSCE, including its implementation, was gathered via an online satisfaction survey. This 14-item instrument explored student opinions on three facets of the examination: administrative procedures, the perceived effects of the examination, and views on future administrations of the exam. The content validity of the survey was evaluated by ten faculty members, and its reliability was confirmed with a Cronbach's alpha of 0.92. The design, implementation, and evaluation process of the VOSCE is visually represented in Figure 1.

3.2. Ethical Consideration

This paper is part of a research project titled “design and implementation of OSCE test of Virtual Clinical Competence of Students in Nursing School of Abadan University of Medical Sciences”, with ethics code IR.ABADANUMS.REC.1400.063 and project No. 1219. The study was conducted in full compliance with ethical principles, both in its execution and publication. Comprehensive information was provided to participants regarding the purpose of the research, its results, confidentiality, and how the study would be conducted. Informed consent was obtained from all participants.

3.3. Statistical Analysis

The data were analyzed using SPSS version 20. The Kolmogorov-Smirnov test was used to assess whether the data followed a normal distribution. Descriptive statistics (mean and standard deviation) were then calculated, and Pearson's correlation coefficient was used to examine the relationship between scores on the two exam formats.
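The two statistics named above can be sketched in plain Python as follows. This is an illustrative re-implementation, not the SPSS procedure used in the study: the Kolmogorov-Smirnov function returns only the D statistic against a normal distribution fitted to the sample (no P value is computed), and the paired scores are invented.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def ks_statistic_normal(values):
    """One-sample Kolmogorov-Smirnov D against a normal fitted to the sample."""
    xs = sorted(values)
    n = len(xs)
    dist = NormalDist(mean(xs), stdev(xs))
    d = 0.0
    for i, x in enumerate(xs, start=1):
        cdf = dist.cdf(x)
        # Largest gap between the empirical and theoretical distribution functions
        d = max(d, i / n - cdf, cdf - (i - 1) / n)
    return d


def pearson_r(x, y):
    """Pearson's correlation coefficient for two paired score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical paired total scores for six students (not study data)
virtual = [17.2, 15.8, 18.1, 14.5, 16.9, 17.6]
in_person = [16.8, 15.1, 17.9, 14.0, 16.2, 17.5]
print(round(ks_statistic_normal(virtual), 3), round(pearson_r(virtual, in_person), 3))
```

A small D suggests the scores are compatible with a normal distribution, which supports the use of Pearson's coefficient for the paired comparison.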

4. Results

A cohort of 176 nursing students completed both the OSCE and the VOSCE over a five-semester period. The sample comprised 107 (61.14%) female and 68 (38.86%) male students. The mean score on the VOSCE was 17.35, and the mean score on the in-person OSCE was 16.86; the difference between the two exam modalities was not statistically significant (P > 0.05; Table 1). A correlational analysis was performed on the total scores and on the individual station scores. Total scores on the virtual examination showed a significant positive correlation with total scores on the in-person examination (r = 0.899, P < 0.001), and a significant correlation was likewise observed for each individual station (Table 2).

The student satisfaction survey revealed overwhelmingly positive responses to the VOSCE. Specifically, 94% of respondents felt that the VOSCE enhanced their self-efficacy and confidence. Furthermore, 86% reported that the examination improved their acquisition of practical, field-specific skills. A substantial majority (94%) believed that the virtual format could replace traditional, in-person examinations for many components in the future, and 93% expressed strong support for the VOSCE's continued implementation. These survey results are summarized in Table 3.

Colleagues' discussions during EDO meetings further explored the VOSCE's advantages and disadvantages. Key benefits identified included reductions in time, cost, and resource utilization compared to traditional OSCEs. Survey data also suggested that the VOSCE environment resulted in lower student stress levels, positively impacting performance. Additional benefits were the familiarization of students with contemporary assessment methodologies and the expanded range of question types facilitated by the technological platform.

Table 1. Scores on the Virtual and In-person Exams

| Groups | Minimum-Maximum | No. of Failing | Mean ± SD | P-Value |
|---|---|---|---|---|
| Virtual exam | 12.2 - 18.6 | 0 | 17.35 ± 2.83 | > 0.05 |
| In-person exam | 11.8 - 18.1 | 1 | 16.86 ± 3.75 | |

Table 2. Correlation Between In-person OSCE and VOSCE Scores

| Variables | r | P-Value |
|---|---|---|
| In-person OSCE and VOSCE stations | 0.899 | 0.001 |
| Communication and ethics | 0.719 | 0.002 |
| Physical examinations | 0.851 | 0.001 |
| Paraclinical interpretation | 0.747 | 0.001 |
| Basic skills | 0.758 | 0.001 |
| Medication therapy | 0.817 | 0.001 |
| Patient care management | 0.829 | 0.001 |
| Cardiopulmonary resuscitation | 0.952 | 0.001 |
| Nursing reporting | 0.721 | 0.002 |

Abbreviation: OSCE, Objective Structured Clinical Examination.

Table 3. Student Satisfaction Survey Results a

| Dimension and Items | Very Much | Much | Moderate | Low | Very Low | Completely Agree | Agree | No Idea | Disagree | Completely Disagree | No Answer |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Exam administration procedures | | | | | | | | | | | |
| I was fully informed of the exam administration protocol prior to its commencement. | 35 | 42 | 7 | 11 | 5 | - | - | - | - | - | 0 |
| The exam's length was satisfactory. | 51 | 25 | 11 | 7 | 6 | - | - | - | - | - | 0 |
| The exam's questions were relevant to the course's content. | 54 | 26 | 15 | 4 | 1 | - | - | - | - | - | 0 |
| The exam's questions were consistent with those on the in-person exam. | 69 | 15 | 6 | 6 | 3 | - | - | - | - | - | 1 |
| Effects of administering the exam | | | | | | | | | | | |
| This exam has the potential to increase student motivation. | 86 | 11 | 0 | 1 | 0 | - | - | - | - | - | 2 |
| This exam has encouraged students to review previously learned material. | 81 | 10 | 4 | 4 | 0 | - | - | - | - | - | 0 |
| This exam has allowed students to evaluate their preparedness for hospital admission. | 78 | 15 | 4 | 2 | 1 | - | - | - | - | - | 0 |
| This exam has strengthened students' performance in clinical skills in the ward. | 75 | 11 | 5 | 5 | 3 | - | - | - | - | - | 1 |
| The implementation of the exam has promoted the acquisition of skills relevant to students' disciplines. | 79 | 14 | 5 | 1 | 1 | - | - | - | - | - | 0 |
| The exam gave you a sense of self-efficacy and confidence. | 79 | 15 | 4 | 2 | 2 | - | - | - | - | - | 0 |
| Administering the exam in the coming years | | | | | | | | | | | |
| The continuous administration of this exam in coming years will be advantageous for students. | - | - | - | - | - | 85 | 8 | 4 | 3 | 0 | 0 |
| The feedback received from this examination should be systematically conveyed to professors to incorporate relevant insights in future courses. | - | - | - | - | - | 73 | 15 | 7 | 3 | 2 | 0 |
| Virtual exams have the potential to replace in-person exams in many fields in the future. | - | - | - | - | - | 85 | 9 | 6 | 2 | 1 | 0 |
| A pre-exam survey should be administered to students in order to gather feedback regarding the exam administration process. | - | - | - | - | - | 69 | 19 | 5 | 4 | 2 | 1 |

a Values are presented as %.
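The headline percentages quoted in the Results appear to combine the two most favourable response categories of each item. The short Python sketch below reproduces that arithmetic for three rows of the satisfaction table; the interpretation as a "top-two" share is an assumption, and the dictionary keys are shortened labels rather than the original item wording.

```python
def top_two_share(row):
    """Sum the two most favourable categories (values are already percentages)."""
    return row[0] + row[1]


# Rows copied from the satisfaction table: five ordered response categories each
table3 = {
    "self-efficacy and confidence": [79, 15, 4, 2, 2],
    "continued administration": [85, 8, 4, 3, 0],
    "virtual can replace in-person": [85, 9, 6, 2, 1],
}
for item, row in table3.items():
    print(item, top_two_share(row))
# self-efficacy and confidence 94
# continued administration 93
# virtual can replace in-person 94
```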

5. Discussion

This study investigated the feasibility of virtual clinical competence examinations for nursing students, utilizing the Faradid electronic platform. The examination focused on the clinical and practical skills assessed at each station. Results demonstrated no statistically significant difference in mean scores between the virtual and in-person administrations of the exam. A large majority of students favored the continued use of virtual examinations, suggesting the potential for transitioning from traditional in-person testing to a virtual format. In a study conducted in Hong Kong, Chan et al. employed virtually designed clinical scenarios. Virtual simulators facilitated student interaction with virtual patients, replicating a real-world clinical environment. This method of virtual clinical simulation has been shown to improve students' decision-making capabilities, critical thinking skills, and overall performance and psychomotor proficiency (17). Furthermore, Arrogante et al. evaluated the clinical competence of nursing students in Paris during the COVID-19 pandemic using a standardized VOSCE. Their findings indicated that the VOSCE achieved comparable results to traditional in-person examinations while simultaneously reducing costs. Virtual OSCEs, utilizing standardized patients, present a viable alternative assessment method. Beyond their applicability during the COVID-19 pandemic, the use of VOSCEs warrants consideration for broader implementation, including in non-pandemic contexts (18). A study conducted in Iran by Tolabi and Yarahmadi exemplifies this potential. Their 2021 virtual clinical competence examination, administered to 42 senior nursing students at Khorramabad University of Medical Sciences via the Porsline platform across 9 stations, yielded results comparable to those of prior in-person examinations at the same institution.
This finding suggests that VOSCEs can effectively substitute for traditional, in-person assessments (19). In another study conducted at the Nursing and Midwifery School of Lorestan University of Medical Sciences, Mojtahedzadeh et al. developed, implemented, and assessed a blended (combining in-person and virtual components) competence examination for graduating nursing students. As shown by their findings, this blended approach offers a viable alternative to traditional OSCEs, particularly during pandemics or periods of resource scarcity. The blended methodology allowed for the evaluation of fundamental and advanced clinical skills through in-person stations, while virtual stations, based on presented scenarios, facilitated the assessment of competences in communication, reporting, nursing diagnosis, professional ethics, mental health, and community health. Moreover, the blended format demonstrably reduced the requirements for human resources, station setup costs, and time spent compared to a fully in-person format (20). Prior research corroborates the findings of the present study, collectively demonstrating the efficacy of virtual assessment modalities. Specifically, these investigations indicate that the implementation of VOSCEs offers substantial advantages, extending beyond the exigencies of the COVID-19 pandemic to encompass routine post-pandemic practice. These benefits include, notably, reductions in both costs and time expenditures. Consequently, VOSCEs can serve as a viable alternative when traditional in-person examinations are infeasible. Furthermore, this methodology can be appropriately utilized as a replacement for, or a valuable adjunct to, conventional in-person assessments. Avraham et al. developed a VOSCE preparation program delivered via Zoom for second-year nursing undergraduates at Ben-Gurion University's Department of Nursing. 
The results indicated a high level of student satisfaction (88%) with the program in 2021, with students reporting feeling adequately prepared for the OSCE (26% agree and 62% strongly agree). Importantly, the study found no statistically significant difference in OSCE performance between students who participated in the 2021 virtual program and those who participated in traditional in-person OSCE preparation programs between 2017 and 2020 (21). Similarly, a traditional in-person OSCE was adapted and implemented using a virtual conferencing platform for nursing students at the University of Texas Medical Branch in Galveston. Survey data revealed that the majority of professors and students perceived the VOSCE as a highly effective method for assessing communication skills, history taking, differential diagnosis, and patient management (22). Hopwood et al. implemented a VOSCE at the Medical School of University College London (UCL) to evaluate the competences of senior medical students. The VOSCE comprised 18 stations designed to assess clinical, theoretical, practical, examination, and professional skills. Feedback solicited from all stakeholders demonstrated a high degree of satisfaction, with 94% recommending the virtual format for future examinations (10). Jenkins et al. employed a simulated virtual pilot examination for urology graduate certification, comprising a VOSCE and a multiple-choice written component. High satisfaction was reported, with 92.9% of examiners and 87.5% of candidates rating the experience positively (9). Similarly, Mojtahedzadeh et al. found equivalent and relatively high levels of faculty member satisfaction with in-person, virtual, and blended formats of a clinical competence examination (20). Recent research suggests that virtual simulations offer benefits beyond knowledge and skill acquisition. 
They may also foster increased self-confidence, influence attitudes and behaviors, and represent a novel, adaptable, and promising pedagogical tool for novice nurses and nursing students (22). The potential impact of virtual programs on nursing education is substantial. Their accessibility, flexible scheduling, and location flexibility (23) may significantly enhance the development of students' clinical competences (24). These studies corroborate the findings of the present research, indicating substantial satisfaction with virtual assessments among both professors and students. Furthermore, student survey responses in this study revealed that this assessment modality positively influenced their satisfaction, engagement, sense of empowerment, and self-efficacy.

5.1. Conclusions

This study investigated student competence across multiple domains, including knowledge, critical thinking, and clinical judgment, to ascertain their preparedness for professional practice prior to clinical placement. The findings suggest that a VOSCE has the potential to be used as a viable alternative to traditional in-person examinations, or as a valuable component within a blended assessment approach. Student feedback indicated substantial approval for the continuation of virtual examination administration. The findings of this research offer a framework for other nursing departments needing to develop and implement effective strategies for virtual clinical competence assessments. To enhance the efficacy of virtual examinations, professional development opportunities, such as workshops and training sessions, are recommended for professors. Furthermore, universities of medical sciences should invest in robust and contemporary virtual learning centers, equipped with up-to-date, high-quality infrastructure, materials, and resources. To ensure a more rigorous evaluation, future research should compare three distinct groups: one engaging in virtual learning, another in traditional in-person instruction, and a third in a hybrid format combining both. Additionally, it is advisable to assess students not only on conventional metrics but also on other relevant dimensions and variables, including clinical self-confidence, self-efficacy, and the degree of skill acquisition.

5.2. Strengths

The virtual examination's efficacy stemmed from several key advantages. Firstly, it provided students with valuable exposure to contemporary assessment methodologies and virtual examination platforms, thereby fostering familiarity with digital testing environments. Secondly, the implementation of virtual orientation sessions for students, coupled with coordination sessions for faculty members, proved crucial in ensuring student satisfaction. These sessions facilitated clear communication and addressed potential anxieties. Finally, the establishment of social messaging groups, utilizing both virtual and face-to-face communication channels, allowed for the dissemination of instructions, clarification of expectations and assigned tasks, and efficient responses to student queries.

5.3. Limitation

A limitation of the study was the inadequate electronic infrastructure, which constrained the development of diverse test questions.