1. Background

At the end of 2019, the coronavirus pandemic began in China and spread rapidly to other countries (1). With the spread of coronavirus disease 2019 (COVID-19), restrictions were imposed in many countries (2). These restrictions disrupted many citizens’ daily routines (3). One restriction used to control the disease was the closure of schools and universities, which, according to scientific evidence, decreased the number of cases and deaths (4, 5). Estimates indicate that schools and universities were closed in more than 100 countries, affecting more than 1 billion students (6).

The closure of schools, universities, and educational institutions changed education from a standard system to a virtual, online framework (7, 8). Online education involves learning through the World Wide Web (9), and despite its benefits, it can never be a substitute for face-to-face education (7). It can lead to long-term consequences for students’ life expectancy and physical and mental health, as well as academic failure or dropout among poor and disabled students (10-12). This sudden shift from face-to-face to online education has significantly affected students, and many are uncertain about their academic success (13). The novelty of online education and the constant change of existing technologies have exacerbated this confusion (14). Students are more involved in learning processes in face-to-face education than in online education because online education poses challenges regarding internet access, communication among students, student-teacher communication, and the skills of teachers and students (15).

Academic engagement can be defined as the interaction between attention and commitment (16). Active engagement in educational settings is essential for learners’ academic achievement (17). A lack of academic engagement is associated with academic failure and academic fatigue in students, leading to reduced academic achievement and, eventually, dropout (18). Dropping out of school leads to higher unemployment, lower welfare and life satisfaction, increased crime, and poorer health (19-22). Academic engagement is more important in online education than in any other educational system because, in online education, students are primarily responsible for learning (23). In such a system, the achievement of educational goals depends on the active involvement of learners in educational environments. However, some studies showed that learners’ academic engagement in online education is non-existent or very low in many countries (24).

The results of Mahdavi and Rahimi showed that the academic engagement of Iranian students is relatively unfavorable (25). Iranian students have little interest in participating in classrooms and participate in classrooms with a feeling of boredom. They are less likely to discuss class content with their friends (25). In Iran, some studies mentioned the lack of interaction, discussion, access, and involvement in cooperative learning among students as the challenges of virtual education (26). Similarly, the feeling of the superficiality of online education, along with the lack of motivation, proper interaction between students and professors, and active participation of students in learning, were mentioned as the limitations of virtual education in Iran (27-29).

There are few tools for assessing online academic engagement (30, 31), all of which were developed before online education became ubiquitous. Given the changes during the coronavirus outbreak, such as the replacement of face-to-face education with online education, these tools cannot measure academic engagement correctly because face-to-face communication between students and teachers was very limited or impossible during the pandemic. There is a lack of research on online education, and scale development and validation can advance research in this area (30). A scale has been developed in South Korea to measure engagement in e-learning (32). Due to cultural and linguistic differences, using scales in different societies requires re-validation (33).

The tool introduced in South Korea is the only scale introduced for online courses during the COVID-19 pandemic and has been used in numerous articles. Various methods have been used to validate this scale. Additionally, there are similarities in midwifery education between Iran and South Korea, such as the use of a national entrance exam, ethical principles based on cultural values, shared goals in professional skills, improvement of health levels, lifelong learning, evidence-based learning, and provision of services based on the highest available standards (34). Therefore, this scale is suitable for assessing academic engagement in Iran.

Iran was severely affected by COVID-19, and the spread of the virus continued despite the restrictions (35, 36). Since the outbreak of COVID-19, university education in Iran has been delivered online. According to reports, academic engagement among students has been very low, but the status of student interaction cannot be estimated because of the lack of scales. Therefore, providing a valid scale for measuring academic engagement in online education is very important to help researchers and planners provide transparent statistics on academic engagement.

2. Objectives

This study aimed to validate the Persian version of the Engagement in E-Learning Scale (EELS).

3. Methods

3.1. Study Design

This cross-sectional study was conducted in 2022 on 1014 nursing and midwifery students of medical sciences universities across Iran. After the objectives of the study were explained, informed consent was obtained from participants by telephone. Participants were assured of confidentiality and anonymity, that participation was voluntary, and that they had the right to withdraw from the study.

3.2. Sampling Method

The cluster sampling method was used so that ten schools were randomly selected among all nursing and midwifery schools of medical universities. Next, the contact numbers and student codes were obtained by referring to the schools' education departments. The target sample (nursing and midwifery students) was randomly selected from each selected school at different levels of education based on the validation phase. After contacting these students and obtaining informed consent, the questionnaire link was sent to them via WhatsApp, e-mail, or SMS.

3.3. Measurement

This research used three questionnaires: a demographic information questionnaire, the EELS, and the Educational Engagement Questionnaire of Schaufeli’s study (EEQSS).

Demographic Characteristics Questionnaire: The questionnaire on demographic characteristics included questions concerning gender, age, and education status.

EELS: This questionnaire was designed in 2019 by Lee et al. based on a systematic review of related studies (32). In the first stage, Lee et al. extracted 48 primary items. After evaluating content validity and construct validity, a 24-item scale with six dimensions was obtained: (1) psychological motivation (6 items); (2) peer collaboration (5 items); (3) cognitive problem-solving (5 items); (4) interactions with instructors (2 items); (5) community support (3 items); and (6) learning management (3 items). Items are rated on a 5-point Likert scale (never = 1, rarely = 2, sometimes = 3, often = 4, and always = 5), so total scores range from 24 to 120. A higher score indicates more engagement of students in e-learning. Lee et al. reported Cronbach's alpha values above 0.7 for the whole scale and its dimensions (32). Therefore, the developers reported this scale as valid and reliable for assessing engagement in e-learning among students (32).

EEQSS: This questionnaire was created by Schaufeli et al. in 1996 to measure the level of students' engagement in academic activities. This questionnaire has 17 items that include three subscales: Vigor (6 questions), dedication (5 questions), and absorption (6 questions) (37). The minimum scale score was 17, and the maximum was 56. Concurrent validity results showed a negative correlation between EEQSS and the burnout scale. Schaufeli et al. obtained the overall reliability of the scale at 0.73. Similarly, Cronbach's alpha was calculated as 0.78, 0.91, and 0.73 for the dimensions of vigor, dedication, and absorption, respectively. Momeni and Radmehr reported Cronbach's alpha reliability as 0.76 for the whole scale and 0.78, 0.8, and 0.67 for the vigor, dedication, and absorption dimensions, respectively (38).

3.4. Forward-Backward Translation

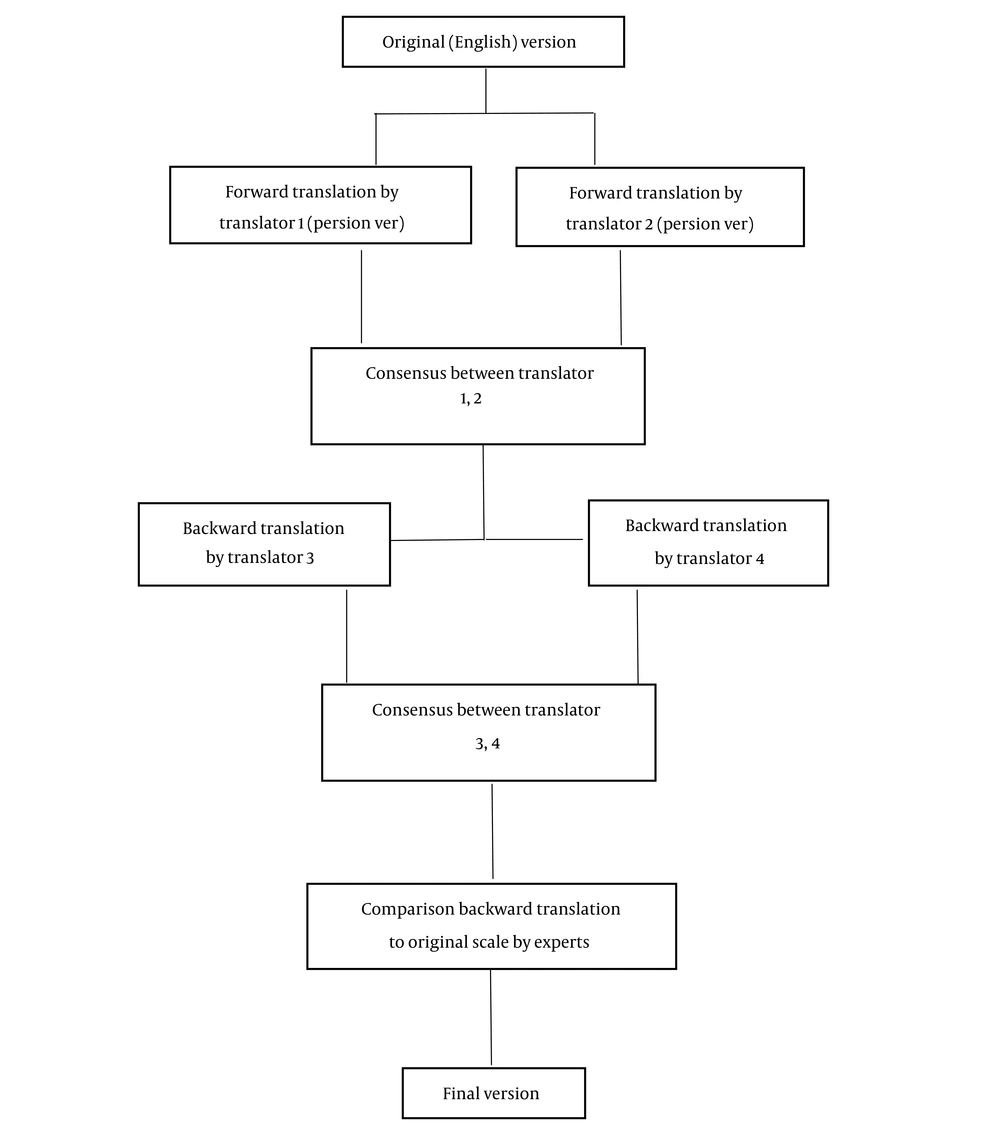

First, the original questionnaire was translated into Persian by two researchers fluent in both Persian and English. Disagreements were resolved by discussion between the two translators. Next, the agreed version was given to two independent translators fluent in English and Persian for back translation (from Persian to English). Another meeting was held with the translators to reach agreement on the back translation of the questions. Subsequently, an expert group (from the fields of educational technology, midwifery, nursing, and psychometrics) compared the original and back-translated scales to correct ambiguities (Figure 1).

After the scale translation, validity (face validity, content validity, construct validity, convergent validity, and concurrent validity) and reliability (internal consistency and stability) were checked.

3.5. Statistical Analysis

This research used descriptive statistics indicators, such as frequency and frequency percentage. Data analysis was performed using SPSS version 18 and LISREL version 8.8. The significance level was considered at 0.05.

3.5.1. Face Validity

Face validity was checked in two stages (qualitative and quantitative). The qualitative stage was conducted through face-to-face interviews with ten participants from the target community regarding the simplicity, comprehensibility, and relevance of the items. The quantitative step was performed by calculating the impact score of each item based on the following formula:

Impact score = Frequency (%) × Importance.

The minimum value of the impact score for accepting each item was considered 1.5 (39).
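The item impact score can be illustrated with a minimal Python sketch. It rests on assumptions that the text does not spell out: importance is rated on a 5-point scale, "frequency" is the proportion of participants who rate the item 4 or 5, and "importance" is the mean rating. The function name `impact_score` and the ratings are hypothetical.

```python
from statistics import mean


def impact_score(ratings, high=(4, 5)):
    """Item impact score = frequency x importance.

    Assumptions (not stated in the text): ratings come from a 5-point
    importance scale, frequency is the proportion of raters choosing
    4 or 5, and importance is the mean rating.
    """
    frequency = sum(r in high for r in ratings) / len(ratings)
    importance = mean(ratings)
    return frequency * importance


# Hypothetical importance ratings from ten participants for one item.
ratings = [5, 4, 4, 3, 5, 4, 2, 5, 4, 4]
score = impact_score(ratings)
print(round(score, 2), "retain" if score >= 1.5 else "review")
```

With the proportion convention, the 1.5 cutoff is on the same scale as the ratings, which is why frequency is not multiplied by 100 here.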

3.5.2. Content Validity

Considering that only educational technology experts were consulted for the original scale, the research team decided to have content validity assessed by nursing and midwifery experts in addition to educational technology experts. In this stage, the items were evaluated by ten experts (three in educational technology, two in midwifery, two in nursing, and three in psychometrics) to determine the content validity ratio (CVR) and content validity index (CVI). For CVR, items were rated on a 3-point scale of “essential,” “useful but not necessary,” and “unnecessary.” A CVR value above 0.62 is acceptable based on the Lawshe table (40). For CVI, the items were evaluated based on the mean of three criteria (simplicity, specificity, and clarity), each rated on a 4-point Likert scale (1 = lowest score to 4 = highest score). A score above 0.79 is acceptable (41, 42). Accordingly, a CVR value less than 0.62 (based on the scores of ten experts) and a CVI value less than 0.79 (43) were considered the criteria for removing questions (44).
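As a rough illustration of these two indices, the sketch below computes Lawshe's CVR, (n_e − N/2) / (N/2), and a per-criterion CVI that is then averaged over the three criteria. The CVI convention used here (the proportion of experts giving a rating of 3 or 4) is a common one and is an assumption, as are the function names and the example ratings.

```python
def content_validity_ratio(n_essential, n_experts):
    """Lawshe's CVR = (n_e - N/2) / (N/2)."""
    half = n_experts / 2
    return (n_essential - half) / half


def criterion_cvi(ratings, relevant=(3, 4)):
    """Proportion of experts rating the item 3 or 4 on a 4-point scale
    (assumed convention)."""
    return sum(r in relevant for r in ratings) / len(ratings)


def item_cvi(ratings_by_criterion):
    """Mean CVI over the criteria (simplicity, specificity, clarity)."""
    values = [criterion_cvi(r) for r in ratings_by_criterion.values()]
    return sum(values) / len(values)


# Hypothetical ratings for one item from ten experts.
cvr = content_validity_ratio(n_essential=9, n_experts=10)
cvi = item_cvi({
    "simplicity": [4, 4, 3, 4, 3, 4, 4, 2, 4, 3],
    "specificity": [4, 3, 3, 4, 4, 4, 3, 4, 4, 4],
    "clarity": [3, 4, 4, 4, 2, 4, 3, 4, 4, 2],
})
keep = cvr > 0.62 and cvi > 0.79
print(round(cvr, 2), round(cvi, 2), keep)
```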

3.5.3. Construct Validity

Exploratory factor analysis (EFA) was used to determine construct validity. First, the Kaiser-Meyer-Olkin (KMO) measure and Bartlett's test of sphericity were used to determine sampling adequacy. A KMO value greater than 0.5 was considered the minimum (45). Based on a KMO value > 0.8, the sample size was sufficient, and based on a Bartlett's test P-value < 0.001, factor analysis was justified. Afterward, latent factors were extracted using principal component analysis with varimax rotation (46). Factors with an eigenvalue greater than one were considered the main factors (33, 47). The recommended sample size in EFA is 5 - 20 participants per item (48). At this stage, 480 subjects were selected as the sample. Confirmatory factor analysis (CFA) was used to confirm the extracted factors. Coleman reported that a sample size of 200 was appropriate for CFA (49). Therefore, 220 people were selected (20 additional participants to allow for sample dropout).
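The sample-size arithmetic in this paragraph can be checked with a short sketch. The function name and parameters are hypothetical; the item count (24), the 5 - 20 participants-per-item rule, and the CFA figures (200 plus 20 for dropout) are taken from the text.

```python
def efa_sample_range(n_items, per_item_min=5, per_item_max=20):
    """Return the (minimum, maximum) EFA sample size implied by a
    participants-per-item rule of thumb."""
    return n_items * per_item_min, n_items * per_item_max


low, high = efa_sample_range(24)  # 24 EELS items
cfa_sample = 200 + 20             # recommended size plus dropout allowance

print(low, high)    # 120 480
print(cfa_sample)   # 220
```

Under this rule, the 480 participants used for EFA correspond to the upper bound of 20 participants per item.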

3.5.4. Convergent Validity

Convergent validity was calculated through CFA. Average variance extracted (AVE; optimal value > 0.5) and composite reliability (CR; optimal value > 0.7) were used for convergent validity analysis (50).
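For readers unfamiliar with these two indices, the following sketch shows how AVE and CR are conventionally computed from the standardized loadings of one factor, assuming uncorrelated errors with variance 1 − λ² per item. The loadings below are hypothetical and are not the CFA loadings of this study.

```python
def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    with each error variance taken as 1 - loading^2."""
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)
    return total ** 2 / (total ** 2 + error)


# Hypothetical standardized loadings for a five-item factor.
loadings = [0.75, 0.70, 0.72, 0.74, 0.73]
ave = average_variance_extracted(loadings)
cr = composite_reliability(loadings)
print(round(ave, 3), round(cr, 3), ave > 0.5 and cr > 0.7)
```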

3.5.5. Concurrent Validity

At this stage, our questionnaire was distributed to 100 participants along with EEQSS (37), and the correlation between the two tests was assessed.

3.5.6. Reliability

The number of samples required for internal consistency was determined based on the eigenvalue. Therefore, after conducting the exploratory factor analysis and calculating the eigenvalue, the sample size was set at 100 people (51). Internal consistency was evaluated with Cronbach’s alpha coefficient and the correlation of each item with the total score. The split-half reliability method was also used. To evaluate stability (test-retest reliability), 30 participants completed the research questionnaire and completed it again after two weeks. Then, the correlation coefficient between the two tests was evaluated (52). A Cronbach's alpha of at least 0.7 was considered acceptable (43).
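A minimal sketch of Cronbach's alpha follows, using the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The function name and the tiny response matrix are hypothetical; the study itself used SPSS.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    Uses sample variances (n - 1 denominator) for items and total scores.
    """
    k = len(rows[0])
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses: four respondents, two items.
responses = [[1, 2], [2, 1], [3, 3], [4, 5]]
alpha = cronbach_alpha(responses)
print(round(alpha, 3), alpha >= 0.7)
```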

4. Results

Among 1032 questionnaires distributed to the five sampling stages, 166 students were excluded from the study due to unwillingness to participate or failure to comply with the research criteria. The demographic characteristics of the participants in the validation stages are shown in Table 1. Out of 866 students, 579 (66.86%) were under 22 years old, 569 (65.7%) were women, and 679 (78.41%) were undergraduate or associate students.

| Validation Stage | Age < 22 y | Age > 22 y | Female | Male | Undergraduate or Associate Students | Postgraduate and Ph.D. Students | Total | Missing |
|---|---|---|---|---|---|---|---|---|
| Face validity | 6 (60) | 4 (40) | 7 (70) | 3 (30) | 6 (60) | 4 (40) | 10 | 0 |
| EFA | 284 (65.9) | 147 (34.1) | 287 (66.6) | 144 (33.7) | 347 (81.3) | 84 (19.4) | 480 | 49 |
| CFA | 142 (68.3) | 66 (31.7) | 135 (64.9) | 73 (35.09) | 161 (77.4) | 47 (22.6) | 220 | 12 |
| Concurrent validity | 67 (69.79) | 29 (30.21) | 59 (61.46) | 37 (38.54) | 68 (70.83) | 28 (29.17) | 100 | 4 |
| Reliability (internal consistency) | 61 (62.8) | 32 (33) | 61 (62.80) | 32 (34.4) | 73 (78.5) | 20 (21.5) | 100 | 7 |
| Reliability (stability) | 19 (67.9) | 9 (32.1) | 20 (71.4) | 8 (29.62) | 24 (88.9) | 4 (14.2) | 30 | 2 |

Abbreviations: EFA, exploratory factor analysis; CFA, confirmatory factor analysis.

a Values are expressed as No. (%) unless otherwise indicated.

4.1. Face Validity

First, the items on the scale were modified based on interviews with the participants, and then, the impact scores of the items were obtained. All values were above 1.5; therefore, no items were removed at this stage (44).

4.2. Content Validity

The values obtained from CVR for the 24-item scale ranged from 76% to 100%. In addition, the CVI value was 0.79. Due to acceptable CVR and CVI values, no items were removed from the scale (30).

4.3. EFA and CFA

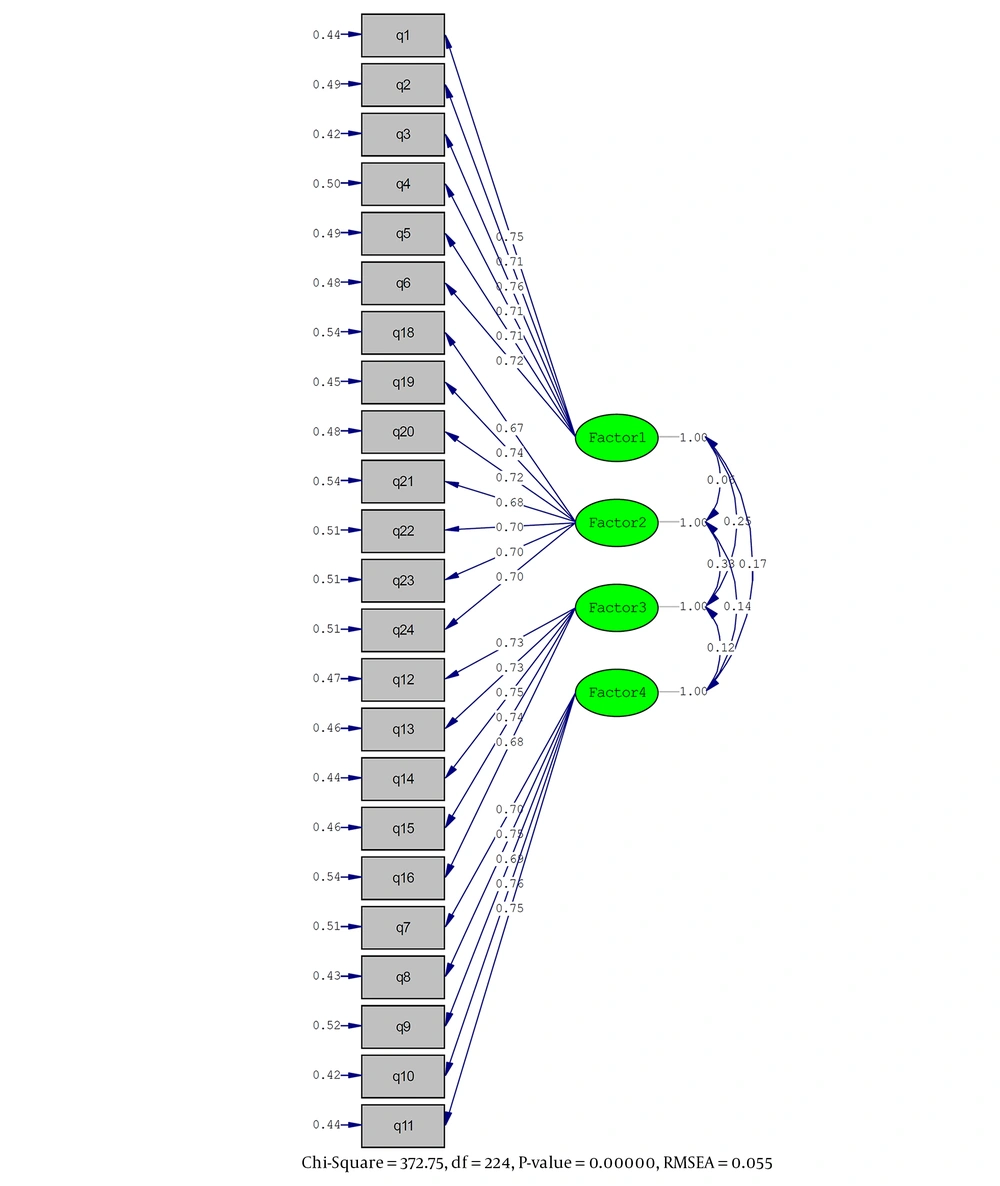

According to the results of factor extraction, all the coefficients were greater than 0.6. Therefore, no item was removed from the questionnaire (53). In this analysis, using varimax rotation, four factors with eigenvalues higher than one were extracted, explaining a total of 57.13% of the variance (factor 1, 17.02%; factor 2, 15.27%; factor 3, 13.24%; and factor 4, 11.59%).

In the rotated component matrix, items with loadings above 0.5 in the same column form a dimension. Items 1 - 6 loaded on the first column (i1 = 0.80, i2 = 0.79, i3 = 0.82, i4 = 0.83, i5 = 0.79, and i6 = 0.82), and this dimension was named psychological motivation according to the content of these questions. Items 18 - 24 loaded on the second column (i18 = 0.73, i19 = 0.71, i20 = 0.73, i21 = 0.70, i22 = 0.72, i23 = 0.71, and i24 = 0.73), and this dimension was named management and effective communication.

Items 12 - 16 loaded on the third column (i12 = 0.80, i13 = 0.81, i14 = 0.78, i15 = 0.77, and i16 = 0.78), and this dimension was named cognitive problem-solving. Items 7 - 11 loaded on the fourth column (i7 = 0.72, i8 = 0.71, i9 = 0.72, i10 = 0.74, and i11 = 0.75), and this dimension was named peer collaboration. Thus, the first factor was psychological motivation with six questions, the second was management and effective communication with seven questions, the third was cognitive problem-solving with five questions, and the fourth was peer collaboration with five questions. Question 17 was not included in any dimension.
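The item-to-factor assignment described above can be written down as a small lookup. The sketch below also derives which items are left unassigned and computes subscale totals. Summing item scores per factor is an assumption made for illustration (the text reports only the total-score range of the original scale), and the names `FACTORS` and `subscale_scores` are hypothetical.

```python
# Item numbers per factor, as reported in the rotated component matrix.
FACTORS = {
    "psychological motivation": range(1, 7),                 # items 1-6
    "management and effective communication": range(18, 25), # items 18-24
    "cognitive problem-solving": range(12, 17),              # items 12-16
    "peer collaboration": range(7, 12),                      # items 7-11
}


def subscale_scores(responses):
    """Sum the 1-5 Likert scores of the items in each factor.

    `responses` maps item number (1-24) to a score; summing is an
    illustrative assumption, not a scoring rule stated in the text.
    """
    return {name: sum(responses[i] for i in items)
            for name, items in FACTORS.items()}


assigned = {i for items in FACTORS.values() for i in items}
unassigned = sorted(set(range(1, 25)) - assigned)

# Hypothetical respondent who answers "sometimes" (3) to every item.
scores = subscale_scores({i: 3 for i in range(1, 25)})
print(unassigned)
print(scores)
```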

Most of the goodness-of-fit indices in Table 2 (SRMR, RMSEA, CFI, and CMIN/DF) have acceptable values, whereas GFI (0.87) and AGFI (0.84) fall slightly below their cutoffs; overall, the proposed model fits the data reasonably well. Figure 2 displays the factor loadings of the four factors. Consequently, the data support the 4-factor model, as shown in the confirmatory factor analysis results.

| Variables | SRMR (< 0.1) | RMSEA (< 0.1) | CFI (> 0.9) | GFI (> 0.9) | AGFI (> 0.85) | CMIN/DF (< 3) |
|---|---|---|---|---|---|---|
| Values | 0.041 | 0.055 | 0.95 | 0.87 | 0.84 | 1.66 |

Abbreviations: SRMR, standardized RMR; RMSEA, root mean square error of approximation; CFI, comparative fit index; GFI, goodness of fit index; AGFI, adjusted goodness of fit index; CMIN/DF, chi-square/degrees of freedom.
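The comparison of each index with its cutoff can be made explicit with a short sketch. The cutoffs and observed values are copied from Table 2; the names `CRITERIA` and `check_fit` are hypothetical.

```python
import operator

# (comparison, cutoff, observed value), copied from Table 2.
CRITERIA = {
    "SRMR": (operator.lt, 0.1, 0.041),
    "RMSEA": (operator.lt, 0.1, 0.055),
    "CFI": (operator.gt, 0.9, 0.95),
    "GFI": (operator.gt, 0.9, 0.87),
    "AGFI": (operator.gt, 0.85, 0.84),
    "CMIN/DF": (operator.lt, 3, 1.66),
}


def check_fit(criteria):
    """Return, for each index, whether the observed value meets its cutoff."""
    return {name: compare(value, cutoff)
            for name, (compare, cutoff, value) in criteria.items()}


results = check_fit(CRITERIA)
below_cutoff = [name for name, ok in results.items() if not ok]
print(below_cutoff)  # ['GFI', 'AGFI']
```

Read strictly, four of the six indices meet their cutoffs, and GFI and AGFI fall just short of theirs.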

4.4. Convergent Validity

AVE values > 0.5 and CR values > 0.7 are acceptable. Therefore, the scale has convergent validity (Table 3).

| Factor | AVE | CR |
|---|---|---|
| Factor 1 | 0.528 | 0.873 |
| Factor 2 | 0.500 | 0.872 |
| Factor 3 | 0.528 | 0.848 |
| Factor 4 | 0.534 | 0.851 |

Abbreviations: AVE, average variance extracted; CR, composite reliability.

4.5. Concurrent Validity

The correlation coefficient between the two scales was 0.61 (P = 0.001). Therefore, the scale has concurrent validity.

4.6. Reliability

The estimated Cronbach's alpha for the whole scale was 0.95. The coefficients were 0.91, 0.87, 0.88, and 0.86 for the first, second, third, and fourth factors, respectively. The split-half reliability correlation was estimated to be 0.87. The correlation between each item and the total score was significant at the 0.05 level. For stability reliability, the correlation between the two tests (test-retest) was calculated as 0.82.

5. Discussion

One of the major challenges for universities is the lack of knowledge sharing among students (54). Universities use knowledge sharing to help increase the efficiency and effectiveness of their community. This cross-sectional study provided detailed information on the validity and reliability of EELS in students. Lee et al.'s questionnaire was developed with the financial support of the Ministry of Public Education, and investigations confirmed its content validity, convergent validity, divergent validity, and construct validity (with a sample of 737 students) (32). In addition, the developers reported its reliability at a suitable level. Moreover, a review of the citations of this article showed that many studies published in the field of online courses in the last two years had used this tool to measure academic engagement (202 articles posted on reputable sites, such as PubMed, tandfonline, and Springer) (55-59). Therefore, this tool was used in this research to provide a reliable tool to assess academic engagement.

The analysis covered a broad range of aspects of the scale, from the construct of the questionnaire (for which EFA and CFA were used) to its content validity (CVI and CVR). In all cases, it provided very satisfactory results. Reliability was 0.95 for 24 items, and EFA was satisfactory. Regarding CFA, root mean square error of approximation (RMSEA) and comparative fit index (CFI) values were satisfactory, the goodness of fit index (GFI) was close to its cutoff, and factor loadings were all statistically significant. This finding is consistent with the study by Lee et al. (32). The Persian version of Lee's scale had a significant correlation with Schaufeli's academic engagement scale. Therefore, these two scales measure the same concept, and the Lee scale measures academic engagement well.

It should be noted that concurrent validity has not been investigated in the Lee scale. The AVE values were greater than 0.5, and the CR values were greater than 0.7. This indicates the convergent validity of the scale and is consistent with the original version (32).

The final questionnaire included 24 items and four factors: (1) psychological motivation; (2) effective management and communication; (3) cognitive problem-solving; and (4) peer collaboration (Appendix 1). The first dimension, "psychological motivation," includes questions about learning, enjoyment, stimulating interest, course functionality, satisfaction with the course, learning expectations, and motivation. This dimension corresponds to the dimension presented in the main questionnaire (32). Motivation is a prerequisite for learning, and the richest educational programs will not be useful in the absence of motivation (60). Academic engagement in online classes is no exception: psychological motivation is essential to academic engagement and can increase it in online classes.

The second dimension, "management and effective communication," includes questions regarding asking questions, belonging to the community, connection with peers, interaction with peers, self-directed study, managing own learning, and managing own learning schedule. As a possible explanation, communication increases academic performance, and students who have communication skills establish positive relationships with their classmates and teachers and create a suitable environment for learning (61). That is why communication is essential in online engagement. Moreover, students with management skills are more motivated to learn, do not postpone their assignments, and take control of their work processes (62). Therefore, management skills are essential in academic engagement. This dimension comprises items from the original dimensions of "Interactions with Instructors," "Community Support," and "Learning Management." This combination may be explained by the differences between education in Iran and South Korea.

A comparison of the educational systems of South Korea and Iran reveals a difference in admission. In South Korea, the admission of students to nursing and midwifery is based on an entrance exam and an evaluation of interest and ability to communicate (63). In addition, coordination between goals and content in the educational program; lessons that foster creative and critical thinking, human relations, and working in multicultural societies; and the use of evidence-based knowledge are distinctive features of the nursing education program. Teaching and the use of a comprehensive evaluation approach are also among the differences between nursing and midwifery education in South Korea and Iran (34, 64).

Another explanation is the speed and infrastructure of the internet. South Korea has one of the fastest and cheapest internet services in the world, with an average internet speed of 28.6 Mbps (65-68). Iran, meanwhile, ranks 107th in the world with an average internet connection speed of 4.7 Mbps, and internet access is expensive for citizens (69-72). Some regions of Iran have no proper internet infrastructure. The weakness of the internet and the lack of access to it in Iran have led to lower participation of students in classrooms compared to South Korea. The involvement of students in online classes largely depends on how the facilities of online platforms are used (73). The lack of internet infrastructure, low internet speed, and poor antenna coverage create many limitations in using the features of online platforms in online classes (74). These problems are more visible in Iran due to internet outages, low internet speed, and filtering.

The third dimension, "cognitive problem-solving," encompasses questions concerning asking questions, deriving an idea, applying knowledge, analyzing knowledge, judging the value of information, and approaching a new perspective. This dimension is aligned with the dimension presented in the main questionnaire (32). Learning based on problem-solving leads to deep learning, which is effective in a teaching process in which students collaboratively analyze educational issues and reflect on their experiences. The cooperation of professors and students in solving academic problems plays an essential role in the teaching-learning process, which results in improved personal learning skills (75).

The fourth dimension, "peer collaboration," includes questions on requesting help, collaborative problem-solving, responding to questions, collaborative learning, and collaborative assignments. This dimension is aligned with the dimension presented in the main questionnaire (32). Class participation can facilitate learning. However, in online learning, students must simultaneously "assess themselves," "set goals," "provide strategies to achieve those goals," and be concerned about their learning and progress (76). Therefore, the participation of students in online classes helps address these challenges, and this dimension was important in this research.

To assess the internal reliability of the scale, this study examined the correlation between the total test score and each item, as well as Cronbach's alpha values for the scale and its dimensions ((1) psychological motivation; (2) effective management and communication; (3) cognitive problem-solving; and (4) peer collaboration), which were all above 0.70. Additionally, the correlation between the total score and each item was significant, indicating good internal reliability. These findings are consistent with the results of the Korean version (32). Moreover, this study confirmed the stability of the scale by establishing a significant relationship between the two scale scores (test-retest), although the stability reliability of the Korean version has not been investigated yet.

One of the strengths of the present study was the selection of a large sample that increased the generalizability of the results. The second strength of the study was the use of cluster sampling methods from all universities in the country. Thus, considering the nature of sampling, it can be said that the random state and maximum variety of samples were maintained.

Among the limitations of this research is that the scale was validated only in nursing and midwifery students. More caution should be taken in generalizing the results to other students. Therefore, it is suggested to validate this scale among other students. In addition, 78.41% of the participants in this research were undergraduate and associate degree students, which may limit the applicability of the results to graduate students. Therefore, it is suggested to validate this scale among graduate students in future research.

Our results supported the appropriate validity and reliability of the scale. This scale can help develop targeted interventions and improve student participation in e-learning by identifying the extent of student engagement in e-learning. The results of this study can also be used in designing online courses and evaluating the effectiveness of this teaching method.