1. Background

Knowledge sharing is the distribution, collaboration, and exchange of information and knowledge-related cognitive skills among individuals and groups (1). When individuals share their knowledge with others, the collective knowledge of the group or organization increases (2).

In universities, knowledge sharing takes place formally through channels such as libraries and informally through social networks such as student societies and research groups, improving the learning and efficiency of educational units (3, 4). As a communication process, knowledge sharing can help resolve organizational problems, increase efficiency, and enhance the quality of university services (5).

Universities play a critical role in the advancement of societies by producing, storing, and disseminating knowledge through innovative and applied research (6). Within universities, faculty members play an essential role in knowledge sharing as a means of enhancing organizational effectiveness (7-9). By sharing their knowledge and expertise with other members of the university community, faculty members can help ensure that the knowledge produced by the university is effectively transferred to society (10). Knowledge sharing is therefore a critical component of a university's overall mission to advance culture through the production and dissemination of knowledge.

Despite this role, far more attention has been paid to knowledge sharing in industrial companies than in universities (11). Yet knowledge sharing in universities plays a central role in the sustainable socio-economic, psycho-social, and political development of societies through education, research, and the dissemination of knowledge, so universities need a proactive approach to knowledge management to make the most of their knowledge assets (12).

Many universities, especially in developing countries, take an unsuitable approach to knowledge management, which prevents them from fostering knowledge-sharing behaviour among their faculty members (11). A common problem in universities is knowledge hoarding rather than knowledge sharing: in production organizations, knowledge sharing brings financial rewards to knowledge owners, whereas in universities, academic and teaching achievements have a greater impact on professors' financial benefits (11). One requirement for enhancing knowledge sharing is a valid scale for measuring it. However, the absence of assessment tools for evaluating the current situation poses a significant obstacle for universities, especially in developing countries, where knowledge producers may be reluctant to share their knowledge for fear of losing its advantages (12-15).

Existing knowledge-sharing questionnaires were designed to evaluate knowledge sharing in economic organizations; these scales are introduced below. Dixon's standard knowledge-sharing questionnaire (2000) has 15 questions in four dimensions, rated on a five-point Likert scale (strongly agree to strongly disagree) (16). Ryu et al.'s knowledge-sharing attitude questionnaire has 10 items on a five-point Likert scale (strongly agree to strongly disagree) (17). Ajzen's knowledge-sharing questionnaire (1991) has 10 questions on a five-point Likert scale from 1 to 5 (18). Wang and Wang's Knowledge Management Questionnaire (KMQ) has 13 questions on a seven-point Likert scale (strongly disagree to strongly agree) (19). Lin's Tacit Knowledge Sharing Scale (TEKS) has four items on a five-point Likert scale (never to always) (20). Because these tools were designed to evaluate knowledge sharing in production organizations, where the incentives for sharing differ from those in universities, they are not well suited to the university setting.

One of the most widely used scales for assessing knowledge sharing is the Knowledge Sharing Behavior Scale (KSBS) developed by Yi (21). This 28-item scale assesses the extent to which individuals engage in knowledge-sharing behavior across four dimensions: organizational communication, written contributions, communities of practice, and personal interactions. Items are rated on a five-point Likert scale ranging from zero (never) to four (always), so the total score ranges from 0 to 112, with higher scores indicating greater knowledge sharing (21). The scale was originally developed and validated in the United States to measure knowledge sharing among faculty members in relation to large commercial companies (21). It showed good psychometric properties, including high internal consistency (Cronbach's alpha = 0.94), test-retest reliability (r = 0.87), and construct validity (confirmatory factor analysis) (21). It has since been applied and validated in studies of knowledge-sharing behavior in different countries and cultures, such as China (22) and Pakistan (11, 23).

For cross-cultural adaptation, the KSBS was translated into Persian using the guidelines proposed by Beaton et al. (24). The validity and reliability of the Persian version were examined in a sample of university faculty members in Iran.

2. Objectives

This study aimed to assess the psychometric properties of the KSBS among nursing and midwifery faculty members in Iran. The assessment included face validity, content validity, construct validity, concurrent validity, internal consistency reliability, and stability reliability.

Furthermore, the study intended to achieve a cross-cultural adaptation of the KSBS to the Iranian context by considering the cultural and contextual factors that may influence knowledge-sharing behaviors among faculty members.

Ultimately, the study aimed to provide a valid and reliable instrument for measuring knowledge-sharing behavior among nursing and midwifery faculty members in Iran.

3. Methods

In this study, a research questionnaire was cross-culturally adapted. The methods section is organized as follows:

3.1. The Cultural Adaptation Process

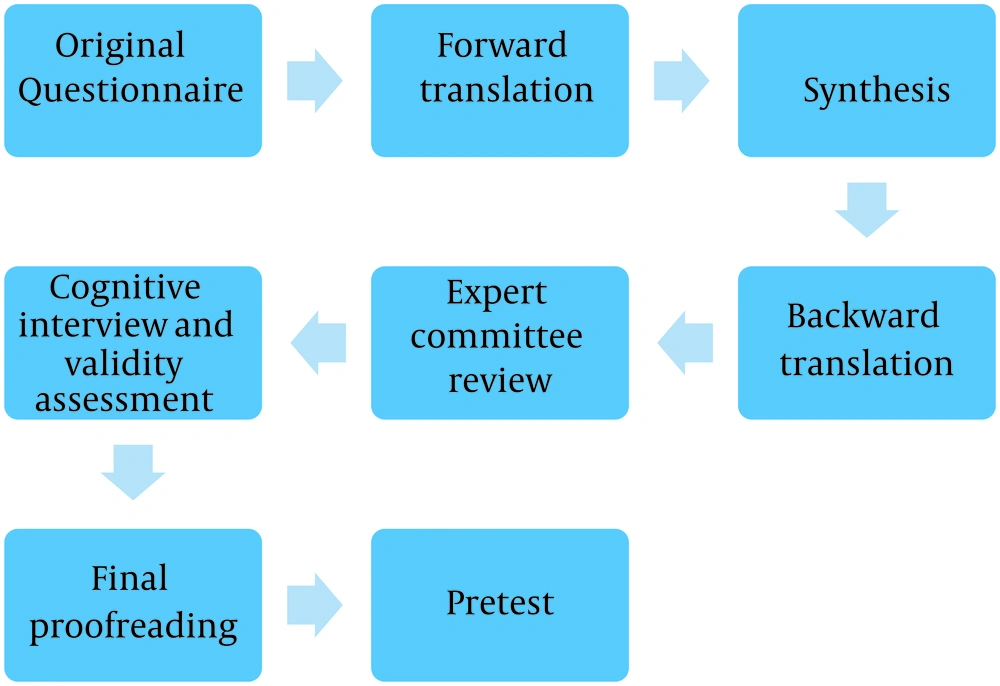

The cultural adaptation process consisted of several steps, including forward translation, synthesis, backward translation, expert committee review, cognitive interview and validity assessment, final proofreading, and pretest.

Forward translation: The main questionnaire was translated into Persian by two researchers who were proficient in both Persian and English.

Synthesis: The differences between the translations were resolved through discussion and dialogue between the two translators.

Backward translation: The agreed-upon version was given to two independent translators who were also proficient in both English and Persian for back-translation (i.e., translating from Persian to English).

Expert committee review: Meetings were held with the translators to reach a consensus on the back-translation. Then, a group of experts including specialists in information science, midwifery, nursing, and psychology compared the original and back-translated questionnaires to resolve any ambiguities (Figure 1).

Cognitive interview and validity assessment: The questionnaire was evaluated for its feasibility and comprehensibility by pilot-testing it on a sample of the target population. Ten faculty members from the nursing and midwifery school who met the inclusion criteria participated in a cognitive interview to assess its face and content validity. They were asked to rate each item on a 4-point Likert scale for simplicity, clarity, and relevance. They were also asked to provide any comments or suggestions for improvement.

Final proofreading: Any necessary modifications were made based on the results of the pilot test.

Pretest: The culturally adapted questionnaire was pretested on a sample of participants who met the inclusion and exclusion criteria.

3.2. Participants and Inclusion and Exclusion Criteria

The study population consisted of faculty members of medical universities throughout Iran, regardless of age or gender. The minimum sample size recommended for validity and reliability studies is 400 participants (25); in this study, a sample size of 700 participants was set.

For concurrent validation, Hobart et al. suggested a minimum sample size of 79 participants (26); in this study, 100 participants were allocated to this stage, based on the statistical population and their availability. According to Tabachnick et al., the minimum sample size for exploratory factor analysis (EFA) is 300 participants (27); 340 participants were allocated to allow for possible dropouts. For confirmatory factor analysis (CFA), Coleman recommended a sample size of 200 participants (28); therefore, 200 participants were allocated to this stage.

The inclusion criteria were membership of a nursing and midwifery faculty, at least one year of teaching experience, and informed consent to participate in the research. The exclusion criteria were unwillingness to continue participating and non-response to the research questionnaires.

3.3. Procedure

To select the sample, two universities were randomly selected from each of the 10 educational centers in the country. A list of faculty members at the selected universities was then prepared in an Excel file using scientometric information from the Ministry of Health's database. Participants at each university were selected with Excel's random function, and the number sampled from each university was proportional to its number of faculty members. Participants' email addresses were obtained from their CV pages or from articles in which they were corresponding authors. An online link to the questionnaire was then emailed to the participants, and written consent was obtained together with the completed questionnaires. Data were collected between June and September 2021. Table 1 presents the population and sample by university. A total of 640 questionnaires were emailed to academic staff members, of which 602 were returned (94% response rate).

| University of Medical Sciences | Population (N) | Sample (n) |
|---|---|---|
| 1. Abadan | 115 | 7 |
| 2. Shiraz | 967 | 60 |
| 3. Arak | 274 | 17 |
| 4. Qazvin | 268 | 17 |
| 5. Ardabil | 313 | 19 |
| 6. Kerman | 538 | 33 |
| 7. Urmia | 371 | 23 |
| 8. Kermanshah | 410 | 25 |
| 9. Isfahan | 941 | 58 |
| 10. Guilan | 452 | 28 |
| 11. Iran | 1029 | 63 |
| 12. Lorestan | 300 | 18 |
| 13. Birjand | 307 | 19 |
| 14. Mazandaran | 518 | 32 |
| 15. Jiroft | 105 | 6 |
| 16. Mashhad | 967 | 60 |
| 17. Shahr-e Kord | 266 | 16 |
| 18. Hormozgan | 287 | 18 |
| 19. Shahid Beheshti | 1475 | 91 |
| 20. Hamadan | 480 | 30 |
| Total | 10383 | 640 |

Table 1. Population and Sample by University
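The proportional allocation described in Section 3.3 can be sketched as follows. This is an illustration rather than the authors' procedure: it assumes each university's share of the 640 questionnaires equals the sample total multiplied by that university's share of the 10,383 faculty members, rounded to the nearest integer (the text does not state a rounding rule). With the Table 1 counts, this simple rounding reproduces the n column. The name `proportional_allocation` is hypothetical.

```python
# Population (N) of faculty members per university, taken from Table 1.
POPULATION = {
    "Abadan": 115, "Shiraz": 967, "Arak": 274, "Qazvin": 268, "Ardabil": 313,
    "Kerman": 538, "Urmia": 371, "Kermanshah": 410, "Isfahan": 941, "Guilan": 452,
    "Iran": 1029, "Lorestan": 300, "Birjand": 307, "Mazandaran": 518, "Jiroft": 105,
    "Mashhad": 967, "Shahr-e Kord": 266, "Hormozgan": 287, "Shahid Beheshti": 1475,
    "Hamadan": 480,
}
TOTAL_SAMPLE = 640


def proportional_allocation(population: dict[str, int], total_sample: int) -> dict[str, int]:
    """Allocate total_sample across strata in proportion to stratum size.

    Assumption: each share is rounded to the nearest integer. For other inputs,
    plain rounding may not sum exactly to total_sample.
    """
    grand_total = sum(population.values())
    return {name: round(total_sample * size / grand_total) for name, size in population.items()}


allocation = proportional_allocation(POPULATION, TOTAL_SAMPLE)
print(allocation["Shahid Beheshti"], sum(allocation.values()))

# Response rate: questionnaires returned divided by questionnaires sent.
sent, received = 640, 602
print(f"response rate = {received / sent:.1%}")
```

Rounding to the nearest integer is the simplest rule; a largest-remainder method would guarantee that the allocations always sum to the target, which matters when plain rounding drifts by one or two.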

3.4. Ethical Consideration

This study was conducted following the ethical guidelines for research involving human subjects. Written informed consent was obtained from all participants, and their anonymity and confidentiality were ensured.

3.5. Validity and Reliability

To evaluate the questionnaire's validity, face validity, construct validity, content validity, and concurrent validity were employed. Internal consistency and test-retest were used to evaluate reliability.

For face validity, both qualitative and quantitative methods were used. In the qualitative method, face-to-face interviews were conducted with ten participants from the target community to identify difficulty (phrases or words that were hard to understand) and ambiguity (phrases likely to be misunderstood or words lacking clear meaning), and participants' opinions on the questionnaire were sought (29).

In the quantitative method, each item was rated on a five-point importance scale ("not at all important" = 1, "slightly important" = 2, "important" = 3, "fairly important" = 4, and "very important" = 5). The questionnaire was distributed to ten participants from the target community, who rated each item. Items with an impact score below 1.5 were modified or removed. The item impact score is defined as the proportion of respondents who rated the item 4 or 5 multiplied by the item's mean importance score (29, 30).
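As a minimal sketch of the impact-score rule above, the function below computes, for a single item, the proportion of ratings of 4 or 5 multiplied by the mean rating. It assumes the proportion is expressed as a fraction between 0 and 1, which is what makes the 1.5 cut-off meaningful on a 1 to 5 scale. The function name and the ratings are hypothetical, not study data.

```python
from statistics import mean


def impact_score(ratings: list[int]) -> float:
    """Item impact score = proportion of ratings of 4 or 5 x mean importance.

    Ratings are on the five-point importance scale (1 = not at all important,
    5 = very important); the proportion is a fraction in [0, 1].
    """
    if not ratings:
        raise ValueError("ratings must not be empty")
    frequency = sum(1 for r in ratings if r >= 4) / len(ratings)
    return frequency * mean(ratings)


# Hypothetical ratings from ten respondents for one item (not study data).
item_ratings = [5, 4, 4, 3, 5, 4, 2, 5, 4, 3]
score = impact_score(item_ratings)
print(round(score, 2), "keep" if score >= 1.5 else "revise or remove")
```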

Content validity was evaluated by ten experts using the content validity ratio (CVR) and the content validity index (CVI). For the CVR, each item was rated on a three-point scale: "necessary," "useful but not necessary," and "unnecessary." According to the Lawshe table, a CVR above 0.62 was considered acceptable for ten experts (31). For the CVI, each item was rated by 10 experts for its relevance to the construct of knowledge-sharing behavior on a four-point scale from 1 (not relevant) to 4 (highly relevant). The item-level content validity index (I-CVI) was calculated as the number of experts rating the item 3 or 4 divided by the total number of experts. The scale-level content validity index (S-CVI/UA) was calculated as the number of items with an I-CVI greater than 0.78 divided by the total number of items, and the S-CVI/Ave as the mean I-CVI across all items. According to the literature, a scale with excellent content validity should have I-CVIs of 0.78 or higher and an S-CVI/UA and S-CVI/Ave of at least 0.8 and 0.9, respectively (32, 33).
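The CVR and CVI calculations described above can be sketched in Python. The function names are hypothetical and the example ratings are invented. Because the literature also uses a stricter universal-agreement definition of S-CVI/UA (the proportion of items with I-CVI = 1.0), the sketch reports it alongside the definition used in this section so the two can be compared.

```python
def cvr(n_essential: int, n_experts: int) -> float:
    """Lawshe's content validity ratio: (n_e - N/2) / (N/2)."""
    half = n_experts / 2
    return (n_essential - half) / half


def i_cvi(relevance_ratings: list[int]) -> float:
    """Proportion of experts rating an item 3 or 4 on the 4-point relevance scale."""
    return sum(1 for r in relevance_ratings if r >= 3) / len(relevance_ratings)


def scale_cvi(ratings_by_item: list[list[int]], cutoff: float = 0.78) -> dict[str, float]:
    """Scale-level content validity indices from per-item expert ratings."""
    item_cvis = [i_cvi(r) for r in ratings_by_item]
    n_items = len(item_cvis)
    return {
        # Definition used in this section: share of items with I-CVI above the cutoff.
        "s_cvi_ua_cutoff": sum(1 for v in item_cvis if v > cutoff) / n_items,
        # Stricter definition: share of items rated relevant by every expert.
        "s_cvi_ua_universal": sum(1 for v in item_cvis if v == 1.0) / n_items,
        "s_cvi_ave": sum(item_cvis) / n_items,
    }


# Hypothetical relevance ratings from ten experts for three items (not study data).
example = [
    [4, 4, 3, 4, 4, 3, 4, 4, 4, 3],  # I-CVI = 1.0
    [4, 3, 4, 2, 4, 4, 3, 4, 4, 4],  # I-CVI = 0.9
    [3, 4, 2, 4, 4, 3, 2, 4, 3, 4],  # I-CVI = 0.8
]
print(cvr(9, 10) > 0.62, scale_cvi(example))
```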

Construct validity was examined with EFA. Sampling adequacy was assessed with the Kaiser-Meyer-Olkin (KMO) measure and Bartlett's test of sphericity; a KMO value above 0.7 and a Bartlett's test significant at P < 0.05 indicated that the data were adequate for EFA (34). Factors were extracted by principal component analysis (PCA), with a minimum factor loading of 0.4 (35). CFA was then used to confirm the extracted dimensions. Overall model fit was assessed with the standardized root mean square residual (SRMR), goodness of fit index (GFI), comparative fit index (CFI), adjusted goodness of fit index (AGFI), root mean square error of approximation (RMSEA), and the ratio of chi-square to degrees of freedom (CMIN/DF). A model was considered to fit well if SRMR < 0.1, GFI > 0.9, CFI > 0.9, AGFI > 0.9, RMSEA < 0.08, and CMIN/DF < 3 (36).
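A sketch of how the fit criteria above could be applied. RMSEA and CFI are computed from chi-square values using common textbook formulas (RMSEA here uses N - 1 in the denominator; some programs use N), while SRMR, GFI, and AGFI are assumed to be read from the SEM software output. The function names, dictionary keys, and example values are hypothetical and are not the study's LISREL output.

```python
import math

# Cut-offs listed in this section (dictionary keys are illustrative names).
CUTOFFS = {
    "SRMR": lambda v: v < 0.1,
    "GFI": lambda v: v > 0.9,
    "CFI": lambda v: v > 0.9,
    "AGFI": lambda v: v > 0.9,
    "RMSEA": lambda v: v < 0.08,
    "CMIN/DF": lambda v: v < 3,
}


def rmsea(chi2: float, df: int, n: int) -> float:
    """Root mean square error of approximation (N - 1 variant)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model: float, df_model: int, chi2_null: float, df_null: int) -> float:
    """Comparative fit index relative to the independence (null) model."""
    d_model = max(chi2_model - df_model, 0.0)
    d_null = max(chi2_null - df_null, d_model)
    return 1.0 if d_null == 0 else 1.0 - d_model / d_null


def check_fit(indices: dict[str, float]) -> dict[str, bool]:
    """For each reported index, whether it meets its cut-off."""
    return {name: CUTOFFS[name](value) for name, value in indices.items()}


# Hypothetical chi-square values for illustration only.
chi2_m, df_m, n = 600.0, 344, 186
chi2_0, df_0 = 4500.0, 378
indices = {
    "RMSEA": rmsea(chi2_m, df_m, n),
    "CFI": cfi(chi2_m, df_m, chi2_0, df_0),
    "CMIN/DF": chi2_m / df_m,
}
print(check_fit(indices))
```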

To evaluate concurrent validity, the correlations between scores on the research scale and scores on the KMQ and TEKS were assessed.

The KMQ was designed by Wang and Wang. It has 13 questions rated on a seven-point Likert scale from 1 (totally disagree) to 7 (totally agree) and two dimensions: tacit knowledge sharing (items 1 - 6) and explicit knowledge sharing (items 7 - 13). Wang and Wang confirmed its convergent, divergent, and construct validity, with Cronbach's α values for the factors ranging from 0.89 to 0.97 (19). Its validity and reliability have also been confirmed in Iran (37, 38).

The TEKS was developed by Lin as a brief four-item scale, drawn from the existing literature, to measure how often employees share their unspoken and implicit knowledge with co-workers in the workplace. The items are rated on a five-point scale from 1 (never) to 5 (always). All items are positively worded, and higher scores indicate that employees more willingly share their tacit knowledge with co-workers (20). The items showed high internal consistency, with a Cronbach's alpha of 0.81 (39); in this study, Cronbach's alpha was 0.89.

The KSBS was developed by Yi (21). It has 28 items rated on a five-point Likert scale (never = 0, rarely = 1, sometimes = 2, often = 3, always = 4), giving a total score from 0 to 112; the closer the score is to 112, the better the status of knowledge sharing. The items cover four dimensions: written contributions (five items, items 1 - 5, coefficient alpha (CA) = 0.458), organizational communication (eight items, items 6 - 13, CA = 0.905), personal interactions (eight items, items 14 - 21, CA = 0.723), and communities of practice (seven items, items 22 - 28, CA = 0.934). The scale is a valid and reliable instrument for assessing knowledge-sharing behaviour among academics (11, 21-23, 40).
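Scoring the KSBS as described above can be sketched as follows. The sketch assumes responses arrive as the verbal labels and that each dimension score is the simple sum of its items; the text states only the 0 to 112 range of the total. The names `score_ksbs`, `KSBS_DIMENSIONS`, and `RESPONSE_CODES` are hypothetical.

```python
# Item ranges per dimension as listed above (1-based item numbers; range end is exclusive).
KSBS_DIMENSIONS = {
    "written_contributions": range(1, 6),          # items 1-5
    "organizational_communication": range(6, 14),  # items 6-13
    "personal_interactions": range(14, 22),        # items 14-21
    "communities_of_practice": range(22, 29),      # items 22-28
}
RESPONSE_CODES = {"never": 0, "rarely": 1, "sometimes": 2, "often": 3, "always": 4}


def score_ksbs(responses: list[str]) -> dict[str, int]:
    """Convert 28 verbal responses to 0-4 codes and sum them per dimension and overall."""
    if len(responses) != 28:
        raise ValueError(f"expected 28 responses, got {len(responses)}")
    codes = [RESPONSE_CODES[r.lower()] for r in responses]
    scores = {
        name: sum(codes[i - 1] for i in items)
        for name, items in KSBS_DIMENSIONS.items()
    }
    scores["total"] = sum(codes)  # ranges from 0 to 112
    return scores


print(score_ksbs(["sometimes"] * 28))
```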

Internal consistency was assessed with Cronbach's alpha coefficients calculated from the responses of participants in the CFA stage, with values above 0.7 indicating adequate internal consistency (41); Cronbach's alpha if item deleted was also computed. Reliability was further assessed with the test-retest method (readministering the questionnaire to 64 randomly selected previous respondents) and the split-half method (using the data collected in the CFA stage).
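The internal-consistency and split-half statistics named above can be sketched with NumPy. Cronbach's alpha uses the standard variance formula. For the split-half method, the sketch assumes the first and second 14 items form the two halves and adds the Spearman-Brown correction, a standard step that the text does not mention. Function names are hypothetical, and the simulated responses are not study data.

```python
import numpy as np


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for a respondents x items score matrix."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_var_sum = items.var(axis=0, ddof=1).sum()   # sum of item variances
    total_var = items.sum(axis=1).var(ddof=1)        # variance of total scores
    return k / (k - 1) * (1 - item_var_sum / total_var)


def alpha_if_item_deleted(items: np.ndarray) -> list[float]:
    """Alpha recomputed with each item removed in turn."""
    items = np.asarray(items, dtype=float)
    return [cronbach_alpha(np.delete(items, j, axis=1)) for j in range(items.shape[1])]


def split_half(items: np.ndarray) -> tuple[float, float]:
    """Correlation of first-half and second-half totals, and its Spearman-Brown correction."""
    items = np.asarray(items, dtype=float)
    half = items.shape[1] // 2
    first = items[:, :half].sum(axis=1)
    second = items[:, half:].sum(axis=1)
    r = np.corrcoef(first, second)[0, 1]
    return r, 2 * r / (1 + r)


# Simulated data: one latent trait plus noise drives all 28 items (broadcast over columns).
rng = np.random.default_rng(0)
trait = rng.normal(size=(200, 1))
scores = trait + rng.normal(size=(200, 28))
print(round(cronbach_alpha(scores), 2))
r_halves, spearman_brown = split_half(scores)
```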

3.6. Statistical Analysis

Descriptive statistics (frequencies and percentages) were used to summarize the data. Following Lawshe, items were accepted if their CVR, based on the opinions of 10 experts, exceeded 0.62 (42). A CVI of 0.79 or higher was considered acceptable (43). To investigate construct validity and the factor structure of the scale, PCA with varimax rotation was performed, and factors with eigenvalues greater than 1.5 were regarded as major factors (44, 45). EFA and Pearson correlation coefficients were computed in SPSS version 21, and CFA was performed in LISREL version 8.8. The internal consistency of the KSBS was evaluated with Cronbach's α, with values > 0.7 regarded as acceptable (46, 47). The significance level was set at 0.01.
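PCA with varimax rotation, as described above, can be sketched in NumPy. This is an illustrative implementation, not SPSS's: it rotates the unnormalized loadings, whereas SPSS applies Kaiser normalization by default, so rotated loadings may differ slightly. The eigenvalue threshold is a parameter (set to 1.5 here, as in this section); function names and the simulated two-factor data are hypothetical.

```python
import numpy as np


def pca_loadings(data: np.ndarray, eigen_threshold: float = 1.5) -> tuple[np.ndarray, np.ndarray]:
    """Unrotated component loadings from the correlation matrix.

    Keeps components whose eigenvalue exceeds eigen_threshold.
    """
    corr = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)        # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]              # sort components by variance, descending
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    keep = eigvals > eigen_threshold
    loadings = eigvecs[:, keep] * np.sqrt(eigvals[keep])
    return loadings, eigvals


def varimax(loadings: np.ndarray, max_iter: int = 100, tol: float = 1e-6) -> np.ndarray:
    """Orthogonal varimax rotation (SVD-based iteration, no Kaiser normalization)."""
    p, k = loadings.shape
    rotation = np.eye(k)
    objective = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        target = rotated**3 - rotated @ np.diag((rotated**2).sum(axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        new_objective = s.sum()
        if objective != 0 and new_objective < objective * (1 + tol):
            break
        objective = new_objective
    return loadings @ rotation


# Simulated data: items 1-4 driven by factor 1, items 5-8 by factor 2.
rng = np.random.default_rng(7)
latent = rng.normal(size=(300, 2))
pattern = np.repeat(np.eye(2), 4, axis=0)
data = latent @ pattern.T + rng.normal(size=(300, 8))
unrotated, eigenvalues = pca_loadings(data, eigen_threshold=1.5)
rotated = varimax(unrotated)
print(np.round(rotated, 2))
```

Because the rotation is orthogonal, each item's communality (row sum of squared loadings) is unchanged; varimax only redistributes variance across components to approach simple structure.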

4. Results

4.1. Cultural Adaptation Process

The process of cross-cultural adaptation of the questionnaire involved several steps. Two bilingual translators translated the questionnaire into Persian and resolved the differences. Two other bilingual translators back-translated the questionnaire into English. The expert committee reviewed the original and back-translated versions and suggested minor modifications. The face and content validity of the questionnaire were assessed through a cognitive interview with a sample of the target population. The participants rated each item on a 4-point scale for simplicity, clarity, and relevance and expressed satisfaction with the questionnaire. The results showed good face validity, with all items scoring well on each criterion. Any final changes were incorporated into the questionnaire, which was then tested with participants meeting the study criteria. The test showed that it took an average of 15 minutes to complete the questionnaire, with no difficulties reported. As a result, the rigorous process of translation, expert review, cognitive interview, and testing produced a Persian version of the questionnaire that was culturally adapted for use in Iran. The final Persian version of the questionnaire is presented in Appendix 1.

4.2. Participants and Inclusion and Exclusion Criteria

The demographic characteristics of the participants are presented in Table 2. The results showed that 23 (3.82%) were instructors, 325 (53.99%) were assistant professors, 173 (28.74%) were associate professors, and 91 (15.12%) were professors. In addition, 235 (39.04%) were women, and 377 (62.62%) were men. Also, 246 (40.86%) cases had work experience of fewer than 15 years, and 366 (60.80%) had over 15 years. 260 (38.86%) were women, and 409 (61.14%) were men. 340 (56.48%) people were under 45 years old, and 262 (43.52%) people were over 45 years old.

| Study Stage | Age < 45 y | Age > 45 y | Female | Male | Instructors | Assistant Professors | Associate Professors | Professors | Work Experience < 15 y | Work Experience > 15 y | Total | Missing |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Face validity | 11 (57.89) | 8 (42.11) | 7 (36.84) | 12 (63.16) | 1 (5.26) | 10 (52.63) | 5 (26.32) | 3 (15.79) | 8 (42.11) | 11 (57.89) | 19 | 1 |
| EFA | 170 (54.14) | 134 (42.68) | 120 (38.22) | 194 (61.78) | 13 (4.14) | 167 (53.18) | 86 (27.39) | 48 (15.29) | 125 (39.81) | 189 (60.19) | 304 | 26 |
| CFA | 106 (56.99) | 80 (43.01) | 72 (38.71) | 114 (61.29) | 6 (3.23) | 99 (53.23) | 54 (29.03) | 27 (14.52) | 75 (40.32) | 111 (59.68) | 186 | 14 |
| Concurrent validity | 53 (56.99) | 40 (43.01) | 36 (38.71) | 57 (61.29) | 3 (3.23) | 49 (52.69) | 28 (30.11) | 13 (13.98) | 38 (40.86) | 55 (59.14) | 93 | 7 |
| Total | 340 (56.48) | 262 (43.52) | 235 (39.04) | 377 (62.62) | 23 (3.82) | 325 (53.99) | 173 (28.74) | 91 (15.12) | 246 (40.86) | 366 (60.80) | 602 | 48 |

Table 2. Demographic Characteristics of the Participants, n (%)

4.3. Validity and Reliability

The validity and reliability of the KSBS questionnaire were assessed using various methods. The results are as follows:

Content validity: The content validity of the questionnaire was evaluated by calculating the CVR and the CVI for each item based on the opinions of 10 experts. The results showed that the CVR for the 28-item scale ranged from 73% to 100%, and all items had I-CVI values greater than 0.78, indicating that they were relevant to the construct of knowledge-sharing behavior. The S-CVI/UA for the whole scale was 1.00, meaning that all items met the criterion of having an I-CVI greater than 0.78. The S-CVI/Ave for the entire scale was 0.93, higher than the recommended value of 0.9. These values indicated that the questionnaire had good content validity.

Construct validity: The results of EFA showed that the KMO measure of sampling adequacy was 0.93, and the Bartlett's test of sphericity was significant (chi-square = 4522.76, df = 378, P < 0.001), indicating that the data was suitable for factor analysis. The factor extraction showed that all items had factor loadings greater than 0.4, and that four factors emerged from the analysis, explaining 57.07% of the total variance. The first factor explains 16.47%, the second factor 15.97%, the third factor 13.99% and the fourth factor 10.64% of this variance.
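The KMO measure and Bartlett's test reported above can be sketched with NumPy and SciPy using the standard formulas: Bartlett's statistic is derived from the log-determinant of the correlation matrix, and the overall KMO compares squared correlations with squared partial correlations. For 28 items the degrees of freedom are 28 × 27 / 2 = 378, matching the reported df. Function names and the simulated data are hypothetical, and the output will not reproduce the study's values.

```python
import numpy as np
from scipy.stats import chi2


def bartlett_sphericity(data: np.ndarray) -> tuple[float, int, float]:
    """Bartlett's test that the population correlation matrix is an identity matrix."""
    data = np.asarray(data, dtype=float)
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    _, logdet = np.linalg.slogdet(corr)            # log|R|, numerically stable
    statistic = -(n - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return statistic, df, chi2.sf(statistic, df)


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    inv = np.linalg.inv(corr)
    d = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(d, d)                # partial correlations
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    q2 = np.sum(partial[off_diag] ** 2)
    return r2 / (r2 + q2)


# Simulated responses: 300 respondents, 28 items driven by 4 latent factors (7 items each).
rng = np.random.default_rng(42)
factors = rng.normal(size=(300, 4))
pattern = np.repeat(np.eye(4), 7, axis=0)
data = factors @ pattern.T + rng.normal(size=(300, 28))

stat, df, p_value = bartlett_sphericity(data)
print(f"KMO = {kmo(data):.2f}, chi-square = {stat:.1f}, df = {df}, P = {p_value:.3g}")
```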

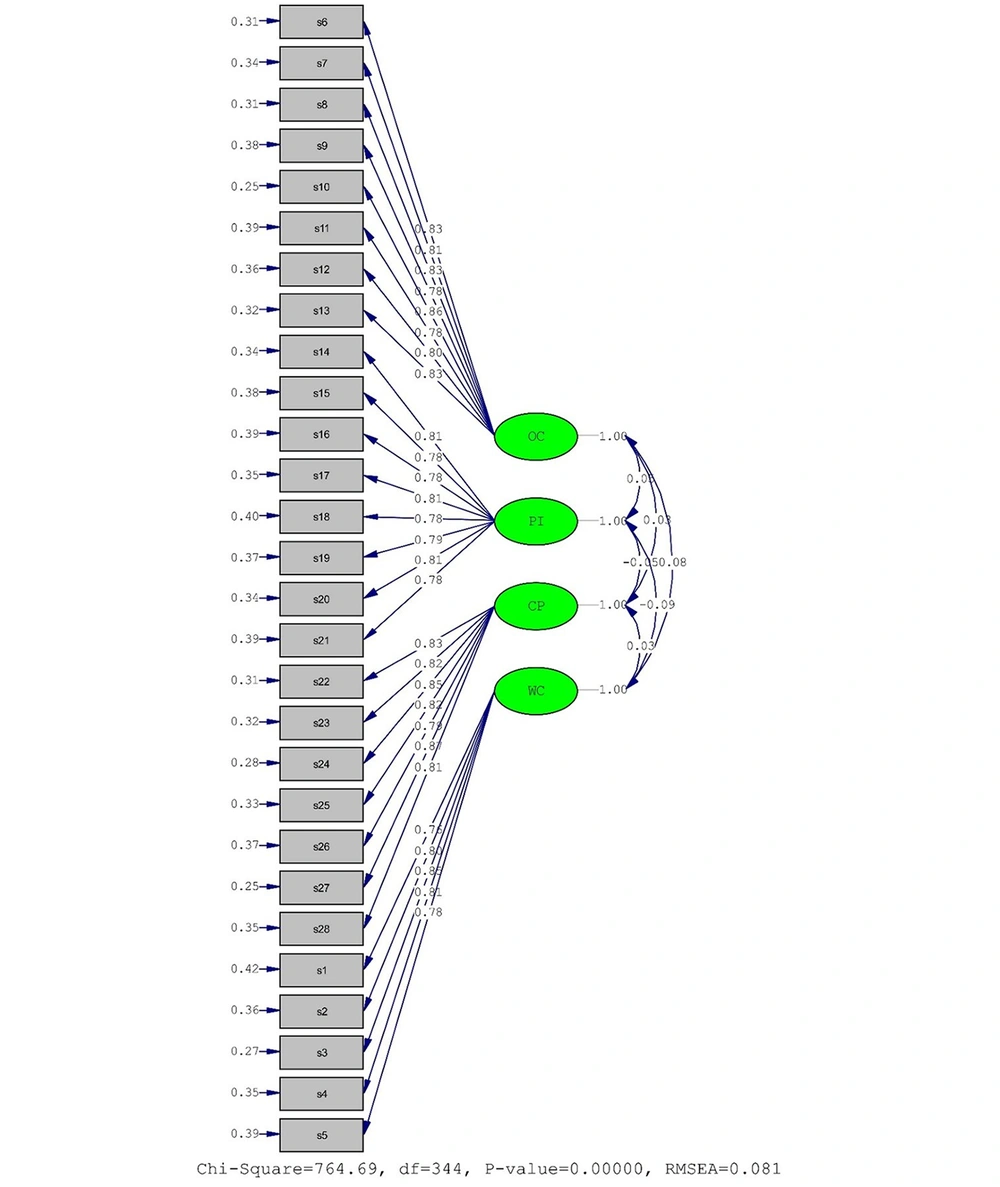

The first factor is organizational communications (items 6 - 13), the second factor is personal interactions (items 14 - 21), the third factor is communities of practice (items 22 - 28), and the fourth factor is written contributions (items 1 - 5) (Table 3). The results of CFA showed that all goodness-of-fit indices met their respective criteria, indicating that the four-factor model fitted the data well. Figure 2 shows the standardized path diagram of the CFA model.

| Items | Organizational Communication | Personal Interactions | Communities of Practice | Written Contributions |
|---|---|---|---|---|
| 1 | 0.27 | 0.16 | 0.28 | 0.63 |
| 2 | 0.23 | 0.15 | 0.19 | 0.68 |
| 3 | 0.19 | 0.17 | 0.16 | 0.72 |
| 4 | 0.23 | 0.12 | 0.22 | 0.68 |
| 5 | 0.26 | 0.13 | 0.23 | 0.73 |
| 6 | 0.70 | 0.21 | 0.16 | 0.13 |
| 7 | 0.67 | 0.16 | 0.13 | 0.16 |
| 8 | 0.72 | 0.18 | 0.22 | 0.19 |
| 9 | 0.66 | 0.29 | 0.12 | 0.20 |
| 10 | 0.67 | 0.21 | 0.11 | 0.20 |
| 11 | 0.70 | 0.22 | 0.13 | 0.19 |
| 12 | 0.75 | 0.18 | 0.16 | 0.15 |
| 13 | 0.67 | 0.22 | 0.10 | 0.20 |
| 14 | 0.20 | 0.71 | 0.16 | 0.09 |
| 15 | 0.26 | 0.65 | 0.20 | 0.06 |
| 16 | 0.20 | 0.64 | 0.14 | 0.17 |
| 17 | 0.14 | 0.70 | 0.15 | 0.18 |
| 18 | 0.23 | 0.74 | 0.17 | 0.08 |
| 19 | 0.22 | 0.67 | 0.22 | 0.17 |
| 20 | 0.20 | 0.67 | 0.15 | 0.07 |
| 21 | 0.15 | 0.70 | 0.19 | 0.12 |
| 22 | 0.16 | 0.19 | 0.66 | 0.05 |
| 23 | 0.17 | 0.19 | 0.62 | 0.23 |
| 24 | 0.11 | 0.11 | 0.73 | 0.11 |
| 25 | 0.06 | 0.20 | 0.65 | 0.22 |
| 26 | 0.15 | 0.20 | 0.68 | 0.20 |
| 27 | 0.12 | 0.18 | 0.69 | 0.21 |
| 28 | 0.21 | 0.17 | 0.75 | 0.13 |

Table 3. Rotated Component Matrix

Concurrent validity: The correlation coefficient between the KSBS and the KMQ was 0.72 (P < 0.001), and between the KSBS and the TEKS was 0.87 (P < 0.001), indicating good concurrent validity.

Reliability: Cronbach's alpha was 0.84 for the whole scale and 0.90 or higher for each factor, indicating high internal consistency. Cronbach's alpha was 0.86 for the first half (14 items) and 0.85 for the second half (14 items) of the scale, and the correlation between the two halves was 0.16 (Table 4). The test-retest reliability in a subsample of 64 participants who completed the questionnaire twice, two weeks apart, was 0.74, indicating good temporal stability.

| Domains/Scales | Number of Items | Mean ± SD | Cronbach's Alpha |
|---|---|---|---|
| Total scale | 28 | 55.70 ± 16.75 | 0.84 |
| Organizational communications | 8 | 15.17 ± 9.46 | 0.94 |
| Personal interactions | 8 | 16.17 ± 8.84 | 0.93 |
| Communities of practice | 7 | 14.25 ± 8.37 | 0.94 |
| Written contributions | 5 | 10.10 ± 5.86 | 0.90 |
| Half one | 14 | 28.44 ± 11.15 | 0.86 |
| Half two | 14 | 27.25 ± 11.56 | 0.85 |
| Correlation between half one and half two | | | r = 0.16 |

Table 4. Mean Scores, Standard Deviations, and Internal Consistency Reliability (Measured by Cronbach α) for the Scale and Factors Obtained

5. Discussion

The present study provided comprehensive information on the validity and reliability of the KSBS among faculty members of medical universities. The analyses included a range of validity tests such as face, concurrent, construct, and content validity, as well as reliability calculations.

The scale showed good face validity, consistent with the findings of Yi (21) and Ramayah et al. (11). One explanation is that the items had already been designed and localized by the research team and several expert faculty members before face validity was assessed; accordingly, no items were deleted at this stage.

The CVR for the 28 items ranged from 73% to 100%, so the experts confirmed the content validity of all items (48). The I-CVI values (all greater than 0.78) were also acceptable, supporting the content validity of the scale. These findings are in line with those of Yi (21) and Ramayah et al. (11) and indicate that the items are relevant to, and representative of, the knowledge-sharing behavior construct. A possible explanation is that the questionnaire was designed to assess knowledge-sharing behavior among faculty members, which matches the study population of this research. The high educational level of the study population may also have contributed to the content validity of the scale.

Factor analysis showed that the KSBS is a 28-item tool with four factors: written contributions, organizational communication, personal interactions, and communities of practice. This structure is consistent with the four-factor model proposed by Yi (21) and with the findings of Ramayah et al. (11), supporting the generalizability of the scale among faculty members in different countries and disciplines.

The items of the written contributions factor were consistent with those for modes of sharing tacit and explicit knowledge, intention (Islam), tendency to share tacit organizational knowledge (Holste and Fields (49)), Chennamaneni's (50) scale, and sharing explicit knowledge (Wang and Wang (19)) (49-53). The items of the organizational communication factor were consistent with those for modes of sharing tacit and explicit knowledge, performance, intrinsic motivation (Islam), tendency to share tacit organizational knowledge (Holste and Fields (49)), Karamitri et al.'s (53) scale, and sharing tacit knowledge (Wang and Wang (19)) (49-53). The items of the personal interactions factor were consistent with those for modes of sharing tacit and explicit knowledge, intention, intrinsic motivation (Islam), tendency to share tacit organizational knowledge, tendency to exploit tacit organizational knowledge (Holste and Fields (49)), Chennamaneni's (50) scale, and sharing tacit knowledge (Wang and Wang (19)) (49-53). The items of the communities of practice factor were consistent with those for modes of sharing tacit and explicit knowledge (Rehman), intention (Islam), tendency to share tacit organizational knowledge (Holste and Fields (49)), Karamitri et al.'s (53) scale, and expected reciprocal relationship (Bock et al. (54)) (49, 51, 52).

The concurrent validity analysis showed positive correlations between the KSBS and both the KMQ (r = 0.72) and the TEKS (r = 0.87). The correlation was lower for the KMQ, possibly because it measures only some dimensions of knowledge-sharing behavior. These results are similar to the correlations reported by Yi (21), suggesting that the Persian version retains the criterion validity of the original KSBS.

The Cronbach's alpha for the KSBS was 0.84, indicating desirable internal consistency (34), and the test-retest correlation coefficient of 0.74 indicates that the KSBS is stable over time (36). These findings are consistent with those of Yi (21) and Ramayah et al. (11) and support the Persian version of the scale as a reliable measure of knowledge-sharing behavior.

The KSBS is a comprehensive scale for the university community and is more specific than many other scales, in which knowledge sharing is only one dimension. Our study population consisted of faculty members from Iranian universities. The tool has both practical and research applications: identifying faculty members' knowledge-sharing behavior can help in designing targeted interventions to improve it, and researchers can use the scale to assess knowledge-sharing behavior among faculty members.

The main strength of this study was that the scale was validated in a university community rather than an administrative one. In addition, samples were drawn from across the country, which increases generalizability. One limitation was that validation was conducted only in medical universities; validation in other universities affiliated with the Ministry of Science is therefore suggested, as is a study comparing knowledge-sharing behavior between professors at medical universities and at Ministry of Science universities. Another limitation was that divergent and convergent validity were not assessed.

Overall, this study's results demonstrate a high level of agreement with the findings of Yi's (21) original study, indicating that the Persian version of the KSBS is a valid and reliable measure for assessing knowledge sharing behavior among faculty members. Further validation in other university settings and the inclusion of additional validity measures would strengthen the evidence supporting the use of the KSBS in various contexts.

5.1. Conclusions

Psychometric results showed that the KSBS is a valid and reliable tool for measuring knowledge-sharing behaviour among Iranian faculty members. Moreover, educational groups can use the scale's four factors to target and enhance the knowledge-sharing behaviour of their members.