1. Background

About 500 million people worldwide live with some form of disability, 80% of whom live in developing countries. About 15% of the population of each country is estimated to have a disability, and in Iran this share is higher because of the country's recent war. Of these people, 65% are male and 35% female. In addition to 2,200 governmental health rehabilitation centers in Iran, private institutions also provide services to 100,000 disabled people (1). According to 2016 statistics, about 5,000 people in Iran become disabled each year as a result of spinal cord injury (2). About 4% of these patients have severe disabilities and require highly specialized and costly care. This care not only places a financial burden on the family and society, but also causes the patient considerable stress because of his or her dependence on other people.

Meanwhile, with ever-increasing advances in computer science, everyday life today is very difficult without interacting with computer systems. Nevertheless, people with moderate or severe disabilities, especially those with spinal cord injury, have great difficulty interacting with these systems, because their needs are generally not addressed in the design of computer user interfaces. The purpose of this research is to develop a user-friendly interface that improves how people with disabilities interact with computer systems and solves some of their everyday problems. With an eye-command system, they can meet basic needs such as changing the angle of their bed, controlling ambient light and temperature, sending alarms, and communicating with a computer. As a result, the need for continuous care decreases, and being able to interact with technology has a strong positive psychological effect on these individuals.

A facial recognition system is a powerful technology for identifying and verifying a person from a digital image or video frame. Based on artificial intelligence and deep learning algorithms, such systems can detect individuals' faces with high precision. A fundamental concept in facial recognition is “face detection”: the first step of face recognition, which locates the face in an input image or frame. Face detection methods are divided into four categories (3): knowledge-based methods, feature-invariant approaches, template matching methods, and appearance-based methods. A common approach to face detection is to place a rectangular window on the image and search it for predefined features such as the eyes, nose, eyebrows, and mouth; the algorithm thereby classifies image regions into two groups, “face” and “non-face”. In artificial intelligence systems, training and execution time, the amount of training, and the error rate are very important.

Eye tracking is a technique in which the position of the eyes is used to determine a person's gaze direction at a given time, as well as the sequence in which the eyes move. When used for input, it is also known as a gaze-based interface. Holmqvist et al. (4) compiled the available information and techniques for tracking eye movements. Hsu et al. (5) introduced a face detection algorithm for color images under varying lighting and against complex backgrounds, based on a lighting compensation technique and a nonlinear transformation to the YCbCr color space. They first extracted the skin-colored regions and then detected faces by locating the eyes and lips. Chin et al. (6) conceptualized, implemented, and tested a system that allowed computer cursor control using facial muscles and gaze. The inputs of their system consisted of electromyogram signals from facial muscles and the point-of-gaze coordinates produced by an eye-gaze tracking system. Nishimura et al. (7) designed an eye interface for physically impaired people based on genetic-algorithm eye tracking. De Santis and Iacoviello (8) presented a non-invasive eye tracking procedure for real-time detection of the eyes in a sequence of frames. Udayashankar et al. (9) designed a real-time interactive system that helps a paralyzed person control appliances by playing pre-recorded audio messages, triggered by a predefined number of eye blinks. Hennessey and Lawrence (10) used multiple corneal reflections and point-pattern matching to correct the scaling caused by head displacement and to make the system more robust to corneal reflection distortion, thereby improving point-of-gaze estimation accuracy. Cho et al. (11) proposed a long-range binocular eye gaze tracking system that works at distances of 1.5 to 2.5 m while allowing head displacement in depth; they used two wide-angle cameras to obtain the 3D position of the user's eyes. Eid et al. (12) designed a new gaze-controlled wheelchair that can be navigated by a patient with ALS. Kumar et al. (13) developed a novel eye tracking system, called SmartEye, based on eye fixation, smooth pursuit, and blinking in response to both static and dynamic visual stimuli. Meena et al. (14) proposed a novel method for optimizing the positions of items in gaze-controlled, tree-based menu selection systems for a Hindi virtual keyboard, considering a combination of letter frequency and command selection time. Nouri et al. (15) designed a simple eye tracking system for people with spinal cord injuries.

The Internet of Things (IoT) is the extension of internet connectivity to physical devices. These devices can communicate and interact with one another over the internet and can be remotely monitored and controlled. IoT devices are part of the larger concept of home automation, which can include lighting, heating and air conditioning, media, and security systems. The IoT revolution is reshaping modern healthcare, with promising economic, technological, and social prospects. IoT devices can be used to enable remote health monitoring and emergency notification systems. Moreover, IoT-based systems are patient-centered, meaning that they can adapt flexibly to the patient's medical condition. Riazul Islam et al. (16) surveyed advances in IoT-based healthcare technologies and reviewed state-of-the-art network platforms, applications, and industrial trends in IoT-based healthcare systems. Domingo (17) considered different application scenarios to illustrate how the components of the IoT interact for people with disabilities. Sethi and Sarangi (18) proposed a novel taxonomy of IoT technologies and described some of the most important applications with the potential to make a big difference in human life, especially for people with disabilities and the elderly. Stojkoska and Trivodaliev (19) proposed a framework that incorporates components from different IoT architectures and frameworks in order to efficiently integrate smart home objects into a cloud-centric IoT-based solution.

2. Objectives

In this research, the goal is to connect a patient with a lumbar lesion to a command system through eye tracking within an IoT-based solution. For this purpose, the “step” function in MATLAB was used to detect the eye region, and a statistical weighted averaging method was used to reduce errors. The command is then sent to the smart home unit through a purpose-designed processor board and an IoT-based communication platform. In this way, the patient can issue a command by looking at a specific option on the command pane and then looking at the confirmation point.

3. Methods

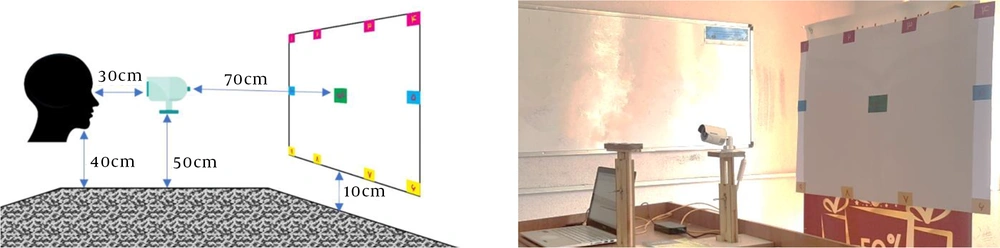

Care must be taken in how people with spinal cord injury are handled during testing, since the appropriate head angle and height depend on the type and extent of the patient's injury. Figure 1 shows the test setup and the position of the patient's head in front of the camera and the selection pane. Two types of adjustable wooden fixtures were used, one to support the patient during testing and one to mount the camera, so that verifiable data could be collected; the camera angle could also be adjusted in all directions. An infrared (IR) light ring around the camera provides IR imaging in dark environments. The selection (command) pane designed for the patient has eleven options: ten for issuing commands and one for confirming the selected command. After the patient looks at an option and the command is detected, the system announces the detected command, and the patient can confirm it by looking at the confirmation option in the middle of the pane.

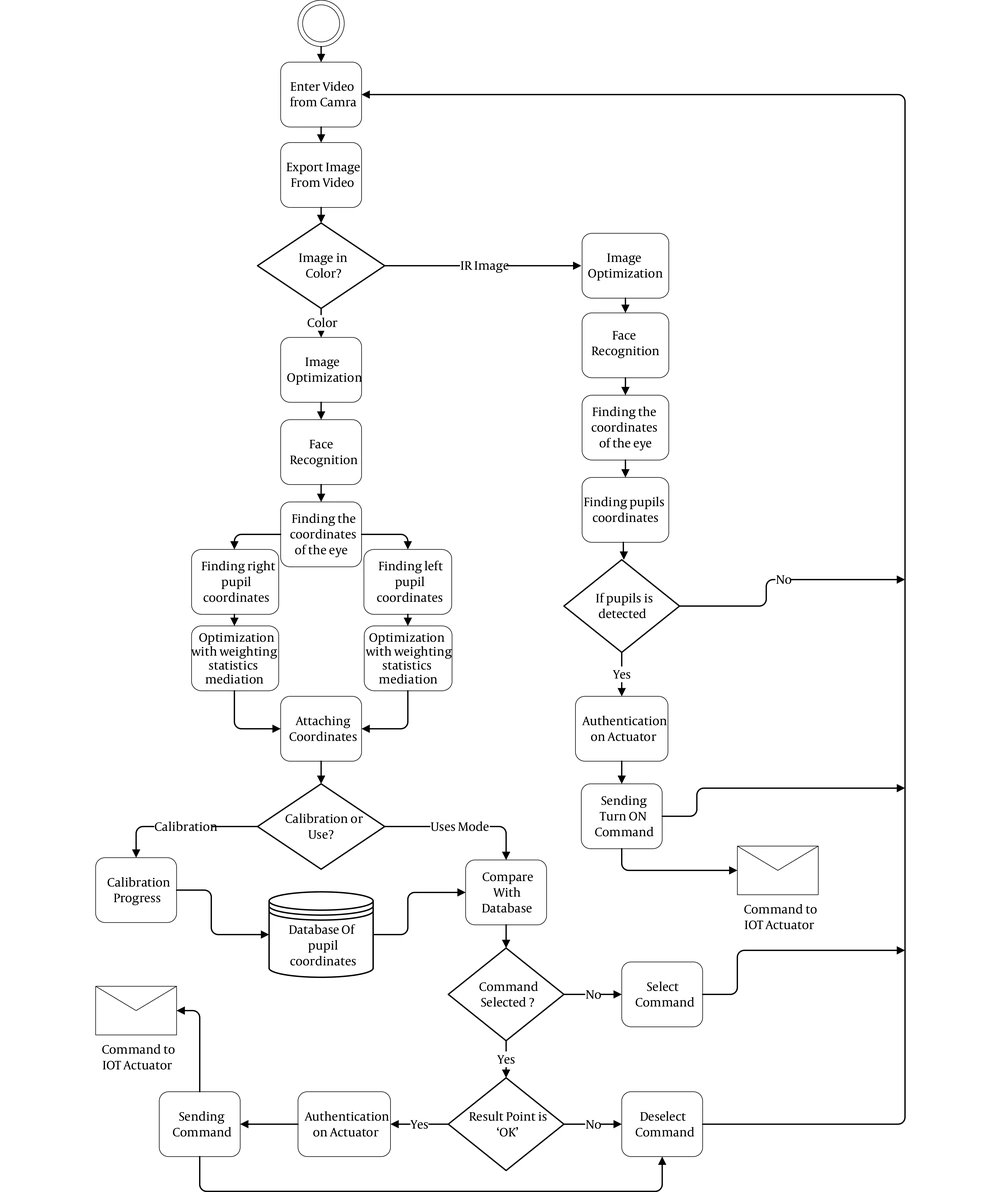

In this research, issuing a command through eye tracking is performed in the following eight steps.

3.1. 1st Step: Image Acquisition

At this stage, a connection to the camera is established and a camera “object” is created using the IP Camera Image Acquisition toolbox and the Instrument Control toolbox in MATLAB. The video captured through this object is then received, and a single frame is extracted and sent to the preprocessor for preparation.

3.2. 2nd Step: Image Preprocessing

In this step, the image obtained from the previous step, which is in the RGB color space, is passed through a noise filter and then sent to the processing stage.
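The paper does not say which noise filter is applied. As a minimal sketch, assuming a 3 × 3 median filter applied to each RGB channel (a common choice for suppressing impulse noise in camera frames), the preprocessing step could look like this in Python with NumPy; the function name `median_filter_rgb` is hypothetical.

```python
import numpy as np


def median_filter_rgb(frame: np.ndarray, k: int = 3) -> np.ndarray:
    """Apply a k x k median filter independently to each channel of an H x W x 3 frame."""
    if k % 2 == 0:
        raise ValueError("kernel size must be odd")
    pad = k // 2
    # Replicate border pixels so the output keeps the input's shape.
    padded = np.pad(frame, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w = frame.shape[:2]
    # Collect the k*k shifted views that make up every pixel's neighbourhood.
    windows = [padded[dy:dy + h, dx:dx + w] for dy in range(k) for dx in range(k)]
    stacked = np.stack(windows, axis=0)  # shape (k*k, H, W, 3)
    # Median over the neighbourhood axis, cast back to the input dtype.
    return np.median(stacked, axis=0).astype(frame.dtype)
```

A median filter preserves edges, such as the iris boundary, better than a mean filter of the same size, which matters for the pupil detection that follows.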

3.3. 3rd Step: Eye Identification

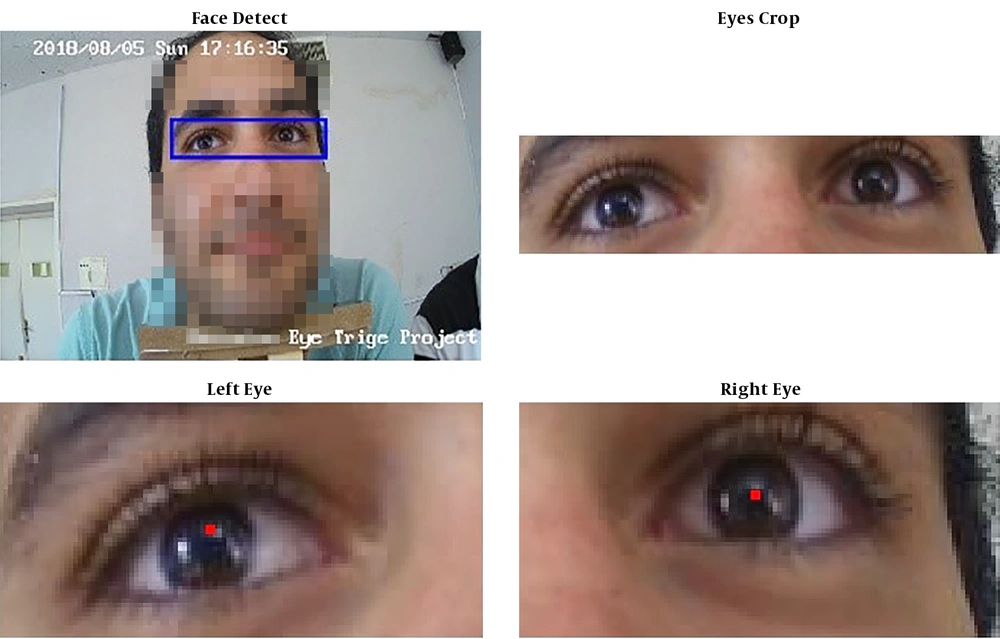

At this stage, the system locates the face in the image precisely, based on its visible features. The algorithm searches the image for the region containing both eyes using the six-segmented technique. First, a rectangle divided into six sub-sections is placed on the image, and the color contrast in each section is measured. The algorithm moves this rectangle across the entire image, and the position at which the rectangle shows the characteristic contrast pattern marks the region of the eyes and eyebrows. This technique not only detects the face and eyes quickly but also copes well with head rotation: it is insensitive to face rotations of up to 20 degrees and to changes in distance from the camera. After the rectangle containing both eyes has been identified, the part of the image containing the two eyes is cropped out and split into left-eye and right-eye images, which are sent separately to the next step for pupil detection. Figure 2 shows this procedure.
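The six-segmented search can be sketched with an integral image, so that each segment sum costs four array lookups. The sketch below assumes a grayscale frame (brightness sums stand in for the "color contrast" of the text) and one commonly cited formulation of the six-segmented rectangular (SSR) filter: with segments S1 to S3 on the top row and S4 to S6 on the bottom row, a window is an eye-region candidate when the eye segments S1 and S3 are darker than the bridge of the nose (S2) and than the cheek segments below them (S4, S6). The paper does not state its exact criteria, segment sizes, or scan step, so these, and all function names, are assumptions.

```python
from typing import List, Tuple

import numpy as np


def integral_image(gray: np.ndarray) -> np.ndarray:
    """Summed-area table with a zero first row and column."""
    ii = np.zeros((gray.shape[0] + 1, gray.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = gray.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii: np.ndarray, y: int, x: int, h: int, w: int) -> int:
    """Sum of gray[y:y + h, x:x + w] using four lookups."""
    return int(ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x])


def ssr_candidates(gray: np.ndarray, seg_h: int, seg_w: int,
                   step: int = 1) -> List[Tuple[int, int]]:
    """Top-left corners (y, x) of 2 x 3 segment windows that match the eye pattern."""
    ii = integral_image(gray)
    height, width = gray.shape
    hits = []
    for y in range(0, height - 2 * seg_h + 1, step):
        for x in range(0, width - 3 * seg_w + 1, step):
            # S1 S2 S3 = eye, bridge of nose, eye; S4 S5 S6 = cheek, nose, cheek.
            s1, s2, s3, s4, _s5, s6 = (
                rect_sum(ii, y + r * seg_h, x + c * seg_w, seg_h, seg_w)
                for r in range(2) for c in range(3)
            )
            if s1 < s2 and s1 < s4 and s3 < s2 and s3 < s6:
                hits.append((y, x))
    return hits
```

In practice several neighbouring windows usually match, and a detector would keep, for example, the cluster centre as the eye region.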

3.4. 4th Step: Pupil Detection

At this stage, the incoming image (either the right or the left eye) is processed to extract the iris region and then the exact location of the pupil, using a combination of two methods for removing the eyelids (red pupil points in Figure 2). The coordinates of the resulting point, relative to the origin of the image, are the output of this step and are sent to the statistical weighted averaging step.
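The paper does not detail its two eyelid-removal methods, so the sketch below swaps in a much simpler technique for illustration: it thresholds the eye image and returns the centroid of the candidate pupil pixels. It assumes a grayscale eye crop in which the pupil is darker than its surroundings (dark-pupil imaging); with an IR ring mounted around the lens the pupil may instead appear bright, which the hypothetical `bright_pupil` flag covers. The threshold value is also an assumption. Returning (0, 0) for an undetected pupil mirrors the zero points that the next step discards.

```python
from typing import Tuple

import numpy as np


def pupil_center(eye_gray: np.ndarray, threshold: int = 40,
                 bright_pupil: bool = False) -> Tuple[float, float]:
    """Return the (x, y) centroid of candidate pupil pixels, or (0.0, 0.0) if none."""
    # Dark pupil: pixels below the threshold; bright pupil: pixels above it.
    mask = eye_gray > threshold if bright_pupil else eye_gray < threshold
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        # Undetected pupil: report zeros, which the averaging step removes.
        return (0.0, 0.0)
    # Coordinates are relative to the top-left origin of the eye image.
    return (float(xs.mean()), float(ys.mean()))
```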

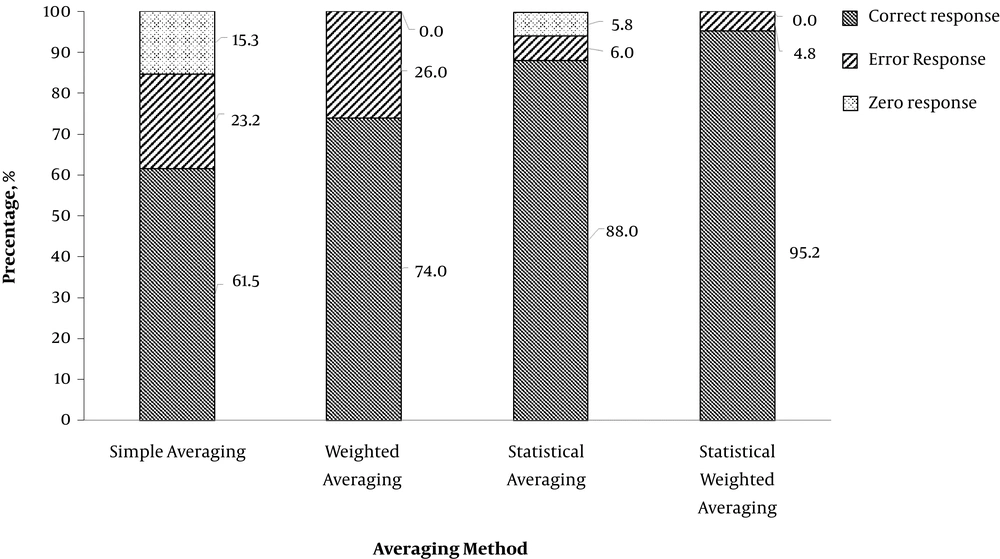

3.5. 5th Step: Statistical Weighted Averaging

At this stage, processing each frame yields four coordinates (xr, yr, xl, yl), the pupil positions of the right and left eyes, and the frames of a trace together form a coordinate matrix. All zero values, which result from points that were not recognized on the face, are removed, and each remaining value is rounded to two decimal places. Then, the two most frequent values are selected and their weighted average is computed. Figure 3 shows the superiority of the statistical weighted averaging method over other averaging methods. These steps are repeated for each of the four variables, and the resulting values are sent, along with the non-zero coordinates, to the calibration or tracking step, as applicable.
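One reading of this step, sketched below, is: drop zeros, round to two decimals, take the two most frequent values, and average them weighted by their frequencies. The frequency weighting, the function names, and returning the number of non-zero samples alongside the estimate (useful for a quorum check during calibration) are assumptions rather than details given in the paper.

```python
from collections import Counter
from typing import Iterable, List, Optional, Sequence, Tuple


def weighted_mode_average(values: Iterable[float],
                          decimals: int = 2) -> Tuple[Optional[float], int]:
    """Frequency-weighted mean of the two most frequent rounded non-zero values.

    Returns (estimate, number_of_non_zero_samples); estimate is None if all were zero.
    """
    # Zeros come from frames where the pupil was not recognized.
    cleaned = [round(v, decimals) for v in values if v != 0]
    if not cleaned:
        return None, 0
    top_two = Counter(cleaned).most_common(2)
    total = sum(count for _, count in top_two)
    estimate = sum(value * count for value, count in top_two) / total
    return round(estimate, decimals), len(cleaned)


def smooth_trace(frames: Sequence[Tuple[float, float, float, float]]
                 ) -> List[Tuple[Optional[float], int]]:
    """Apply the averaging to each of xr, yr, xl, yl across all frames of a trace."""
    return [weighted_mode_average(column) for column in zip(*frames)]
```

For example, for the samples 0, 0.31, 0.312, 0.31, 0.5, 0.33, 0.33, 0, the non-zero rounded values are 0.31 (three times), 0.33 (twice), and 0.5 (once), so the estimate is (3 × 0.31 + 2 × 0.33) / 5 = 0.318, reported as 0.32. The outlier 0.5 is ignored entirely, which a plain mean would not do.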

3.6. 6th Step: Tracked Data Usage

At this stage, depending on the device mode (calibration or command reception), the input data is directed to one of the following paths.

3.6.1. Calibration

In calibration mode, the points obtained from the 5th step (statistical weighted averaging) are accepted only if a quorum is reached, in which case they are recorded as valid data for that point for the person. The algorithm then announces the next calibration point. This cycle is repeated for all eleven points, creating a database for the person that is used for comparison when receiving commands.
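A minimal sketch of the calibration loop follows, assuming a hypothetical `measure_point` callback that announces a point, runs steps 1 to 5, and returns the four averaged coordinates together with the number of valid (non-zero) frames. The quorum value and the per-point retry limit are hypothetical, since the paper specifies neither.

```python
from typing import Callable, Dict, Tuple

Coords = Tuple[float, float, float, float]  # (xr, yr, xl, yl)


def calibrate(
    measure_point: Callable[[int], Tuple[Coords, int]],
    n_points: int = 11,
    quorum: int = 10,       # hypothetical minimum number of valid frames
    max_attempts: int = 3,  # hypothetical retry limit per point
) -> Dict[int, Coords]:
    """Build a per-person calibration profile mapping point index -> coordinates."""
    profile: Dict[int, Coords] = {}
    for point in range(n_points):
        for _ in range(max_attempts):
            coords, valid_frames = measure_point(point)
            if valid_frames >= quorum:
                # Quorum reached: record this as valid data for the point.
                profile[point] = coords
                break
        else:
            # for-else: runs only if no attempt reached the quorum.
            raise RuntimeError(f"calibration point {point} did not reach quorum")
    return profile
```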

3.6.2. Receive Command

In this mode, the coordinates obtained from the previous step are compared, separately for each eye, with the coordinates of the reference points in the individual's calibration file, and the distance to every point in the individual's profile is computed. The reference point closest to the obtained coordinates is then selected for each eye. If both eyes select the same point, that point is the final output. Otherwise, the algorithm compares each eye's distance to its selected reference point, and the final response is the point with the smaller distance. Finally, the result is sent to the command confirmation step.
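The matching rule can be sketched as follows, assuming the profile maps each of the eleven point indices to calibrated (xr, yr, xl, yl) coordinates and that distance is Euclidean in image coordinates (the paper does not name the metric). Function names are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple

Coords = Tuple[float, float, float, float]  # (xr, yr, xl, yl)


def nearest(point: Sequence[float],
            refs: Dict[int, Tuple[float, float]]) -> Tuple[int, float]:
    """Return (reference_id, Euclidean distance) of the closest reference point."""
    return min(((pid, math.dist(point, ref)) for pid, ref in refs.items()),
               key=lambda item: item[1])


def select_command(observed: Coords, profile: Dict[int, Coords]) -> int:
    """Pick the calibrated point that best matches the observed pupil positions."""
    right_refs = {pid: (c[0], c[1]) for pid, c in profile.items()}
    left_refs = {pid: (c[2], c[3]) for pid, c in profile.items()}
    right_id, right_dist = nearest(observed[:2], right_refs)
    left_id, left_dist = nearest(observed[2:], left_refs)
    if right_id == left_id:
        return right_id  # both eyes agree
    # Eyes disagree: keep the choice of the eye that lies closer to its reference.
    return right_id if right_dist <= left_dist else left_id
```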

3.7. 7th Step: Command Confirmation

When the desired command has been selected, the patient confirms it by looking at the “confirm” point in the middle of the selection pane. This step is necessary to prevent an incorrect command from being executed. Once confirmed, the command is sent for execution; otherwise, it is canceled and the program returns to the beginning to wait for the next command.
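The two-stage selection can be expressed as a small guard. This sketch assumes the confirmation option is point index 10 and that `execute` is a hypothetical callback that forwards the command to the output unit; both are illustrative choices, not details from the paper.

```python
from typing import Callable

CONFIRM_POINT = 10  # hypothetical index of the central "confirm" option


def confirm_and_dispatch(selected: int, next_gaze: int,
                         execute: Callable[[int], None]) -> bool:
    """Execute `selected` only if the next detected gaze lands on the confirm point."""
    if selected != CONFIRM_POINT and next_gaze == CONFIRM_POINT:
        execute(selected)
        return True
    # Any other gaze cancels the command; the caller goes back to waiting.
    return False
```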

3.8. 8th Step: Send Command

At this stage, to produce an output on the IoT platform, a device with 10 outputs and a mobile network connection was designed and built, based on the Arduino Mega 2560 microcontroller (for command authentication) and the SIM800L cellular communication chip (for sending SMS messages and making emergency calls). The device receives data over the network through an ENC28J60 chip and executes commands after verifying the sender's identity. Examples of commands that this device can execute through its 10 relays, each rated at 5 A, include turning connected equipment on or off, making an emergency call, sending a text message, sending an alarm, and moving the bed up or down.

For this purpose, an Ethernet link is established between the image processing and eye tracking unit and the output unit, using SSH on port 22, over which the processing unit verifies the owner's identity by sending a username and password. It then waits briefly to be granted permission to send commands. The output unit checks the username and password against its database and, once the owner's identity is verified, sends a confirmation message. The command code is then sent in one of the following two forms:

• Send a command to the 10 output relays: in this form, the command code containing the output number is sent first, then the waiting time is specified, and finally the required action is defined by sending the state type (On, Off, or Change).

• Send an SMS or call command: in this form, code 20 is used to send an SMS and code 21 to call the destination number. The message text (for an SMS) or the ringing time (for a call) is then sent. After that, the end of the message is announced and the unit waits for confirmation. If confirmation is not received within one minute, this procedure is repeated, up to three times.
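The two command forms and the retry rule for SMS and call commands can be sketched as below. Only codes 20 and 21, the three state types, the ten outputs, the one-minute timeout, and the three-attempt limit come from the text; the string encoding of each field, the "END" marker, relay numbering from 1, reading "repeated for three times" as three attempts in total, and all function names are assumptions. The SSH login is omitted: the hypothetical `send` and `wait_for_ack` callbacks stand in for the authenticated link.

```python
from typing import Callable, List, Sequence

SMS_CODE = 20   # from the text: send an SMS
CALL_CODE = 21  # from the text: call the destination number
STATES = ("On", "Off", "Change")


def relay_messages(output: int, wait_s: int, state: str) -> List[str]:
    """Fields of a relay command: output number, waiting time, state type."""
    if not 1 <= output <= 10:  # assumed numbering of the 10 relays
        raise ValueError("output must be between 1 and 10")
    if state not in STATES:
        raise ValueError(f"state must be one of {STATES}")
    return [str(output), str(wait_s), state]


def sms_or_call_messages(kind: str, payload: str) -> List[str]:
    """Code, then SMS text or ringing time, then a hypothetical end marker."""
    codes = {"sms": SMS_CODE, "call": CALL_CODE}
    if kind not in codes:
        raise ValueError("kind must be 'sms' or 'call'")
    return [str(codes[kind]), payload, "END"]


def send_with_retry(messages: Sequence[str],
                    send: Callable[[str], None],
                    wait_for_ack: Callable[[float], bool],
                    attempts: int = 3,
                    timeout_s: float = 60.0) -> int:
    """Send all fields, wait up to timeout_s for confirmation, retry on silence.

    Returns the attempt number that was confirmed, or 0 if none was.
    """
    for attempt in range(1, attempts + 1):
        for message in messages:
            send(message)
        if wait_for_ack(timeout_s):
            return attempt
    return 0
```

Keeping the transport behind callbacks lets the same framing and retry logic be exercised without the hardware attached.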

The whole process is shown as a flowchart of the algorithm in Figure 4.

4. Results and Discussion

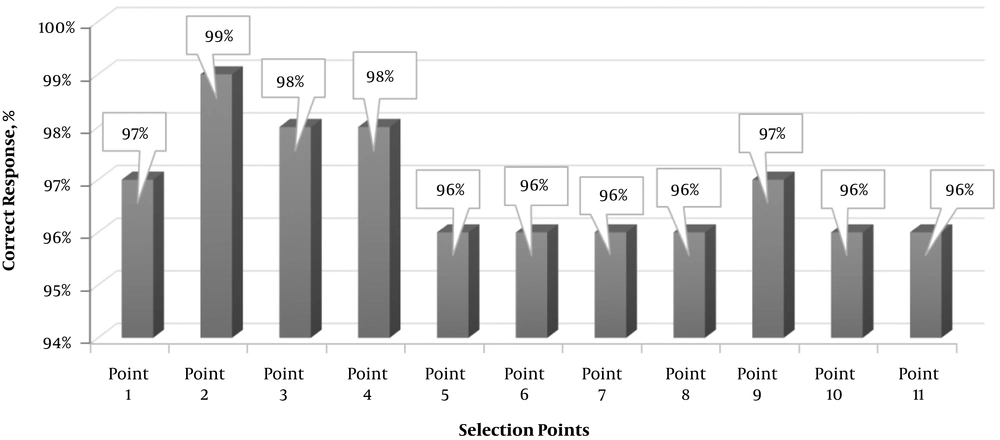

In this study, the tests were performed in an environment and under conditions similar to those of spinal cord injury patients. The experiments were performed at visible light intensities of 5 to 300 lux and infrared light levels of 2 to 6. To evaluate how the algorithm responds to facial features, thirty individuals of different ages and genders, with different facial features such as beards, hair, eyebrows, glasses, and skin colors, were tested, so the information and results from these experiments can be relied on and can inform future research. Each person was asked to look at each of the eleven command points on the screen. Figure 5 shows the average accuracy for each detected point across all individuals.

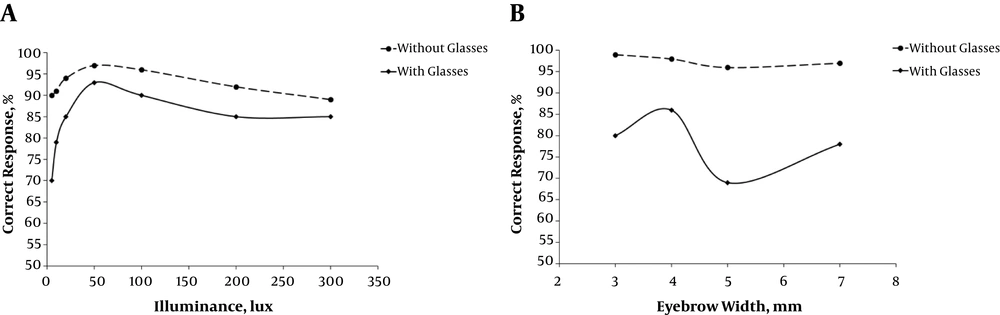

The accuracy of the algorithm as a function of ambient light intensity is shown in Figure 6A. The highest accuracy is obtained at an ambient light intensity of 90 lux. The best results were obtained when the patient wore no glasses; wearing glasses reduced the accuracy by at most 20%. Multiple tests showed that eyebrow width has a significant effect on pupil detection. Figure 6B shows the relation between eyebrow width and the percentage of correct responses under two conditions (with and without glasses). It can be seen that the combined effect of eyebrow width and glasses shows no specific trend.

The algorithm showed no sensitivity to the individual's gender or to beard shape and type. Under infrared imaging, the accuracy remained constant at 97%, owing to the constant infrared light emitted by the light ring around the camera.

4.1. Conclusions

In this research, a non-invasive intelligent method is used to receive and confirm eye commands from people with spinal cord injuries (SCI). The technique combines an eye tracking method with the Internet of Things to create a platform for SCI patients. The eye movement tracking method was designed and programmed in MATLAB. The centers of the pupils of both of the patient's eyes are first detected, the related command is selected, and the patient then verifies it by looking at the confirmation tab. Infrared light is used for dark environments. The proposed method was tested on people of different genders, ages, eyebrow types, and beard types, both with and without glasses. With the help of statistical weighted averaging and the elimination of bad data, the system produced 97% correct responses. Therefore, the designed system is a reliable method for building a smart home for SCI patients within an IoT solution.