1. Context

As populations age, the number of people with dementia is rising dramatically, imposing enormous health and economic burdens on societies (1, 2). More than 55 million people live with dementia worldwide, with nearly 10 million new cases each year. Because the proportion of older people is increasing in nearly every country, this number is expected to rise to 78 million in 2030 and 139 million in 2050 (3). Timely diagnosis is important for treating and managing the disease. Early detection gives people access to appropriate services and support and helps them manage their condition, plan for the future, and live well with dementia (4-7).

Cognitive assessment is one method for the early detection of dementia (8-10). Various tests, such as the Mini-Mental State Examination (MMSE) and the Montreal Cognitive Assessment (MoCA), have been developed to assess cognitive function and screen for dementia. These tests are widely used because they are non-invasive, efficient, and cost-effective (7, 8, 11-14), and studies have shown that they have good diagnostic accuracy for detecting dementia (15, 16). Consequently, paper-based tests are used almost universally in healthcare organizations (17). Although conventional paper-based tests detect dementia accurately, they have limitations. One of the most important is that administering, scoring, interpreting, and documenting them takes considerable healthcare provider time (2, 18, 19).

Digital technology can transform traditional pencil-and-paper cognitive testing into more objective, efficient, and sensitive methods. Digital (computerized) cognitive tests offer several advantages, such as more accurate scoring, immediate automated scoring and interpretation, easy access for healthcare providers, the availability of several alternative tests, and the option of self-administration (19-21). The COVID-19 pandemic and its accompanying social restrictions, such as social distancing, accelerated the shift to remote service delivery (22). In this context, digital screening tests can play a significant role in the remote and timely identification of people with dementia, and they can be used both by healthcare providers and by individuals themselves. This study therefore aimed to review digital cognitive tests for dementia screening.

2. Methods

2.1. Data Resources and Search Strategy

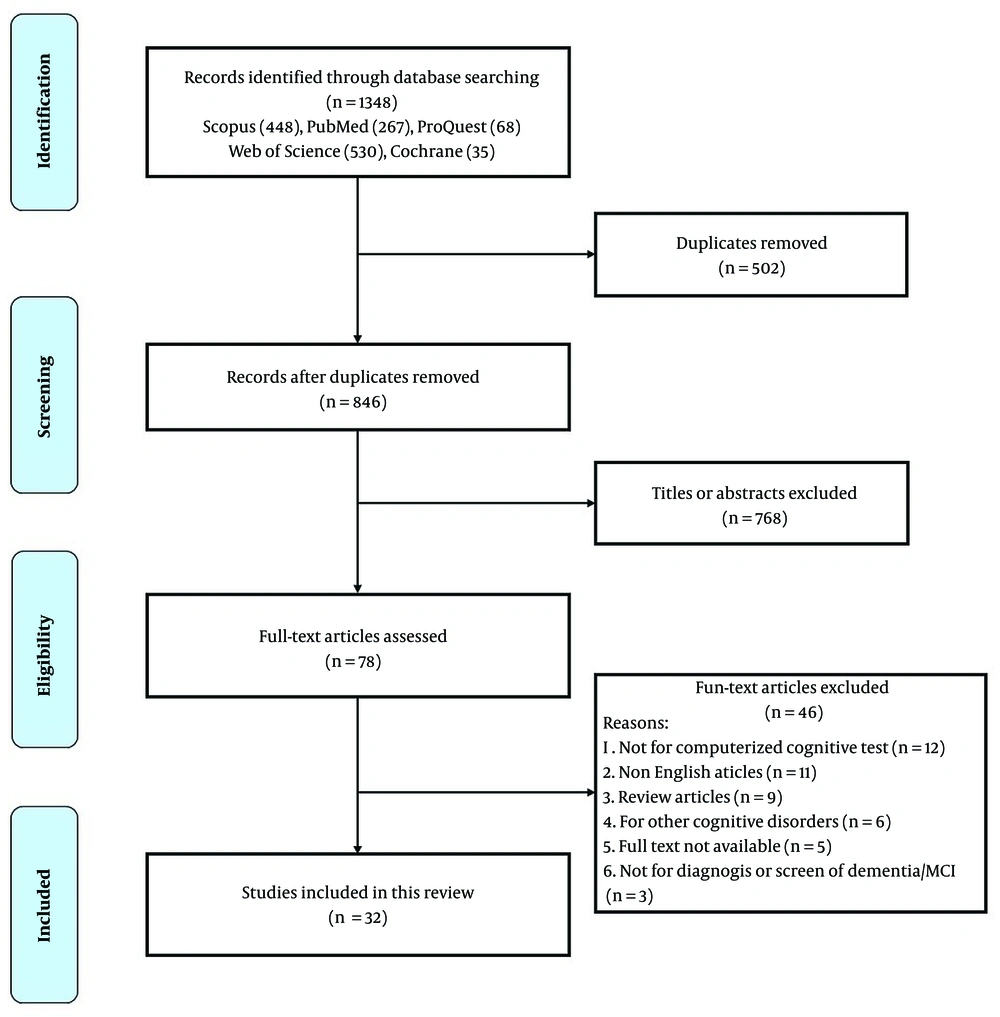

This systematic review was conducted according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (23). We searched five electronic databases (ProQuest, Cochrane, PubMed, Scopus, and Web of Science) for relevant articles published from inception to June 2022. Search terms were organized into three groups (Table 1) and combined with the OR operator within each group and the AND operator between groups.

| Group | Search terms | Operator |
|---|---|---|
| Group 1 (technology) | Computer* OR Cell phone* OR Mobile OR Handheld OR Application* OR Health OR m-health OR android OR iPad* OR iPhone* OR Mobile device OR Phone* OR App OR PDA OR Smart phone* OR Smartphone* OR Tablet* OR Cellular phone* OR Telephone* OR Internet OR Software OR Electronic* OR Digital OR CDSS OR Clinical decision support system OR CAD OR Computer aided OR Computer assisted OR Decision support | AND |
| Group 2 (disease) | Dementia OR Cognitive dysfunction OR Cognitive impairment* OR Cognitive decline* OR Neurocognitive disorder | AND |
| Group 3 (screening) | Assessment OR Screening OR Diagnosis OR Detect* OR Identify OR Identification OR Test OR Battery OR Batteries OR Tool | |
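
To illustrate how the groups in Table 1 combine (OR within a group, AND between groups), the following minimal Python sketch assembles a query string. It is a sketch under stated assumptions rather than the search actually run: only a subset of each group's terms is shown, multi-word terms are quoted as phrases, and database-specific syntax (field tags, wildcard and phrase rules) for ProQuest, Cochrane, PubMed, Scopus, and Web of Science is not modeled. The helper names `or_group` and `build_query` are hypothetical.

```python
# Minimal sketch: combine search-term groups into one Boolean query.
# Assumptions (not from the original search): only a subset of the
# Table 1 terms is listed, and multi-word terms are quoted as phrases.


def or_group(terms: list[str]) -> str:
    """Join one group's terms with OR and wrap them in parentheses."""
    quoted = [f'"{term}"' if " " in term else term for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(groups: list[list[str]]) -> str:
    """OR within each group, AND between the groups."""
    return " AND ".join(or_group(group) for group in groups)


technology = ["Computer*", "Smartphone*", "Tablet*", "Mobile device", "Digital"]
disease = ["Dementia", "Cognitive impairment*", "Neurocognitive disorder"]
screening = ["Screening", "Diagnosis", "Detect*", "Test"]

query = build_query([technology, disease, screening])
print(query)
```

With these inputs the sketch prints `(Computer* OR Smartphone* OR Tablet* OR "Mobile device" OR Digital) AND (Dementia OR "Cognitive impairment*" OR "Neurocognitive disorder") AND (Screening OR Diagnosis OR Detect* OR Test)`; each database would still need its own syntax adjustments.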

2.2. Inclusion and Exclusion Criteria

Inclusion and exclusion criteria were defined to select relevant articles. Studies were included if they were peer-reviewed original articles with full text available, addressed dementia diagnosis, used digital tests for dementia diagnosis, and reported the psychometric properties of the measure, including reliability and validity indices. Non-English articles, review articles, studies on other cognitive disorders, and articles that used only paper-based tests were excluded. After duplicates were removed, two raters (MA and AM) screened the titles, abstracts, and full texts against these criteria and selected the relevant articles. Disagreements between the raters were resolved by the third author (MH).

2.3. Data Extraction

Two investigators (MA and AM) independently extracted the following data from each study: (1) sample size, (2) test name, (3) country, (4) type of disease, (5) administration time, (6) diagnostic accuracy, (7) test validity, (8) platform type, and (9) cognitive domains assessed. Disagreements between the raters were resolved by the third author (MH).

2.4. Risk of Bias and Quality Assessment

Two raters (MA and AM) independently assessed the risk of bias in the selected studies using the QUADAS-2 (Quality Assessment of Diagnostic Accuracy Studies) tool, which covers four key domains: (1) patient selection, (2) index test, (3) reference standard, and (4) flow and timing (24). In addition, study quality was assessed with an ad hoc scale (Table 2) adapted from the STARD (Standards for Reporting of Diagnostic Accuracy) statement and the scale used by Chan et al. (25, 26). The scale comprises eight domains: (1) study population, (2) selection of participants, (3) procedures for running the index test, (4) reference standard, (5) cognitive domains, (6) evaluated diseases, (7) test validity, and (8) diagnostic accuracy. Each domain is scored from 0 to 3, giving a total score between 0 and 24. The two raters independently rated the selected studies on this scale, and any disagreements were resolved by the third author (MH).

| Domains | Details | Scores |
|---|---|---|
| Study population | Definition of the study population and details of participant recruitment | 0 = no data; 1 = poor; 2 = moderate; 3 = strong |
| Selection of participants | Sampling | 0 = no data; 1 = ≤ 50 participants; 2 = 50 to 200 participants; 3 = ≥ 200 participants |
| Procedures of data collection | Explanation of the digital cognitive test and procedures of data collection | 0 = no data; 1 = poor; 2 = moderate; 3 = strong |
| Reference standard | Explanation of the reference standard and its rationale | 0 = no data; 1 = poor; 2 = moderate; 3 = strong |
| Cognitive domains | Assessment of cognitive domains, including memory, attention, language, executive functions, orientation, and calculation | 0 = no data; 1 = 1 domain; 2 = 2 domains; 3 = ≥ 3 domains |
| Evaluated diseases | Diseases evaluated by the digital cognitive test, including dementia, MCI, Alzheimer’s disease, and other types of dementia | 0 = no data; 1 = 1 type of disease; 2 = 2 types of disease; 3 = ≥ 3 types of disease |
| Reliability of test | Number of standard cognitive tests used to measure reliability | 0 = no data; 1 = 1 test; 2 = 2 tests; 3 = ≥ 3 tests |
| Diagnostic performance | Methods used to calculate diagnostic performance (criteria such as sensitivity, specificity, and accuracy) | 0 = no data; 1 = 1 criterion; 2 = 2 criteria; 3 = ≥ 3 criteria |
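
The scoring rules in Table 2 can be expressed compactly. The Python sketch below is a hypothetical implementation, not the authors' instrument: the function names are illustrative, the three judgment-based domains (study population, procedures, reference standard) are taken as rater-assigned values from 0 to 3, and because the sample-size bands in Table 2 overlap at 50 and 200, we assume that exactly 50 participants scores 1 and exactly 200 scores 3.

```python
from typing import Optional

MAX_PER_DOMAIN = 3  # each Table 2 domain is scored 0-3
N_DOMAINS = 8       # so totals range from 0 to 24


def sample_size_score(n: Optional[int]) -> int:
    """'Selection of participants' domain.

    Assumption: the overlapping bands are resolved as
    <= 50 -> 1, 51-199 -> 2, >= 200 -> 3; None means no data.
    """
    if n is None:
        return 0
    if n <= 50:
        return 1
    if n < 200:
        return 2
    return 3


def count_score(count: int) -> int:
    """Count-based domains: 0 = no data, then 1, 2, or >= 3 items."""
    return min(max(count, 0), MAX_PER_DOMAIN)


def total_quality_score(
    population: int,           # rater judgment: 0 (no data) to 3 (strong)
    sample_size: Optional[int],
    procedures: int,           # rater judgment: 0-3
    reference_standard: int,   # rater judgment: 0-3
    n_cognitive_domains: int,
    n_diseases: int,
    n_standard_tests: int,     # standard tests used for comparison
    n_performance_criteria: int,
) -> int:
    rated = (population, procedures, reference_standard)
    if any(not 0 <= r <= MAX_PER_DOMAIN for r in rated):
        raise ValueError("rated domains must be scored from 0 to 3")
    scores = [
        population,
        sample_size_score(sample_size),
        procedures,
        reference_standard,
        count_score(n_cognitive_domains),
        count_score(n_diseases),
        count_score(n_standard_tests),
        count_score(n_performance_criteria),
    ]
    assert len(scores) == N_DOMAINS
    return sum(scores)


# Hypothetical study, for illustration only.
print(total_quality_score(3, 347, 3, 3, 4, 2, 2, 2))  # 21
```

Capping the count-based domains at 3 mirrors the "≥ 3" categories in Table 2, so a test covering seven cognitive domains scores the same on that domain as one covering three.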

3. Results

3.1. Study Selection

Figure 1 illustrates the study selection process. The database searches identified 1,348 articles. After 1,270 duplicate or irrelevant articles were excluded on the basis of their titles and abstracts, 78 studies remained for full-text eligibility assessment, of which 32 were ultimately included.

3.2. Study Characteristics

The 32 included articles were published between 1994 and 2021. Fourteen came from America, three from Japan, two from Korea, and one each from other countries. The studies included a total of 38,429 participants aged 50 to 85 years. Twenty-three studies evaluated the diagnostic performance of digital tests in patients with dementia, 22 in patients with mild cognitive impairment (MCI), 8 in patients with Alzheimer’s disease, and one in patients with vascular dementia. Risk of bias was evaluated with QUADAS-2 (Appendices 1 and 2 in the Supplementary File): seven studies (21.9%) were judged to be at high risk of bias in the flow and timing domain, 6 studies (18.8%) in the index test domain, and 2 studies (6.2%) in the reference standard domain.
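
The risk-of-bias percentages are simple proportions of the 32 included studies. The short Python check below recomputes them. Note that Python's string formatting rounds exact ties to the nearest even digit, which is why 2/32 (exactly 6.25%) displays as 6.2% while 6/32 (exactly 18.75%) displays as 18.8%.

```python
TOTAL_STUDIES = 32

# Studies judged at high risk of bias, by QUADAS-2 domain.
high_risk = {
    "flow and timing": 7,
    "index test": 6,
    "reference standard": 2,
}


def percent(count: int, total: int = TOTAL_STUDIES) -> str:
    """Share of studies as a percentage with one decimal place."""
    return f"{100 * count / total:.1f}%"


for domain, count in high_risk.items():
    print(f"{domain}: {count}/{TOTAL_STUDIES} = {percent(count)}")
```

This prints 21.9% for flow and timing, 18.8% for the index test, and 6.2% for the reference standard.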

Digital dementia screening tests were built on different platforms, so we classified the studies into three groups by operating platform: (1) mobile-based, (2) desktop-based, and (3) web-based screening tests.

3.3. Mobile-based Screening Tests

Nineteen articles investigated mobile-based screening tests (Table 3). Eight tests were developed from existing neuropsychological tests, and nine were new, original cognitive tests. The remaining two were adaptations: CAMCI, originally a desktop-based test, was converted into a mobile-based test, and CADi2 is an improved version of CADi.

| Study | Test | Time, min | Type of Disease | Participants | Cognitive Domains | Diagnostic Performance | Quality Score | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| MCI | D | AD | Other | Memory | Attention | Language | Executive Function | Visuospatial | Orientation | Calculation | ||||||

| (27) | CANTAB-PAL | 8 | √ | √ | N = 58 (AD = 19, MCI = 17, HC = 22) | √ | √ | √ | ACC = 81.0% | 15 | ||||||

| (28) | BHA | 10 | √ | √ | N = 347 (HC = 185, MCI = 99, D = 42, normal with concerns = 21) | √ | √ | √ | √ | SN = 100%, SP = 85% (D vs. HC); SN = 84%, SP = 85% (MCI vs. HC) | 23 | |||||

| (29) | e-CT | 2 | √ | √ | N = 325 (HC = 112, MCI = 129, AD = 84) | √ | SN = 70.4%, SP = 78.7% (MCI vs. HC); SN = 86.1%, SP = 91.7% (AD vs. HC) | 18 | ||||||||

| (30) | VSM | ND | √ | N = 55 (HC = 21, MCI = 34) | √ | √ | √ | √ | ACC = 91.8%, SN = 89.0% SP = 94.0% | 19 | ||||||

| (31) | dCDT | ND | √ | √ | √ | N = 231 (HC = 175, AD = 29, VaD = 27) | √ | AUC = 91.52% (HC vs. D); AUC = 76.94% (AD vs. VaD) | 19 | |||||||

| (32) | iVitality | ND | √ | N = 151 (mean age in years = 57.3) | √ | √ | √ | Moderate correlation with the conventional test ρ = 0.3 - 0.5 (P < 0.001). | 15 | |||||||

| (33) | CAMCI | 20 | √ | √ | N = 263 (patients with cognitive concerns = 130, HC = 133) | √ | √ | √ | √ | SN = 80%, SP = 74% | 21 | |||||

| (34) | CST | ND | √ | √ | √ | N = 215 (HC = 104, AD = 84, MCI = 27) | √ | √ | ACC = 96% (D vs. HC) ACC = 91% (HC, MCI, AD, D) | 23 | ||||||

| (35) | IDEA-IADL | ND | √ | N = 3011 | √ | √ | √ | AUC = 79%, SN = 84.8%, SP = 58.4% | 19 | |||||||

| (36) | EC-Screen | 5 | √ | √ | N = 243 (HC = 126, MCI = 54, D = 63) | √ | √ | √ | AUC = 90%, SN = 83% and SP = 83% | 21 | ||||||

| (37) | MCS | ND | √ | N = 23 (HC = 9 (age 81.78 ± 4.77), D = 14 (age 72.55 ± 9.95)) | √ | √ | √ | √ | √ | √ | √ | The ability to differentiate the individuals in the control and dementia groups with statistical significance (P < 0.05) | 12 | |||

| (38) | BrainCheck | 20 | √ | N = 586 (HC = 398, D = 188) over the age of 49 | √ | √ | √ | SN = 81%, SP = 94%. | 21 | |||||||

| (39) | CADi2 | 10 | √ | √ | AD = 27 | √ | √ | √ | √ | √ | √ | ACC = 83%, SN = 85%, SP = 81% | 21 | |||

| (40) | CADi | 10 | √ | N = 222 | √ | √ | √ | √ | √ | SN = 96%, SP = 77% | 19 | |||||

| (41) | CCS | 3 | √ | N = 60 (D = 40, HC = 20) | √ | √ | √ | AUC = 94%, SN = 94%, SP = 60%. | 17 | |||||||

| (42) | ICA | 5 | √ | √ | √ | N = 230 (HC = 95, MCI = 80, mild AD = 55) | √ | AUC = 81% (MCI), AUC = 88% (D and AD) | 19 | |||||||

| (43) | mSTS-MCI | ND | √ | N = 181 (HC = 107, MCI = 74) | √ | √ | √ | Significant correlations with MoCA | 17 | |||||||

| (44) | SATURN | 12 | √ | N = 60 (D = 23, HC = 37) | √ | √ | √ | √ | √ | √ | 83% reported that SATURN was easy to use. | 19 | ||||

| (45) | eSAGE | 10 - 15 | √ | √ | N = 66 (HC = 21, MCI = 24, D = 21; aged 50 years or over) | √ | √ | √ | √ | √ | √ | SN = 71%, SP = 90% | 19 | |||

Abbreviations: CANTAB-PAL, Cambridge Neuropsychological Test Automated Battery-paired associate learning; BHA, Brain Health Assessment; e-CT, electronic cancellation test; VSM, virtual super market; dCDT, Digital Clock Drawing Test; CST, color and shape matching; IDEA-IADL, identification and intervention for dementia in elderly Africans-instrumental activities of daily living; EC-Screen, electronic cognitive screen; MCS, mobile cognitive screening; CAMCI, Computer Assessment of Memory and Cognitive Impairment; CADi, the Cognitive Assessment for Dementia, iPad version; CCS, the computerized cognitive screening; ICA, Integrated Cognitive Assessment; mSTS-MCI, mobile screening test system for screening mild cognitive impairment; SATURN, self-administered tasks uncovering risk of neurodegeneration; eSAGE, self-administered gerocognitive examination; ND, no data; HC, healthy control; MCI, mild cognitive impairment; AD, Alzheimer’s disease; VaD, vascular dementia; D, dementia; SN, sensitivity; SP, specificity; ACC, accuracy; AUC, area under the ROC curve.

In 17 studies, the validity of the mobile-based tests was assessed by comparing their results with those of paper-based tests; MoCA (nine studies) and MMSE (six studies) were the most frequently used paper-based comparators.

According to the quality assessment (Appendix 3 in the Supplementary File), quality scores ranged from 12 to 23. The studies on the BHA, CST, CAMCI, EC-Screen, BrainCheck, and CADi2 tests received the highest scores, in descending order.

Various statistical measures were used to assess the diagnostic performance of mobile-based tests in dementia screening. Sensitivity and specificity were reported in 11 studies. BHA and CADi had the highest sensitivity (100% and 96%, respectively), and e-CT and eSAGE had the lowest (70.4% and 71%, respectively). VSM and BrainCheck had the highest specificity (94% each), and IDEA-IADL and CCS had the lowest (58.4% and 60%, respectively). Five studies reported the area under the receiver operating characteristic curve (AUC), with CCS (94%) and IDEA-IADL (79%) showing the highest and lowest values, respectively. Four studies reported classification accuracy, with CST (96%) and CANTAB-PAL (81%) showing the highest and lowest values, respectively.
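
For readers less familiar with these measures, the Python sketch below shows how sensitivity, specificity, and accuracy follow from a 2 × 2 table of index-test results against a reference standard, and how AUC can be computed as the probability that a randomly chosen patient scores higher than a randomly chosen control (the Mann-Whitney formulation, with ties counted as one half). The counts and scores are hypothetical, the function names are illustrative, and the sketch assumes that higher scores indicate greater impairment; the individual studies may have used other conventions or software.

```python
from typing import Sequence


def confusion_metrics(tp: int, fn: int, tn: int, fp: int) -> dict[str, float]:
    """Sensitivity, specificity, and accuracy from a 2 x 2 table."""
    return {
        "sensitivity": tp / (tp + fn),  # share of patients correctly flagged
        "specificity": tn / (tn + fp),  # share of controls correctly cleared
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
    }


def auc(patient_scores: Sequence[float], control_scores: Sequence[float]) -> float:
    """AUC as P(patient score > control score); ties count as 0.5."""
    wins = 0.0
    for p in patient_scores:
        for c in control_scores:
            if p > c:
                wins += 1.0
            elif p == c:
                wins += 0.5
    return wins / (len(patient_scores) * len(control_scores))


# Hypothetical example: 40 patients and 60 controls.
print(confusion_metrics(tp=36, fn=4, tn=48, fp=12))
# {'sensitivity': 0.9, 'specificity': 0.8, 'accuracy': 0.84}

# Hypothetical impairment scores (higher = more impaired).
print(round(auc([3, 5, 7], [1, 3, 4]), 3))  # 0.833
```

The pairwise loop is quadratic in sample size, which is adequate for study-sized samples; rank-based formulas give the same value more efficiently for large datasets.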

3.4. Desktop-Based Screening Tests

Nine articles examined desktop-based screening tests (Table 4). Four tests were new, original cognitive tests, and four were developed from existing neuropsychological tests. The remaining test is the Brazilian version of CANS-MCI (46). The validity of the desktop-based tests was evaluated in four of the studies.

| Study | Test | Time, Min | Type of Disease | Participants | Cognitive Domains | Diagnostic Performance | Quality Score | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| MCI | D | AD | Other | Memory | Attention | Language | Executive Function | Visuospatial | Orientation | Calculation | ||||||

| (46) | CANS-MCI-BR | 30 | √ | √ | √ | N = 97 (HC = 41, MCI = 35, AD = 21) | √ | √ | √ | √ | √ | SN = 0.81, SP = 0.73 | 21 | |||

| (47) | C-ABC | 5 | √ | √ | N = 701 (HC = 134, MCI = 145, D = 422) | √ | √ | √ | √ | √ | AUCs = 0.910, 0.874, and 0.882 (in the 50s, 60s, and 70 - 85 age groups). | 21 | ||||

| (48) | CANS-MCI | 30 | √ | N = 310 (older adults) | √ | √ | √ | √ | √ | SN = 0.89, SP = 0.73 | 20 | |||||

| (49) | Cogstate | 10 | √ | √ | N = 263 | √ | √ | √ | √ | SN = 0.78, SP = 0.90 | 19 | |||||

| (50) | MoCA-CC | ND | √ | N = 181 (HC = 85, MCI = 96) | √ | √ | √ | √ | √ | AUC = 0.97, SN = 95.8%, SP = 87.1% | 19 | |||||

| (51) | MicroCog | 30-45 | √ | N = 102 (HC = 50, MCI = 52) | √ | √ | √ | √ | SN = 98% and SP = 83% | 18 | ||||||

| (52) | VSM | ND | √ | √ | N = 66 (HC = 29, MCI = 10, D = 27) | √ | SN = 70.0%, SP = 76.0% (HC vs. MCI); SN = 93.0%, SP = 85.0% (HC vs. D) | 17 | ||||||||

| (53) | dCDT | ND | √ | √ | N = 163 (HC = 35, MCI = 69, AD = 59) | √ | √ | ACC = 91.42% (HC vs. AD); ACC = 83.69% (HC vs. MCI) | 16 | |||||||

| (54) | dTDT | ND | √ | √ | N = 187 (HC = 67, D = 56, MCI = 64) | √ | AUC = 0.90, SN = 0.86, SP = 0.82 (HC vs. D); AUC = 0.77, SN = 0.56, SP = 0.83 (HC vs. MCI) | 15 | ||||||||

Abbreviations: CANS-MCI, computer-administered neuropsychological screen for mild cognitive impairment; C-ABC, Computerized Assessment Battery for Cognition; VSM, Visuo-Spatial Memory; MoCA-CC, the Chinese version of the Montreal Cognitive Assessment; dCDT, digital Clock Drawing Test; dTDT, Digital Tree Drawing Test; ND, no data; HC, healthy control; MCI, mild cognitive impairment; AD, Alzheimer’s disease; D, dementia; SN, sensitivity; SP, specificity; ACC, accuracy; AUC, area under the ROC Curve.

According to the quality assessment (Appendix 4 in the Supplementary File), scores ranged from 15 to 21. The studies on the C-ABC, CANS-MCI-BR, CANS-MCI, MoCA-CC, and Cogstate tests obtained the highest scores, in descending order.

Seven of the nine desktop-based studies reported sensitivity and specificity. MicroCog and MoCA-CC had the highest sensitivity (98% and 95.8%, respectively), and dTDT and VSM had the lowest (56% and 70%, respectively, both for HC vs. MCI). Cogstate had the highest specificity (90%), and CANS-MCI and its Brazilian version had the lowest (73% each). Three studies reported AUC values: MoCA-CC (97%), dTDT (90%, HC vs. D), and C-ABC (88.86%).

3.5. Web-Based Screening Tests

Four articles described web-based screening tests (Table 5). MITSI-L is based on a paper-based test, LASSI-L, and its classification accuracy (healthy controls vs. MCI) was 85.3%. CNS-VS was originally a desktop test and has since been converted into a web-based test; it has been translated into more than 50 languages. Its sensitivity and specificity for dementia diagnosis were 90% and 94%, respectively (90% and 85% for MCI). Mindstreams and Co-Wis were new, original tests. According to the quality assessment (Appendix 5 in the Supplementary File), scores ranged from 17 to 20.

| Study | Test | Time, min | Type of Disease | Participants | Cognitive Domains | Diagnostic Performance | Quality Score | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| MCI | D | AD | Other | Memory | Attention | Language | Executive Function | Visuospatial | Orientation | Calculation | ||||||

| (55) | CNS-VS | 30 | √ | √ | N = 178 (HC = 89, MCI = 36, D = 53) | √ | √ | √ | √ | SN = 0.90, SP = 0.85 (for MCI); SN = 0.90, SP = 0.94 (for D) | 20 | |||||

| (56) | MITSI-L | 8 | √ | √ | N = 98 (HC = 64, MCI = 34) | √ | √ | ACC = 85.3% | 17 | |||||||

| (57) | Co-Wis | 10 | √ | N = 113 | √ | √ | √ | √ | √ | Significant correlation with the SNSB-II test | 17 | |||||

| (58) | Mindstreams | 45-60 | √ | N = 52 (HC = 22, MCI = 27) | √ | √ | √ | √ | √ | √ | The ability to differentiate the individuals in control and MCI groups with statistical significance | 17 | ||||

Abbreviations: MITSI-L, Miami Test of Semantic Interference and Learning; Co-Wis, Computer-Based Dementia Assessment Content; CNS-VS, CNS Vital Signs; HC, healthy control; MCI, mild cognitive impairment; AD, Alzheimer’s disease; D, dementia; SNSB, the Seoul Neuropsychological Screening Battery; SN, sensitivity; SP, specificity; ACC, accuracy.

4. Discussion

We examined 32 studies of digital cognitive tests for dementia screening. The studies differed in test design, number of participants, and aims.

The cognitive tests were designed for mobile, desktop, and web platforms, and mobile-based tests have become markedly more common in recent years than tests on the other platforms, a trend also noted by Koo and Vizer (10). Recent technological advances, the spread of smartphones, and the particular advantages of mobile devices, such as portability, ease of access, and user-friendliness, help explain why most tests are now designed for mobile phones. Mobile technologies allow older adults to take these tests outside medical centers, for example at home or at work (45), which improves accessibility and usability. In addition, the touch screens of smartphones and tablets make data entry easier and faster, so older adults can complete the tests even if they have limited computer skills (59). Because of these advantages, touch technology has also been used to facilitate data entry in some desktop-based tests (46, 47, 52), and two tests used a digital pen (53, 54).

Validity is an essential component of a dementia screening test's acceptability (60). Accordingly, validity was assessed in nearly 72% of the studies; Tsoy et al. reported similar findings in their review (61). To assess validity, researchers compared the digital tests with conventional paper-based tests, most often MoCA and MMSE. In most cases, the results showed a high correlation between the digital and standard paper-based tests.

Most of the reviewed tests were developed from paper-based neuropsychological tests; only 38% were new, original tests. Physicians appear to regard digitized versions of existing cognitive tests as more acceptable and reliable than new tests. An electronic version of a paper-based test can overcome the limitations of the paper version and offers advantages such as ease of access, wider use, faster administration, lower costs, automatic scoring, and immediate access to results (44, 56, 62). However, digitization fundamentally changes how a test is administered, especially when it is self-administered, so the electronic version may yield different results from the paper version. Converted tests therefore need to be evaluated in their own right. Ruggeri et al., for example, obtained different results from the electronic and paper versions of a test. They noted that even when a paper test is translated directly, a mobile-based version requires training and the development of new standards, because it must suit older adults with varying skills and familiarity with mobile technologies (63).

Administration time is an important factor affecting a test's efficiency, effectiveness, and acceptability (64). In 38% of the reviewed tests, administration took 10 minutes or less. Administration time is not the only consideration, however; diagnostic performance and the number of cognitive domains assessed also influence a test's efficiency. For example, e-CT took only 2 minutes to administer but assessed a single cognitive domain and had relatively low diagnostic accuracy (29).

Most of the reviewed tests, especially the mobile-based ones, were self-administered. Self-administration requires less examiner involvement in administering and scoring a test, which makes cognitive assessment easier to access. Moreover, if self-administered tests are integrated with health information systems, users can receive recommendations from healthcare providers and telecare services (65).

Diagnostic performance is one of the main determinants of a test's usefulness in dementia screening, and tests with high sensitivity and specificity are more acceptable. In screening, sensitivity is generally more important than specificity: a false negative means a missed opportunity for early intervention, whereas a false positive is usually corrected by follow-up diagnostic evaluation. Tests with high sensitivity may therefore be more suitable for dementia screening. For example, BHA had 100% sensitivity for distinguishing dementia from healthy controls (28), which suggests that it may be a strong option for dementia screening. However, study design and quality can affect the reported results, so we also examined the studies on other aspects, including the number of participants and the cognitive domains assessed. Several studies that reported high diagnostic performance also received favorable quality scores (28, 50, 51).
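
To make the sensitivity/specificity trade-off concrete, the Python sketch below applies two pairs of values reported in Table 3 (BHA, dementia vs. HC: 100% and 85%; eSAGE: 71% and 90%) to a hypothetical cohort of 1,000 people with an assumed dementia prevalence of 10%. The cohort size, the prevalence, and the function name `screening_outcomes` are illustrative assumptions; because the two studies used different samples and comparisons, this is not a head-to-head comparison of the tests.

```python
def screening_outcomes(n: int, prevalence: float, sensitivity: float,
                       specificity: float) -> dict[str, float]:
    """Expected 2 x 2 counts and predictive values for a screened cohort."""
    cases = round(n * prevalence)
    non_cases = n - cases
    true_pos = round(cases * sensitivity)
    true_neg = round(non_cases * specificity)
    false_neg = cases - true_pos      # missed cases
    false_pos = non_cases - true_neg  # healthy people referred for work-up
    return {
        "false_neg": false_neg,
        "false_pos": false_pos,
        "ppv": true_pos / (true_pos + false_pos),
        "npv": true_neg / (true_neg + false_neg),
    }


# Hypothetical cohort of 1,000 people with an assumed 10% prevalence.
for name, sn, sp in [("BHA", 1.00, 0.85), ("eSAGE", 0.71, 0.90)]:
    r = screening_outcomes(1000, 0.10, sn, sp)
    print(f"{name}: missed {r['false_neg']}, false alarms {r['false_pos']}, "
          f"PPV {r['ppv']:.2f}, NPV {r['npv']:.3f}")
```

Under these assumptions, the first pair misses no cases but flags 135 healthy people for follow-up (PPV 0.43, NPV 1.000), while the second misses 29 cases and flags 90 healthy people (PPV 0.44, NPV 0.965). In screening, the follow-up assessment can absorb the extra false positives, but missed cases cannot be recovered.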

Many studies also evaluated the diagnostic accuracy of digital tests in patients with MCI. Some tests, such as BHA, achieved acceptable results even for MCI (28), but in all cases diagnostic accuracy was lower for MCI than for dementia. Digital tests that detect MCI could be very effective for the early detection and better management of cognitive disorders, especially dementia, and research in this area has intensified in recent years.

In some of the reviewed studies, the authors used virtual reality and machine learning techniques for cognitive testing (30, 42, 53). New technologies such as virtual reality, the Internet of Things (IoT), and chatbots, together with the development of intelligent cognitive tests, offer many opportunities for future work in this area.

4.1. Conclusions

Digital cognitive tests, especially self-administered mobile-based tests, can effectively facilitate the screening and timely diagnosis of dementia. They can play an important role in remote cognitive assessment and dementia diagnosis during the COVID-19 pandemic and similar situations, and they could support the implementation of national dementia screening programs.

Diagnostic performance, administration time, ease of use (especially for older adults and people with limited computer and health literacy), ease of access, and the ability to communicate with healthcare centers and receive advice from healthcare providers are important factors in the acceptability and efficiency of digital tests. Developers of digital tests should therefore pay close attention to these factors.