1. Background

A faculty, as an educational system, consists of various educational groups, faculty members, researchers, students, and administrative staff. Each faculty member contributes to different areas, namely teaching, research, and management (1). A faculty is a place where different types of conferences and conventions are held. The data related to these activities, with the participation of faculty members, are facts and information resulting from academic endeavors (2). The data of a faculty refers to the information linked with the academic performance of its professors and lecturers, such as details of academic services and contributions, completed courses, the number of annual research publications, and the number of committees of which the faculty member is a member (1, 3).

Although the collection, management, and reporting of faculty data are crucial for each faculty member and the institution itself as a complete establishment, numerous gaps exist in this area (4). While a faculty member may be involved in several activities, most of these activities are not documented and recognized because the university lacks a central system for effectively recording these data and presenting a comprehensive report of such activities and performance feedback (5).

Currently, different independent systems host faculty data (6). Because these systems do not communicate with one another, the data remain confined to isolated silos (7). Retrieving data from multiple systems is often a manual and difficult process for representatives and faculty members. Since these data are not analyzed or merged, their trends and inter-relationships cannot be exploited, which is a lost opportunity to discover information and extract knowledge (8).

Currently, data recording is inadequate in most higher education institutions, and the recording and sharing of data between different systems are only partially automated. Therefore, faculty members and managers have to spend a lot of time and effort on manual data entry to gather or track the details of academic activities and assessments (9). Although manual data entry is unavoidable in some cases, automation and interoperability between systems can prevent duplicate data recording. In addition, faculty members may have inadequate time and skills to perform statistical analyses on data (e.g., finding correlations) and extrapolate valuable interpretations, targeted feedback, or practical complementary objectives (10).

As a data management tool, dashboards are one of the most effective and renowned forms of data objectification (10, 11). A dashboard can be defined as a visualization tool that helps users gain awareness, find trends, plan, and make comparisons, typically through a simple and functional user interface. A dashboard of accumulated data effectively combines multiple sources into a comprehensive summary of important information that faculty members can assimilate at a glance (11). Performance dashboards enable organizations to measure, monitor, and manage business performance more effectively (12, 13). They are built on the foundations of business intelligence and data integration infrastructure and are used for monitoring, analysis, and management (12).

Developing a faculty performance dashboard is useful for quickly and easily sharing information about faculty members' performance with them in a way that helps them better understand the data (14). Observing and interpreting the data presented in large tables and lengthy reports are exhausting and time-consuming tasks for faculty members. By contrast, a dashboard, if designed appropriately, can help faculty members spot their strengths and areas of progress and identify the trends and steps necessary for improvement (15).

Based on the researchers’ explorations, there are substantial gaps in the reporting and management of faculty data. Therefore, it seems necessary to develop a comprehensive dashboard for monitoring and evaluating the performance of educational groups, faculty members, students, and other faculty staff, as well as for monitoring the performance of the faculty in various fields, namely education, research, cultural and student affairs, resource management, and technology and development.

2. Methods

This study will be carried out using a sequential mixed-methods design. In a sequential design (qualitative and then quantitative), the data collection and analysis of one component take place after the data collection and analysis of the other component and depend on the latter's outcomes (16). Mixed methods research combines closed-ended response data (quantitative) and open-ended personal data (qualitative) (17).

This protocol study is registered in the Open Science Framework (OSF) registries database (DOI: 10.17605/OSF.IO/J326S).

2.1. Setting

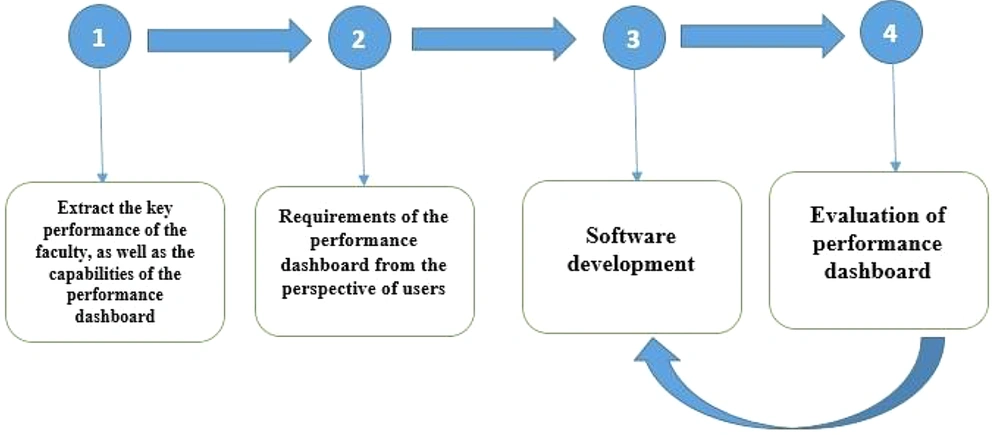

The research setting is the Faculty of Allied Medical Sciences of the AJA University of Medical Sciences in 2023 (from 20 April 2023 to 21 November 2023). The study has obtained ethical approval and will be conducted in four phases (Figure 1 and Table 1).

| Study phase | Goals | Output | Method | Technique | Time |
|---|---|---|---|---|---|
| 1. Extracting the key performance of the faculty and the capabilities of the performance dashboard | Identification of functional and non-functional requirements of the performance dashboard and performance indicators | Functional and non-functional requirements of the performance dashboard; Key performance indicators of the faculty | Systematic review | Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) | From 20 April 2023 to 5 June 2023 |
| 2. Requirements of the performance dashboard from the perspective of users | Identification of the requirements of the performance dashboard from the perspective of users | Functional and non-functional requirements of the performance dashboard; Key performance indicators of the faculty | 2.1. Qualitative methods | Semi-structured interview | From 22 June 2023 to 22 July 2023 |
| | | | 2.2. Quantitative methods | Delphi technique (questionnaire) | From 22 July 2023 to 22 August 2023 |
| 3. Software development | Software production | Performance dashboard software | - | - | From 22 August 2023 to 22 October 2023 |
| 4. Evaluation of the performance dashboard | Evaluation of user satisfaction | Usability and user satisfaction evaluation with the dashboard software | 4.1. Qualitative methods | Think-aloud | From 22 October 2023 to 6 November 2023 |
| | | | 4.2. Quantitative methods | Questionnaire | From 6 November 2023 to 21 November 2023 |

Summary of the Phases of the Protocol Study and the Goals, Outputs, Methods, and Time of Each Phase

2.1.1. Phase 1: Identification of Functional and Non-functional Requirements of the Performance Dashboard and Performance Indicators of the Faculty Through a Systematic Review

This phase aims to extract the key performance indicators of the faculty, as well as the capabilities of the performance dashboard. Data search and extraction phases were previously performed based on the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) checklist (18). In this step, the search was performed using a combination of keywords, including "dashboard[TIAB] OR whiteboard[TIAB]" AND "Quality Indicators, Health Care" [Mesh] OR "Quality Indicators" [TIAB] OR "Key performance indicators" [TIAB] AND faculty[TIAB] OR university[TIAB] in PubMed, ScienceDirect, Web of Science, Scopus, and Google Scholar from 1 until 20 April 2023. The articles were selected based on the inclusion/exclusion criteria in terms of study design and whether or not they assessed performance dashboards at the levels of faculty or university.
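The keyword combination above does not state how its AND and OR operators are grouped. The following minimal sketch shows one plausible reading, in which synonyms are joined with OR inside each concept and the three concepts are joined with AND. This grouping is an assumption made for illustration, not the authors' documented search string, and the helper name `or_group` is hypothetical.

```python
def or_group(terms):
    """Join the terms of one concept with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


# Concept groups built from the keywords listed in the text.
tool_terms = ["dashboard[TIAB]", "whiteboard[TIAB]"]
indicator_terms = [
    '"Quality Indicators, Health Care"[Mesh]',
    '"Quality Indicators"[TIAB]',
    '"Key performance indicators"[TIAB]',
]
setting_terms = ["faculty[TIAB]", "university[TIAB]"]

# Assumed grouping: OR within a concept, AND between concepts.
query = " AND ".join(
    or_group(group) for group in (tool_terms, indicator_terms, setting_terms)
)
print(query)
```

Writing the parentheses explicitly avoids relying on a database's default operator precedence, which can differ between search engines.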

The inclusion criteria were as follows: (1) English articles published in peer-reviewed journals or conferences with an available full text; (2) articles on performance indicators and functionalities of performance dashboards at the levels of faculty or university; (3) articles published from 1 until 20 April 2023.

The exclusion criteria were as follows: (1) review articles, case reports, case studies or study protocols, letters to the editor, correspondences, and conference papers (absence or lack of access to the full text); (2) papers that merely design a performance dashboard at the levels of faculty or university.

For paper selection, three authors (SA, NM, and MGH) checked the titles and abstracts of the papers and removed the irrelevant papers. For eligibility assessment, the papers were independently checked by the mentioned authors. The bibliography check was then conducted by one of the authors (SA).

In data extraction, the indicators are divided into 5 groups, including education, research, cultural and student affairs, resource management, and development and technology (Appendix 1 in the Supplementary File).

2.1.2. Phase 2: Requirements of the Performance Dashboard from the Perspective of Users

This phase is conducted in two steps. First, a qualitative study is conducted to identify the requirements of the performance dashboard software. The inclusion/exclusion criteria for the participants in this phase are given below.

The inclusion criteria were as follows: (1) being a main user of the dashboard; (2) being willing to participate in the study; (3) having at least 5 years of work experience.

The exclusion criterion was as follows: unwillingness to continue cooperation at any stage of the research.

For this purpose, 8 educational group directors and faculty directors are selected by purposive sampling for interviews. The average duration of each interview will be 30 minutes. Semi-structured interviews will continue until data saturation. At this stage, after coordinating with the interviewees and obtaining their informed consent, each interview is recorded using an electronic audio recorder and then transcribed verbatim in Microsoft Word. The interview questions concern the functional and non-functional requirements of the dashboard, as well as the performance preferences of users (Appendix 1 in the Supplementary File). After transcription, the interviews are subjected to code extraction and then thematic analysis, which involves 6 phases: (1) familiarizing oneself with the data; (2) generating initial codes; (3) searching for themes; (4) reviewing themes; (5) defining and naming themes; and (6) reporting the themes (19).

In the second step, a questionnaire is designed to identify the key performance indicators of the faculty using the 2-round Delphi technique. Twenty individuals are purposively selected among academic members, educational group directors, and faculty directors. In the first round of the Delphi technique, a questionnaire with 3-choice questions (disagree, no opinion, and agree) and an open-ended question at the end of each section is completed. In this way, the participants can state whether they think anything should be added to the questionnaire for the second round. In the second round, the proposed indicators are added and subjected to a poll. For data analysis, items with > 75% agreement are accepted, those with an agreement of 50 - 75% enter the second round of Delphi, and those with < 50% agreement are omitted from the questionnaire.
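The agreement thresholds described above can be expressed as a small decision rule. The sketch below is illustrative only: the function name `classify_item`, the indicator names, and the tallies are hypothetical, and it assumes that agreement is the share of "agree" answers among all responses to an item, which the text does not specify.

```python
def classify_item(agree_count, total_responses):
    """Return the Delphi decision for one item from its agreement share."""
    if total_responses <= 0:
        raise ValueError("total_responses must be positive")
    agreement = 100.0 * agree_count / total_responses
    if agreement > 75:
        return "accept"          # more than 75% agreement
    if agreement >= 50:
        return "second_round"    # 50 to 75% agreement, both bounds included
    return "omit"                # less than 50% agreement


# Hypothetical first-round tallies for a 20-member panel (not study data).
first_round = {"indicator_A": 18, "indicator_B": 12, "indicator_C": 7}
decisions = {name: classify_item(n, 20) for name, n in first_round.items()}
print(decisions)
```

Under this rule, an item with exactly 75% or exactly 50% agreement is carried into the second round, because acceptance requires more than 75% and omission requires less than 50%.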

2.1.3. Phase 3: Software Development

The software is coded in C# using Microsoft Visual Studio 2019 and the ASP.NET Core MVC 3.1 framework. The user interface is built with HTML, CSS, JavaScript, and jQuery. Finally, Microsoft SQL Server is used for designing tables and managing the database.

2.1.4. Phase 4: Evaluation of the Performance Dashboard

In this phase, the qualitative method and then the quantitative method are used to evaluate the software. In the qualitative method, the think-aloud protocol will be used to evaluate usability, and in the quantitative method, the users' satisfaction with the dashboard software is assessed using a questionnaire. In this phase, 15 academic members and managers of the faculty who are dashboard software users are chosen. The inclusion/exclusion criteria for the participants in this phase are given below.

The inclusion criteria were as follows: (1) having at least 5 years of work experience; (2) being an academic staff member of an educational group or a faculty manager; (3) being a main user of the dashboard; (4) being willing to participate in the study.

The exclusion criterion was as follows: unwillingness to continue cooperation at any stage of the research.

Think-aloud or concurrent verbalization was borrowed from cognitive psychology (20). In this method, users think aloud while performing a set of specified tasks (21); in other words, they verbalize anything that crosses their minds during the task performance (20). An advantage of this method is that it enables the collection of insights into the difficulties that participants encounter while using the system/product (22). In this method, users are asked to express their suggestions and comments regarding the dashboard while working with the software.

The 20-question Dashboard Assessment Usability Model questionnaire, scored on a five-point Likert scale (1 = completely disagree; 5 = completely agree), will be used to evaluate user satisfaction with the dashboard software. In addition, two open-ended questions are presented to the participants so that they can express their viewpoints and recommendations. This questionnaire will evaluate the dimensions of satisfaction (4 questions), effectiveness (2 questions), efficiency (2 questions), operability (5 questions), learnability (4 questions), user interface aesthetics (1 question), appropriate recognizability (1 question), and accessibility (1 question).
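As an illustration of how the eight dimensions could be scored, the sketch below averages one participant's answers per dimension. The question counts come from the text; the ordering of the items, the function name `dimension_means`, and the example answers are assumptions made for illustration and may not match the actual questionnaire.

```python
from statistics import mean

# Number of questions per dimension, as listed in the text (sums to 20).
DIMENSIONS = [
    ("satisfaction", 4),
    ("effectiveness", 2),
    ("efficiency", 2),
    ("operability", 5),
    ("learnability", 4),
    ("user interface aesthetics", 1),
    ("appropriate recognizability", 1),
    ("accessibility", 1),
]


def dimension_means(responses):
    """Average one participant's 20 Likert answers (1-5) per dimension.

    Assumes the answers are ordered dimension by dimension as in DIMENSIONS;
    the real questionnaire may order its items differently.
    """
    expected = sum(count for _, count in DIMENSIONS)
    if len(responses) != expected:
        raise ValueError(f"expected {expected} answers, got {len(responses)}")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("answers must be integers from 1 to 5")
    scores, start = {}, 0
    for name, count in DIMENSIONS:
        scores[name] = mean(responses[start:start + count])
        start += count
    return scores


# Hypothetical answers from one participant (not study data).
example = [5, 4, 4, 5, 3, 4, 4, 4, 5, 5, 4, 3, 4, 4, 4, 5, 3, 5, 4, 2]
print(dimension_means(example))
```

Averaging within each dimension keeps the scores on the original 1 to 5 scale, so dimensions with different numbers of questions remain comparable.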

The validity and reliability of the questionnaire have been confirmed previously (Cronbach's alpha for reliability: 0.94) (23). In the final step, the data are presented in tables using descriptive statistics such as frequency and percentage. Data analysis is conducted in SPSS v. 21.
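Although the analysis is planned in SPSS, the frequency and percentage tables mentioned above can be sketched in a few lines of Python. The function name `frequency_table` and the sample answers are hypothetical and serve only to show the shape of the output.

```python
from collections import Counter


def frequency_table(answers, scale=(1, 2, 3, 4, 5)):
    """Return (score, frequency, percentage) rows for one question."""
    if not answers:
        raise ValueError("answers must not be empty")
    counts = Counter(answers)
    total = len(answers)
    return [
        (score, counts.get(score, 0), round(100.0 * counts.get(score, 0) / total, 1))
        for score in scale
    ]


# Hypothetical answers of 15 participants to a single question (not study data).
answers = [5, 4, 4, 5, 3, 4, 5, 5, 4, 2, 4, 5, 3, 4, 5]
for score, freq, pct in frequency_table(answers):
    print(f"{score}\t{freq}\t{pct}%")
```

Scores that nobody selected are still listed with a frequency of zero, so every table has one row per point of the Likert scale.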

3. Results

In the first stage, which has already been completed, performance indicators and dashboard features were extracted from the articles that met the inclusion and exclusion criteria.

The performance indicators were divided into 5 areas: education, research, cultural and student affairs, resource management, and development and technology. Each area has its own performance indicators. The performance dashboard features were divided into performance monitoring, evaluation, and resource management. In the second stage of the study, a questionnaire and interview guide were prepared to identify users' needs based on the information extracted from the first phase.

4. Discussion

This study aimed to develop a protocol for the design of a faculty performance dashboard for monitoring, evaluation, and resource management at the faculty level. The steps used for developing this dashboard can provide a basis for designing better performance dashboards for other colleges or universities.

Due to the importance of information in organizations such as universities, it is essential to trace the flow and dimensions of information. The lack of proper management of information resources can impede the achievement of organizational goals and confuse employees when they work with information sources. This leads to redundant work in different departments, repeated retrieval of similar information, and, finally, the flow of this information into organizational databases, where reusing it requires extra time and cost (24, 25). The establishment and use of comprehensive information resources play a strategic role in the qualitative development of universities and their transformation into pioneer organizations. These measures also play a substantial role in achieving the strategic goals of the university (26). The information obtained from the information system provides a powerful management tool in the higher education system (27). Because they provide timely and accurate information, dashboards are considered powerful systems for fulfilling the informational needs of organizations, including universities, and for handling large amounts of organizational data (28).

Performance evaluation is among the capabilities of the faculty performance dashboard, a process through which the function of employees is formally and regularly assessed at certain intervals. Evaluation of the performance of academic members refers to the regular assessment of their educational/research activities and determining to what extent the goals of the educational system are achieved according to predetermined criteria (29, 30). Functional monitoring refers to the real-time observation of the faculty's key performance indicators (29, 30). Faculty resource management encompasses being informed of the status quo of human resources and equipment (29, 30).

In the present study, first, the qualitative method and then the quantitative method are used in the data collection process. In the dashboard evaluation phase, a combination of qualitative and quantitative methods is used.

Studies illustrate the growing importance of mixed methods research for many health disciplines, ranging from nursing to epidemiology (31, 32). Mixed methods approaches require not only the skills of the individual quantitative and qualitative methods but also a skill set to bring the two methods/datasets/findings together in the most appropriate way (31).

Mixed methods research can provide a plethora of advantages for researchers and practitioners who try to gain a more comprehensive and nuanced understanding of their research topic. By offering a richer and deeper data set that can capture the diversity and complexity of the research phenomenon, mixed methods research can enable the triangulation or corroboration of the data or results from different sources or methods, thus increasing the validity or trustworthiness of the research (33). Additionally, it can allow for the exploration or explanation of the findings from one approach with the data or results from another approach, thereby enhancing the interpretation or understanding of the research (32). In the current study, the qualitative method (think-aloud) and a questionnaire will be used in the dashboard evaluation phase. Generally, questionnaires are the most commonly used tools for usability evaluation due to the simplicity of data analysis (34). However, a combination of qualitative and quantitative approaches is suggested to appropriately measure the usability of technologies (34).

Based on another study, the use of the think-aloud protocol for usability evaluation allows participants to share their real-time experience with using the app and stimulates verbal expression of this experience, which is more difficult to achieve using traditional stand-alone usability testing (35). Finally, it can be acknowledged that both quantitative and qualitative methods play a significant role in technology development and progress. While quantitative methods have some advantages, such as cost-effectiveness and higher suitability for studies with a large sample size, qualitative methods (e.g., think-aloud) reveal details about problems that quantitative methods do not usually capture (36). Additionally, qualitative analysis of user behaviors and routines and a variety of other types of information are essential to delivering a product that actually fits the users' needs or desires (37).

Despite the strengths of this study, we may face some challenges while conducting its various phases. For example, in phase 2, the participants may refuse full cooperation in completing the questionnaire or taking part in the interviews due to their busy work schedules. We will try to distribute a considerable number of questionnaires among users to mitigate this challenge. During the implementation phase, the designed software may not be suitably integrated with other organizational systems, thus interfering with information exchange. This challenge will be addressed by writing the software code in an object-oriented programming language.

4.1. Conclusions

A faculty, as an educational system, comprises various educational groups, faculty members, researchers, students, and administrative staff. The management of data records related to the performance and activities of the faculty and its members leads to better monitoring, identification of weaknesses and strengths, and, ultimately, promotion of the faculty's performance. Because dashboards are embedded in educational processes, attention must be paid to the ways that these tools are integrated into educational systems and processes. In fact, a dashboard is a data management tool that can be used for monitoring and evaluating a faculty's performance. The final product of this study is a dashboard for monitoring, evaluating performance, and managing resources at the faculty level.