1. Background

The validity of an assessment refers to the evidence presented to support or refute the meaning or interpretation assigned to the assessment data (1). Validity is therefore the degree to which a test measures what it is intended to measure. Evaluating validity includes test item analysis, which is usually performed after an assessment has been completed to examine candidates’ responses to individual items and to judge the quality of those items and of the assessment as a whole. The analysis yields the difficulty index or passing index (PI), discrimination index (DI), and distractor efficiency (DE) of each item, which together reflect the quality of the test items. The PI of an item is commonly defined as the proportion of students who answered it correctly. The DI is the degree to which an item discriminates between students of high and low achievement. The DE assesses the plausibility of the distractors in an item, that is, whether they are able to draw students away from the correct answer (2). Any distractor selected by fewer than 5% of students is considered a non-functional distractor (NFD).

Item analyses assess the quality of individual test items and of the test as a whole by examining how students respond to them. The analysis helps to identify faulty items (3, 4); to identify low performers and their learning problems, such as misconceptions, as a guide for remedial action; and, equally important, to improve teachers’ skills in constructing high-quality test items (5). Test items that do not meet the criteria for a well-designed item can then be revised or eliminated, and a viable question bank can be developed (6, 7).

The Doctor of Medicine (MD) curriculum at Universiti Putra Malaysia (UPM) consists of two phases, preclinical and clinical, which run for two and three years, respectively. Students sit for a summative assessment at the end of each phase. They must pass the preclinical examination to proceed to the clinical phase, and in the fifth year they must sit and pass the clinical phase examination before being awarded the degree of medicine. Various tools are used in the written examination, such as multiple-choice questions (MCQs), short answer questions, and modified essay questions. The MCQ is one of the most important and well-established written assessment tools; it is widely used because it can assess a broad range of concepts in less time, and its scoring is objective and reliable (8). The type A MCQ item (single best response) consists of a ‘stem’ or ‘vignette’, followed by a ‘lead-in’ statement and several options. The correct option is called the ‘key’, and the incorrect options are called ‘distractors’.

We analyzed the MCQs given in the preclinical and clinical phase examinations in 2017 and 2018 to determine the quality and validity of our test items. PI, DI, and DE were compared between the two years and between the two phases of the examinations. Based on the findings, good-quality and revised items will be stored in the question bank, and faulty items will be discarded.

2. Methods

This cross-sectional study was conducted in the Faculty of Medicine and Health Sciences, UPM, during the preclinical and clinical phase examinations in 2017 and 2018. In 2017, 84 second-year MD students took the preclinical phase examination and 128 final-year students took the clinical phase examination. In 2018, 100 second-year students and 120 final-year students took the preclinical and clinical phase examinations, respectively. In both years, the preclinical phase examination comprised 40 MCQs with four options each, and the clinical phase examination comprised 80 MCQs with five options each. Each correct response was awarded five marks, and there was no negative marking for wrong answers. Pre-validation of the items was performed by the faculty’s vetting committee.

2.1. Item Analysis

Post-validation was performed automatically by item analysis using an optical mark recognition (OMR) machine (Scantron iNSIGHT 20 OMR scanner, Minnesota, USA). The scores of all students in each examination paper were ranked in order of merit. The upper 27% of students were considered ‘top’ students and the lower 27% ‘poor’ students. Each item was analyzed for difficulty and discrimination indices according to Hassan and Hod (5) and Abdul Rahim (9):

(1) Difficulty index or passing index (PI), using the formula: PI = (H + L)/N

(2) Discrimination index (DI), using the formula: DI = (H − L)/A

where H = number of ‘top’ students answering the item correctly; L = number of ‘poor’ students answering the item correctly; N = total number of students in the ‘top’ and ‘poor’ groups; and A = number of students in each 27% group (so N = 2A).
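For illustration only, the two formulas can be sketched in Python. This is a minimal sketch, not the OMR software’s procedure: the function name `item_pi_di`, the rounding of 27% to a whole number of students, and the tie-breaking rule for students with equal total scores are all assumptions.

```python
def item_pi_di(total_scores, item_correct, fraction=0.27):
    """Passing index (PI) and discrimination index (DI) for a single item.

    total_scores: each student's total test score.
    item_correct: True where that student answered this item correctly.
    fraction: share of students in each extreme group (27% in this study).
    """
    if len(total_scores) != len(item_correct):
        raise ValueError("total_scores and item_correct must have the same length")
    n_students = len(total_scores)
    # A: size of each extreme group. Rounding to the nearest student is an assumption.
    a = max(1, round(fraction * n_students))
    # Rank students by total score, best first. sorted() is stable, so tied
    # students keep their input order (an assumed tie-breaking rule).
    ranked = sorted(range(n_students), key=lambda i: total_scores[i], reverse=True)
    top, poor = ranked[:a], ranked[-a:]
    n_top_correct = sum(1 for i in top if item_correct[i])    # H
    n_poor_correct = sum(1 for i in poor if item_correct[i])  # L
    n = 2 * a                                                 # N
    pi = (n_top_correct + n_poor_correct) / n
    di = (n_top_correct - n_poor_correct) / a
    return pi, di


# Ten hypothetical students and their responses to one item.
scores = [90, 85, 80, 70, 65, 60, 55, 50, 40, 30]
correct = [True, True, True, True, False, True, False, False, True, False]
pi, di = item_pi_di(scores, correct)
print(f"PI = {pi:.2f}, DI = {di:.2f}")
```

For these ten hypothetical students, A = 3, H = 3 and L = 1, so PI = 4/6 and DI = 2/3 (both printed as 0.67).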

The interpretation of PI and DI values is presented in Table 1.

(3) Distractor efficiency (DE): a non-functional distractor (NFD) is a distractor selected by fewer than 5% of students. Based on the number of NFDs in an item, DE ranges from 0% to 100%. For an item with four options (three distractors), three, two, one, or no NFDs give a DE of 0%, 33.3%, 66.7%, and 100%, respectively. For an item with five options (four distractors), four, three, two, one, or no NFDs give a DE of 0%, 25%, 50%, 75%, and 100%, respectively.
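The DE rule for a single item can be sketched as follows. This is a minimal sketch that assumes the 5% threshold is applied to the number of examinees and that per-option response counts are available from the OMR output; the function `distractor_efficiency` and its parameters are hypothetical.

```python
def distractor_efficiency(option_counts, key, n_students=None, threshold=0.05):
    """Return (number of NFDs, DE in %) for one item.

    option_counts: number of students choosing each option, e.g. {"A": 70, ...}.
    key: label of the correct option.
    n_students: examinees sitting the paper; defaults to the sum of the option
        counts, which assumes no student left the item blank.
    threshold: a distractor chosen by fewer than this share of students is an NFD.
    """
    if n_students is None:
        n_students = sum(option_counts.values())
    distractors = [opt for opt in option_counts if opt != key]
    nfd = sum(1 for opt in distractors if option_counts[opt] < threshold * n_students)
    de = 100.0 * (len(distractors) - nfd) / len(distractors)
    return nfd, de


# Four-option item: option D drew 3 of 100 students (< 5%), so it is an NFD.
print(distractor_efficiency({"A": 70, "B": 20, "C": 7, "D": 3}, key="A"))
```

Here one of three distractors is non-functional, giving a DE of about 66.7%, in line with the four-option scheme described above.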

3. Results

A total of 240 MCQs were analyzed, and the mean PI and DI were determined. Overall, the difficulty level of the questions was similar in the preclinical and clinical phase examinations (Table 2). Interestingly, the mean PI decreased in 2018 in both the preclinical and clinical phase examinations, indicating that the questions were more difficult that year. The mean DI was similar in all examinations except for a lower DI in the 2018 clinical phase examination.

| Parameter | Preclinical 2017 | Preclinical 2018 | Clinical 2017 | Clinical 2018 |
|---|---|---|---|---|
| Difficulty or passing index (PI) | 0.60 ± 0.24 (Acceptable) | 0.55 ± 0.23 (Ideal) | 0.60 ± 0.21 (Acceptable) | 0.56 ± 0.28 (Ideal) |
| Discrimination index (DI) | 0.25 ± 0.16 (Good) | 0.31 ± 0.16 (Good) | 0.25 ± 0.15 (Good) | 0.20 ± 0.11 (Poor) |

Values are expressed as mean ± SD.

For the preclinical phase examination, the numbers of ‘difficult’ and ‘very easy’ items were identical in both years (Table 3), and half of the 40 MCQs were ‘ideal’ or ‘acceptable’. In the clinical phase examination, 54% of the items were ‘ideal’ or ‘acceptable’ in 2017, but this fell to 34% in 2018 owing to a marked increase in the percentage of ‘difficult’ items (from 8.75% to 26.25%).

| Difficulty or Passing Index (PI) | Preclinical 2017 | Preclinical 2018 | Clinical 2017 | Clinical 2018 |
|---|---|---|---|---|
| Difficult | 7 (17.50) | 7 (17.50) | 7 (8.75) | 21 (26.25) |
| Ideal | 12 (30.00) | 15 (37.50) | 29 (36.25) | 18 (22.50) |
| Acceptable | 8 (20.00) | 5 (12.50) | 14 (17.50) | 9 (11.25) |
| Very easy | 13 (32.50) | 13 (32.50) | 30 (37.50) | 32 (40.00) |

Values are expressed as No. (%).

The combined percentage of ‘excellent’ and ‘good’ items in the preclinical phase examination increased from about 38% in 2017 to 70% in 2018 (Table 4). In the 2017 clinical phase examination, half of the questions were ‘excellent’ or ‘good’; however, this fell to 36% in 2018 because of the high percentage of ‘poor’ questions (53%). In addition, five questions in that paper had a DI of zero; one of these had a PI of one and another a PI of zero.

| Discrimination Index (DI) | Preclinical 2017 | Preclinical 2018 | Clinical 2017 | Clinical 2018 |
|---|---|---|---|---|
| Excellent | 11 (27.50) | 15 (37.50) | 23 (28.75) | 6 (7.50) |
| Good | 4 (10.00) | 13 (32.50) | 17 (21.25) | 23 (28.75) |
| Acceptable | 9 (22.50) | 3 (7.50) | 10 (12.50) | 9 (11.25) |
| Poor | 16 (40.00) | 9 (22.50) | 30 (37.50) | 42 (52.50) |

Values are expressed as No. (%).

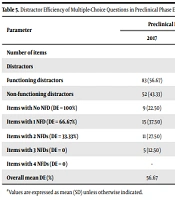

The total number of NFDs decreased in 2018 in both examination phases (Table 5), and both 2018 examinations had more items with no NFD than in the previous year. In both years, the preclinical phase examination had more items with no NFD than the clinical phase examination. The overall mean DE likewise increased in 2018, with the 2018 preclinical phase examination showing the highest mean DE of all four examinations.

| Parameter | Preclinical 2017 | Preclinical 2018 | Clinical 2017 | Clinical 2018 |
|---|---|---|---|---|
| Number of items | 40 | 40 | 80 | 80 |
| Distractors | 120 | 120 | 320 | 320 |
| Functioning distractors | 68 (56.67) | 86 (71.67) | 155 (48.44) | 177 (55.31) |
| Non-functioning distractors | 52 (43.33) | 34 (28.33) | 165 (51.56) | 143 (44.69) |
| Items with no NFD (DE = 100%) | 9 (22.50) | 13 (32.50) | 3 (3.75) | 8 (10.00) |
| Items with 1 NFD (DE = 66.67% preclinical; 75% clinical) | 15 (37.50) | 20 (50.00) | 21 (26.25) | 24 (30.00) |
| Items with 2 NFDs (DE = 33.33% preclinical; 50% clinical) | 11 (27.50) | 7 (17.50) | 28 (35.00) | 32 (40.00) |
| Items with 3 NFDs (DE = 0 preclinical; 25% clinical) | 5 (12.50) | 0 (0.00) | 24 (30.00) | 9 (11.25) |
| Items with 4 NFDs (DE = 0; clinical only) | - | - | 4 (5.00) | 7 (8.75) |
| Overall mean DE (%) | 56.67 | 71.67 | 48.44 | 55.31 |

Values are expressed as No. (%) unless otherwise indicated.
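Because every item in a given paper has the same number of distractors, the mean of the per-item DE equals the percentage of functioning distractors. The sketch below, which uses only the item counts in Table 5, recomputes both; for the 2017 preclinical paper, the item counts give 3 × 9 + 2 × 15 + 1 × 11 = 68 functioning distractors out of 120, i.e., 56.67%. The helper names are hypothetical.

```python
def functioning_distractors(items_by_nfd, k):
    """Total functioning distractors, given {number of NFDs: number of items}."""
    return sum(count * (k - nfd) for nfd, count in items_by_nfd.items())


def mean_de(items_by_nfd, k):
    """Mean of the per-item DE (%) for items that each have k distractors."""
    n_items = sum(items_by_nfd.values())
    total = sum(count * 100.0 * (k - nfd) / k for nfd, count in items_by_nfd.items())
    return total / n_items


# Items with 0, 1, 2, ... NFDs, and distractors per item, taken from Table 5.
TABLE5 = {
    "Preclinical 2017": ({0: 9, 1: 15, 2: 11, 3: 5}, 3),
    "Preclinical 2018": ({0: 13, 1: 20, 2: 7, 3: 0}, 3),
    "Clinical 2017": ({0: 3, 1: 21, 2: 28, 3: 24, 4: 4}, 4),
    "Clinical 2018": ({0: 8, 1: 24, 2: 32, 3: 9, 4: 7}, 4),
}

for exam, (dist, k) in TABLE5.items():
    n_distractors = k * sum(dist.values())
    f = functioning_distractors(dist, k)
    share = 100.0 * f / n_distractors
    print(f"{exam}: mean DE {mean_de(dist, k):.2f}%, "
          f"functioning {f}/{n_distractors} ({share:.2f}%)")
```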

4. Discussion

The end-of-phase examination in the UPM MD program is a high-stakes summative assessment held at the end of the preclinical and clinical phases. The preclinical phase examination determines whether students are eligible to progress to the clinical phase, while final-year students must pass the clinical phase examination to graduate. Valid assessment tools are therefore needed to measure students’ knowledge, skills, and attitudes. The MCQ is one of the tools used to test the ‘knows’ and ‘knows how’ levels of Miller’s pyramid (11). It is useful for measuring factual recall, but it can also test higher-order thinking skills, such as the application, analysis, synthesis, and evaluation of knowledge, which are important for medical graduates. Post-validation of test items by analyzing PI, DI, and DE is a simple yet effective method of assessing the validity of a test. In the present study, we analyzed the MCQs from the preclinical and clinical phase examinations taken by two different cohorts of students. Each MCQ in the preclinical phase examination had four options, whereas each MCQ in the clinical phase examination had five.

The mean PI was similar in both examinations in both years. This suggests that increasing the number of options from four to five does not have a substantial impact on the difficulty level of the examination. This is consistent with the study by Schneid et al., who found no significant differences in difficulty among MCQs with three, four, or five options (12). In contrast, Vegada et al. found a slight decrease in difficulty when the number of options was reduced from five to four, and the items became much easier when it was reduced to three (13). They concluded that items with fewer options were easier because of the higher probability of selecting the correct answer by random guessing.

The preclinical phase examination showed a consistent level of item difficulty in both years. However, half of the questions were ‘very easy’ or ‘difficult’. Such questions seem unsuitable for a high-stakes examination because they are unable to discriminate between good and weak students, and they should be revised by changing either the vignette or the options. All difficult questions should be reviewed for language and grammar, ambiguity, and controversial statements (5). Some previous studies reported PI as a percentage, that is, the percentage of correct answers among all responses (6, 7, 14). An item with a PI between 30% and 70% is considered acceptable, i.e., neither too easy nor too difficult (6, 14). Studies by Sim and Rasiah (7) and Rao et al. (14) reported a mean PI comparable to our finding, ranging from 50% to 60%. Such desirable findings are likely attributable to proper vetting and training in constructing MCQs. It has also been suggested that continuous training and feedback be given to teachers so that the number of ‘difficult’ and ‘very easy’ questions can be reduced in the future.

The mean DI for the preclinical phase examination in both years and for the 2017 clinical phase examination ranged from 0.25 to 0.31, values considered ‘good’. An earlier study showed that a comparable mean DI across years indicated that the quality of questions had remained consistent over time (5). However, the mean DI of the clinical phase examination decreased in 2018. This may be due to the high proportion (66%) of ‘very easy’ and ‘difficult’ questions in that examination; consequently, about 53% of the questions were rated ‘poor’ and were unable to discriminate between good and weak students. Ideally, a test item should distinguish ‘good’ students from ‘poor’ ones, with more ‘good’ than ‘poor’ students answering it correctly (9). In the present study, some questions had a zero or negative DI. A zero DI means that the item was non-discriminating: all students answered it correctly, equal numbers of ‘good’ and ‘poor’ students answered it correctly, or none of the ‘good’ and ‘poor’ students answered it correctly. A negative DI indicates that more ‘poor’ than ‘good’ students answered the item correctly. One question had both a zero DI and a zero PI, meaning that very few students answered it correctly and none of them were in the ‘top’ or ‘poor’ groups. We speculate that this was due to ambiguous framing of the question and poor preparation of students (7, 15, 16). Another question had a zero DI and a PI of one, meaning that all students in the ‘top’ and ‘poor’ groups answered it correctly, probably because it was too easy. Questions that are too difficult or too easy may be rated ‘poor’ because of the dome-shaped relationship between PI and DI (6, 17). Such questions are not useful, may reduce the validity of the test, and should therefore be eliminated.
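The dome shape follows from the PI and DI formulas in Section 2.1. Since H + L = N × PI = 2A × PI and both H and L lie between 0 and A, DI = (H − L)/A is largest when L = 0 (possible only if PI ≤ 0.5) or when H = A (if PI > 0.5), so DI ≤ 2 × min(PI, 1 − PI). An item with a PI of 0 or 1 must therefore have a DI of zero. The brute-force check below is an illustrative sketch with hypothetical function names, not part of the original analysis.

```python
def max_di(pi):
    """Upper bound on DI for a given PI when the 'top' and 'poor' groups both have size A."""
    return 2 * min(pi, 1 - pi)


def bound_holds(a):
    """Check every possible (H, L) pair for groups of size a against max_di."""
    for h in range(a + 1):
        for low in range(a + 1):
            pi = (h + low) / (2 * a)
            di = (h - low) / a
            if di > max_di(pi) + 1e-12:  # small tolerance for float rounding
                return False
    return True


print(bound_holds(23))           # 23 per group, roughly 27% of 84 examinees
print(max_di(0.0), max_di(1.0))  # PI of 0 or 1 leaves no room for discrimination
print(max_di(0.5))               # the peak of the dome
```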

The 2018 preclinical phase examination had more ‘excellent’ and ‘good’ questions and fewer ‘poor’ questions than in 2017. This suggests that the preclinical lecturers have improved considerably in constructing MCQs through continuous training and feedback. In contrast, less than 8% of the questions in the 2018 clinical phase examination were rated ‘excellent’, and the number of ‘poor’ questions increased compared with 2017. Several possible reasons were identified: some clinical lecturers were new and probably unfamiliar with the test format, and some had never attended any training in test item construction. Questions should undergo thorough, multi-level vetting by peers, including both content experts and non-content experts, before they are used in an examination. Feedback from this analysis should be given to all teachers so that they can reflect on and revise their questions accordingly.

The number of NFDs also affects the discriminating power of an item (14). In this study, more than 20% of the preclinical phase MCQs had no NFDs in both years, and about one-third of the 2018 preclinical phase MCQs had three functioning distractors (DE = 100%). The clinical phase items, which had more options, tended to have more NFDs in both years, and no more than 10% of the clinical phase MCQs had a DE of 100%. This suggests that it was difficult for teachers to develop four equally plausible distractors. The 2018 preclinical phase examination had no question with 0% DE and showed the highest overall mean DE of all the examinations, indicating a marked improvement by preclinical lecturers in writing plausible distractors and avoiding NFDs. These findings suggest that reducing the number of options may increase the plausibility of the distractors in an item, although it may also reduce the item’s difficulty.

A meta-analysis by Rodriguez found that three options per item are adequate (18); although three-option items are easier, they are more discriminating and more reliable. This is supported by a more recent study, which found that questions with as few as three options still had good reliability and were less laborious to construct (19). However, with three options a student has a higher chance (1 in 3, versus 1 in 4 with four options) of answering an item correctly by random guessing. Royal and Dorman showed that 3-option and 4-option MCQs had similar psychometric properties, indicating that the former are as effective as the latter (20). Nevertheless, the traditional four-option MCQ should be retained until further research clarifies the effects of 3-option MCQs on guessing strategies and cut-score decisions, so as to avoid any unintended consequences for validity (21).
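The guessing argument can be made concrete with a binomial model. This sketch assumes blind guessing on every item with no negative marking (as in this study), a hypothetical 40-item paper, and a hypothetical pass mark of 20 correct answers; it ignores partial knowledge, which in practice lets students eliminate implausible distractors and improves their odds. The function name is hypothetical.

```python
from math import comb


def p_pass_by_guessing(n_items, n_options, pass_mark):
    """Probability of getting at least pass_mark items right by blind guessing.

    Assumes every item is answered at random with equal probability per option,
    so the number correct follows a binomial distribution.
    """
    p = 1 / n_options
    return sum(
        comb(n_items, k) * p**k * (1 - p) ** (n_items - k)
        for k in range(pass_mark, n_items + 1)
    )


# Hypothetical 40-item paper with a hypothetical pass mark of 20 correct (50%).
for n_options in (3, 4, 5):
    expected = 40 / n_options
    prob = p_pass_by_guessing(40, n_options, 20)
    print(f"{n_options} options: expected {expected:.1f} correct, P(pass) = {prob:.2e}")
```

Under these assumptions a pure guesser expects about 13 of 40 correct with three options, 10 with four, and 8 with five, and the probability of reaching the pass mark falls sharply as options are added.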

The present study highlights several findings. First, increasing the number of options did not appear to affect the difficulty level of the questions, but it appeared to reduce their discriminating power: questions with more options tended to have a lower DI and more non-functional distractors. We therefore suggest standardizing the number of options to four in both the preclinical and clinical phase examinations. Second, preclinical teachers showed greater improvement than clinical teachers in constructing test items with plausible distractors and an ideal difficulty level. Lack of motivation and time constraints may be challenges that prevent clinical teachers from constructing good-quality items (22). Although the faculty’s guidelines for constructing examination questions are available for reference, training with continuous follow-up and feedback is important to reduce item flaws and improve item-writing skills. Institutional support for faculty development programs is crucial to ensure reliable and valid assessment strategies, especially for high-stakes examinations.

The role of vetting committees in medical schools in evaluating the content, language, and technical aspects of all questions has been described in the literature (23, 24). Vetting sessions should be more thorough to ensure the validity of test items by removing any flaws and making the items as understandable and clear as possible (25). In addition, more time and resources are needed to develop an assessment blueprint that ensures all items are aligned with the learning objectives. A well-developed blueprint also specifies the depth of knowledge and level of difficulty for each content area.

Several limitations of this study should be noted. First, it was confined to one educational setting, which limits the generalizability of its findings; any attempt to extrapolate them to other settings should be made with caution. Second, other parameters, such as internal consistency and the correlation between PI and DI, were not measured. Lastly, variables such as previous training in writing MCQs and students’ characteristics were not controlled for in the analysis, which might have affected the findings.

4.1. Conclusions

The findings suggest standardizing the number of options to four, as five options did not substantially change the difficulty level of the questions, whereas four-option items discriminated better between high and low achievers. Four options would also make it easier for teachers to prepare MCQs with equally plausible distractors. More training is required for teachers, especially in the clinical phase, to bring item quality up to the level seen in the preclinical phase. Feedback should be given to all teachers after each analysis so that they can reflect and make improvements. Good-quality items should be stored in the question bank, and poor ones discarded.