1. Background

Evaluation is one of the important dimensions of a teacher's educational activities, turning teaching from a static into a dynamic process (1). Appropriate evaluation identifies the strengths and weaknesses of education, so that strengths can be reinforced and the educational system can be reformed (2). A lack of effective clinical evaluation methods can lead to a decline in students' clinical skills and reduce their efficiency and effectiveness in providing health services (3). Clinical performance evaluation aims to improve productivity and quality, and it is performed to ensure that students have mastered the skills necessary to save patients' lives (4). Evidence shows that, in most universities, medical students are clinically evaluated with self-made forms that lack sufficient validity and reliability (5). To solve this problem, newer tests such as the Portfolio, Logbook, Mini-CEX, OSCE, and DOPS have recently been used to evaluate students' clinical skills (6). The Logbook method, however, focuses on the number of procedures performed rather than on their quality (2).

Direct observation of clinical skills, which is designed both to evaluate clinical skills and to give feedback, is one of the evaluation methods that medical education specialists consider effective (5). Operating room technology is a practical profession. The operating room involves a wide variety of procedures, a heavy workload and heavy responsibilities, the need for speed and precision, rapid patient turnover, frequently unpredictable work, sudden high-risk incidents, and acute, severe emergencies, all of which must be managed (6). Thus, weaknesses in any aspect of the clinical practice of operating room technology students can threaten patients' health (7).

As mentioned above, evaluation identifies and describes the usefulness of education and is an appropriate tool for modifying training goals, plans, and methods (8). Evaluation is a key activity in educational technology, and educational goals cannot be achieved without it (9). The evaluation commonly used in clinical settings is unstructured and sometimes subjective. A previous study found that operating room technology students were not satisfied with their evaluations; they attributed this to the irrelevance of the clinical evaluation tools to practical conditions and to the lack of accurate, objective, and clear criteria (10).

Clinical assessment of students through direct observation of procedural skills (DOPS) in practical situations helps ensure their ability to cope with the specific conditions of patients (11). DOPS is one of the newest methods of clinical evaluation; it was first implemented at the Royal University of Medicine in England and has been practiced since 1994 at the Australian University of Medical Sciences (12). In this method, the student is directly observed while performing a real procedure in a real environment, so that the learner's practical skills are evaluated objectively and in a structured way (4). The evaluator records the observations on a validated checklist, and feedback is given to the learner based on these real, objective observations. Observation of the skill by several evaluators, each followed by feedback on the problems identified, can therefore eventually lead to the improvement and correction of the trainee's skill (13). Each test lasts 20 minutes: 15 minutes of observation by the evaluator and 5 minutes of feedback to the learner (14). Numerous studies in Iranian universities of medical sciences have evaluated the validity and reliability of the DOPS method, as well as its effect on clinical skills, in several fields including nursing, dentistry, and midwifery (15). Previous research shows that using DOPS to assess clinical skills can improve clinical performance scores in radiology, nursing, and midwifery students (15-17).

Because no such study had been conducted on operating room students, and because the tasks of students in this field are highly sensitive, the researchers decided to examine the effect of the DOPS method on the clinical performance and skills of operating room students and to compare it with the traditional method.

2. Methods

This quasi-experimental study was performed in 2019 on 30 final-year operating room students who were taking the hospital internship course at the teaching hospitals of Torbat Heydariyeh University of Medical Sciences (Razi and Nohome Day hospitals). The study protocol was approved by the Ethics Committee of Torbat Heydariyeh University of Medical Sciences (code IR.THUMS.REC.1398.036; available at https://ethics.research.ac.ir/EthicsProposalView.php?id=86759).

Before enrollment, the purpose and methods of the research were explained to all participants, including students and educators, and their informed consent was obtained. They were assured that their personal information would be kept confidential and that only general data and statistics would be made public. All participants took part voluntarily.

The study population consisted of all operating room students at Torbat Heydariyeh University of Medical Sciences who were taking an internship course (semesters 6 and 8). The two hospitals were randomly allocated to either the control group (Razi Hospital, 15 students) or the intervention group (Nohome Day Hospital, 15 students). In addition, 10 clinical teachers serving as training examiners were randomly assigned to the control and intervention groups. Before the study began, these teachers completed an educational workshop on new evaluation techniques.

The inclusion criteria were having passed the internship course, not having been evaluated during the current semester by any tool other than DOPS, and willingness to participate in the research. Students who were evaluated fewer than twice with the DOPS method for each of the selected techniques were excluded.

The data collection tool consisted of two parts: the first covered the students' demographic data, and the second was the DOPS checklist, which assessed the individual's skills as a surgical assistant. The researchers prepared an evaluation checklist for each skill based on the related literature, the operating room textbooks recommended as references by the Ministry of Health, and the opinions of operating room faculty members. The selected procedure was a routine, specialized operating room procedure that was likely to be available to all students in the operating room.

The checklists were used only after their validity had been established. The content validity of the researcher-designed questionnaire was assessed by experts and seven operating room faculty members, and the Content Validity Index (CVI) was 0.8. Test-retest reliability gave an intraclass correlation coefficient (ICC) of 0.93, and the reliability of the questionnaire was also confirmed by Cronbach's alpha (α = 0.9).
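To make the reported indices concrete, the following Python sketch computes an item-level content validity index (I-CVI), its scale-level average (S-CVI/Ave), and Cronbach's alpha. The source does not say which CVI variant was used; the 4-point relevance scale, the rule that a rating of 3 or 4 counts as "relevant", and all ratings and scores below are hypothetical assumptions chosen only for illustration. The test-retest ICC is not shown.

```python
from statistics import mean, pvariance


def item_cvi(ratings, relevant_min=3):
    """I-CVI: share of experts rating an item as relevant (assumed >= 3 on a 4-point scale)."""
    return sum(r >= relevant_min for r in ratings) / len(ratings)


def scale_cvi_ave(ratings_by_item):
    """S-CVI/Ave: the mean of the item-level CVIs."""
    return mean(item_cvi(ratings) for ratings in ratings_by_item)


def cronbach_alpha(scores):
    """Cronbach's alpha; scores has one row per student and one column per checklist item."""
    k = len(scores[0])
    item_vars = [pvariance([row[j] for row in scores]) for j in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical relevance ratings from seven experts for three checklist items.
expert_ratings = [
    [4, 4, 3, 4, 3, 4, 4],
    [4, 3, 2, 4, 3, 4, 2],
    [3, 4, 4, 4, 2, 3, 4],
]
# Hypothetical 1-5 Likert scores of five students on the same three items.
student_scores = [
    [4, 4, 5],
    [3, 3, 4],
    [2, 3, 2],
    [5, 4, 5],
    [3, 2, 3],
]

print([round(item_cvi(r), 2) for r in expert_ratings])
print(round(scale_cvi_ave(expert_ratings), 2))
print(round(cronbach_alpha(student_scores), 2))
```

With these made-up scores, alpha comes out at about 0.90. Because the item and total variances are both population variances, the n/(n - 1) factor cancels, so the result is the same as with sample variances.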

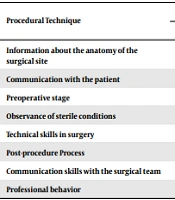

The checklist for each procedure covered the following items: information about the anatomy of the surgical site, communication with the patient, the preoperative stage, observance of sterile conditions, technical skills in surgery, the post-procedure process, communication skills with the surgical team, and professional behavior.

DOPS-based evaluation was performed by training examiners (five faculty members). Students' clinical skills were evaluated with the checklist, using the following steps for each procedure: observation of the skill for 20 - 30 minutes, followed by five minutes of feedback. Each checklist item was rated on a five-point Likert scale: no grade (score 1), below expectations (score 2), borderline (score 3), meets expectations (score 4), and above expectations (score 5).

After the scores were averaged, the highest scores (41 - 50) indicated that the student could perform the procedure well without examiner supervision; a score of 31 - 40 indicated that the student needed partial supervision; a score of 21 - 30 showed that the student could perform the procedure under examiner supervision; a score of 11 - 20 indicated that the student needed constant supervision at all stages; and a score of 1 - 10 was very poor (the student was not allowed to perform the procedure). At this stage, the control group students were evaluated by the school's common method, while the intervention group was evaluated by DOPS using the checklist.
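The banding rule can be written as a small lookup. This is a minimal Python sketch that assumes the averaged checklist score lies on the 1 - 50 scale described above and that the fourth band covers 11 - 20 (the range between the 21 - 30 and 1 - 10 bands); the function name and label strings are hypothetical, not part of the study's materials. Scores falling between two integer bands (for example 40.5) go to the lower band.

```python
def supervision_level(score):
    """Map an averaged DOPS checklist score (1-50) to a supervision band."""
    if not 1 <= score <= 50:
        raise ValueError("score must lie between 1 and 50")
    # (lower bound of band, interpretation), checked from highest to lowest.
    bands = [
        (41, "can perform the procedure without examiner supervision"),
        (31, "needs partial supervision"),
        (21, "can perform the procedure under examiner supervision"),
        (11, "needs constant supervision at all stages"),  # assumed 11-20 band
        (1, "very poor: procedure not allowed"),
    ]
    for lower, label in bands:
        if score >= lower:
            return label


for s in (45, 33.5, 24, 15, 8):
    print(s, "->", supervision_level(s))
```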

The evaluation plan for the intervention group had two stages. In the first stage, the examiner observed the test skills (information about the anatomy of the surgical site, communication with the patient, preoperative stage, observance of sterile conditions, technical skills in surgery, post-procedure process, communication skills with the surgical team, and professional behavior) for 20 - 30 minutes and then gave structured feedback for five minutes. In the second stage, three weeks after the first, the same test was repeated, with emphasis on the students' strengths and weaknesses.

In the control group, the same skills (information about the anatomy of the surgical site, communication with the patient, preoperative stage, observance of sterile conditions, technical skills in surgery, post-procedure process, communication skills with the surgical team, and professional behavior) were assessed in a single stage: the clinical instructor taught the skill and asked the student to repeat it, and, following the common method, the clinical skill was evaluated at that same stage. In the common method used by the School of Nursing and Midwifery and the Operating Room Department, students' skills during the internship period were judged subjectively by the examiner, and scoring was based on this judgment.

The control group served as a comparison: the clinical performance scores of students who received neither the intervention nor feedback were compared with the mean scores of the intervention group, who received both. For the intervention group, the score for each skill was recorded separately on a dedicated checklist at every evaluation stage. Student progress was assessed, the mean score of the two evaluation stages was calculated separately for each skill, and the final score was then recorded.
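The per-skill averaging across the two DOPS stages can be sketched in Python as follows. This is an illustrative sketch only: the function name and scores are hypothetical, and because the text does not state how the final score was derived from the per-skill means, the sketch stops at the per-skill mean and the stage-to-stage change used to track progress.

```python
from statistics import mean


def summarize_two_stages(stage1, stage2):
    """Per-skill mean of the two DOPS stages and the change from stage 1 to stage 2."""
    if stage1.keys() != stage2.keys():
        raise ValueError("both stages must score the same skills")
    return {
        skill: {
            "mean": mean([stage1[skill], stage2[skill]]),
            "change": stage2[skill] - stage1[skill],
        }
        for skill in stage1
    }


# Hypothetical 1-5 scores for one student, first stage and three weeks later.
first = {"observance of sterile conditions": 3, "professional behavior": 4}
second = {"observance of sterile conditions": 5, "professional behavior": 4}

for skill, summary in summarize_two_stages(first, second).items():
    print(skill, summary)
```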

Two evaluations were performed in the intervention group because the method is based on feedback: repeating the test allowed successive feedback to address students' weaknesses if they repeated their mistakes, helping students focus on those mistakes. The examiners observed students while they performed the skills and wrote their observations on the checklist, so that students could receive feedback in an appropriate setting and strengthen their weak areas.

Data were analyzed using SPSS software (version 20) with descriptive statistics (mean ± SD) and analytical statistics (paired t-test and Mann-Whitney test). The Kolmogorov-Smirnov and Shapiro-Wilk tests were used to assess the normality of the data distribution, and P < 0.05 was considered significant.

3. Results

There was no significant difference between the two groups in age or internship score (Mann-Whitney test), in grade point average (t-test), or in gender (chi-square test) (all P > 0.05). The results are shown in Table 1.

| Variables | Control (n = 15) | Intervention (n = 15) | Test | P-Value |
|---|---|---|---|---|
| Age (years), mean ± SD | 23.7 ± 1.2 | 23.4 ± 1.1 | Z = -0.44 | 0.66 |
| Grade point average, mean ± SD | 16.5 ± 1.2 | 16.7 ± 1.1 | T = 0.41 | 0.68 |
| Internship score, mean ± SD | 17.2 ± 0.5 | 17.1 ± 0.9 | Z = -0.63 | 0.52 |
| Gender, female, No. (%) | 9 (60.0) | 10 (66.7) | χ2 = 0.14 | 0.71 |
| Gender, male, No. (%) | 6 (40.0) | 5 (33.3) | | |

Table 1. Comparison of Mean Age, Grade Point Average, Internship Score, and Gender of Students in the Two Groups
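The gender comparison in Table 1 is a chi-square test on a 2 x 2 table. The Python sketch below recomputes it from the counts in the table, assuming a Pearson chi-square without Yates' continuity correction (the source does not state which variant was used); the function name is hypothetical. For one degree of freedom, the upper-tail probability is erfc(sqrt(χ2 / 2)).

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square (no Yates correction) for a 2x2 table, with its df = 1 P-value."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Rows: female, male; columns: control, intervention (counts from Table 1).
chi2, p = chi_square_2x2([[9, 10], [6, 5]])
print(f"chi2 = {chi2:.2f}, P = {p:.2f}")
```

Under this assumption the sketch gives χ2 of about 0.14, matching Table 1, with a P-value of roughly 0.70, close to the reported 0.71.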

The mean ranks of the procedural techniques after the intervention were compared between the groups using the Mann-Whitney test. For all eight procedural techniques, the mean rank was significantly higher in the intervention group than in the control group (P < 0.05), indicating that the intervention had a significant effect on procedural skills (Table 2).

| Procedural Technique | Mean Rank, Control | Mean Rank, Intervention | Z-Value | P-Value |
|---|---|---|---|---|
| Information about the anatomy of the surgical site | 9.4 | 21.6 | -3.99 | < 0.001 |
| Communication with the patient | 9.9 | 21.0 | -3.76 | < 0.001 |
| Preoperative stage | 11.2 | 19.7 | -2.85 | 0.008 |
| Observance of sterile conditions | 9.0 | 21.9 | -4.23 | < 0.001 |
| Technical skills in surgery | 11.3 | 19.7 | -2.85 | 0.008 |
| Post-procedure process | 9.6 | 21.3 | -3.87 | < 0.001 |
| Communication skills with the surgical team | 10.4 | 20.5 | -3.43 | 0.001 |
| Professional behavior | 10.6 | 20.3 | -3.40 | 0.002 |

Table 2. Comparison of Mean Ranks of Procedural Techniques in the Intervention and Control Groups (Mann-Whitney Test)
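Table 2 reports mean ranks rather than mean scores. The Python sketch below shows how mean ranks, the U statistic, and a tie-corrected normal-approximation Z are obtained for two independent groups; the scores are hypothetical, and the study's raw data are not available. With the control group passed first, Z is negative when the control group ranks lower, matching the sign convention in Table 2. The P-value here is asymptotic, so it need not match what SPSS reports for small samples.

```python
import math
from collections import Counter


def average_ranks(values):
    """Ranks 1..n; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # 0-based positions i..j -> 1-based average rank
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def mann_whitney(control, intervention):
    """Mean ranks, U and tie-corrected normal-approximation Z for two independent groups."""
    n1, n2 = len(control), len(intervention)
    n = n1 + n2
    pooled = list(control) + list(intervention)
    ranks = average_ranks(pooled)
    rank_sum_control = sum(ranks[:n1])
    u = rank_sum_control - n1 * (n1 + 1) / 2
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1))))
    z = (u - n1 * n2 / 2) / sigma
    return {
        "mean_rank_control": rank_sum_control / n1,
        "mean_rank_intervention": sum(ranks[n1:]) / n2,
        "U": u,
        "Z": z,
        "P_two_sided": math.erfc(abs(z) / math.sqrt(2)),  # asymptotic
    }


# Hypothetical 1-5 checklist scores for one technique.
result = mann_whitney([2, 3, 3, 4], [4, 5, 5, 5])
print(result)
```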

Mean clinical performance scores were compared between the groups using the Mann-Whitney test. The mean clinical performance score was significantly higher in the intervention group than in the control group (P < 0.05), suggesting that evaluating clinical skills with the DOPS method positively affected the clinical performance of operating room students (Table 3).

| Variable | Control | Intervention | Z-Value | P-Value |
|---|---|---|---|---|
| Clinical skills score, mean ± SD | 23.1 ± 5.2 | 37.9 ± 3.5 | -4.5 | < 0.001 |

Table 3. Comparison of Mean Clinical Skills Assessment Scores of Operating Room Students Between the Intervention and Control Groups

4. Discussion

This study aimed to investigate the effect of the DOPS assessment method on the clinical skills of operating room students at Torbat Heydariyeh University of Medical Sciences. The results showed that using the DOPS method improved the quality of these students' clinical skills. Consistent with this finding, several previous studies showed that, compared with routine methods, DOPS improved the learning approaches and clinical performance of students in other medical science fields (18, 19). Holmboe et al. (20) and Chen et al. (21), studying medical students, observed that DOPS increased students' clinical skills and self-confidence. Habibi et al. (22) showed that using both DOPS and Mini-CEX improved nursing students' clinical skills during procedures compared with traditional methods. Tohidnia et al. (23), studying radiology students, reported that clinical performance scores were significantly higher with DOPS than with the traditional method. They also found that clinical performance improved from the preliminary phase to the DOPS evaluation phase, which could be due to appropriate feedback and the elimination of functional weaknesses (23).

Beyond its use in assessment, the DOPS method can serve as an educational tool for empowering medical students (15). In contrast, Sohrabi et al. (15) reported that, because of its weaknesses, DOPS could not be used as a useful educational tool for improving students' clinical performance. These weaknesses included stress induction, time constraints for participants, and inconsistency among evaluators. Similarly, Ahmed et al. (24) reported in their review that no assessment method was completely valid and reliable, and they therefore proposed combining clinical skills assessment methods. Despite these weaknesses, DOPS improved the clinical performance of operating room students in this study compared with the traditional method, which may be due to structured and timely feedback on students' clinical performance that helped them recognize and overcome their weaknesses. Therefore, we suggest that DOPS be examined on a broader scale, and compared with other methods, for evaluating the performance of operating room students in different procedures, as well as students in other fields of medical sciences.

4.1. Conclusion

Given the importance of evaluation in medical education, an effective and appropriate evaluation method is needed. The findings of this study showed that DOPS, through direct observation and structured feedback to students during training, significantly improved the clinical skills of operating room students; thus, it can be an effective alternative to conventional methods of clinical skills assessment.