1. Context

Objective structured clinical examination (OSCE) is one of the most important assessment methods for both undergraduate and postgraduate students in medical education. As Zayyan stated in his 2011 paper, many authors, such as Stillman and Novack, have proposed that OSCE is a standard method to assess competencies, clinical skills, advising, and performance, including data interpretation, problem-solving, training, interaction, managing unforeseen patient reactions, critical thinking, and professionalism (1). The OSCE is recognized as a reliable and valid standardized tool for assessing interpersonal communication and clinical skills (2) and has been used to teach and assess medical students in high-stakes examinations like the United States Medical Licensing Examination (USMLE) (3). Although implementing an OSCE requires considerable time and resources, it offers fairly high validity and reliability (4, 5). Critical components such as the number and duration of test stations are therefore important to determine in OSCE design. Harden designed the initial OSCE in 1972 in Dundee, which included eight testing stations and two rest stations. Each station was designed to assess specific competencies, such as clinical procedures, history taking, and physical examination, with 4.5 minutes allocated per station and a 30-second break between stations (6). Various studies have concluded that OSCE design can include different numbers and durations of stations. Rushforth, citing Harden (1975), states that designing an OSCE typically requires 16 - 20 stations, with each station lasting 5 minutes. On the other hand, some nursing OSCE examinations have been designed with 2 to 10 stations, each ranging from 10 to 30 minutes (7).
Studies regarding the number and duration of OSCE stations for medical students, residents, and fellows have considered 6 to 42 stations, with each station lasting 4 to 20 minutes (8-13). For nursing students, at least 20 stations with durations ranging from 4 to 70 minutes have been reported (7, 14). Although an OSCE usually includes several different stations to assess a wide range of competencies, single-station tests have also been reported (15). In Goh et al.'s 2019 study, 104 studies were reviewed, and the number of stations varied from 1 to 20. Tests with 1 to 5 stations were the most frequent (61.5%), 18.3% of studies used 6 to 10 stations, and the lowest frequency was related to tests with 11 to 20 stations (11.5%) (16). Determining an appropriate number and duration of stations is required to judge students' competencies fairly and precisely. The number and duration of stations affect the test's utility, including reliability, validity, and cost (8, 17-19). Different studies have suggested various numbers and durations of stations, and, to the best of our knowledge, no prior review appears to have examined what drives these choices. Thus, the aim of our study is to determine the factors affecting the appropriate number and duration of OSCE stations.

2. Methods

The authors conducted a narrative overview of the international OSCE literature. A narrative overview was selected to achieve the aims of this study because it provides a synthesis of existing knowledge on the factors affecting the number and duration of OSCE stations. A narrative overview can be useful when there are one or more specific questions related to a contemporary topic and a broader view is needed (20, 21).

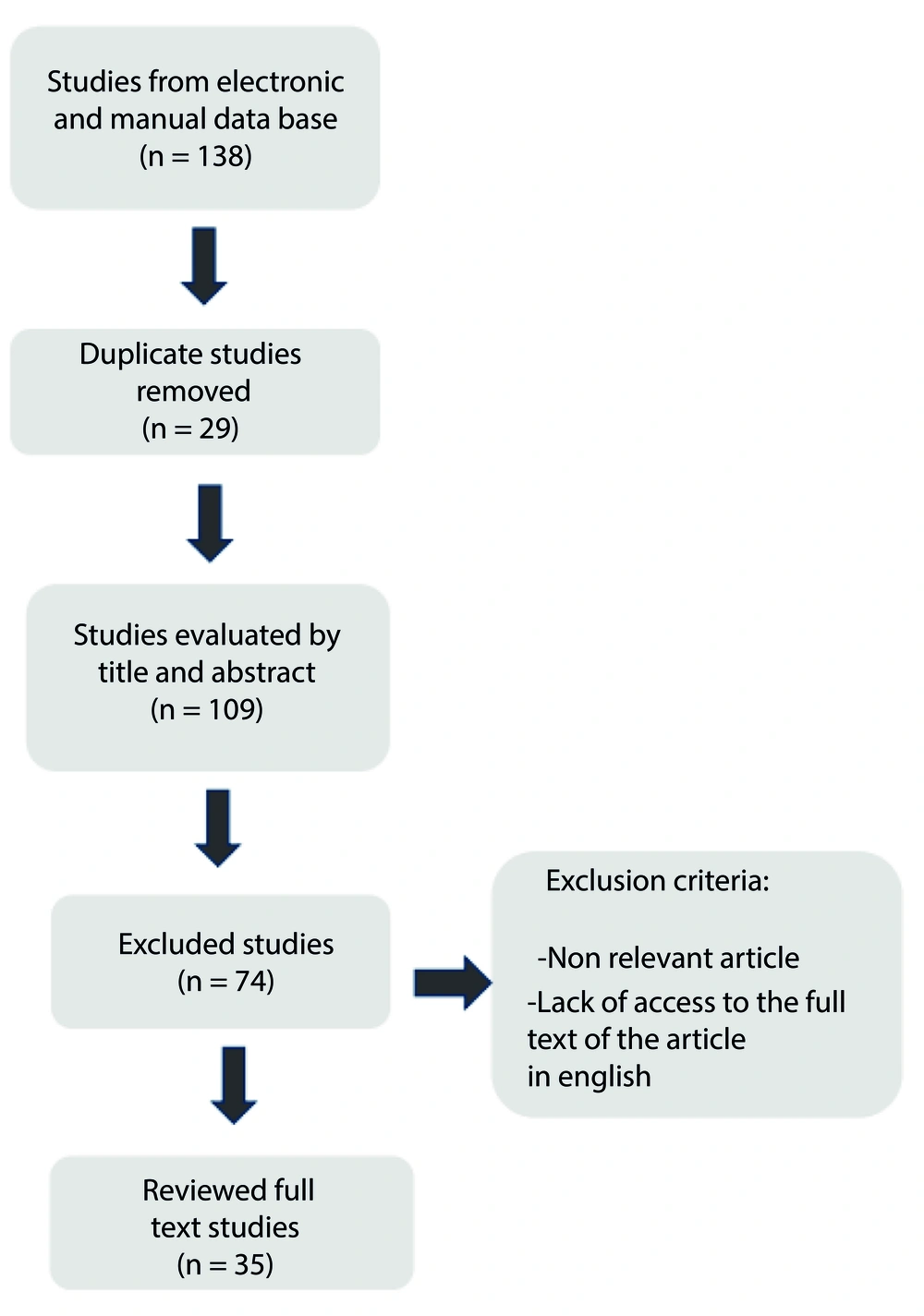

The method described here is simple, feasible, and straightforward. The initial search was conducted through computerized databases including ScienceDirect, ERIC, PubMed, Scopus, Ovid, and Google Scholar. To validate the search outcomes, we first defined specific search criteria: English-language original and review articles published up to 2022, with no earlier date limit, retrieved using the keywords Objective structured clinical examination, OSCE, duration of station, number of stations, utility, validity, reliability, cost, budget, finance, skill, and competency. A total of 138 papers were retrieved for this study.

Papers specifically referring to the quality of an OSCE were included according to the following criteria: Comparison and experience of OSCE in medical sciences related to skills, reliability, validity, and cost evaluation. Applying these inclusion criteria to identify key factors yielded 35 studies. Figure 1 shows the process of identifying and selecting articles for the study.

3. Results

There is a vast amount of literature on the number and duration of OSCE stations. According to Khan et al., Harden (1979) initially designed a series of 18 stations, each lasting 5 minutes (6). The reliability, validity, clinical skills, and cost of an OSCE are influenced by the number and duration of its stations.

3.1. Clinical Skills

In 1975, Harden et al. managed the complexities of the clinical examination by incorporating various aspects of clinical scenarios, such as history taking, physical examination, and patient management problems, through 16 stations, each lasting 5 minutes (22). In 2001, Mavis designed an OSCE that included 11 stations focused on five performance skills: Interpersonal skills, physical examination skills, written records, review of systems, and problem-solving, lasting 3.5 hours to evaluate the confidence of second-year medical students (23). Townsend et al. in 2001 conducted an OSCE to identify learning deficiencies in general practice students, which included 7 stations lasting 8 - 10 minutes each, assessing skills such as history taking, physical examination, communication skills, problem-solving, information gathering, prescription writing, referral, and ethics (24). Nowadays, OSCEs typically include several stations lasting 10 to 15 minutes each to assess medical knowledge, patient care skills, and competencies like professionalism and communication (3). An OSCE was designed to evaluate pediatric students' overall perception at the end of their clerkship. This OSCE included 13 stations, each lasting 7 minutes, except for the history-taking station, which lasted 14 minutes. The skills assessed included history taking, counseling, problem-solving, diagnostic procedures, data interpretation, and photographic material analysis (12). In 2016, Gas et al. developed an OSCE for general surgery residents that included 5 stations, each lasting 15 minutes, focusing on surgical knowledge and technical skills (25). Fields et al. in 2017 designed an OSCE to compare the results of 12 years of experience with previous OSCEs in orthodontic graduates. Their OSCE comprised 13 stations, each lasting 10 minutes, and assessed five skills: Three technical, one diagnostic, and one evaluation/synthesis (26).
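The designs reported in this section can be put on a common scale by multiplying station counts by station durations. The following Python sketch does this for four of the cited OSCEs. It counts station time only and assumes no changeover or rest time, since the cited reports do not consistently state these; the names `StationBlock` and `station_minutes` are illustrative and do not come from the reviewed studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StationBlock:
    """A group of stations that share the same duration (illustrative type)."""
    count: int
    minutes: float


def station_minutes(blocks):
    """Total station time for one candidate, excluding changeovers and rests."""
    return sum(block.count * block.minutes for block in blocks)


# Station counts and durations as reported in the text above.
designs = {
    "Harden et al. 1975 (22)": [StationBlock(16, 5)],
    # 13 stations: 12 at 7 minutes plus one 14-minute history-taking station.
    "Pediatric clerkship OSCE (12)": [StationBlock(12, 7), StationBlock(1, 14)],
    "Gas et al. 2016 (25)": [StationBlock(5, 15)],
    "Fields et al. 2017 (26)": [StationBlock(13, 10)],
}

for name, blocks in designs.items():
    print(f"{name}: {station_minutes(blocks):g} minutes of station time")
```

On this simplified measure, the four designs range from 75 to 130 minutes of station time per candidate, even though their station counts range from 5 to 16.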

3.2. Reliability

Test reliability refers to the stability and accuracy of students’ scores across different raters and repeated administrations (18, 26). Although the duration of a standard OSCE station is 5 - 10 minutes, different total lengths are recommended for a complete OSCE, such as 4 hours to obtain a reliability of ≥ 0.8 and 6 - 8 hours to achieve highly reliable results (26). Brannick et al. and Barman stated that an OSCE with a large number of stations achieves high reliability (8, 18). Brannick et al., in their systematic review, report that the more stations an OSCE has, the more reliable its results: An OSCE with ≤ 10 stations tends to have a reliability of 0.56, while an OSCE with > 10 stations has a reliability of 0.74. Additionally, a reliability of > 0.80 with > 25 stations has been reported in various studies (8). Khan et al. consider that 14 - 18 stations, each lasting 5 - 10 minutes, are required to obtain adequate reliability (27). Various studies consider increasing the number of stations to improve test reliability more than other factors, such as increasing the number of raters per station (27, 28).
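One common way to reason about how reliability grows with test length is the Spearman-Brown prophecy formula from classical test theory. The reviewed studies are not stated to have used it, so the sketch below is an illustration under assumptions rather than their method: it treats all stations as parallel (equally informative), and it takes a hypothetical 10-station baseline with the reliability of 0.56 reported above for OSCEs with ≤ 10 stations. The function names are illustrative.

```python
import math


def spearman_brown(reliability, length_factor):
    """Predicted reliability when test length is multiplied by length_factor.

    Assumes parallel stations, which real OSCE stations rarely are.
    """
    return length_factor * reliability / (1 + (length_factor - 1) * reliability)


def stations_needed(current_stations, current_reliability, target_reliability):
    """Smallest station count predicted to reach the target reliability."""
    factor = (target_reliability * (1 - current_reliability)) / (
        current_reliability * (1 - target_reliability)
    )
    return math.ceil(current_stations * factor)


# Hypothetical baseline: 10 stations at reliability 0.56; target 0.80.
baseline_stations = 10
needed = stations_needed(baseline_stations, 0.56, 0.80)
predicted = spearman_brown(0.56, needed / baseline_stations)
print(needed, round(predicted, 3))
```

Under these assumptions the formula points to roughly 32 stations for a reliability of 0.80, which is broadly in line with the > 25 stations reported above (8). Because stations differ in content and quality, the figure should be read as an order-of-magnitude guide only.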

3.3. Validity

As Messick states in his 1989 paper, validity is a key concept that rests on theoretical principles and documented evidence, which together form the final judgment of the adequacy and appropriateness of test scores. Ark et al. concluded that only a handful of articles document evidence for the validity of the OSCE. The theory of validity has undergone many changes, especially concerning the tools of assessment in medical education. In fact, recent theories of validity have omitted face validity (29). Additionally, evidence of reliability is considered the cornerstone of the overall validation of OSCE (30). Cohen et al. concluded that 17 of 19 stations are required to support construct validity in surgical residents (31). According to Egloff-Juras et al. and the Schoonheim study, at least 12 stations are needed to confirm the validity of the test (32). Downing wrote that supporting or refuting OSCE validity may require sources of evidence such as internal structure, including station analysis data (33). As Khan et al. stated, an OSCE station generally takes 5 to 10 minutes, and both the number of stations and their duration affect validity. Improving test validity in each station requires realistic and suitable time allocations for tasks (27).

3.4. Cost

Brown et al. write that high-stakes OSCEs have higher costs compared to low-stakes OSCEs. These costs fall into the categories of staff, consumable equipment, travel and accommodation, venue, and patients (19). Reznick et al. state that component costs in the four phases of the OSCE process (development, production, administration, and post-examination reporting and analysis) are similar to those in theatre production (34). Brown et al. also note that examiner time is the main cost of an OSCE (19). Reznick et al. conclude that key factors affecting OSCE costs include the number of stations, duration, and the number of standard or simulated patients (34).

In a sequential OSCE, students participate on the first day, and those who achieve a clear pass do not participate on the second day, potentially reducing costs (19). Cookson et al. estimated that transferring an OSCE from eight long cases with 12 stations to a sequential design of four long cases with 6 stations would save approximately one-third of the original cost (35). While the initial implementation of a new OSCE is costly, subsequent administrations and shared scenarios can result in cost savings (35, 36). Additionally, implementing an OSCE with highly trained professional actors incurs higher costs compared to using volunteers and real patients (27).
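The saving from a sequential design can be explored with a back-of-the-envelope calculation. The Python sketch below is an illustration only and is not how Cookson et al. are stated to have derived their estimate. It assumes that cost scales with the number of student-station encounters (ignoring fixed costs such as venue and development), that a 12-station exam is split into a 6-station first stage and a 6-station second stage (one reading of the design described above), and that a hypothetical fraction of students, `recall_fraction`, returns for the second stage.

```python
def sequential_saving(total_stations, first_stage_stations, recall_fraction):
    """Fraction of student-station encounters saved by a sequential design.

    recall_fraction is the (hypothetical) share of students who do not
    achieve a clear pass in the first stage and sit the second stage.
    """
    if not 0 <= recall_fraction <= 1:
        raise ValueError("recall_fraction must be between 0 and 1")
    if not 0 < first_stage_stations <= total_stations:
        raise ValueError("first_stage_stations must be in (0, total_stations]")
    second_stage_stations = total_stations - first_stage_stations
    # Average number of stations sat per student under the sequential design.
    per_student = first_stage_stations + recall_fraction * second_stage_stations
    return 1 - per_student / total_stations


# Explore a few hypothetical recall fractions for a 12-station exam.
for recall in (0.0, 1 / 3, 0.5, 1.0):
    saving = sequential_saving(12, 6, recall)
    print(f"recall fraction {recall:.2f}: saving {saving:.2f}")
```

Under these assumptions, halving the first-stage station count saves half the encounters only if no student is recalled, and a saving of about one-third corresponds to roughly one in three students sitting the second stage. Fixed costs would reduce the saving further.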

4. Discussion

The main goal of our narrative review was to identify the factors that affect the number and duration of OSCE stations. Our results suggest that four elements should be considered in the initial stage of OSCE design: Skills, reliability, validity, and cost. Determining the appropriate number and duration of stations matters because assessment methods must be capable of distinguishing competent medical students from incompetent ones. Barman stated that designing a reliable, valid, objective, and practicable OSCE requires great sensitivity in planning and administration, such as using a test matrix and its learning objective allocations, organizing a sufficient number of stations, training academic staff, and using proper checklists (18). Mavis designed an OSCE to examine the self-efficacy of second-year medical students with 11 stations lasting 3.5 hours, but it lacked reliability and validity (23). Numerous studies have reported various station numbers and durations to assess different skills in OSCE design (3, 12, 23, 24, 26). Pierre et al. designed an OSCE to evaluate child health medical students' perceptions at the end of their clerkship with 13 stations, each lasting 7 minutes, except for the history-taking station, which lasted 14 minutes. Different skills therefore require different station durations in OSCE design (12). Thus, it seems necessary to consider factors other than skills when determining the number of stations and test duration in OSCE development. There is controversy regarding the standard length of an OSCE station.
Some researchers have obtained good reliability (≥ 0.8) with stations lasting 5 - 10 minutes each, while others recommend a 6 - 8 hour OSCE to achieve reliable results (26). Although higher reliability in OSCEs is generally achieved with more stations (18), some studies have found the opposite. Brannick et al. investigated OSCEs and found that tests with fewer than 10 stations may result in reliability greater than 0.80. Conversely, other studies showed that even with more than 25 stations, reliability of less than 0.80 was obtained (8).

Therefore, it appears that factors other than reliability also affect the number and duration of stations in OSCE implementation. To remove poorly performing OSCE stations, Auewarakul et al. analyzed the OSCE to estimate reliability and found that reliability was the basis of construct validity (30). In a 1990 study, Cohen et al. implemented an OSCE for surgical residents to evaluate the construct validity of the test. They found that 17 of 19 stations were required to obtain construct validity, but they also considered three other elements: Reliability, skills, and cost (31). Khan et al. state that OSCE stations usually last 5 to 10 minutes each, but reliability, validity, and task allocation are essential for determining station numbers and duration (27).

Barman defines the types of validity and mentions that for an OSCE to have a high level of validity, it is important to consider the blueprint of subject matter that students are expected to achieve according to learning objectives (18). However, he does not address validity in terms of OSCE station number and duration. Direct and indirect costs are reported in a limited number of studies. OSCE costs vary due to differences in station designs across various OSCEs. In their 2015 paper, Brown et al. note that reported costs are influenced by the time period of the study (19). Additionally, the main costs are affected by inflation over that period.

Consequently, outdated budget estimates are not adequate resources for stakeholders. As Pell et al. state, reducing the number of stations decreases marginal costs, such as clinical resources, the number of simulations, support staff, and assessors, which leads to overall cost reduction (17). According to Reznick et al., large-scale, high-stakes, multisite licensing examinations with more stations incur higher implementation costs (34). Although Cookson et al. claim that halving the number of stations in a sequential OSCE reduces the cost by one-third, the amount of cost reduction cannot be predicted definitively because OSCE costs are influenced by the required skills, the number of assessors, standardized patients, and essential equipment (35).

Additionally, the cost of a station depends on whether real patients or professional standardized patients (SPs) are used, and on whether the OSCE is a new implementation or a repeated one. As mentioned previously, the focus of this study was to consider important factors related to OSCE station numbers and duration. An extensive search of databases such as PubMed, ScienceDirect, Scopus, ERIC, Ovid, and Google Scholar for OSCE studies revealed four key factors.

Papers from various disciplines, including undergraduate and postgraduate medicine (e.g., internal medicine, surgery, child health, and nursing), were reviewed. It was found that factors such as skills, validity, reliability, and cost play significant roles in the initial stage of OSCE design and have a substantial influence on OSCE station numbers and duration. Similar levels of influence were observed in OSCEs across different fields like internal medicine, surgery, child health, and nursing in various universities of medical sciences. Our findings revealed that these four factors simultaneously affect OSCE station numbers and duration.

Differences in which factors appear in different OSCEs are due to slight differences in subject matter from one discipline to another. Across the reviewed studies, we identified four key factors and categorized the studies into four corresponding groups: Skills, reliability, validity, and cost. We selected the studies based on station numbers and duration in OSCE design developed for undergraduate and postgraduate medical sciences to scrutinize key factors. The studies elaborated in our review provide examples of factors that may be useful for developing OSCEs in the future.

Most studies developed OSCEs to assess student competency and measure reliability and validity; an exception is Lind et al. in 1998, which examined the effect of length, timing, and content of the third-year surgery rotation on several clerkship and post-clerkship performance metrics. However, they did not focus on the important factors influencing OSCE station numbers and duration (37). The opinions of OSCE designers offer important perspectives that can contribute to the development of more accurate, reliable, and valid tests. As mentioned in the introduction, no prior review appears to have determined the factors influencing OSCE station numbers and duration.

4.1. Strengths and Limitations

Investigating one of the basic needs in OSCE design, namely determining the appropriate number and duration of stations in the initial stages of test design, is one of the strengths of this study.

We are aware that our review may have two limitations. First, we could not assess all the evidence pertaining to this field. Although some progress has been made using our review, these incremental factors provide only a partial answer to OSCE development. Second, we only included studies in English, which may have limited our findings. These limitations highlight the difficulty of collecting comprehensive data for this review.

4.2. Conclusions

The findings underscore the importance of determining the appropriate number and duration of stations in the early stages of OSCE design. The data indicate that the four variables of skills, validity, reliability, and cost have a considerable impact on OSCE design. This research suggests that investigating these factors can be instrumental in designing effective OSCE tests and help predict the utility of the designed assessments. Ultimately, this study, with its insights, can aid in developing widely used and impactful OSCE tests and pave a clearer path for future OSCE design and evaluation in the field of medical sciences.

4.3. Highlights

Station numbers and duration are two important components that should be considered in the early stages of designing the OSCE structure. Reliability, validity, clinical skills, and budget are crucial factors in determining the number and duration of stations. Having sufficient insight into the factors influencing the utility of the OSCE is essential for exam designers.

4.4. Lay Summary

In objective structured clinical examinations, students' clinical skills are assessed in a simulated environment. The number and duration of stations are two important elements in the design of this test, and the decision about them depends on various factors. The results of this study showed that the validity of the test, the skills considered for evaluation, obtaining consistent results from repeated assessments, and the cost of the test all affect the number and duration of stations in this evaluation method.