1. Background

The assessment of learners is inherent to educational curricula. Proper assessment can have a significant impact on the entire curriculum by guiding teachers and learners toward greater efficacy, thereby contributing to the success or failure of a program. In medical education, the assessment of learners is considered an important means of ensuring accountability to the community (1).

Students are assessed by various approaches in medical education, foremost among them the traditional oral and written exams. One disadvantage of these methods is that they emphasize only memorized knowledge and cannot assess the combination of the theoretical knowledge and clinical skills of students (2). Since the assessment of clinical skills plays a pivotal role in medical education, it should evaluate the competence and practical abilities of medical students reliably, which highlights the need for methods that can link theory to practice.

The clinical competency exam is an assessment method for the clinical competencies of medical students that bridges the gap between theory and practice. Its structure is similar to that of the objective structured clinical examination (OSCE) (2, 3). The OSCE is considered an effective approach to the evaluation of students, as it allows a focus on the practical aspects of training in addition to theoretical learning (4). The OSCE was introduced by Ronald Harden for the assessment of various skills under different circumstances. Key advantages of this exam include performance development and strengthening of the roles of students, technical and clinical assessment, validity and reliability, and prominent psychometric characteristics compared with other exams. Despite these advantages, the OSCE has limitations, such as the lack of a skilled workforce, resources, and facilities, and it is time-consuming (5).

In Iran, the clinical competency exam was held with a structure similar to the OSCE at the end of the internship course, assessing various skills such as communication, history taking, clinical decision-making, physical examination, and diagnostic and therapeutic skills (6, 7). Despite its advantages, the clinical competency exam may face challenges in the quality of its implementation. Therefore, special attention should be paid to the opinions of the stakeholders, including students. The importance of this issue is also reflected in the transformation plan of the educational system, in which one of the transformation and innovation packages in medical education has been dedicated to the upgrading of medical exams (8).

2. Objectives

The present study aimed to investigate the influential factors in the performance of medical students in the clinical competency exam.

3. Methods

This qualitative study was conducted using the directed content analysis approach during 2018-2019. The sample population included 10 medical interns at Isfahan University of Medical Sciences in Isfahan, Iran, who had prior experience of participating in the clinical competency exam. The participants were selected via purposive sampling with maximum variation.

Data were collected via semi-structured, individual interviews conducted either face-to-face or indirectly (via phone). The researcher set the time and place of the interviews with the participants in advance. Before the interviews, the researcher informed the participants that the interviews would be recorded, and written informed consent was obtained. The participants were given the opportunity to state their willingness to participate in the interviews and were allowed to withdraw from the study at any time. Furthermore, the participants were assured of the confidentiality of the interviews.

At the beginning of the interviews, the interviewer introduced herself and explained the objectives of the research to the interviewees. The interview started with a few open questions (“How have your practical exams been conducted so far?”, “Have you ever experienced taking a similar clinical competency exam?”, “Please describe your experience”). At the next stage, the main question of the interview was asked (“What factors or elements do you think may affect the quality of your performance as an examinee in the clinical competency exam?”). Subsequently, based on the responses of the participants, probing questions were asked (“How have these factors affected your performance?”, “Have these factors facilitated or deterred your performance?”, “Given the factors you have mentioned, what strategies do you suggest to improve your performance in the exam and its quality?”). The duration of each interview was 45 - 60 minutes, and the interviews continued until data saturation was reached.

The data analysis process was performed immediately after each interview; the recording was transcribed in detail, and the MAXQDA 10 software was used to facilitate the analysis. Following that, the interview texts were reviewed several times, the semantic units related to the research question were selected, and initial open codes were assigned to these units. Finally, open codes that were similar in concept and meaning were grouped into one subcategory, and similar subcategories were grouped into one main category.
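The grouping step described above (open codes into subcategories, subcategories into main categories) can be pictured as a small nested data structure. The following is a minimal sketch only: the category and subcategory labels are taken from the categories reported in this study, while the open codes are hypothetical examples and the function name `build_hierarchy` is an assumption for illustration. It does not reproduce the MAXQDA workflow used by the researchers.

```python
from collections import defaultdict

# Each tuple: (open code, subcategory, main category).
# Category/subcategory labels come from the study's reported categories;
# the open codes themselves are HYPOTHETICAL illustrations.
coded_units = [
    ("no single reference book", "Accessibility of contents and resources", "Contents and resources"),
    ("source link cannot be opened", "Accessibility of contents and resources", "Contents and resources"),
    ("prefers booklet or film", "Type of resources and contents", "Contents and resources"),
    ("station time too short", "The time of stations", "Time"),
    ("station time too short", "The time of stations", "Time"),  # duplicate code
]


def build_hierarchy(units):
    """Group open codes under subcategories and main categories,
    dropping exact duplicate codes within the same subcategory."""
    hierarchy = defaultdict(lambda: defaultdict(list))
    for open_code, subcategory, category in units:
        codes = hierarchy[category][subcategory]
        if open_code not in codes:
            codes.append(open_code)
    # Convert to plain dicts so the result is easy to inspect or serialize.
    return {cat: dict(subs) for cat, subs in hierarchy.items()}


hierarchy = build_hierarchy(coded_units)
for category, subcategories in hierarchy.items():
    print(category)
    for subcategory, codes in subcategories.items():
        print(f"  {subcategory}: {codes}")
```

In practice, deciding which codes are "similar" is an interpretive judgment made by the analyst; the sketch only shows how the resulting assignments can be stored and de-duplicated.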

3.1. Rigor

Ensuring the accuracy of data plays a pivotal role in qualitative research. In the present study, we used the four criteria of Lincoln and Guba (credibility, dependability, transferability, and confirmability) to verify the accuracy of the data (9).

The researcher used various methods based on these criteria. Member checking was used to confirm the credibility of the data: some level-one codes were extracted, shared with a number of the participants, and approved by them. Furthermore, an experienced expert in qualitative research confirmed the dependability of the data. To achieve confirmability, an observer approved all the stages of the study. To achieve transferability, the researcher attempted to describe the entire process of the study and its stages, from implementation to analysis, in detail.

4. Results

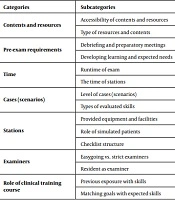

In total, 10 medical interns at Isfahan University of Medical Sciences with prior experience of participating in the clinical competency exam were enrolled in the study, including four men and six women. The mean age of the participants was 26 ± 0.94 years (age range: 25 - 28 years). The analysis of the interview data yielded 206 initial codes. After removing the duplicate codes and merging the similar codes, 87 codes remained and were classified into seven main categories, including content and resources, pre-exam requirements, time, cases (scenarios), stations, examiners, and the role of the clinical training course (Table 1).

| Categories | Subcategories |
|---|---|
| Contents and resources | Accessibility of contents and resources |
| | Type of resources and contents |
| Pre-exam requirements | Debriefing and preparatory meetings |
| | Developing learning and expected needs |
| Time | Runtime of exam |
| | The time of stations |
| Cases (scenarios) | Level of cases (scenarios) |
| | Types of evaluated skills |
| Stations | Provided equipment and facilities |
| | Role of simulated patients |
| | Checklist structure |
| Examiners | Easygoing vs. strict examiners |
| | Resident as examiner |
| Role of clinical training course | Previous exposure with skills |
| | Matching goals with expected skills |

4.1. Content and Resources

Regarding the exam content and resources, the participants referred to factors such as the accessibility of the content and resources and their various types.

“I don’t think this exam has a single reference. There is no one to say that a certain book has been introduced by the Ministry of Health and is practical, useful, and concise” (p2).

The other respondents believed that the type of the resources and contents of the exam could affect their preparation and performance on the test. Accordingly, the contents and resources should be provided to the students in the form of a summary booklet or an educational video if possible.

“They have to give us the reference as a booklet or film. Our university does not have a specific reference, and the source link cannot be opened” (p5).

4.2. Pre-Exam Requirements

The participants noted issues such as the role of debriefing and preparation sessions or a simulated exam before the main exam, as well as the need to define the subjects they must learn and what is expected in the exam, which should be provided in the form of a guideline or booklet.

“We asked the previous students who had the exam experience what the exam was like. If there is a briefing workshop or another meeting, we would not be aware and the information would not be proper” (p9).

“I think they should give us what is expected of us in the exam. For example, they can leave the vital services of each section to the same section, ask questions, and summarize the contents, and provide the information to us” (p1).

4.3. Time

The participants considered the exam time to be an influential factor in their performance. The time allocated to each station also affected their performance. The majority of the participants believed that the clinical competency exam should be continuous and formative, rather than only held at the end of the course.

“One factor that is influential is that the exam is not continuous. It means that by only one exam, you cannot get rid of the wrong training for a few years” (p2).

“One of the factors that greatly affects our performance on the exam is that stations have a very short time for the exam session, like at a vaccination station or at a station that we should put a chest tube” (p4).

4.4. Cases (Scenarios)

According to the participants, the cases designed in the exam could also affect the quality of their performance. They mentioned that the level of the cases and type of the assessed skills were also important in this regard.

“The level of the exam cases should be general, at the level of an outpatient setting and general practitioners. The complicated cases that are not so common to manage should not be included in the questions” (p1).

Some of the participants believed that some exam cases did not assess high-level skills, such as clinical reasoning.

“Decision-making, clinical reasoning, and reaching an accurate diagnosis are lacking in us. These are important matters to keep in mind when designing the exam scenarios” (p7).

4.5. Stations

Regarding the exam stations, the participants referred to the provided equipment inside the stations, such as moulages and their quality, simulated patients and the quality of their role, and the structure of the checklists.

“Some of the moulages are broken; for example, the tube was pierced behind the tongue and I could not perform the intubation” (p10).

From the viewpoint of the participants, the quality of the role-playing of simulated patients strongly influenced their performance and was also a major source of stress during the exam.

“Some simulated patients do not know how to play at all. For example, pediatric patients cannot speak well, and such a simulation with such a patient is bad on an exam. Because when you do not understand what they are saying, it makes you more stressed” (p1).

The participants believed that the items of the checklist did not match the taught materials that were expected in the clinical education course.

“Some tasks are not our responsibility; for example, vaccination is not often our job. I mean, I go to a center where someone else is usually responsible for vaccination. There are things we need to know, but they are always into details” (p3).

In this regard, another participant also stated that the checklist was not standard.

“I saw that they were checking what we were saying, but those check markers were not really standardized. That means it was going into more details” (p8).

Some other participants also mentioned the need to weight and prioritize the items expected on the checklist.

“The checklists need to be more specific. For example, about the patient that I have to visit, I need to be told the important points and items because it is the priority of diagnosis, while some of these points are preferable, not necessary” (p6).

4.6. Examiners

Another issue pointed out by the participants was the role of the examiners. Some participants stated that the behavior of the examiner affected their performance. In their opinion, whether the examiner is strict or easygoing could affect the quality of their performance.

“This exam is very masterful, and the person who manages the exam can be very effective. I think there were one or two stations where they were very difficult. I knew the professors who were very strict and sensitive to education” (p2).

Some participants also mentioned the role of residents as examiners and their scoring manners.

“The presence of residents as examiners at the station is incorrect as we might have had personal and private problems with them during the training course, which will affect their scoring” (p10).

4.7. Role of the Clinical Training Course

The participants mentioned some problems with the clinical training course, which they believed could influence the quality of their performance directly and indirectly. These issues included not meeting the expected skills in the exam during the clinical training course and not practicing the skills sufficiently.

“We cannot do anything other than NG and catheter in the emergency room because there is a contest between the doctor and nurse. Nurses and doctors say we cannot do it, so they hand it to us. Otherwise, whether it was an injection or not, we could not do any of the injections. That is why exposure is not enough at all” (p9).

Many participants stated that the expected goals and skills of the exam did not match the taught materials during the training course.

“The style of the exam is not interesting; as if you did not teach a subject, but you take the exam. Where did you teach me these?” (p3).

5. Discussion

According to the interns who had taken the clinical competency exam, several factors affected their performance in the exam, including contents and resources, pre-exam requirements, time, cases (scenarios), stations, examiners, and the role of the clinical training course.

One of the main factors influencing the performance of the participants in the exam was the exam contents and resources. The results of a study by Pierre et al. (10) regarding the evaluation of the childcare OSCE at the University of Jamaica indicated that the participants regarded the exam as a useful learning experience and believed that the exam contents reflected the true status of childcare. Furthermore, more than half of the residents in the mentioned study were satisfied with the contents, organization, and implementation of the test (10).

The results of a study by Huang et al. (11) also showed that in order to train qualified and capable medical graduates, well-organized training programs should emphasize the expected clinical skills as much as medical knowledge. Holding an effective exam requires certain prerequisites and actions beforehand. These include orienting the students and defining the minimum learning requirements (must-learns). The results of a study by Allen et al. (12) showed that holding a four-month debriefing session before the OSCE allowed students and examiners to better compare their previous knowledge with the exam and its expectations. In addition, the students in the mentioned study expressed their satisfaction with the consistency between the exam and their learning in the debriefing sessions.

In another study, students suggested holding online debriefing sessions about the details of the exam process and its requirements (11). In the present study, the participants considered the exam time to be an influential factor in their performance and believed that the exam should be continuous and formative. The study by Cushing and Westwood (13) also indicated that the OSCE could be used with a formative approach to provide peer feedback. In the mentioned study, medical and nursing students at the graduation stage participated in an OSCE involving three five-minute stations with a case-based and problem-based approach, and simulated patients were also used in the exam. According to the findings, the exam helped the students learn to provide constructive feedback and enhanced their communication skills (13).

In the present study, the participants also considered the cases (scenarios), their level, and the type of skills they assessed to influence their performance. In the opinion of the students, the design of the cases and their level should be revised. In this regard, Bodamer et al. (14) used a practical simulated medical exam to assess the clinical competence of third-year medical students, recruiting internal faculty members and simulation specialists to develop the exam scenarios. Before running the real exam, they piloted these scenarios with several clinical content specialists (14). Another study in this regard was conducted by Aliadarous et al. (15) on the viewpoints of residents regarding the OSCE as a tool for their developmental assessment in education. The results indicated that the cases affected the performance of the students and gave them the opportunity to learn from real-life situations (15).

Stations were another important influential factor in the performance of the students in the present study. In this regard, the participants mentioned the quality of the provided equipment, such as moulages and their defects, and believed that these defects made it impossible to perform well on the exam. The study by Khajavikhan et al. (16) evaluated the viewpoints of medical students regarding tracheal intubation performed on real patients and on mannequins. The students in that study regarded the fear of hurting patients, the teacher’s distrust of the students, and stress and anxiety in the operating room as the most important factors in their failure in the tracheal intubation of real patients (16).

From the viewpoint of the participants in the current research, the presence of simulated patients in the stations and the quality of their role-playing had a great impact on performance. According to the participants, the simulated patients did not play their role professionally and correctly. The research by Khosravi Khorashad et al. (17) on the satisfaction of medical students with the OSCE showed that the students were reluctant to see their teacher as a patient. In their opinion, it might help them guess the patient’s problem and make it difficult to judge and evaluate (17).

The quality of the exam checklist was another influential factor in the performance of the medical students in the present study. The main problem mentioned by the participants in this regard was the imbalance between the considered items and the need to prioritize and weight these items. Since the clinical competency exam has a similar structure to the OSCE, using a checklist is considered to be an advantage as it increases objectivity. However, because the checklist covers a limited set of skills, there were concerns that the students would not be able to demonstrate their learned skills, which threatens validity. This problem is intensified when all items are given the same weight. It could be mitigated by incorporating into the checklist the items that are important and able to differentiate between poor and strong student performance (1, 18).
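The effect of item weighting on a checklist score can be illustrated with a short calculation. The following is a minimal sketch under stated assumptions: the checklist items, their weights, and the function name `checklist_score` are hypothetical and are not taken from the exam evaluated in this study.

```python
def checklist_score(performed, weights=None):
    """Return the fraction of (weighted) checklist points earned, from 0 to 1.

    performed: dict mapping item name -> True/False (item done or not).
    weights:   optional dict mapping item name -> positive weight.
               If omitted, every item counts equally.
    """
    if weights is None:
        weights = {item: 1.0 for item in performed}
    total = sum(weights[item] for item in performed)
    earned = sum(weights[item] for item, done in performed.items() if done)
    return earned / total


# HYPOTHETICAL station: the examinee completes the two minor items
# but misses the two items that matter most for diagnosis.
performed = {
    "introduces self": True,
    "washes hands": True,
    "takes focused history": False,
    "states working diagnosis": False,
}
weights = {
    "introduces self": 1,
    "washes hands": 1,
    "takes focused history": 3,
    "states working diagnosis": 3,
}

print(checklist_score(performed))           # equal weights: 0.5
print(checklist_score(performed, weights))  # priority weights: 0.25
```

With equal weights, the examinee receives half of the available points despite missing the diagnostically important items; with priority weights, the same performance earns a quarter. This is the kind of difference the participants pointed to when asking for prioritized items.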

Another issue mentioned by the students regarding the exam stations was the role of the examiners, whose behavior they considered to influence their performance. The hawk-dove effect applies here: some examiners are easygoing and usually give higher scores to the examinee, while others are strict and assign lower scores. For instance, in the Mini-CEX, which has a structure similar to the OSCE and the clinical competency exam, up to 40% of the variance in exam scores is related to this issue (19, 20).
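To make the idea of score variance attributable to examiner stringency concrete, the following sketch computes the share of total score variance that lies between examiners. It is a simple descriptive decomposition under strong assumptions: the scores are hypothetical, every examiner is assumed to have rated the same performances, and the function name `examiner_variance_share` is introduced here for illustration. It is not the statistical method used in the cited studies.

```python
from statistics import mean, pvariance


def examiner_variance_share(scores_by_examiner):
    """Fraction of total score variance explained by differences
    between examiners' mean scores (between-group / total)."""
    all_scores = [s for scores in scores_by_examiner.values() for s in scores]
    total_variance = pvariance(all_scores)
    if total_variance == 0:
        return 0.0  # no variation at all, so nothing to attribute
    grand_mean = mean(all_scores)
    # Between-examiner variance: squared distance of each examiner's mean
    # from the grand mean, weighted by the number of scores given.
    between = sum(
        len(scores) * (mean(scores) - grand_mean) ** 2
        for scores in scores_by_examiner.values()
    ) / len(all_scores)
    return between / total_variance


# HYPOTHETICAL data: two examiners score the same three performances (out of 20).
scores = {
    "strict examiner (hawk)": [10.0, 12.0, 14.0],
    "easygoing examiner (dove)": [16.0, 18.0, 20.0],
}

share = examiner_variance_share(scores)
print(f"Share of score variance between examiners: {share:.2f}")
```

Because both examiners rate the same performances in this made-up example, the gap between their mean scores reflects stringency rather than examinee ability. In a real exam, separating these sources requires a design in which examinees and examiners are crossed or otherwise balanced.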

Another issue highlighted in the current research was the use of residents as examiners in the exam stations. The participants in our study had varying views in this regard, mentioning both advantages and disadvantages. Khosravi Khorashad et al. (17) also reported both positive and negative aspects of using residents as examiners. From a negative perspective, there is the possibility of conflict and personal disputes between students and residents during the training course, which may lead to scoring bias. In the present study, the students cited the same reasons against using residents as examiners. According to Khosravi Khorashad et al. (17), the presence of residents as examiners could also have a positive aspect: residents could provide feedback to students on specialized and professional aspects more easily than professors.

In the current research, the participants also mentioned factors related to the clinical training course that influenced the quality of their performance in the exam. One of the most important issues cited by the students was the lack of exposure during their training to the skills assessed in the exam stations. The study by Mortazavi and Razmara (21) regarding the satisfaction of medical students with training in wards, emergency and outpatient centers in hospitals, and the community at Isfahan University of Medical Sciences indicated the highest satisfaction level with outpatient education in terms of the teaching methods, performance of the professors, and the number and variety of patients. Considering that hospital wards are mainly specialized, apprentices and interns become familiar only with diseases that are chronic and probably rare in the community; as such, educational planners must focus their efforts on the outpatient departments (21).

In the present study, the students believed that more productive educational opportunities, such as the ambulatory setting, should be given greater value, and that the educational goals of these settings should be used to train students properly.

5.1. Conclusions

Several factors could influence the performance of medical students in the clinical competency exam. The contribution of these factors and their impact on the performance of medical students may vary. According to the results, the most important influential factors were the incompatibility of the expected skills with the goals and learned materials of the students during the training course, lack of organized resources, difficulty in the assessment of the skills and resources, poor quality of the role-playing of simulated patients, and the effect of the examiner on the performance of the examinee. These findings could help officials and policymakers to plan for the future and improve the quality of the exam. The problems of the clinical competency exam should be further evaluated, and the factors that affect the performance of students must be identified in order to address these problems and shortcomings, so that the exam can be held with higher quality. Therefore, it is recommended that authorities and policymakers take corrective measures with regular and organized planning, so that students can perform better in the exam.

5.2. Limitations of the Study

One of the limitations of the present study was that the findings were limited to the perspective of the students and did not include that of the exam organizers, which might have led to the neglect of some aspects of the exam. In addition, this study was only performed on the medical students of Isfahan University of Medical Sciences, and it is suggested that the views of all stakeholders, including test practitioners, policymakers, and professors, be considered in further investigations. It is also recommended that the results of these studies be reported to the university officials, the Ministry of Health and Medical Education, and other related organizations.