1. Background

The clinical environment is a valuable resource that combines theory and clinical practice to prepare students for their professional roles. If nursing is accepted as a practical profession and nurses learn by doing, mastering basic clinical skills should be essential to each student’s educational course (1). Students’ most crucial educational experience is what they learn in the clinical environment. However, nursing education researchers have shown that the quality of clinical education has not been outstanding (2, 3).

Improving the quality of education requires continuous review of the current situation, identification of strengths and weaknesses, and provision of appropriate solutions (4). Evaluation, as an integral part of any educational program, makes it possible to measure the effectiveness of programs and the achievement of goals (5, 6). Educational purposes cannot be achieved without proper evaluation (7). Clinical evaluation assesses the student’s way of dealing with the patient and mastery of basic skills, which are necessary to save the patient’s life and promote community health (8).

Clinical evaluation in nursing education is a critical action with consequences for students, educators, and recipients of nursing care. The result of clinical evaluation directly affects students’ self-esteem. Every educator should know that evaluation and teaching are inseparable, and evaluation is a teaching strategy. Evaluation is not just a process but a product that, if unsuitable, threatens the patients’ health and quality of life (3).

Despite the availability of different clinical evaluation methods, the evidence suggests that students’ assessment is usually limited to subjective information. Students believe that their clinical skills are not assessed precisely (9, 10). One survey showed that most students (73.6%) and professors (75.9%) considered the current clinical evaluation method inappropriate. Furthermore, 96.4% of students stated that they did not receive any feedback on their strengths and weaknesses under the clinical evaluation method (11). Evaluation, however, should be transparent, impartial, standardized, and continuous; it should assess students’ performance against the educational goals and provide the necessary feedback (12).

Therefore, there is a clear need for a method that examines students’ performance more objectively (13). The objective structured clinical examination (OSCE) is one of the objective methods of clinical evaluation (14). In this method, several stations are designed, and different capabilities are tested in each station. Learners go through all the stations and are judged with the same tools and under predetermined standards (15). Another type of evaluation is the Mini-CEX method, a short-term evaluation with several stages within a specific time interval. The assessor observes the student, provides feedback, and determines the student’s performance score using a structured form (16, 17).

Much effort and energy have been devoted to assessing students’ learning in the clinical field (3). However, evaluating whether students have achieved the desired status is still one of the most critical challenges in clinical education (18). No studies have been conducted in Iran to evaluate the effect of the OSCE and Mini-CEX evaluation methods on operating room students’ performance and satisfaction.

2. Objectives

Given this gap, the present study was designed to implement these two methods and to collect operating room students’ opinions and clinical skill scores.

3. Methods

This quasi-experimental study was conducted on second-semester operating room students who had completed their first academic semester. A total of 32 students were selected by the census method and randomly divided into 2 groups of 16. One group was evaluated by the OSCE method in one session at the end of the course. The other group was assessed by the Mini-CEX method in 2 sessions, one during and one at the end of the course. Both groups had the same instructor and the same conditions, such as clinical environment, length of training course, and teaching content. At the end of the evaluation, both groups completed the evaluation satisfaction questionnaire to obtain students’ opinions.

The inclusion criterion was passing all the previous courses. Exclusion criteria were unwillingness to participate in the study, failure to complete the evaluation process, and an incomplete questionnaire. The data collection tools included 8 tools for the OSCE test (5 procedure checklists and 3 question sheets), a comprehensive checklist for evaluation by the Mini-CEX method, and a satisfaction questionnaire. The satisfaction questionnaire covered 10 domains: fairness, consistency with learning objectives, suitability for assessing skills, adequate time, feasibility, skill promotion, objectiveness, stressfulness, interest in the evaluation method, and agreeing to use the method in the later semesters. The questions were set on a 5-point Likert scale from completely disagree to completely agree, and the “stressfulness” domain was reverse-scored. The reliability of the satisfaction questionnaire was assessed by calculating Cronbach’s alpha coefficient, and its validity was confirmed through content validity assessment based on ten experts’ opinions. The satisfaction questionnaire’s alpha coefficient was 0.836.
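The scoring and reliability steps described above can be sketched roughly as follows. This is a minimal illustration, not the study's actual analysis (which was run in SPSS): the response data are hypothetical, the function names `reverse_score` and `cronbach_alpha` are invented for this example, and a 1-to-5 coding of the Likert scale is assumed.

```python
from statistics import variance


def reverse_score(value, low=1, high=5):
    """Reverse a Likert response so that a high raw score becomes a low one."""
    return low + high - value


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    item_columns = list(zip(*rows))
    sum_item_variances = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum_item_variances / total_variance)


# Hypothetical responses: 5 students x 3 domains (last column = "stressfulness").
raw = [
    [4, 5, 2],
    [3, 4, 3],
    [5, 5, 1],
    [2, 3, 4],
    [4, 4, 2],
]
# Reverse only the stressfulness column before computing totals or alpha.
scored = [row[:-1] + [reverse_score(row[-1])] for row in raw]
alpha = cronbach_alpha(scored)
print(f"alpha = {alpha:.3f}")
```

Reverse-scoring before computing alpha matters because a negatively keyed domain would otherwise correlate negatively with the rest and depress the coefficient.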

The study had 2 general phases. The first phase involved tool preparation and instructor training. The second phase entailed method implementation and data collection. A 1-hour instructor training session was conducted after up-to-date information about each method had been extracted. The procedures were selected, and the tools were prepared, after consulting with the instructor and according to the educational course topics. The final version of the tools was ready for use after modifications based on expert opinion.

In the second phase, students from one group completed the OSCE test at 8 stations at the end of the course. There were 5 skill stations (simple interrupted suture, attaching a scalpel blade to a handle, open gowning and gloving, closed gowning and gloving, and gowning and gloving a team member) and 3 question stations (surgical sutures, surgical supplies, and general set instruments). Each station lasted between 10 and 15 minutes, and the student moved from 1 station to the next after the time was announced. Each student’s OSCE score was calculated by adding up the scores received at the 8 stations, and the final score was calculated out of 20.

In the other group, the Mini-CEX test was performed in 2 sessions 1 week apart. The student received feedback after doing the procedure in the first session, and a checklist was used to grade the student in the second session. Open gowning and gloving, closed gowning and gloving, and gowning and gloving a team member were evaluated in the Mini-CEX group. The Mini-CEX checklist included 50 general statements in 5 parts: open gowning, open gloving, closed gowning, closed gloving, and gowning and gloving a team member. Each statement was graded on a 10-point Likert scale. The scores ranged from 0 (unacceptable) to 10 (excellent), with scores of 1 to 3 considered lower than expected, 4 to 6 borderline, and 7 to 9 within the expected range. The maximum score for the Mini-CEX test was 500, which was converted into a final score out of 20.
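The conversion from the 500-point checklist total to the 20-point final score is presumably a simple linear rescaling. The sketch below assumes exactly that; the function name `mini_cex_final_score` and the example ratings are hypothetical and do not come from the study.

```python
def mini_cex_final_score(item_scores, n_items=50, max_item=10, scale=20):
    """Linearly rescale a Mini-CEX checklist total to a final score out of `scale`.

    Assumes 50 statements, each rated 0 to 10, as described in the text.
    """
    if len(item_scores) != n_items:
        raise ValueError(f"expected {n_items} item scores, got {len(item_scores)}")
    if any(not 0 <= s <= max_item for s in item_scores):
        raise ValueError(f"each item score must lie between 0 and {max_item}")
    raw_total = sum(item_scores)          # ranges from 0 to 500
    return raw_total * scale / (n_items * max_item)


# Hypothetical student rated 8 on every one of the 50 statements.
print(mini_cex_final_score([8] * 50))
```

Under this assumption, a raw total of 400 out of 500 corresponds to a final score of 16 out of 20.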

Both sessions were performed in the operating room environment. Students performed the surgical hand scrub before proceeding to the operating room, where they donned sterile gowns and gloves and began the procedure. The procedure was performed for 10 minutes, and feedback was given for about 5 minutes.

The reliability of the applied tools was assessed by calculating Cronbach’s alpha coefficient, and their validity was confirmed through content validity assessment based on ten experts’ opinions. The alpha reliability coefficient was more than 0.9 for half of the tools and more than 0.7 for the rest. The data were analyzed using SPSS statistical software v. 22.0.

4. Results

Of the 32 participants, all of whom were single, 75% were female and 25% were male. An independent t-test was used to determine the homogeneity of the 2 groups in terms of age and grade point average. The chi-square test was used to compare the 2 groups regarding gender and marital status. No significant difference was observed between the 2 groups regarding age, gender, grade point average, or marital status (Table 1).

| Variables | OSCE Group (Mean ± SD) | Mini-CEX Group (Mean ± SD) | P | df | t |
|---|---|---|---|---|---|
| Age | 19.88 ± 0.80 | 19.25 ± 1.00 | 0.61 | 30 | 1.946 |
| Grade point average | 16.97 ± 1.31 | 17.29 ± 1.20 | 0.475 | 30 | 0.724 |

Table 1. Frequency Distribution of Quantitative Demographic Variables and Independent t-Test Results

The Mini-CEX group included younger students with higher grade point averages. Based on the P values, however, these differences between the 2 groups are not statistically significant.

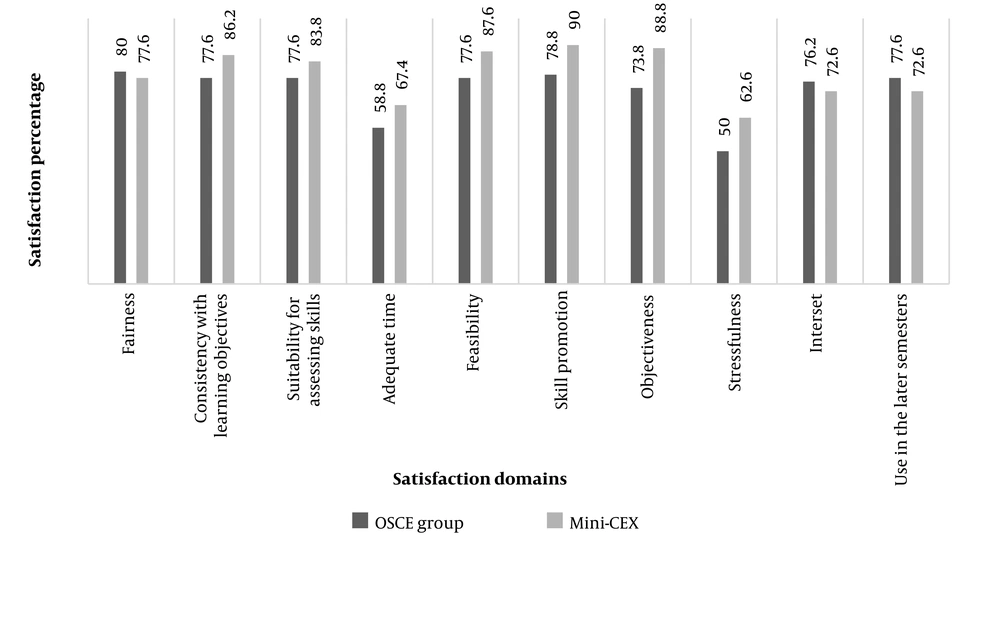

The mean satisfaction score was 78.87 ± 13.30 in the Mini-CEX group and 72.75 ± 12.93 in the OSCE group. Satisfaction with the evaluation method was higher in the Mini-CEX group, but this difference was not statistically significant (P > 0.05). The results of the evaluation satisfaction questionnaire are shown in Figure 1. Average satisfaction in the Mini-CEX group was higher in 7 domains: “consistency with learning objectives,” “suitability for assessing skills,” “adequate time,” “feasibility,” “skill promotion,” “objectiveness,” and “stressfulness.” Satisfaction in 3 domains, “fairness,” “agreeing to use the method in the later semesters,” and “interest in the evaluation method,” was higher in the OSCE group.

The highest satisfaction percentage was obtained in the “fairness” domain for the OSCE group and the “skill promotion” domain for the Mini-CEX group. Overall, the highest level of satisfaction was related to the “skill promotion” domain, with a satisfaction rate of 84.4%. The lowest level of satisfaction, both within each group and overall, was related to “stressfulness.” The difference in satisfaction between the 2 groups was significant only in the domain of “objectiveness” (P < 0.05). The comparison of the final scores in the 2 groups is shown in Table 2. The mean final score was 19.15 ± 1.53 in the Mini-CEX group and 17.11 ± 1.47 in the OSCE group (P < 0.05).

| Group | Possible Range | Minimum and Maximum | Mean of Final Scores | SD | Mean Difference | t | df | P-Value |
|---|---|---|---|---|---|---|---|---|
| OSCE | 0 - 20 | 13.75 - 19.30 | 17.11 | 1.47 | 2.03 | 3.824 | 30 | 0.01 |
| Mini-CEX | 0 - 20 | 14.80 - 20 | 19.15 | 1.53 | | | | |

Table 2. Comparing the Mean and Standard Deviation of the Final Scores in the OSCE and Mini-CEX Groups Using the Independent t-Test

The mean of final scores is higher in the Mini-CEX group, and based on the P-value, this difference is statistically significant.
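For readers who wish to check the reported statistic, the independent t-test can be approximated from the summary values in Table 2. The sketch below assumes the equal-variance (pooled) form of the test and 16 students per group; the function name `pooled_t` is hypothetical, and the P value is not computed here because it requires the t distribution.

```python
from math import sqrt


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t statistic and degrees of freedom from summary statistics.

    Uses the pooled-variance form, which assumes equal population variances.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    std_error = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / std_error, df


# Final scores from Table 2: Mini-CEX versus OSCE, 16 students each.
t_stat, df = pooled_t(19.15, 1.53, 16, 17.11, 1.47, 16)
print(f"t = {t_stat:.2f}, df = {df}")
```

With the rounded means and standard deviations from the table, this yields a t value of roughly 3.8 with 30 degrees of freedom, close to the reported 3.824; the small gap is consistent with rounding of the published summary values.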

5. Discussion

This study evaluated the effects of the OSCE and Mini-CEX on operating room students’ performance and satisfaction. The Mini-CEX group achieved a higher mean final performance score than the OSCE group and reported its highest satisfaction in the “skill promotion” domain. These results indicate that the Mini-CEX evaluation method positively affects the improvement of students’ clinical skills.

Najari F and Najari D evaluated 7 skills of forensic medicine residents using Mini-CEX and DOPS (Direct Observation of Procedural Skills) compared to traditional evaluation. They found that the mean scores in the intervention group were significantly higher than those in the control group and concluded that the Mini-CEX and DOPS methods had a role in promoting learners’ skills, which is consistent with the results of the present study (19).

In a study on nursing students in Indonesia, Amila et al. concluded that nursing students’ clinical performance was improved using the Mini-CEX method. Based on that study, nursing education planners can use the Mini-CEX method to evaluate clinical performance and develop students’ skills (20). Jafarpoor et al. assessed the effect of DOPS and Mini-CEX on nursing students in Iran and found that using these techniques resulted in improved clinical performance scores and higher satisfaction levels among students (21).

Ramula and Arivazagan found that Mini-CEX improved clinical skills, reduced diagnostic errors, and improved patient care overall (22). Shinde et al. found that using Mini-CEX improved skill scores for postgraduate ophthalmology students (23). The present study is consistent with these findings and supports the use of Mini-CEX as a reliable tool to improve the clinical performance of operating room students. Although the evidence is limited, a meta-analysis shows that Mini-CEX positively impacts trainees’ performance (24).

Several key features of the Mini-CEX make it a useful formative approach for improving skills. The Mini-CEX requires at least 2 evaluations, and scores typically improve over time. In addition, trained professionals provide feedback on students’ performance, enabling them to better understand their strengths and areas for improvement. As a workplace-based approach, the Mini-CEX is more than just an evaluation; it allows students to gain practical experience and develop their skills, preparing them for future careers.

The highest satisfaction with the OSCE method was related to the “fairness” of this test, which has been reported in several studies. In the study by Rasoulian et al., both professors and residents considered fairness an important advantage of the OSCE (25). Another study showed that most students considered OSCEs fair, although the OSCE can be anxiety-provoking for students in the early years (26). The lowest satisfaction in both the OSCE and Mini-CEX groups was related to “stressfulness.” In a study comparing the anxiety created by several different evaluation methods, the highest level of anxiety was caused by the OSCE test (27).

In a qualitative study, many trainees found the Mini-CEX test stressful (28). Stress levels in the Mini-CEX group could be attributed to its “workplace-based” nature and the students’ lack of experience in the operating room. The OSCE group’s higher stress levels may be due to procedures involving surgical instruments such as needles and scalpel blades.

The significant difference in the “objectiveness” domain may be related to the Mini-CEX checklists, which were graded on a 10-point Likert scale. As Star has pointed out, tools like checklists reduce the subjectivity of evaluation (3). In addition, the evaluator noted all necessary points to remind the student of them at the end of the evaluation. This gave students the impression that the evaluators were impartial and unbiased in their judgment and intended to provide constructive feedback for skill improvement.

5.1. Conclusions

Both the OSCE and Mini-CEX evaluation methods effectively evaluated clinical procedures and received positive student feedback. However, the Mini-CEX evaluation method had a greater impact on the performance of operating room students. It is recommended to use the Mini-CEX evaluation method while minimizing stressful factors to improve the learning experience of operating room students and to train skilled professionals to provide services in medical centers.

5.2. Limitations

This study had some limitations. Because the training course was short, conducting more than 2 Mini-CEX sessions was impossible. In addition, due to the nature of the research, a pre-test could not be performed. Therefore, an “after-only” design without a control group was used.