1. Background

Clinical education is one of the most significant components of medical students’ education and a principal, vital part of training competent professionals (1). Well-designed clinical education plays an undeniable role in students’ personal and professional development and in the improvement of their clinical skills. Assessment is the basis and an inseparable component of medical education, and efficient assessment methods influence learners’ motivation to learn the content presented to them. To be acceptable, an assessment method has to be valid, reliable, replicable, and practical, and has to have a positive effect on students’ learning (2). Conventional, teacher-dependent assessment methods, especially for clinical skills, are one of learners’ main concerns because they may fail to ensure educational fairness, which can reduce learners’ motivation to learn (3). Choosing poor assessment methods can lead to passive, habitual, and repetitive learning, which is sometimes followed by a rapid loss of knowledge and an inability to apply it in real situations (2). Nowadays, multi-purpose, multi-faceted tests that evaluate dimensions such as knowledge, problem-solving, communication, and teamwork skills are recommended (4).

Direct observation by a clinical specialist or a faculty member is one of the most common methods for assessing students’ ability to deal with patients (5). Observing and assessing learners while they perform practical procedures on patients, and giving them appropriate feedback, helps learners acquire and improve practical skills and supports them through direct supervision of clinical care (6).

Experts have long sought valid and reliable methods for assessing students’ clinical skills effectively (7). Direct observation of procedural skills (DOPS) is a common method for assessing procedural skills. It involves observing a trainee while he or she performs a procedural skill on a patient in a real clinical situation (8). A study carried out at a British medical royal college showed that this method is suitable for assessing clinical procedures (9). In addition, because providing feedback is a basic element of this test, it is considered to play a significant role in clinical education (10).

An important characteristic of this method is the feedback it provides to learners, together with its structured, developmental nature. In this method, each skill is repeatedly assessed by the assessor and analyzed against a checklist, and the deficiencies are reported to the learner. Learners therefore recognize their mistakes at each observation and improve their skills accordingly. The reliability of DOPS in assessing radiology residents has been confirmed, and it is considered an appropriate method in this specialty because it provides feedback to learners and identifies their weaknesses (11).

Bagheri et al. reported DOPS to be objective, valid, and highly reliable in the clinical assessment of paramedical students, and concluded that it can promote students’ clinical skills more effectively, either alone or in combination with other conventional methods of clinical assessment (12).

According to Kundra et al. (2014) and Delfino et al. (2013), DOPS improved students’ scores on the clinical performance test. These studies described DOPS as one of the most effective educational methods, enabling learners to maximize their strengths and minimize their weaknesses (13, 14).

Regarding the educational effect of DOPS, this method not only motivates and encourages learners but also orients them toward learning, because the content and format of the test are directly tied to clinical performance. Given the increasing number of learners in clinical education settings and the lack of a corresponding growth in staff and educational resources, developing efficient assessment methods suited to clinical education in each specialty is of great importance (11). On this basis, the present study aimed to determine the effect of assessing practical skills with the DOPS method on the performance of radiology students in clinical settings.

2. Methods

This trial included 30 second-year (sophomore) radiology students who were taking Hospital Apprenticeship Course 3 at the teaching hospitals of Kermanshah University of Medical Sciences in 2017. Data were collected with a researcher-made checklist consisting of two parts: the first covered the students’ demographic data, and the second contained 18 clinical procedures assessing chest imaging skills and techniques, such as patient instruction, selection of the appropriate X-ray beam angle, and selection of the correct cassette, based on the lesson plan of Hospital Apprenticeship Course 3. The content validity of the checklist was confirmed by revising it according to the corrective comments of 10 expert faculty members. Its reliability was established by the equivalent-forms method, in which two faculty members observed and evaluated at least five students during a radiology procedure using the DOPS method. Agreement between the two trainers’ assessments was then analyzed with the kappa statistic and the intraclass correlation coefficient, which yielded a kappa coefficient of 0.6 and a correlation coefficient of 0.80.
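The inter-rater agreement statistic mentioned above can be illustrated with a short Python sketch of unweighted Cohen’s kappa. It assumes that both trainers scored the same checklist items on the 0/1/2 scale used for each procedure and treats those ratings as nominal categories; the source does not say which kappa variant was computed, and the function name and ratings below are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items on which both raters gave the same rating.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement expected from each rater's marginal rating frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = set(freq_a) | set(freq_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in categories) / (n * n)
    if p_expected == 1:
        return 1.0  # degenerate case: both raters used one identical category throughout
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical 0/1/2 ratings from two trainers on ten checklist items.
trainer_1 = [2, 2, 1, 0, 2, 1, 1, 2, 0, 2]
trainer_2 = [2, 1, 1, 0, 2, 1, 2, 2, 0, 2]
print(round(cohens_kappa(trainer_1, trainer_2), 3))  # 0.677
```

Because the rating scale is ordinal, a weighted kappa would be a reasonable alternative; the unweighted form is shown only because it is the simplest.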

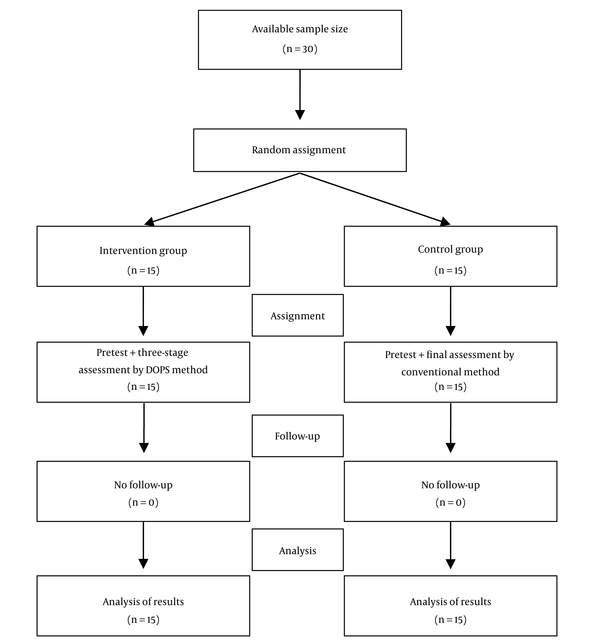

All 30 students who had passed the chest X-ray course were included in the study and were randomly allocated in equal numbers to the control and intervention groups by simple randomization. Before the start of the 10-day apprenticeship course, both groups took a pretest based on the constructed checklist so that the effects of the conventional and DOPS assessment methods on students’ clinical skills could be compared. The control group then completed the apprenticeship course under the supervision of trainers, and their clinical skills were evaluated again with the checklist at the end of the course. In other words, students in the control group were assessed with the DOPS method before and after the apprenticeship course.
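Dividing 30 students equally between two groups at random can be sketched as a shuffle-and-split, one common way to implement simple random allocation into groups of fixed size. The source does not describe how the allocation was generated, so the function name, the student IDs 1 to 30, and the seed below are hypothetical.

```python
import random


def randomize_two_groups(participant_ids, seed=None):
    """Randomly split participants into two equal-sized groups (needs an even count)."""
    ids = list(participant_ids)
    if len(ids) % 2:
        raise ValueError("an even number of participants is needed for equal groups")
    rng = random.Random(seed)  # seeded generator so the allocation can be reproduced
    rng.shuffle(ids)
    half = len(ids) // 2
    return {"intervention": sorted(ids[:half]), "control": sorted(ids[half:])}


# Hypothetical student IDs 1..30 and an arbitrary seed.
allocation = randomize_two_groups(range(1, 31), seed=2017)
print(allocation["intervention"])
print(allocation["control"])
```

Recording the seed makes the allocation auditable afterwards, which matters when group assignment has to be reported.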

In the intervention group, students’ clinical skills were assessed with the DOPS method in three stages during the apprenticeship course through direct observation by trainers during the chest X-ray procedure, and targeted feedback was given to the students after each stage to correct weaknesses in their radiology procedure. The intervals between DOPS observations varied according to each student’s readiness and the time needed to perform the clinical skills (Figure 1).

Each of the 18 radiology procedures was rated as good (complete procedure, 2 points), average (incomplete procedure, 1 point), or poor (procedure not performed, 0 points), so the total score for each observation ranged from 0 to 36. Participation in the study was voluntary, and the study was conducted after the required permissions had been obtained.
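The scoring rule above amounts to summing 2, 1, or 0 points over the 18 checklist items. A minimal Python sketch follows; the names `RATING_POINTS` and `dops_total` and the example ratings are hypothetical.

```python
# Points per item, following the good/average/poor rule described in the text.
RATING_POINTS = {"good": 2, "average": 1, "poor": 0}
N_ITEMS = 18  # number of chest X-ray procedures on the checklist


def dops_total(ratings):
    """Total checklist score for one observation (range 0 to 36)."""
    if len(ratings) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item ratings, got {len(ratings)}")
    return sum(RATING_POINTS[r] for r in ratings)


# Hypothetical observation: 10 complete, 5 incomplete, 3 not performed.
example = ["good"] * 10 + ["average"] * 5 + ["poor"] * 3
print(dops_total(example))  # 25
```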

Data were analyzed with descriptive and inferential statistics in SPSS version 16. Fisher’s exact test and the independent t-test were used to check the homogeneity of demographic variables between the intervention and control groups. The Kolmogorov-Smirnov test was used to assess the normality of quantitative variables and DOPS scores. The independent t-test was used to compare mean DOPS scores between the control and intervention groups, and the paired t-test was used to compare mean DOPS scores before and after the intervention. P < 0.05 was considered significant for all tests.
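The paired t-test described above works on each student’s own before/after difference rather than on two independent samples. The sketch below uses only the Python standard library, with a hypothetical function name and made-up scores; it returns the t statistic and degrees of freedom, while the P value would come from Student’s t distribution (SPSS reports it directly, as does `scipy.stats.ttest_rel`).

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(before, after):
    """Paired t statistic on within-student differences (after - before); returns (t, df)."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length lists with at least two students")
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    # Mean difference divided by its standard error (sample SD / sqrt(n)).
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1


# Hypothetical checklist totals (0 to 36) for five students before and after the course.
pre = [14, 16, 12, 18, 15]
post = [22, 25, 20, 27, 24]
t, df = paired_t(pre, post)
print(f"t({df}) = {t:.2f}")
```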

3. Results

The 30 students (mean age 21.9 ± 1.07 years; 73.3% male) were equally divided between the control and intervention groups. The two groups were homogeneous in terms of demographic variables such as age, gender, grade point average (GPA), and satisfaction with their major (Table 1).
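Gender homogeneity of two groups is a 2 × 2 comparison, which is where Fisher’s exact test applies. The sketch below computes a two-sided Fisher’s exact P value from hypergeometric probabilities, using the common rule of summing all tables with the same margins that are no more probable than the observed one. The source reports only the overall share of males (22 of 30), so the per-group split in the example is hypothetical, as is the function name.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins held fixed.
        return comb(col1, x) * comb(n - col1, row1 - x) / comb(n, row1)

    p_obs = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    probs = [prob(x) for x in range(lo, hi + 1)]
    # Small relative tolerance so floating-point ties with p_obs are counted.
    return min(1.0, sum(p for p in probs if p <= p_obs * (1 + 1e-7)))


# Hypothetical split: intervention 12 male / 3 female, control 10 male / 5 female.
p = fisher_exact_two_sided(12, 3, 10, 5)
print(round(p, 3))  # 0.682
```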

The Shapiro-Wilk test showed that DOPS scores were normally distributed in both the intervention and control groups at all three stages (P > 0.05).

Repeated measures analysis showed a significant change in the intervention group’s mean clinical-skill scores across the three DOPS stages (P = 0.001), whereas the change in the control group was not significant (P = 0.174). Independent t-tests comparing the mean clinical-skill scores of the two groups showed no significant difference at the first (P = 0.125) or second stage (P = 0.762), but a significant difference at the third stage (P = 0.001) (Table 2).
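The source does not state exactly which repeated-measures procedure was run. As an illustration only, the sketch below computes a one-way repeated-measures ANOVA with stage as the within-student factor, splitting the total sum of squares into stage, student, and residual parts. It applies no sphericity correction (such as the Greenhouse-Geisser adjustment SPSS also reports), and the function name and scores are hypothetical.

```python
from statistics import mean


def rm_anova_oneway(scores):
    """One-way repeated-measures ANOVA for the stage effect.

    scores[i][j] is the checklist total of student i at stage j.
    Returns (F, df_stage, df_error); no sphericity correction is applied.
    """
    n, k = len(scores), len(scores[0])
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least 2 students, each with the same >= 2 stages")
    grand = mean(v for row in scores for v in row)
    stage_means = [mean(row[j] for row in scores) for j in range(k)]
    student_means = [mean(row) for row in scores]
    ss_total = sum((v - grand) ** 2 for row in scores for v in row)
    ss_stage = n * sum((m - grand) ** 2 for m in stage_means)
    ss_student = k * sum((m - grand) ** 2 for m in student_means)
    # What remains is the stage-by-student interaction, used as the error term.
    ss_error = ss_total - ss_stage - ss_student
    df_stage, df_error = k - 1, (k - 1) * (n - 1)
    return (ss_stage / df_stage) / (ss_error / df_error), df_stage, df_error


# Hypothetical totals for four students at three DOPS stages.
scores = [[14, 16, 24], [12, 15, 22], [17, 18, 27], [15, 19, 26]]
f_stat, df1, df2 = rm_anova_oneway(scores)
print(f"F({df1}, {df2}) = {f_stat:.1f}")
```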

| Group | First Stage | Second Stage | Third Stage | P Value<sup>a</sup> |
|---|---|---|---|---|
| Intervention | 14.9 ± 4.3 | 17.46 ± 5.71 | 25 ± 5.25 | 0.001 |
| Control | 17.2 ± 3.5 | 16.93 ± 3.6 | 17.2 ± 3.09 | 0.174 |
| P Value<sup>b</sup> | 0.125 | 0.762 | 0.001 | |

Table 2. Comparison of the Mean Scores (Mean ± SD) of Radiology Students’ Clinical Skills Assessment Between the Intervention and Control Groups in All Three Observation Stages by DOPS Method

<sup>a</sup> Repeated measures analysis within each group. <sup>b</sup> Independent t-test between groups.
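Because Table 2 reports group means, SDs, and n = 15 per group, the between-group P values can be roughly re-derived from these summary statistics. The sketch below assumes a pooled-variance (Student’s) independent t-test with 28 degrees of freedom and obtains the two-sided P value by integrating the t density numerically with Simpson’s rule, so it needs no third-party library. The function names are hypothetical, and because the tabulated means and SDs are rounded, the results can differ slightly from the SPSS output.

```python
from math import exp, lgamma, pi, sqrt


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    c = exp(lgamma((df + 1) / 2) - lgamma(df / 2)) / sqrt(df * pi)
    return c * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t, df, steps=2000):
    """Two-sided P value: 1 - 2 * (integral of the t density from 0 to |t|)."""
    h = abs(t) / steps  # steps must be even for Simpson's rule
    area = t_pdf(0.0, df) + t_pdf(abs(t), df)
    area += sum((4 if i % 2 else 2) * t_pdf(i * h, df) for i in range(1, steps))
    return max(0.0, 1 - 2 * area * h / 3)


def t_from_summary(m1, s1, n1, m2, s2, n2):
    """Pooled-variance independent t from group means, SDs and sizes; returns (t, df)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df
    return (m1 - m2) / sqrt(pooled_var * (1 / n1 + 1 / n2)), df


# (intervention mean, SD, control mean, SD) per stage, taken from Table 2.
stages = {
    "first": (14.9, 4.3, 17.2, 3.5),
    "second": (17.46, 5.71, 16.93, 3.6),
    "third": (25, 5.25, 17.2, 3.09),
}
for name, (mi, si, mc, sc) in stages.items():
    t, df = t_from_summary(mi, si, 15, mc, sc, 15)
    print(f"{name} stage: t({df}) = {t:.2f}, P = {two_sided_p(t, df):.3f}")
```

Under these assumptions the first stage gives P of roughly 0.12, the second roughly 0.76, and the third well below 0.001, which is in line with the between-group row of Table 2.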

Bonferroni post hoc comparisons in the intervention group showed no significant difference in mean DOPS scores between the first and second stages (P = 0.786), but significant differences between the first and third stages (P = 0.001) and between the second and third stages (P = 0.001) (Table 3).

| Stages Compared | 1 and 2 | 2 and 3 |
|---|---|---|
| Number | 15 | 15 |
| Mean ± SD | -1.13 ± 0.99 | -3.90 ± 0.951 |
| P Value | 0.786 | 0.001 |

Table 3. Comparison of the Scores of Students’ Clinical Skills Between the Study Groups for Chest X-Ray Procedure After Intervention
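The Bonferroni follow-up comparisons control for testing three stage pairs at once. In its adjusted-P form, each raw P value is multiplied by the number of comparisons and capped at 1, which is how statistical packages typically report Bonferroni-corrected pairwise results. The minimal sketch below uses a hypothetical function name and made-up raw P values that are not taken from this study.

```python
def bonferroni(p_values):
    """Bonferroni-adjusted P values: raw P times the number of comparisons, capped at 1."""
    m = len(p_values)
    return {name: min(1.0, p * m) for name, p in p_values.items()}


# Hypothetical raw P values for the three stage-pair comparisons.
raw = {"1 vs 2": 0.20, "1 vs 3": 0.0004, "2 vs 3": 0.0009}
adjusted = bonferroni(raw)
for pair, p in adjusted.items():
    print(pair, round(p, 4))
```

An adjusted P value below 0.05 is then read as significant, which is equivalent to comparing each raw P value with 0.05 divided by the number of comparisons.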

4. Discussion

The results of this study showed that the chest X-ray skills of radiology students in the intervention group improved significantly under the DOPS assessment method compared with those of the control-group students, who were assessed by the conventional method. This may be due to the structured, timely feedback on students’ performance weaknesses given during clinical procedures in real clinical settings, which is the main characteristic of the DOPS assessment method.

Nooreddini et al. found that the mean score of students assessed by DOPS was significantly higher than that of the control group (15). Profanter et al. reported that rapid feedback from the examiner effectively increased students’ clinical skills, which can promote patient safety and health (16). Shahgheibi et al. also described DOPS as a new, active, multi-faceted assessment method in clinical education that leads to significant changes in learners’ clinical skills compared with conventional assessment methods. Their findings also showed significant differences in mean clinical performance scores across the three stages of DOPS assessment during the apprenticeship course (17).

In the present study, however, mean clinical performance scores under DOPS did not increase significantly from the first to the second stage; the significant gain appeared only between the second and third stages. This may be due to the complexity and diversity of the steps in a standard radiology procedure, which require more practice of the technique, together with appropriate feedback and informed intervention, before students’ performance weaknesses are eliminated.

Moreover, Chen et al. showed that DOPS, which focuses on providing feedback during the student-patient encounter, promotes students’ competence and self-confidence (18). Cobb et al. found that students assessed by DOPS developed a deeper approach to clinical skills and achieved higher scores (19). In a systematic review of 106 articles on methods for assessing clinical skills, Ahmad et al. concluded that no assessment method was completely valid and reliable and that each had its own advantages; they therefore recommended combining assessment methods to examine students’ clinical skills (20).

4.1. Conclusion

The findings of this study showed that direct observation and structured feedback during clinical education with the DOPS assessment method significantly improve the practical and clinical skills of radiology students at clinical centers, and that DOPS can be used as a more effective method than conventional clinical assessment methods.