1. Background

Simulation environments play a critical role in clinical education by improving students' scientific and practical skills and preparing them for real-life situations (1). Virtual simulation involves creating computerized scenarios that mimic the real world, with the added capability of incorporating gaming features (2). Virtual simulation reduces the cost of performing simulation in person and improves learners' performance (3). Clinical virtual simulations include dynamic and immersive environments, ranging from prehospital to community settings, with virtual patients (4). Despite numerous technology-based clinical training programs, few simulations reproduce real-life interactions between a physician and a patient (2). At the College of British Columbia, the Department of Surgery developed the CyberPatient (CP) platform, which is currently being used in clinical education. CP addresses a long-standing need of instructors and students. Student learning focuses on problem-solving and clinical decision-making within the CP interactive learning system. In the system, students can use menu options to review their patients' laboratory results, clinical examinations, diagnoses, and treatments (3).

Simulation-based training enhances learning by facilitated debriefing (4). After conducting realistic simulated scenarios, students can experience the consequences of their actions or interactions (5). However, the most effective method to perform a facilitated debriefing has not been determined yet (6). Debriefing participants receive a unique form of designed feedback to help them reflect on their performance and identify their strengths and weaknesses after completing the simulation exercise (7).

Debriefing is beneficial to gain a better understanding of how to act and react in difficult situations. Without debriefing, participants' mistakes or non-responses may not only go unnoticed, but also become ingrained in their practice (8).

Despite the importance of debriefings, it is often unclear how they should be conducted. A video replay or review of key debriefing scenes can facilitate learning and improve debriefing quality (9). It has been suggested that video review aligns the perceptions of performance with actual performance and thus makes gaps and deficiencies more visible. Accordingly, video analysis can also provide precision when aiming to improve specific behaviors (10). Video-assisted debriefings have been widely accepted; however, limited empirical evidence supports their educational value (11). Recent research on debriefing indicates that further studies are required to determine whether the impact of video playback during debriefing is greater than that of no video playback (6).

Birnbach et al. investigated the effects of video reviews on anesthesia placement and found that video reviews were significantly more effective than a typical anesthesia session. Study participants who underwent videotaping and video review of epidural analgesia in the labor and delivery ward had a greater improvement in overall and selected performance criteria than those who did not (12).

In simulation-based health care education, deliberate practice improves skill acquisition in novice clinicians. Deliberate practice includes setting specific goals, providing detailed feedback on individuals' performance, allowing sufficient time to practice and review skills, and ensuring repeated opportunities to refine them (13).

To the best of the authors' knowledge, no study has compared the effectiveness of oral debriefing and video-assisted debriefing in improving medical students' decision-making skills and attitudes toward professionalism.

2. Objectives

The present study aimed to compare the pedagogical effectiveness of video-assisted debriefing and oral debriefing in simulation-based training. It was hypothesized that, compared with traditional oral debriefing, video-assisted debriefing would improve medical students' decision-making skills and professional attitudes.

3. Methods

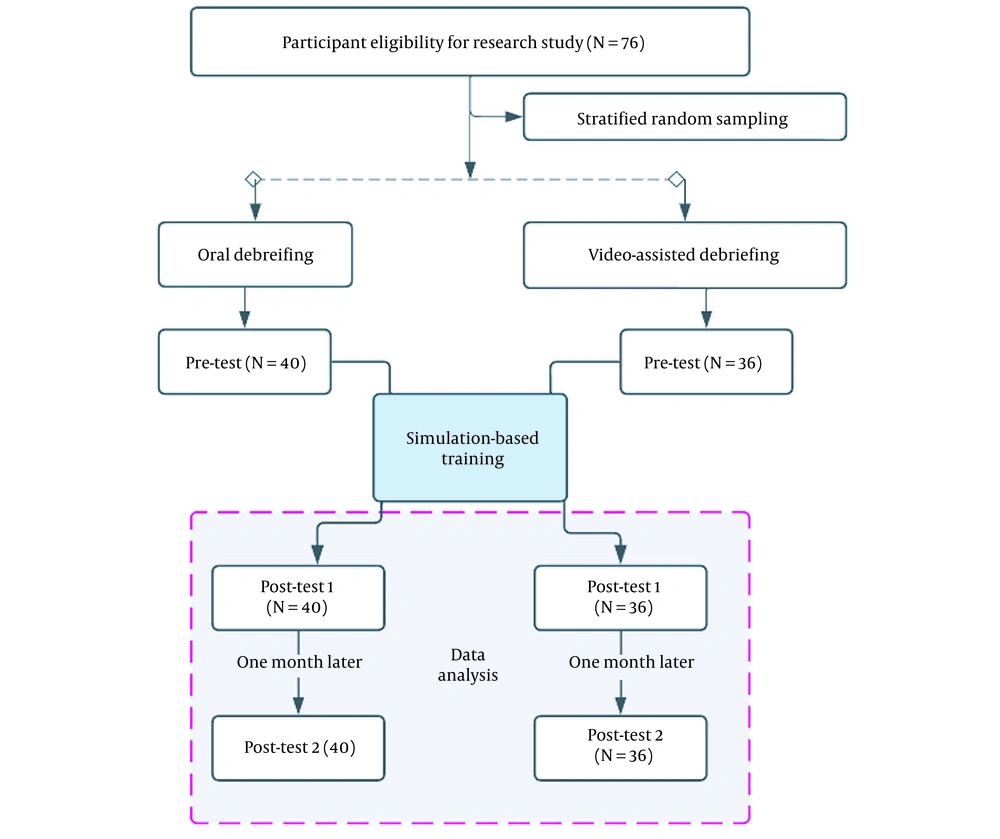

This quasi-experimental study used a pretest-posttest design and was conducted during 2020 - 2021 to compare the effectiveness of oral debriefing and video-assisted debriefing techniques in improving medical students' decision-making skills at the Shahid Beheshti University of Medical Sciences. The study involved 76 medical students who were in the fourth year of their seven-year training program. The participants were selected using a census and were then randomly divided into intervention (video-assisted debriefing, n = 36) and control (oral debriefing, n = 40) groups (Figure 1).
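The text does not describe the randomization procedure, so the following is only a minimal sketch of one way a census of 76 students could be split at random into groups of 36 and 40. The student IDs, the fixed group sizes as an input, and the seed are all assumptions made for illustration, not details from the study.

```python
import random


def allocate(participant_ids, n_intervention, seed=None):
    """Shuffle a copy of the IDs and split it into two groups.

    Returns (intervention_group, control_group). The fixed group size
    is an illustrative assumption, not the study's documented method.
    """
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return shuffled[:n_intervention], shuffled[n_intervention:]


# 76 hypothetical student identifiers
ids = [f"S{i:02d}" for i in range(1, 77)]
video_group, oral_group = allocate(ids, n_intervention=36, seed=2021)
print(len(video_group), len(oral_group))
```

Fixing the seed makes the allocation reproducible, which helps when the assignment list has to be audited later.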

The inclusion criteria were willingness to participate in the research and participation in the pediatric diseases internship at the Mofid Hospital in Tehran. Exclusion criteria were refusal to continue the study, failure to attend an educational session, and failure to complete the research instruments in the second data collection phase.

The research study was a part of a doctoral dissertation and was approved by the Ethics Committee of the Shahid Beheshti University of Medical Sciences (Ethics Code: IR.SBMU.SME.REC.1400.044; URL: https://ethics.research.ac.ir). Informed consent was obtained from all participants, and they were assured that their personal information would be kept confidential.

The required data were collected using a demographic information survey, the Penn State professionalism questionnaire, and the Clinical Decision-making Questionnaire by Lauri and Salantera (2002).

3.1. Clinical Decision-making Questionnaire

The instrument consists of 24 items and addresses four subscales, each of which contains six items corresponding to a step in the decision-making process. The CDM (Clinical Decision-making Questionnaire) uses a five-point Likert scale, with even-numbered items reflecting decisions in unpredictable situations (eg, "When I first meet the patient, I assume there will be problems with care."). The odd-numbered items include statements that reflect situations in which decision-making needs to occur, for example, in structured tasks or when ample time is not available for gathering information (eg, "Based on my preliminary information, I list all the items that I will monitor and ask the patient about."). Each response was scored as never (1), rarely (2), sometimes (3), often (4), or almost always (5), so each item score ranged from one to five and total scores ranged from 24 to 120. The reverse-scored items in this questionnaire are 1, 3, 5, 7, 9, 11, 13, 15, 17, 19, 21, and 23 (one represents always, five represents never). In the scoring system, < 67 represents systematic analytic decisions, the scores of 68 - 78 represent intuitive analytic decisions, and > 78 represents intuitive interpretive clinical decisions (14).
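The scoring rule described above can be illustrated with a short sketch. It assumes that reverse scoring maps a response r to 6 - r, and the function names are hypothetical. The stated cut-offs do not cover a total of exactly 67, so the sketch returns `None` for that value rather than guessing a category.

```python
# Odd-numbered items (1, 3, ..., 23) are reverse-scored per the text.
REVERSE_ITEMS = set(range(1, 24, 2))


def cdm_total(responses):
    """Sum 24 responses (each 1-5; index 0 is item 1) with reverse scoring."""
    if len(responses) != 24 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("expected 24 responses, each between 1 and 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Assumption: a reverse-scored response r contributes 6 - r.
        total += (6 - r) if item in REVERSE_ITEMS else r
    return total


def cdm_category(total):
    """Map a total score to the decision-making style bands given in the text."""
    if total < 67:
        return "systematic analytic"
    if 68 <= total <= 78:
        return "intuitive analytic"
    if total > 78:
        return "intuitive interpretive"
    return None  # a total of 67 is not covered by the stated cut-offs


neutral = [3] * 24  # a respondent answering "sometimes" to every item
print(cdm_total(neutral), cdm_category(cdm_total(neutral)))
```

Under these assumptions, a respondent who answers "sometimes" throughout scores 72 and falls in the intuitive analytic band.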

Javadi reported an internal consistency of 0.75 for the translated questionnaire using Cronbach's alpha (15). Karimi Naghandar et al. reported that the reliability of this instrument was α = 0.85 (16). For this study, the test-retest method was adopted for 20 subjects to evaluate the reliability of the instrument, and a Cronbach's alpha coefficient of 0.86 was obtained.
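For readers unfamiliar with the coefficient, the sketch below computes Cronbach's alpha with the standard formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores), using sample variances. The toy response matrix is invented for illustration and is not study data; the study's own coefficients were produced by the authors' software.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents-by-items matrix of numeric scores."""
    k = len(rows[0])  # number of items
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    # Sample variance of each respondent's total score
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Toy data: three respondents, two items (illustrative only)
toy = [[1, 2], [2, 1], [3, 3]]
print(round(cronbach_alpha(toy), 3))
```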

3.2. Penn State College of Medicine Professionalism Questionnaire

Several versions of the PSQP (Penn State College of Medicine Professionalism Questionnaire) are offered to assess attitudes toward professionalism among medical students, residents, medical faculty members, and faculty members of biomedical sciences. For each question, respondents select one of four response options (never, a little, somewhat, a great deal) on a five-point Likert scale ranging from low to high. The professionalism questionnaire consists of 36 items reflecting six dimensions previously established by the American Board of Internal Medicine: accountability (7 items), altruism (3 items), duty (6 items), excellence (10 items), honesty and integrity (8 items), and respect (2 items). The PSQP asks participants to rate how well statements describing others' conduct match their perceptions of professionalism. The PSQP was developed for medical samples. According to this questionnaire, each statement is assigned a maximum score of 5, and all statements on the integrity component are assigned a maximum score of 30, with a total maximum score of 180 (17) for all professional elements. This is the first instrument assessing medical students' professional attitudes; hence, it provides a review process for curriculum interventions.
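As a quick arithmetic check of the item counts and the total maximum score stated above, the following sketch sums the six dimensions and multiplies by the maximum score per statement. The dictionary name is hypothetical; the numbers are taken directly from the description.

```python
# Item counts per dimension, as listed in the questionnaire description
PSQP_DIMENSIONS = {
    "accountability": 7,
    "altruism": 3,
    "duty": 6,
    "excellence": 10,
    "honesty and integrity": 8,
    "respect": 2,
}
MAX_PER_ITEM = 5  # maximum score assigned to each statement

total_items = sum(PSQP_DIMENSIONS.values())
max_total = total_items * MAX_PER_ITEM
print(total_items, max_total)  # 36 180
```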

Since the original version of this questionnaire was in English, it was translated into Persian and then back-translated according to the scientific procedure to determine the translation quality of the instrument. Regarding the reliability of the instrument, α = 0.98 was obtained for the original version of the questionnaire (17), and α = 0.86 was calculated for the version used in the present study.

3.3. Intervention

Virtual patient scenarios included completing technical and nontechnical skills such as interpreting assessment results, implementing salient interventions, and monitoring improvements in managing routine situations.

First, 12 case studies were selected from the CyberPatient case library corresponding to the clinical course schedule for pediatric diseases during medical student clerkships (Table 1). The educational intervention was conducted in the university’s clinical laboratory for 12 weeks. Under the supervision of a clinical professor, the students participated in a clinical exercise and completed a clinical case each week.

| Name of Virtual Patient | Patient Diagnosis | Clinical Setting |
|---|---|---|
| 1. Jenna Martin | Functional constipation | Outpatient |
| 2. James Rodrigez | Simple febrile seizure | Inpatient |
| 3. Jennifer Lawson | Failure to thrive (FTT) due to cystic fibrosis (CF) | Outpatient |
| 4. Jessica Anderson | Asthma exacerbation | Inpatient |
| 5. John Chan | Umbilical hernia | Outpatient |
| 6. Joseph Redriguez | Attention deficit hyperactivity disorder (ADHD) | Outpatient |
| 7. Kevin Whinery | Intussusception | Inpatient |
| 8. Lawrence Clark | Infantile colic | Outpatient |
| 9. Michael Jefferson | Hypertrophic pyloric stenosis | Inpatient |
| 10. Michael Rose | Nutritional failure to thrive (FTT) | Outpatient |
| 11. Nadia Solanski | Secondary lactase deficiency | Outpatient |
| 12. Richard Mcklain | Epiglottitis | Inpatient |

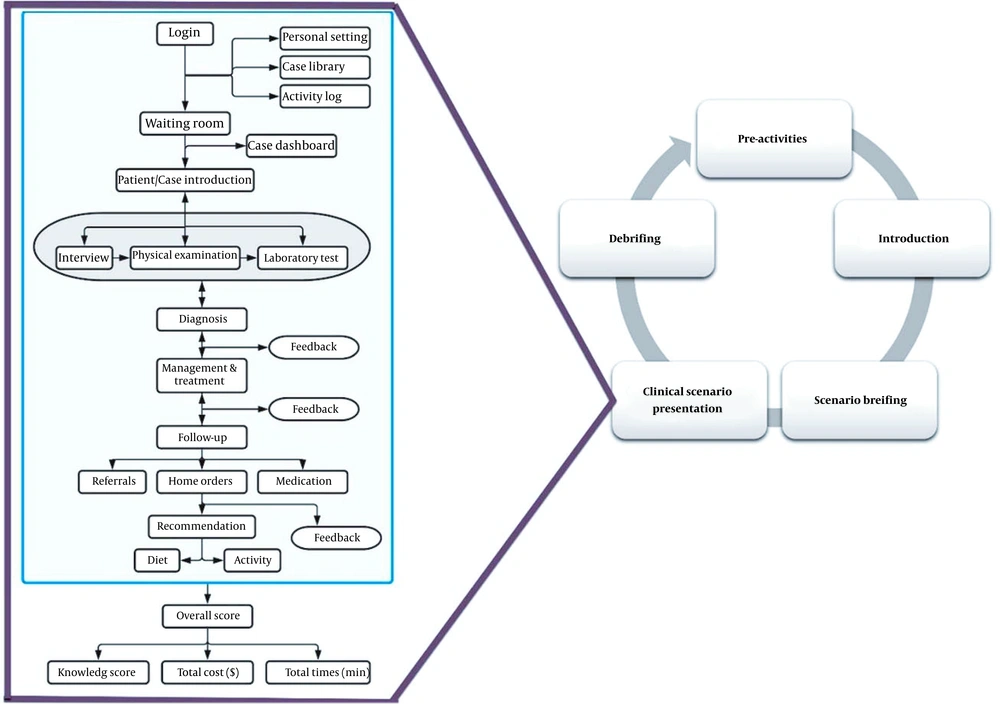

The students received a briefing on how to use the CyberPatient system and what to expect during training. Ten minutes were spent familiarizing the students with the software and completing the demographic information questionnaire and the CDM instrument. Moreover, in the introductory session, a clinical instructor presented the educational objectives to be achieved after working with the virtual patient. A series of questions was also asked to assess the students' prior knowledge of the case. After the clinical professor presented the case, the students began working with the virtual patient. Each session lasted about one hour, starting with the student logging into the simulator (app.cyberpatient.ca) and ending with the system feedback. In this simulated environment, a virtual patient was displayed by selecting the case on the screen. An interactive virtual patient experience was designed with images, videos, and animations. The students selected one part of the virtual patient to be examined and used the mouse to perform inspections, palpations, percussions, and auscultations. During the physical examinations, the software allowed users to listen to the virtual patient's lung sounds and decide whether they were normal or not. Based on the collected data, the students were expected to develop a possible diagnosis and treatment plan for the patient. The software also allowed the students to prescribe medications for the patient. After selecting the appropriate treatment, the students were expected to make suggestions to improve the patient's diet and lifestyle. The software recorded the time spent on each case, the number of errors, and the immediate feedback (Figure 2).

Figure 2. Simulation-based learning cycle for the CyberPatient-based intervention. The clinical scenario was presented on the CP platform, which is shown with its principal components. Users can follow any path from the case dashboard (case encounter) and can also return to the case presentation from the diagnostics, therapy, or follow-up sections.

3.4. Oral Debriefing Protocol

Each debriefing was held in private and included open-ended questions to guide and facilitate discussion. A 90-minute debriefing session was held in the conference room of the Mofid Hospital in Tehran, 24 hours after the students had worked with each virtual patient. In this study, a 3D model was used for debriefing in the two groups, and all debriefings were conducted according to the INACSL standards. During the oral debriefing, in addition to personal reflections and emotional reactions, the participants were asked to describe patient problem lists and situations, the strengths and weaknesses of performance, and their interpretations. During the debriefing sessions, the participants were asked to answer the following semi-structured questions: (1) How was the simulation? (2) Can you summarize the key events in the simulation? (3) What could be done to improve performance? What were its strengths? (4) Where could the performance be improved, and is there anything different to be done?

3.5. Video-Assisted Debriefing Protocol

The two groups engaged in semi-structured, facilitated oral debriefing; however, the participants in the video group had the opportunity to watch selected videos to reflect on their strengths and areas for improvement. Debriefers selected between two and four short clips to highlight approximately two or more strengths and performance gaps.

3.6. Statistical Analysis

SPSS software version 23.0 was used to analyze the data and compare the effectiveness of oral and video-assisted debriefing techniques in improving medical students' decision-making skills. Descriptive statistics such as mean, standard deviation, and frequency distribution were used to describe the participants’ characteristics. Shapiro-Wilk and Kolmogorov-Smirnov tests were used to examine the distributions of the quantitative variables. The medical students' clinical decision-making and attitudes toward professionalism were compared within and between groups using the paired and independent t-tests.
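The analysis itself was run in SPSS. As an illustration of what the paired and independent t-tests named above compute, the following sketch derives the two test statistics and their degrees of freedom from raw scores. It assumes the pooled-variance (Student) form of the independent test, which the text does not specify, and the toy scores are invented rather than study data. P values are omitted because they require the t distribution, which is outside the Python standard library.

```python
from math import sqrt
from statistics import mean, stdev, variance


def paired_t(before, after):
    """Paired t statistic and degrees of freedom for matched scores."""
    if len(before) != len(after):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    # Mean difference divided by its standard error
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


def independent_t(group_a, group_b):
    """Pooled-variance t statistic and degrees of freedom for two groups."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * variance(group_a)
                  + (nb - 1) * variance(group_b)) / (na + nb - 2)
    se = sqrt(pooled_var * (1 / na + 1 / nb))
    return (mean(group_a) - mean(group_b)) / se, na + nb - 2


# Toy scores for illustration only
t_within, df_within = paired_t([1, 2, 3, 4], [2, 4, 5, 4])
t_between, df_between = independent_t([1, 2, 3], [2, 4, 6])
print(round(t_within, 3), df_within, round(t_between, 3), df_between)
```

The paired form is appropriate for the before-and-after comparison within one group, while the independent form compares the two debriefing groups at a single time point.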

4. Results

Seventy-six students participated in the study, and all of them completed the full two-session protocol. The participants were randomly divided into video-assisted debriefing (n = 36) and standard oral debriefing (n = 40) groups. Most participants were single (87.3%), 81.7% of whom were female.

The participants’ mean age was 21 ± 4.5 years. Thirty-two (55.1%) persons lived with their families, while 26 (44.9%) individuals lived in dormitories. Most students (86.2%) mentioned experiencing virtual education and simulations.

There was no significant difference between the groups regarding background characteristics, clinical work experiences, and simulation experiences.

Survey Results for Medical Students' Attitudes Toward Professionalism in Video-Assisted Debriefing:

A paired t-test showed that the doctor-patient relationship skills score increased significantly from before the intervention (64.6 ± 4.9) to after it (91.5 ± 3.6) (P < 0.001). The students' reflective skills scores also increased significantly after the intervention compared to before (P < 0.001), as did their time management scores (P < 0.001). In addition, there was a statistically significant difference between the scores for inter-professional relationship skills after (32.5 ± 4.8) and before the intervention (52.3 ± 3.2) (Table 2).

| Items | Pre-test (Mean ± SD) | Post-test (Mean ± SD) | Paired t-test |
|---|---|---|---|
| Doctor-patient relationship skills | 9.4 ± 4.64 | 6.7 ± 5.91 | t = 13.16; P < 0.001 |
| 1. Listened actively to patients | | | |
| 2. Showed interest in patients as a person | | | |
| 3. Showed respect for patient | | | |
| 4. Recognized and met patient needs | | | |
| 5. Ensured continuity of patient care | | | |
| 6. Maintained appropriate boundaries with patients/colleagues | | | |
| 7. Accepted inconvenience to meet patient needs. | | | |
| 8. Advocated on behalf of a patient and/or family member. | | | |
| Reflective skills | 0.4 ± 3.14 | 7.12 ± 0.48 | t = 55.11; P < 0.001 |
| 9. Admitted errors/omissions | | | |
| 10. Accepted feedback | | | |
| 11. Solicited feedback | | | |
| 12. Maintained composure in a difficult situation | | | |
| Time management | 6.18 ± 4.13 | 7.15 ± 5.23 | t = 71.3; P < 0.001 |
| 13. Was on time | | | |
| 14. Completed tasks in a reliable fashion | | | |
| 15. Was available to patients or colleagues | | | |
| Inter-professional relationship skills | 8.7 ± 4.32 | 2.13 ± 3.52 | t = 03.7; P < 0.001 |
| 16. Maintained appropriate appearance | | | |
| 17. Addressed own gaps in knowledge and skills | | | |
| 18. Demonstrated respect for colleagues | | | |
| 19. Avoided derogatory language | | | |
| 20. Maintained patient confidentiality | | | |
| 21. Demonstrated collegiality | | | |
| 22. Assisted a colleague as needed | | | |
| 23. Used health resources appropriately | | | |
| 24. Respected rules and procedures of the system | | | |

Results of Medical Students’ Clinical Decision-making Skills in Video-Assisted Debriefing:

A paired-sample t-test was used to compare the medical students’ clinical decision-making skills before (48.04 ± 12.77) and after training (76.49 ± 7.66), and a statistically significant difference was noticed (P = 0.09). The clinical decision-making skills were also significantly different (P = 0.001) before and after a one-month follow-up. There was no statistically significant difference between clinical decision-making skills after training and after one month of follow-up (73.06 ± 4.9).

4.1. Survey Results for Medical Students' Attitudes Towards Professionalism in Oral Debriefing

An analysis of paired t-tests revealed an increase in the scores of doctor-patient relationship skills after the intervention (83.5 ± 2.4); the increase was statistically significant in comparison to the scores before the intervention (69.3 ± 4.2) (P < 0.001). Statistically significant changes in the students' reflective skill scores occurred after the intervention (P < 0.001). The increase in the students' time management scores after the intervention was also statistically significant in comparison to the pre-intervention phase (P < 0.001). Moreover, there was a statistically significant difference between the scores of inter-professional relationship skills after (49.3 ± 4.8) and before the intervention (43.1 ± 3.5) (Table 3).

| Items | Pre-test (Mean ± SD) | Post-test (Mean ± SD) | Paired t-test |
|---|---|---|---|
| Doctor-patient relationship skills | 69.3 ± 4.2 | 83.5 ± 2.4 | t = 10.83; P < 0.001 |
| 1. Listened actively to patients | | | |
| 2. Showed interest in patients as a person | | | |
| 3. Showed respect for patient | | | |
| 4. Recognized and met patient needs | | | |
| 5. Ensured continuity of patient care | | | |
| 6. Maintained appropriate boundaries with patients/colleagues | | | |
| 7. Accepted inconvenience to meet patient needs. | | | |
| 8. Advocated on behalf of a patient and/or family member. | | | |
| Reflective skills | 15.9 ± 3.1 | 42.3 ± 8.7 | t = 9.87; P < 0.001 |
| 9. Admitted errors/omissions | | | |
| 10. Accepted feedback | | | |
| 11. Solicited feedback | | | |
| 12. Maintained composure in a difficult situation | | | |
| Time management | 15.6 ± 13.2 | 28.4 ± 11.3 | t = 3.46; P < 0.001 |
| 13. Was on time | | | |
| 14. Completed tasks in a reliable fashion | | | |
| 15. Was available to patients or colleagues | | | |
| Inter-professional relationship skills | 43.1 ± 3.5 | 49.3 ± 4.8 | t = 6.82; P < 0.001 |
| 16. Maintained appropriate appearance | | | |
| 17. Addressed own gaps in knowledge and skills | | | |
| 18. Demonstrated respect for colleagues | | | |
| 19. Avoided derogatory language | | | |
| 20. Maintained patient confidentiality | | | |
| 21. Demonstrated collegiality | | | |
| 22. Assisted a colleague as needed | | | |
| 23. Used health resources appropriately | | | |
| 24. Respected rules and procedures of the system | | | |

4.2. Results of Medical Students’ Clinical Decision-making Skills in Oral Debriefing

A paired-sample t-test was used to compare the medical students’ clinical decision-making skills before (54.05 ± 9.43) and after training (74.25 ± 6.32), and a statistically significant difference was noticed (P = 0.09). Clinical decision-making skills were also significantly different before and after follow-up (P = 0.001). No statistically significant difference was observed between clinical decision-making skills after training and one month later (71.43 ± 3.9) (Table 4).

| Clinical Decision Making, by Group | Before (Mean ± SD) | After (Mean ± SD) |
|---|---|---|
| Video-assisted debriefing | 69.3 ± 4.2 | 83.5 ± 2.4 |
| Oral debriefing | 54.05 ± 9.43 | 74.25 ± 6.32 |
| Independent t-test | t = 1.13; P = 0.26 | t = 7.03; P < 0.001 |

5. Discussion

The present study aimed to determine and compare the effectiveness of video-assisted debriefing and oral debriefing in simulation-based training. According to this quasi-experimental study, video-assisted debriefing is more effective than oral debriefing in improving medical students’ clinical decision-making skills and professional attitude. Previous reviews and research, in contrast, support similar effectiveness of the two types of debriefing (18). To the best of the authors’ knowledge, this is the first study comparing video-assisted and oral debriefing in simulations with the aim of improving clinical decision-making skills and professional attitudes. Video review in simulation-based medical education has been widely used; however, little empirical evidence supports its effectiveness (19).

This study validates the significance of deliberate practice, including repetitive training and debriefings, in promoting practice-based learning and improving clinical decision-making skills among medical students (20).

Similar to our findings, Welke et al. found multimedia instruction to be effective for delivering crisis resource management lessons. According to their results, standardized multimedia instruction utilizing simulation scenarios can effectively improve anesthesia trainees' nontechnical skills. Additionally, trainees retained their nontechnical skills after five months of training (21).

Endacott used standardized patients and mannequins to test nurses' clinical decision-making skills in an object-based simulation exercise. That study revealed that standardized patient simulation methods improved nurses' clinical decision-making skills more effectively than mannequins. Simulation and informal feedback were used to enhance clinical decision-making in emergencies (22). In the present study, the students worked with each virtual patient, and when each case was resolved, we debriefed and concluded the case. We then provided feedback on how the students could improve their performance on that case.

Moreover, the medical students in the present study experienced improved problem-solving abilities and learning processes via VP-based training. This type of training promotes performance for several reasons. Since VPs can provide learners with a realistic, less-threatening environment, learners can practice their skills through trial and error (23, 24). Another benefit of this method is that students can learn at any time, anywhere, and at a pace convenient to them. It also helps students achieve mastery in their problem-solving abilities and skills (25). The present findings also showed a significant retention rate in both groups after one month.

Savoldelli et al. randomly assigned residents to no debriefing, oral debriefing alone, or video-assisted debriefing groups after participating in a series of two intraoperative cardiac arrest simulations. It was found that the oral debriefing and video-assisted debriefing groups improved their crisis management skills significantly. In contrast, those who did not receive debriefings revealed no improvement. There was no significant difference between the oral and video-assisted debriefing groups in terms of improvement scores (26).

Brown examined nursing students’ performance and response times using oral debriefing and video-assisted debriefing techniques during a cardiopulmonary arrest simulation. In the video-assisted debriefing group, response times for cardiopulmonary resuscitation and shock were significantly shorter; however, the students’ performance did not differ between the groups (9). This study expands the literature on video review during simulation-based medical education.

There were some limitations in this study. One limitation was that all participants attended the same institution and were all in the fourth year of their education. Moreover, this study used the 3D debriefing model; however, alternative models may have different effects on the research outcomes. Accordingly, other models should be further investigated. Furthermore, more research is required to understand the limitations of video-assisted debriefing and oral debriefing techniques and to determine how to use them effectively.

Educational curricula must incorporate VP to enhance problem-solving skills. Individual and group learning methods must also be used in this regard. Simulation-based education can be used to develop skill acquisition and promote professional attitudes by reducing psychological stress and improving performance during repeated exposures.

According to the findings, virtual reality training improves medical students’ ability to make clinical decisions in a safe and controlled environment. Moreover, it is a useful technique to enhance their learning. A debriefing should occur alongside VPs if the goal is to enhance the retention of educational topics. In this study, the video-assisted debriefing participants showed higher levels of learning than the oral debriefing participants. Following a virtual simulation, faculty members can consider video-assisted and oral debriefing techniques to support student learning. Furthermore, future research should further our understanding of how to use virtual simulation in debriefing to its maximum potential.